Client Deprecation in Confluent Cloud

The Apache Kafka® 3.7 distribution, released in Q1 2024, included KIP-896, the proposal to remove support for older client protocol API versions in Kafka 4.0. The 3.7 release marked these older client protocol API versions as deprecated, with a notice that they would be dropped completely in 4.0. This page discusses the changes in Confluent client behavior resulting from KIP-896.

Confluent provides one year of extended compatibility for customers’ Confluent Cloud clusters. Starting February 2026, deprecated client requests will no longer be accepted and will result in errors.

Minimal compatible baseline

With the release of Kafka 4.0 in 2025, Confluent sets the new baseline for client protocol API versions to Kafka 2.1.0, which was released in November 2018. As a result, clients connecting to new clusters must use compatible API versions.

The following table lists the minimum compatible versions of some popular client libraries:

| Client | Version | Released |
|---|---|---|
| Java client for Kafka | 2.1.0 | November 2018 |
| Python (confluent-kafka-python) | 1.8.2 | October 2021 |
| .NET (confluent-kafka-dotnet) | 1.8.2 | October 2021 |
| Go (confluent-kafka-go) | 1.8.2 | October 2021 |
| C/C++ (librdkafka) | 1.8.2 | October 2021 |
| KafkaJS | 1.15.0 | November 2020 |
| Sarama | 1.29.1 | June 2021 |
| kafka-python | 2.0.2 | September 2020 |

Any librdkafka-based client not listed in the preceding table requires at least librdkafka version 1.8.2, which was released in October 2021.
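As a rough illustration, the check against the minimum versions in the table above could be sketched as follows. The dictionary keys and the function names are hypothetical labels chosen for this example; only the version numbers come from the table.

```python
# Minimum client versions copied from the table above.
# The keys are illustrative labels, not official identifiers.
MIN_VERSIONS = {
    "java": "2.1.0",
    "confluent-kafka-python": "1.8.2",
    "confluent-kafka-dotnet": "1.8.2",
    "confluent-kafka-go": "1.8.2",
    "librdkafka": "1.8.2",
    "kafkajs": "1.15.0",
    "sarama": "1.29.1",
    "kafka-python": "2.0.2",
}


def parse_version(version):
    """Turn '1.8.2' into (1, 8, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def meets_baseline(client, version):
    """Return True if `version` is at or above the table's minimum for `client`."""
    return parse_version(version) >= parse_version(MIN_VERSIONS[client])


print(meets_baseline("sarama", "1.29.1"))
print(meets_baseline("kafkajs", "1.9.0"))
```

Comparing tuples of integers rather than raw strings matters here: as strings, "1.9.0" sorts after "1.15.0", which would wrongly pass an outdated KafkaJS client. This sketch assumes plain dotted numeric versions and would need extra handling for suffixes such as release-candidate tags.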

List of deprecated Kafka APIs

| API | Deprecated versions |
|---|---|
| Produce | V0-V2 |
| Fetch | V0-V3 |
| ListOffset | V0 |
| Metadata | None |
| OffsetCommit | V0-V1 |
| OffsetFetch | V0 |
| FindCoordinator | None |
| Heartbeat | None |
| LeaveGroup | None |
| SyncGroup | None |
| DescribeGroups | None |
| ListGroups | None |
| SaslHandshake | None |
| ApiVersions | None |
| CreateTopics | V0-V1 |
| DeleteTopics | V0 |
| DeleteRecords | None |
| InitProducerId | None |
| OffsetForLeaderEpoch | V0-V1 |
| AddPartitionsToTxn | None |
| AddOffsetsToTxn | None |
| EndTxn | None |
| WriteTxnMarkers | None |
| TxnOffsetCommit | None |
| DescribeAcls | V0 |
| CreateAcls | V0 |
| DeleteAcls | V0 |
| DescribeConfigs | V0 |
| AlterConfigs | None |
| AlterReplicaLogDirs | V0 |
| DescribeLogDirs | V0 |
| SaslAuthenticate | None |
| CreatePartitions | None |
| CreateDelegationToken | V0 |
| RenewDelegationToken | V0 |
| ExpireDelegationToken | V0 |
| DescribeDelegationToken | V0 |
| DeleteGroups | None |
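Because every deprecated range in the table starts at V0, the table can be reduced to the highest deprecated version per API. The following is a minimal sketch of such a lookup; the names `DEPRECATED_MAX_VERSION` and `is_deprecated` are hypothetical, and APIs marked "None" in the table are simply left out.

```python
# Highest deprecated version per API, taken from the table above.
# APIs listed as "None" in the table are omitted.
DEPRECATED_MAX_VERSION = {
    "Produce": 2,
    "Fetch": 3,
    "ListOffset": 0,
    "OffsetCommit": 1,
    "OffsetFetch": 0,
    "CreateTopics": 1,
    "DeleteTopics": 0,
    "OffsetForLeaderEpoch": 1,
    "DescribeAcls": 0,
    "CreateAcls": 0,
    "DeleteAcls": 0,
    "DescribeConfigs": 0,
    "AlterReplicaLogDirs": 0,
    "DescribeLogDirs": 0,
    "CreateDelegationToken": 0,
    "RenewDelegationToken": 0,
    "ExpireDelegationToken": 0,
    "DescribeDelegationToken": 0,
}


def is_deprecated(api, version):
    """Return True if `version` of `api` falls in the deprecated range."""
    max_deprecated = DEPRECATED_MAX_VERSION.get(api)
    return max_deprecated is not None and version <= max_deprecated


print(is_deprecated("Produce", 2))
print(is_deprecated("Produce", 3))
print(is_deprecated("Metadata", 0))
```

The API names here follow the table's spelling. The sample Metrics API output later on this page reports `ListOffsets` in `metric.type`, so names may need to be normalized before they are looked up.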

How to identify deprecated client requests

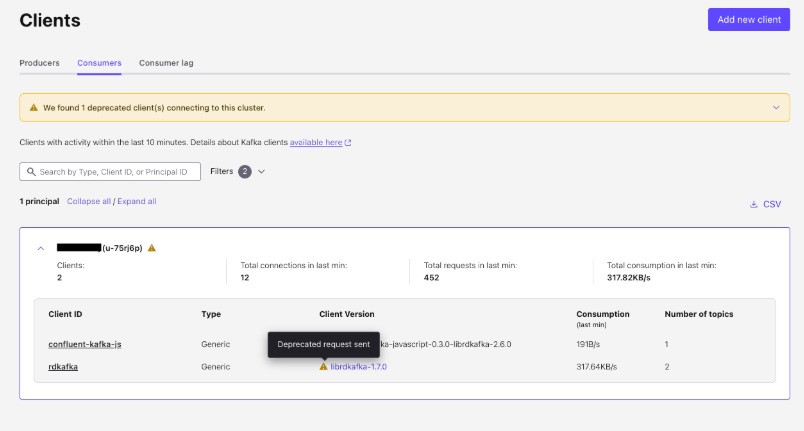

You can monitor deprecated client requests from the Confluent Cloud Console on the Clients overview page. You can filter clients by producers or consumers. You can also use this page to identify clients with deprecated requests. The Client Version column marks deprecated clients and their associated client version with a warning icon. Display a tooltip by hovering the mouse over the marker:

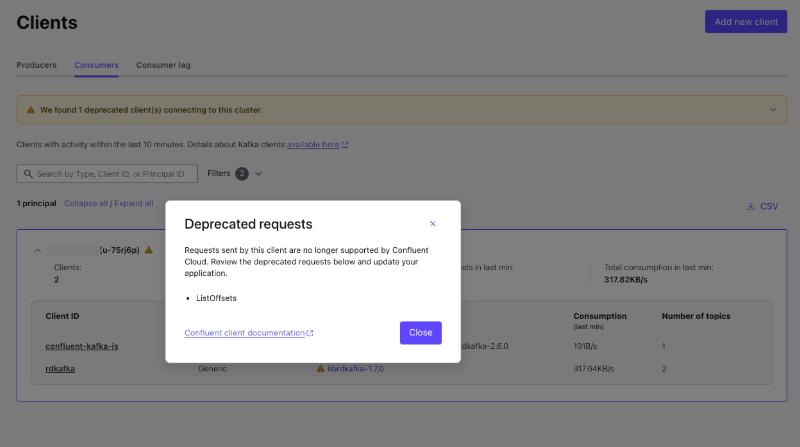

Click the tooltip to get more information.

Users with the MetricsViewer role can query the io.confluent.kafka.server/deprecated_request_count metric in the Confluent Cloud Metrics API to identify clients sending deprecated requests. The query returns the client’s ID, the principal using that client, and the request type the client is using.

Note

Because Confluent Cloud identifies clients by principal and client ID, if your installation has multiple clients using the same principal and client ID, the Confluent Cloud Console may incorrectly report that all of them have deprecated requests. In this scenario, you must manually inspect each client to identify the one requiring an update.

The following example illustrates how to use the Metrics API to query for a deprecated client:

Create a JSON file named deprecated_requests_query.json using the following template:

    {
      "group_by": [
        "metric.client_id",
        "metric.principal_id",
        "metric.type",
        "resource.kafka.id"
      ],
      "aggregations": [
        {
          "metric": "io.confluent.kafka.server/deprecated_request_count",
          "agg": "SUM"
        }
      ],
      "filter": {
        "op": "AND",
        "filters": [
          {
            "field": "resource.kafka.id",
            "op": "EQ",
            "value": "CLUSTER_ID"
          }
        ]
      },
      "granularity": "ALL",
      "intervals": ["TIMESTAMP"]
    }

Change the CLUSTER_ID and TIMESTAMP values to match your environment, for example, lkc-abcd0 for the CLUSTER_ID and 2025-04-11T00:00:00.000Z/PT10M for the TIMESTAMP.

Save the JSON file.

Submit the JSON query as a POST.

In the example below, change <API_KEY> and <SECRET> to match your environment:

    http 'https://api.telemetry.confluent.cloud/v2/metrics/cloud/query' --auth '<API_KEY>:<SECRET>' < deprecated_requests_query.json
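If HTTPie is not available, the same request could be sketched with the Python standard library. This is an illustrative equivalent, not an official client: the function names are hypothetical, and it assumes the endpoint accepts HTTP Basic authentication with the API key and secret, as the `--auth` option in the command above implies.

```python
import base64
import json
import urllib.request

METRICS_URL = "https://api.telemetry.confluent.cloud/v2/metrics/cloud/query"


def build_query(cluster_id, interval):
    """Build the same query body as the JSON template above."""
    return {
        "group_by": [
            "metric.client_id",
            "metric.principal_id",
            "metric.type",
            "resource.kafka.id",
        ],
        "aggregations": [
            {
                "metric": "io.confluent.kafka.server/deprecated_request_count",
                "agg": "SUM",
            }
        ],
        "filter": {
            "op": "AND",
            "filters": [
                {"field": "resource.kafka.id", "op": "EQ", "value": cluster_id}
            ],
        },
        "granularity": "ALL",
        "intervals": [interval],
    }


def build_request(query, api_key, secret):
    """Prepare a POST with Basic auth; nothing is sent until urlopen is called."""
    token = base64.b64encode(f"{api_key}:{secret}".encode()).decode()
    return urllib.request.Request(
        METRICS_URL,
        data=json.dumps(query).encode(),
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_query(query, api_key, secret):
    """Send the query and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(query, api_key, secret)) as resp:
        return json.load(resp)
```

A call might look like `run_query(build_query("lkc-abcd0", "2025-04-11T00:00:00.000Z/PT10M"), "<API_KEY>", "<SECRET>")`, with the placeholders replaced by real credentials.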

Successful output will resemble the following:

    {
      "data": [
        {
          "timestamp": "2025-04-11T00:00:00Z",
          "value": 1026.0,
          "resource.kafka.id": "lkc-xxxxx",
          "metric.principal_id": "u-xxxxx",
          "metric.type": "Produce",
          "metric.client_id": "cloud_client_id_1"
        },
        {
          "timestamp": "2025-04-11T00:00:00Z",
          "value": 6.0,
          "resource.kafka.id": "lkc-xxxxx",
          "metric.principal_id": "u-xxxxx",
          "metric.type": "ListOffsets",
          "metric.client_id": "cloud_client_id_1"
        }
      ]
    }
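To turn a response like this into a per-client summary, one possible approach is to group the rows by principal and client ID. The sketch below uses the sample response above as input; `summarize_by_client` is a hypothetical helper name.

```python
from collections import defaultdict


def summarize_by_client(response):
    """Group deprecated request counts by (principal, client ID) and request type."""
    summary = defaultdict(dict)
    for row in response.get("data", []):
        key = (row["metric.principal_id"], row["metric.client_id"])
        request_type = row["metric.type"]
        summary[key][request_type] = summary[key].get(request_type, 0) + row["value"]
    return dict(summary)


# Sample response copied from the output shown above.
sample = {
    "data": [
        {
            "timestamp": "2025-04-11T00:00:00Z",
            "value": 1026.0,
            "resource.kafka.id": "lkc-xxxxx",
            "metric.principal_id": "u-xxxxx",
            "metric.type": "Produce",
            "metric.client_id": "cloud_client_id_1",
        },
        {
            "timestamp": "2025-04-11T00:00:00Z",
            "value": 6.0,
            "resource.kafka.id": "lkc-xxxxx",
            "metric.principal_id": "u-xxxxx",
            "metric.type": "ListOffsets",
            "metric.client_id": "cloud_client_id_1",
        },
    ]
}

for (principal, client_id), counts in summarize_by_client(sample).items():
    print(principal, client_id, counts)
```

Grouping by both principal and client ID mirrors how Confluent Cloud identifies clients, so the caveat in the note above still applies: several clients sharing the same pair end up in a single entry.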

For more information on how to query the Metrics API, see Confluent Cloud Metrics.

Recommended upgrades based on client ID

Many Kafka clients may not report their official software library or version in their requests. If this is the case, you must inspect a client’s clientId to identify the client type. Some client software embeds recognizable identifiers; other software does not. Further, some client identifiers use custom formats, which makes it hard to determine their version or library.

The following table provides some examples of client IDs, the likely client type given the ID, and the actions you can take to upgrade the corresponding client:

| Client ID resembles | What type of client? | Suggested action to try |
|---|---|---|
| | Possibly a Java producer/consumer using the default | If a Java client, upgrade to the latest Java client version. |
| | Possibly Elastic’s Filebeat product, which uses the Sarama Kafka client under the hood. | Base your approach on the |
| ( | The latest version of | |
| | Sarama, a Go client maintained by IBM. Sarama serves as the underlying client for other tools such as Beats. | Upgrade to at least 1.29.1 and make sure that the Kafka version is set to at least 2.1.1. |
| | This client is either Confluent-maintained or a third-party client that uses | Upgrade to at least version 1.8.2 of the librdkafka library. Ideally, use the latest version. |
| | A popular Python library for Kafka ( | Upgrade to the latest version. |
| | A popular JS library for Kafka ( | No longer maintained. Try a different library such as |
| | Likely the “Kaffe” Kafka Elixir client. | Because this library appears to be inconsistently maintained, consider moving to a more actively maintained option such as the kafka_ex:master branch. |
| | The aiokafka Python client library. | Upgrade to the latest version. |
| | The faust library, which serves as a Python alternative to Kafka Streams. | Upgrade to the latest version. |
| | Possibly the Zendesk “ruby-kafka” client. | Zendesk has officially deprecated this client. Consider another Ruby Kafka client such as karafka/rdkafka-ruby, which is maintained by a third party. |
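A first-pass guess at the client library can be automated by matching the start of the client ID. The sketch below is only a heuristic: the prefixes are assumptions based on commonly seen library default client IDs, not values taken from the table above, and applications that set a custom client ID will not match any of them. The names `ASSUMED_PREFIXES` and `guess_client_library` are hypothetical.

```python
# Assumed client ID prefixes, based on commonly seen library defaults.
# These are NOT taken from the table above; verify them against your own clients.
ASSUMED_PREFIXES = [
    ("kafka-python", "kafka-python"),
    ("aiokafka", "aiokafka"),
    ("kafkajs", "KafkaJS"),
    ("ruby-kafka", "ruby-kafka"),
    ("sarama", "Sarama"),
    ("rdkafka", "librdkafka-based client"),
    ("producer-", "Java producer (default client ID)"),
    ("consumer-", "Java consumer (default client ID)"),
]


def guess_client_library(client_id):
    """Return a likely library name for `client_id`, or None if nothing matches."""
    lowered = client_id.lower()
    for prefix, library in ASSUMED_PREFIXES:
        if lowered.startswith(prefix):
            return library
    return None


for cid in ["rdkafka", "producer-1", "my-custom-app"]:
    print(cid, "->", guess_client_library(cid))
```

A `None` result means the client ID carries no recognizable hint, in which case the client has to be inspected manually as described above.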