Kafka Plugin for JetBrains IDEs for Confluent Cloud

Use the Kafka Plugin for JetBrains IDEs to enhance your development experience while working with Confluent Cloud or other Apache Kafka® clusters.

The Kafka Plugin for JetBrains IDEs enables you to work with Kafka directly from an IntelliJ-based IDE, such as IntelliJ IDEA. The plugin provides a comprehensive set of tools for monitoring and managing Kafka event-streaming processes.

The plugin enables you to:

Connect to Kafka clusters with support for various authentication methods

Produce and consume messages in different formats, like JSON, Avro, and Protobuf

Manage topics and monitor consumer groups

Integrate with schema registries, such as Confluent Schema Registry and AWS Glue Schema Registry

Use SSH tunneling and SSL connections

Integrate with Spring Boot for Kafka connections

The plugin provides a dedicated tool window with an intuitive UI for interacting with Kafka clusters, making it easier to develop and test Kafka-based applications.

Install in IntelliJ IDEA

The following steps show you how to install the Kafka Plugin for JetBrains IDEs in IntelliJ IDEA.

Open IntelliJ IDEA.

In the navigation menu, click Plugins. Alternatively, press ⌘+Option+S (macOS) or CTRL+ALT+S (Windows/Linux) to open the Settings dialog, and select Plugins.

In the search text box, type “kafka”, and select the Kafka plugin by Confluent.

Click Install, and in the Third-Party Plugins dialog, click Accept.

Restart IntelliJ IDEA. Open an existing project or create a new project.

In the tool window bar, click Kafka. Alternatively, open the tool window by selecting View > Tool Windows > Kafka.

The Kafka tool window opens.

Add a new connection to a Kafka cluster

Click + to add a new connection to a Kafka cluster.

In the Kafka Broker section, select Cloud to connect to a Kafka cluster hosted in Confluent Cloud, or Cluster to connect to a self-hosted Kafka cluster.

In the Configuration section, enter the details for your Kafka cluster. For more information, see Client Configuration Settings.

This is an example configuration file for a Confluent Cloud cluster:

    # Required connection configs for Kafka producer, consumer, and admin
    bootstrap.servers={{ BROKER_ENDPOINT }}
    security.protocol=SASL_SSL
    sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username='{{ CLUSTER_API_KEY }}' password='{{ CLUSTER_API_SECRET }}';
    sasl.mechanism=PLAIN

    # Required for correctness in Apache Kafka clients prior to 2.6
    client.dns.lookup=use_all_dns_ips

    # Best practice for higher availability in Apache Kafka clients prior to 3.0
    session.timeout.ms=45000

    # Best practice for Kafka producer to prevent data loss
    acks=all

    # Required connection configs for Confluent Cloud Schema Registry
    schema.registry.url=https://{{ SR_ENDPOINT }}
    basic.auth.credentials.source=USER_INFO
    basic.auth.user.info={{ SR_API_KEY }}:{{ SR_API_SECRET }}

Note

For Confluent Cloud, you can generate a configuration file by creating a new client in the Confluent Cloud Console and then downloading the configuration file.

In the Cloud Console, navigate to your cluster.

In the navigation menu, click Clients and click Add new client.

On the Build a client page, follow the instructions to build a Java client.

Download the zip file and extract the contents.

Copy the contents of the client.properties file into the IntelliJ IDEA Configuration section.

Click Test connection.

When the test result is Connected, click OK.

The Kafka tool window updates to show the topics in the cluster.
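If Test connection fails, two common causes are an incomplete configuration (for example, template placeholders that were never replaced) and a bootstrap endpoint that is blocked from your machine. The following Java sketch is a hypothetical pre-flight check, not the plugin's own Test connection logic: the class name ConnectionPreflight and its required-key list are assumptions based on the example configuration above. It parses client.properties with the standard java.util.Properties parser, reports missing keys or leftover {{ ... }} placeholders, and then opens a plain TCP socket to each bootstrap.servers entry. It performs no TLS or SASL handshake, so it cannot confirm that your API key and secret are valid.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical pre-flight check for a client.properties file. It is NOT the plugin's
// Test connection logic: it only inspects the file and opens plain TCP sockets.
class ConnectionPreflight {

    // Keys set by the example Confluent Cloud configuration for a SASL_SSL connection.
    static final String[] REQUIRED_KEYS = {
        "bootstrap.servers", "security.protocol", "sasl.mechanism", "sasl.jaas.config"
    };

    // Parses text in the standard java.util.Properties format ('#' comments, key=value).
    static Properties parse(String text) {
        Properties props = new Properties();
        try (Reader reader = new StringReader(text)) {
            props.load(reader);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    // Lists missing or empty required keys and values that still contain "{{" placeholders.
    static List<String> problems(Properties props) {
        List<String> issues = new ArrayList<>();
        for (String key : REQUIRED_KEYS) {
            String value = props.getProperty(key);
            if (value == null || value.trim().isEmpty()) {
                issues.add("missing " + key);
            }
        }
        for (String key : props.stringPropertyNames()) {
            if (props.getProperty(key).contains("{{")) {
                issues.add("placeholder not replaced in " + key);
            }
        }
        return issues;
    }

    // Splits "host1:port1,host2:port2" into unresolved socket addresses.
    static List<InetSocketAddress> bootstrapAddresses(String servers) {
        List<InetSocketAddress> addresses = new ArrayList<>();
        for (String entry : servers.split(",")) {
            String trimmed = entry.trim();
            int colon = trimmed.lastIndexOf(':');
            if (colon <= 0 || colon == trimmed.length() - 1) {
                throw new IllegalArgumentException("expected host:port, got '" + trimmed + "'");
            }
            int port = Integer.parseInt(trimmed.substring(colon + 1));
            addresses.add(InetSocketAddress.createUnresolved(trimmed.substring(0, colon), port));
        }
        return addresses;
    }

    // True if a plain TCP connection succeeds within the timeout. No TLS or SASL is
    // attempted, so this cannot tell whether the credentials are valid.
    static boolean reachable(InetSocketAddress address, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(address.getHostString(), address.getPort()), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) throws IOException {
        // Defaults to the file name from the downloaded zip; pass another path as needed.
        String path = args.length > 0 ? args[0] : "client.properties";
        Properties props = parse(new String(Files.readAllBytes(Paths.get(path)), StandardCharsets.UTF_8));
        List<String> issues = problems(props);
        if (!issues.isEmpty()) {
            issues.forEach(System.out::println);
            return;
        }
        for (InetSocketAddress address : bootstrapAddresses(props.getProperty("bootstrap.servers"))) {
            System.out.println(address.getHostString() + ":" + address.getPort()
                + (reachable(address, 3000) ? " accepts TCP connections" : " is NOT reachable"));
        }
    }
}
```

Running it against the unedited template reports every {{ ... }} value, which is a quick way to spot a configuration that was copied before the real endpoints and keys were filled in.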

Create a new topic

The Kafka Plugin for JetBrains IDEs enables you to create Kafka topics directly within the IDE.

Above the Topics list, click + to create a new topic.

The Create Topic dialog opens.

In the Topic name text box, enter “input_topic”.

For the Partition count and Replication factor settings, accept the default values.

Click OK.

The new topic appears in the Topics list.

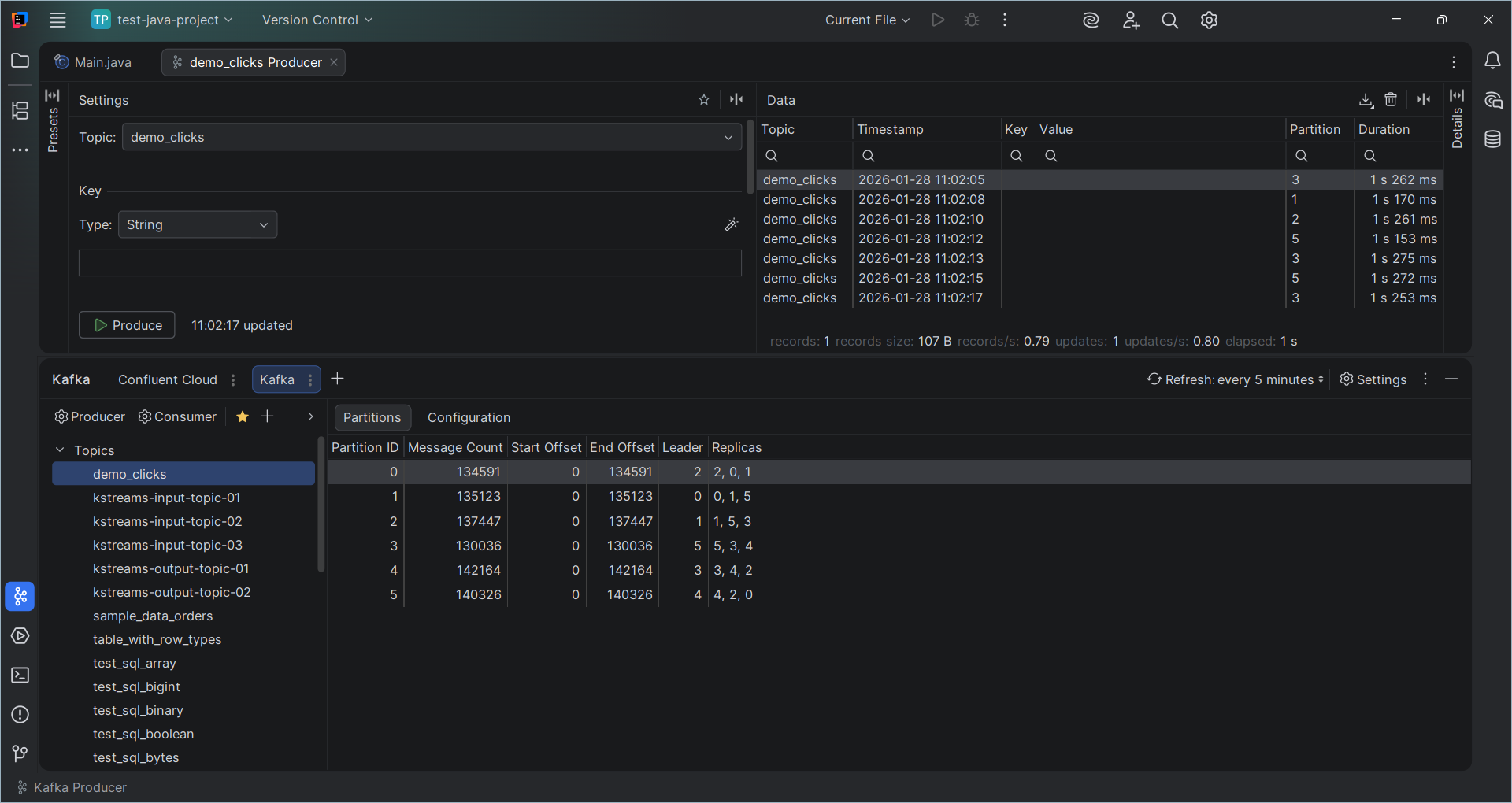
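Kafka rejects some topic names at creation time. As a sketch, assuming the rules enforced by Apache Kafka brokers (the name is non-empty, is not "." or "..", has at most 249 characters, and uses only ASCII letters, digits, ".", "_" and "-"), the hypothetical TopicNames class below checks a name before you enter it in the Create Topic dialog. It also models the period/underscore collision that Kafka warns about: because of metric-name limitations, names such as input_topic and input.topic are treated as equivalent.

```java
import java.util.regex.Pattern;

// Hypothetical helper mirroring Apache Kafka's topic-name rules.
class TopicNames {
    static final int MAX_LENGTH = 249;
    private static final Pattern LEGAL_CHARS = Pattern.compile("[a-zA-Z0-9._-]+");

    // Returns null if the name is acceptable, otherwise the reason it would be rejected.
    static String validate(String name) {
        if (name == null || name.isEmpty()) return "name is empty";
        if (name.equals(".") || name.equals("..")) return "'.' and '..' are not allowed";
        if (name.length() > MAX_LENGTH) return "name is longer than " + MAX_LENGTH + " characters";
        if (!LEGAL_CHARS.matcher(name).matches()) {
            return "only ASCII letters, digits, '.', '_' and '-' are allowed";
        }
        return null;
    }

    // Two names collide in metric names when they differ only by '.' versus '_'.
    static boolean collides(String a, String b) {
        return a.replace('.', '_').equals(b.replace('.', '_'));
    }

    public static void main(String[] args) {
        String name = args.length > 0 ? args[0] : "input_topic";
        String reason = validate(name);
        System.out.println(reason == null ? name + " is a valid topic name" : name + ": " + reason);
    }
}
```

A common convention is to pick either periods or underscores for separators in a cluster and use it consistently, which avoids the collision case entirely.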

Produce to a topic

The Kafka Plugin for JetBrains IDEs enables you to produce messages to Kafka topics directly within the IDE. In this step, you produce a message to the topic you created in the previous step.

Above the Topics list, click Producer to configure and launch a producer.

A new tab opens with a corresponding Settings section.

In the Settings section, click the Topic dropdown.

Select input_topic.

In the Value section, paste the following example message into the text box. Ensure that the Type dropdown is set to JSON.

    {
      "id": "123e4567-e89b-12d3-a456-426614174000",
      "timestamp": 1638360000000,
      "customer": {
        "name": "John Smith",
        "email": "john.smith@example.com",
        "address": "123 Main St, Suite 456, Anytown, ST 12345",
        "phone": "(555) 123-4567"
      },
      "order": {
        "orderId": "AB123456",
        "product": "Ergonomic Steel Keyboard",
        "price": "199.99",
        "department": "Electronics",
        "quantity": 2
      }
    }

In the Flow section, select Generate random keys.

Click Produce.

The Data section updates to show the message you produced.

In the Data section, double-click input_topic.

The Details panel opens and shows the message’s Key and Value. Scroll down to see the message’s headers and metadata.
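The key shown in the Details panel also determines which partition the message lands in. In the Apache Kafka Java producer, a record with a key is assigned a partition by hashing the serialized key with murmur2, clearing the sign bit, and taking the result modulo the partition count, so random keys spread messages across partitions. The sketch below is intended as a port of that calculation for String keys encoded as UTF-8. It assumes that the plugin's producer uses the Java client's default partitioner and that Generate random keys produces UUID-like strings; this page confirms neither, and the class name KeyPartitioning and the partition count of 6 are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.UUID;

// Sketch of key-based partition selection as done by the Kafka Java producer's
// default partitioner: murmur2(serialized key), made non-negative, modulo partitions.
class KeyPartitioning {

    // Port of the 32-bit murmur2 hash used by Kafka's Java client utilities.
    static int murmur2(byte[] data) {
        int length = data.length;
        int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        int tail = length & ~3;
        switch (length % 4) {
            case 3: h ^= (data[tail + 2] & 0xff) << 16; // fall through
            case 2: h ^= (data[tail + 1] & 0xff) << 8;  // fall through
            case 1:
                h ^= data[tail] & 0xff;
                h *= m;
                break;
            default:
                break;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    // Partition for a String key serialized as UTF-8 bytes.
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        return (murmur2(keyBytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int partitions = 6; // hypothetical partition count
        for (int i = 0; i < 5; i++) {
            String key = UUID.randomUUID().toString(); // assumed shape of a random key
            System.out.println(key + " -> partition " + partitionFor(key, partitions));
        }
    }
}
```

The same key always maps to the same partition as long as the partition count does not change, which is what gives Kafka per-key ordering; random keys trade that ordering for an even spread.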

Consume from a topic

The Kafka Plugin for JetBrains IDEs enables you to consume messages from Kafka topics directly within the IDE. In this step, you consume a message from “input_topic”.

Above the Topics list, click Consumer to configure and launch a consumer.

A new tab opens with a corresponding Settings section.

In the Settings section, click the Topic dropdown.

Select input_topic.

Click Start Consuming.

The input_topic Consumer starts consuming messages from the topic.

Click the input_topic Producer tab, and click Produce.

Return to the input_topic Consumer tab. The message produced in the previous step is displayed in the Data section.

Click Stop Consuming.
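Consuming is closely related to the consumer-group monitoring listed among the plugin's features. This page does not say whether the Consumer tab joins a consumer group or commits offsets, but for any group that does, the conventional health metric is lag: for each partition, the log-end offset minus the group's committed offset. The hypothetical ConsumerLag class below computes that from two maps of offsets obtained elsewhere, for example from a monitoring view or an admin client. Partitions with no committed offset are skipped because their lag is undefined.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: consumer-group lag from log-end and committed offsets.
class ConsumerLag {

    // Lag per partition = log-end offset - committed offset, clamped at 0.
    // Partitions without a committed offset are skipped: their lag is undefined.
    static Map<Integer, Long> lagPerPartition(Map<Integer, Long> logEndOffsets,
                                              Map<Integer, Long> committedOffsets) {
        Map<Integer, Long> lag = new TreeMap<>();
        for (Map.Entry<Integer, Long> entry : logEndOffsets.entrySet()) {
            Long committed = committedOffsets.get(entry.getKey());
            if (committed == null) {
                continue;
            }
            lag.put(entry.getKey(), Math.max(0L, entry.getValue() - committed));
        }
        return lag;
    }

    // Sum of per-partition lag for the whole topic.
    static long totalLag(Map<Integer, Long> lagPerPartition) {
        long total = 0L;
        for (long value : lagPerPartition.values()) {
            total += value;
        }
        return total;
    }

    public static void main(String[] args) {
        // Hypothetical offsets for a three-partition topic.
        Map<Integer, Long> logEnd = new TreeMap<>();
        logEnd.put(0, 120L);
        logEnd.put(1, 80L);
        logEnd.put(2, 50L);
        Map<Integer, Long> committed = new TreeMap<>();
        committed.put(0, 100L);
        committed.put(1, 80L);
        Map<Integer, Long> lag = lagPerPartition(logEnd, committed);
        System.out.println(lag + " total=" + totalLag(lag)); // prints {0=20, 1=0} total=20
    }
}
```

A lag that keeps growing on one partition while the others stay near zero usually points to a slow or stuck consumer for that partition rather than to the producer.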