Manage Kafka Cluster Configuration Settings in Confluent Cloud

This topic describes the default Apache Kafka® cluster configuration settings in Confluent Cloud. For a complete description of all Kafka configurations, see Confluent Platform Configuration Reference.

Considerations:

You cannot edit cluster settings on Basic, Standard, Enterprise, or Freight clusters in Confluent Cloud, but many configuration settings are available at the topic level instead. For more information, see Manage Topics in Confluent Cloud.

You can change some configuration settings on Dedicated clusters using the Confluent CLI or REST API. See Change cluster settings for Dedicated clusters.

The default maximum timeout for registered consumers is different for Confluent Cloud Kafka clusters than for Confluent Platform clusters and cannot be changed.

group.max.session.timeout.ms default is 1200000 ms (20 minutes)

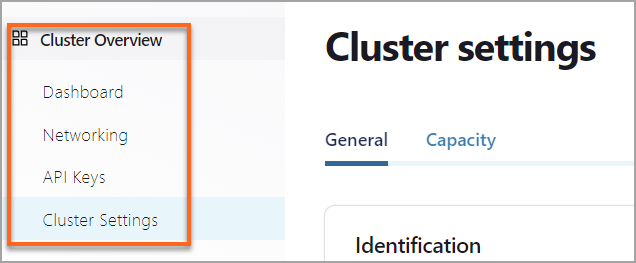

Access cluster settings in the Confluent Cloud Console

You can access settings for your clusters with the Cloud Console.

To do so:

Sign in to your Confluent account.

Select an environment and choose a cluster.

In the navigation menu, select Cluster Overview > Cluster settings. The Cluster settings page displays.

Note

You may also see Networking and Security tabs depending on the type of cluster and how it is configured.

Change cluster settings for Dedicated clusters

The following table lists editable cluster settings for Dedicated clusters and their default parameter values.

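| Parameter Name | Default | Editable | More Info |
|---|---|---|---|
| auto.create.topics.enable | false | Yes | |
| ssl.enabled.protocols | TLSv1.2 | Yes | Options: |
| ssl.cipher.suites | "" | Yes | |
| num.partitions | 6 | Yes | Limits vary, see: Kafka Cluster Types in Confluent Cloud |
| log.cleaner.max.compaction.lag.ms | 9223372036854775807 | Yes | Min: |
| log.retention.ms | 604800000 | Yes | Set to -1 for Infinite Storage |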

To modify these settings, use the CLI or the Kafka REST APIs. For more information, see Get Started with Confluent CLI or Kafka REST API Quick Start for Confluent Cloud.

Changes to the settings are applied to your Confluent Cloud cluster without additional action on your part and are persistent until the setting is explicitly changed again.

Important

These settings apply only to Dedicated clusters and cannot be modified on Basic, Standard, Enterprise, or Freight clusters.

Enable automatic topic creation

Automatic topic creation (auto.create.topics.enable) is disabled (false) by default to help prevent unexpected costs. The following commands show how to enable it. For more on this property, see broker configurations.

confluent kafka cluster configuration update --config auto.create.topics.enable=true

curl --location --request PUT 'https://<REST endpoint>/kafka/v3/clusters/<cluster-id>/broker-configs/auto.create.topics.enable' \
  --header 'Authorization: Basic <base64-encoded-key-and-secret>' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "value": "true"
  }'
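The REST examples in this topic pass a `<base64-encoded-key-and-secret>` value in the Authorization header. The following is a minimal sketch of one way to produce that value, assuming it follows the usual HTTP Basic scheme of base64-encoding the API key and secret joined by a colon. The `basic_auth_token` helper name and the credentials shown are placeholders for illustration, not part of the Confluent CLI or API.

```shell
# Hypothetical helper: build the token for "Authorization: Basic <token>".
# Assumption: the token is base64("<api-key>:<api-secret>"), as in HTTP Basic auth.
basic_auth_token() {
  # printf avoids the trailing newline that echo would add to the encoded input.
  # tr removes line wraps that some base64 implementations insert for long input.
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Example with placeholder credentials (not real values).
basic_auth_token "key" "secret"
# prints: a2V5OnNlY3JldA==
```

You could then pass the result to curl, for example with `--header "Authorization: Basic $(basic_auth_token "$API_KEY" "$API_SECRET")"`, where `API_KEY` and `API_SECRET` are environment variables you define yourself.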

Manage TLS protocols

By default, all Kafka clusters on Confluent Cloud use TLS 1.2. For Dedicated clusters only, you can enable TLS 1.3 by updating the Kafka broker configuration property for the Kafka cluster. For all other Confluent Cloud cluster types, you cannot currently enable TLS 1.3.

For details on migrating to TLS 1.3, see Migrate to TLS 1.3 on Dedicated Clusters.

Use the following confluent kafka cluster configuration update command to enable TLS 1.3 on a Dedicated cluster:

confluent kafka cluster configuration update \
  --cluster <cluster-id> \
  --config ssl.enabled.protocols=<tls-protocols>

For example, to enable TLS 1.3 on a Dedicated cluster lkc-abc123, but to continue supporting TLS 1.2, you can use the following command:

confluent kafka cluster configuration update \
  --cluster lkc-abc123 \
  --config ssl.enabled.protocols=TLSv1.3,TLSv1.2

After you run the command, you should see the following response:

Successfully requested to update configuration "ssl.enabled.protocols".

You can verify that the Dedicated cluster is using TLS 1.3 by running the confluent kafka cluster configuration describe command. For details, see Verify TLS protocols.

For details on using the confluent kafka cluster configuration update command, see confluent kafka cluster configuration update.

To enable TLS 1.3 on a Dedicated cluster with the REST API, use the PUT /kafka/v3/clusters/{cluster_id}/broker-configs/ssl.enabled.protocols endpoint. In the following command, replace <REST endpoint> with the REST endpoint of the Kafka cluster you want to update, <cluster-id> with the ID of the Dedicated cluster, and <tls-protocols> with the TLS protocols you want to enable.

curl --location --request PUT 'https://<REST endpoint>/kafka/v3/clusters/<cluster-id>/broker-configs/ssl.enabled.protocols' \
  --header 'Authorization: Basic <base64-encoded-key-and-secret>' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "value": "<tls-protocols>"
  }'

For example, to enable TLS 1.3 on a Dedicated cluster lkc-abc123, but to continue supporting TLS 1.2, you can use the following cURL command:

curl --location --request PUT 'https://pkc-00000.region.provider.confluent.cloud/kafka/v3/clusters/lkc-abc123/broker-configs/ssl.enabled.protocols' \
  --header 'Authorization: Basic BASIC_AUTH_TOKEN' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "value": "TLSv1.3,TLSv1.2"
  }'

For details on using the PUT /kafka/v3/clusters/{cluster_id}/broker-configs/ssl.enabled.protocols endpoint, see Update Dynamic Broker Configs.

After enabling TLS 1.3, you need to configure your clients to use TLS 1.3 as well. For Kafka clients, you need to update the ssl.enabled.protocols configuration property to include TLSv1.3.
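When you update a client, its existing ssl.enabled.protocols value may or may not already list TLSv1.3. The following sketch shows one way to compute the new value in a shell script. The `ensure_tls13` helper is hypothetical and is not part of any Confluent tool; it only manipulates the comma-separated string.

```shell
# Hypothetical helper: return a comma-separated protocol list that includes
# TLSv1.3, keeping any protocols that are already present.
ensure_tls13() {
  case ",$1," in
    *,TLSv1.3,*) printf '%s\n' "$1" ;;                # already listed, leave unchanged
    *)           printf '%s\n' "TLSv1.3${1:+,$1}" ;;  # prepend TLSv1.3 to the list
  esac
}

# Example: a client currently limited to TLS 1.2.
echo "ssl.enabled.protocols=$(ensure_tls13 "TLSv1.2")"
# prints: ssl.enabled.protocols=TLSv1.3,TLSv1.2
```

The printed line is in Java properties format, so it can be pasted into a client properties file if that is how your client is configured.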

Restrict cipher suites

The following commands show how to restrict the allowed TLS/SSL cipher suites (ssl.cipher.suites). For more on this property, see broker configurations.

confluent kafka cluster configuration update \
  --cluster <cluster-id> \
  --config ssl.cipher.suites=["<cipher-suites>"]

curl --location --request PUT 'https://<REST endpoint>/kafka/v3/clusters/<cluster-id>/broker-configs/ssl.cipher.suites' \
  --header 'Authorization: Basic <base64-encoded-key-and-secret>' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "value": "<cipher-suites>"
  }'

Supported cipher suites:

TLS 1.2:

TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305_SHA256

TLS 1.3:

TLS_AES_256_GCM_SHA384

TLS_AES_128_GCM_SHA256

TLS_CHACHA20_POLY1305_SHA256
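Because only the cipher suites listed above are supported, it can help to check the names before sending an update. The following is a sketch under stated assumptions: the `cipher_suites_value` helper is hypothetical, and it assumes that multiple suites are passed as a single comma-separated string, which is how Kafka list-type settings are normally written.

```shell
# Cipher suites listed as supported in this topic, one per line.
SUPPORTED_CIPHERS="TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305_SHA256
TLS_AES_256_GCM_SHA384
TLS_AES_128_GCM_SHA256
TLS_CHACHA20_POLY1305_SHA256"

# Hypothetical helper: join the given suites with commas, and fail on any
# name that is not in the supported list above.
cipher_suites_value() {
  value=""
  for suite in "$@"; do
    # -x matches the whole line, -F treats the name as a fixed string.
    if ! printf '%s\n' "$SUPPORTED_CIPHERS" | grep -qxF -- "$suite"; then
      echo "unsupported cipher suite: $suite" >&2
      return 1
    fi
    value="${value:+$value,}$suite"
  done
  printf '%s\n' "$value"
}

# Example: build a JSON request body that allows only two TLS 1.3 suites.
printf '{"value": "%s"}\n' \
  "$(cipher_suites_value TLS_AES_256_GCM_SHA384 TLS_AES_128_GCM_SHA256)"
# prints: {"value": "TLS_AES_256_GCM_SHA384,TLS_AES_128_GCM_SHA256"}
```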

Change the default number of partitions for new topics

The following commands show how to set the default number of partitions (num.partitions) for newly created topics. On clusters, num.partitions allows you to set a default for newly created topics. On topics, num.partitions allows you to specify the number of partitions for a particular topic. For more information, see topic configurations and confluent kafka cluster configuration update.

confluent kafka cluster configuration update --config num.partitions=<int>

curl --location --request PUT 'https://<REST endpoint>/kafka/v3/clusters/<cluster-id>/broker-configs/num.partitions' \
  --header 'Authorization: Basic <base64-encoded-key-and-secret>' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "value": "<int>"
  }'

Change maximum compaction lag time

The following commands show how to set the default maximum compaction lag time (log.cleaner.max.compaction.lag.ms) for new topics. For more on this property, see max.compaction.lag.ms.

confluent kafka cluster configuration update --config log.cleaner.max.compaction.lag.ms=<int>

curl --location --request PUT 'https://<REST endpoint>/kafka/v3/clusters/<cluster-id>/broker-configs/log.cleaner.max.compaction.lag.ms' \
  --header 'Authorization: Basic <base64-encoded-key-and-secret>' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "value": "<int>"
  }'

Change log retention time

The following commands show how to set the default log retention time (log.retention.ms) for new topics. For more on this property, see retention.ms.

confluent kafka cluster configuration update --config log.retention.ms=<int>

curl --location --request PUT 'https://<REST endpoint>/kafka/v3/clusters/<cluster-id>/broker-configs/log.retention.ms' \
  --header 'Authorization: Basic <base64-encoded-key-and-secret>' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "value": "<int>"
  }'
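Both log.retention.ms and log.cleaner.max.compaction.lag.ms take values in milliseconds, which are easy to mistype. The following sketch converts a number of days to milliseconds with shell arithmetic. The `days_to_ms` helper is a hypothetical convenience function, not a Confluent CLI feature.

```shell
# Hypothetical helper: convert whole days to milliseconds.
days_to_ms() {
  echo $(( $1 * 24 * 60 * 60 * 1000 ))
}

# Example: 7 days, which matches the log.retention.ms default in the table.
days_to_ms 7
# prints: 604800000
```

You could then substitute the result into the CLI command, for example `--config log.retention.ms=$(days_to_ms 3)`.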