Use the Flink SQL Query Profiler in Confluent Cloud for Apache Flink

The Query Profiler is a tool in Confluent Cloud for Apache Flink® that provides enhanced visibility into how a Flink SQL statement is processing data, which enables rapid identification of bottlenecks, data skew issues, and other performance concerns.

To use the Query Profiler, see Profile a Query.

The Query Profiler is a dynamic, real-time visual dashboard that provides insights into the computations performed by your Flink SQL statements. It boosts observability, enabling you to monitor running statements and diagnose performance issues during execution. The Query Profiler presents key metrics and visual representations of the performance and behavior of individual tasks, subtasks, and operators within a statement. Query Profiler is available in the Confluent Cloud Console.

Key features of the Query Profiler include:

Monitor in real time: Track the live performance of your Flink SQL statements, enabling you to react quickly to emerging issues.

View detailed metrics: The profiler provides a breakdown of performance metrics at various levels, including statement, task, operator, and partition levels, which helps you understand how different components of a Flink SQL job are performing.

Visualize data flow: The profiler visualizes data flow as a job graph, showing how data is processed through different tasks and operators. This helps you identify operators experiencing high latency, large amounts of state, or workload imbalances.

Reduce manual analysis: By offering immediate visibility into performance data, the profiler reduces the need for extensive manual logging and analysis, which can consume significant developer time. This enables you to focus on optimizing your queries and improving performance.

The Query Profiler helps you manage the complexities of stream processing applications and optimize query performance in real time.

Available metrics

The Query Profiler provides the following metrics for the tasks in your Flink statements.

| Metric | Definition |
|---|---|
| Backpressure | Percentage of time a subtask is stuck because it has produced output that it can’t send downstream. The downstream subtask has fallen behind and is temporarily unable to receive more records. Query Profiler reports the maximum backpressure value among all parallel subtasks for this task during the reporting interval. |
| Busyness | Percentage of time a subtask is doing productive work. Query Profiler reports the maximum busyness value among all parallel subtasks for this task during the reporting interval. Note that idleness and busyness do not always add up to 100% because backpressure is a separate state. |
| Bytes in/min | Amount of data received by a task per minute. |
| Bytes out/min | Amount of data sent by a task per minute. |
| Idleness | Percentage of time a subtask is neither backpressured nor busy. Query Profiler reports the maximum idleness value among all parallel subtasks for this task during the reporting interval. Note that idleness and busyness do not always add up to 100% because backpressure is a separate state. |
| Messages in/min | Number of events the task receives per minute. |
| Messages out/min | Number of events the task sends out per minute. |
| State size | Amount of data stored by the task during processing to track information across events. |
| Watermark | Timestamp Flink uses to track event time progress and handle out-of-order events. |

The Query Profiler provides the following metrics for the operators in your Flink statements.

| Metric | Definition |
|---|---|
| Messages in/min | Number of events the operator receives per minute. |
| Messages out/min | Number of events the operator sends out per minute. |
| State size | Amount of data stored by the operator during processing to track information across events. |
| Watermark | Timestamp Flink uses to track event time progress and handle out-of-order events. |

The Query Profiler provides the following metrics for the Kafka partitions in your data source(s).

| Metric | Definition |
|---|---|
| Active | Percentage of time the partition is active. An active partition processes events and creates watermarks to keep your statements running smoothly. |
| Blocked | Percentage of time the partition is blocked. A blocked partition is overwhelmed with data, causing delays in the watermark calculation. |
| Idle | Percentage of time the partition is idle. An idle partition has not received any events for a certain time period and is not contributing to the watermark calculation. |

Troubleshoot Flink SQL statements

The Query Profiler enables you to examine your running Flink SQL statements:

Verify throughput: is it fast enough?

Verify liveness: is data flowing?

Verify efficiency: is it cost-effective?

This guide covers the three most common operational scenarios, explaining the core concepts and providing a step-by-step resolution workflow.

Scenario 1: The High-Latency Problem (Throughput)

The issue: The job is running, but consumer lag is increasing, or the statement can’t keep up with input volume.

Always check this scenario first. If your job is backpressured, it could also cause the symptoms described in Scenario 2 (Stalled Watermarks).

Concepts and context

At any moment, each parallel instance of a Flink SQL task, which is called a subtask, is in one of three states: idle, busy, or backpressured.

Backpressured: A subtask is stuck because it has produced output that it can’t send downstream. The downstream subtask has fallen behind and is temporarily unable to receive more records. Backpressure propagates upstream, from sink to source.

Busy: A subtask is doing productive work.

Idle: A subtask is neither backpressured nor busy.

The task-level Backpressure, Busyness, and Idleness metrics reported in Query Profiler are the maximum values reported by any of the parallel subtasks for that task during the reporting interval. The rationale behind reporting these worst-case values is that it only takes one overwhelmed instance to significantly degrade the performance of the entire Flink SQL job.

Tasks vs. operators: The nodes in the graph are tasks. A single task may contain multiple operators that are chained together, for example, a join chained with a filter. You must infer which operator inside the task is causing the bottleneck.

Usually, there is only one “heavy” operator in the chain, which is almost always a join, an aggregation, or a sink materializer.

Almost always, these operators run in separate tasks, as they are usually keyed differently.

If you see a task that is 100% busy, you can be almost certain that the vast majority of the busyness comes from one of these “known to be heavy” operators.
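One way to narrow down which operators sit behind a busy task is to look at the statement's plan. The following is a minimal sketch, assuming hypothetical orders and customers tables and column names. It uses the EXPLAIN statement to print the plan for a join followed by a filter. The plan lists operators and may not show how they are chained into tasks, so treat it as a hint rather than a one-to-one map of the job graph.

```sql
-- Hypothetical tables and columns, for illustration only.
-- EXPLAIN prints the plan without running the statement.
EXPLAIN
SELECT o.order_id, c.customer_name, o.amount
FROM orders AS o
JOIN customers AS c
  ON o.customer_id = c.customer_id
WHERE o.amount > 100;
```

In a plan like this, the join is the likely "heavy" operator, and the filter is unlikely to account for much busyness.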

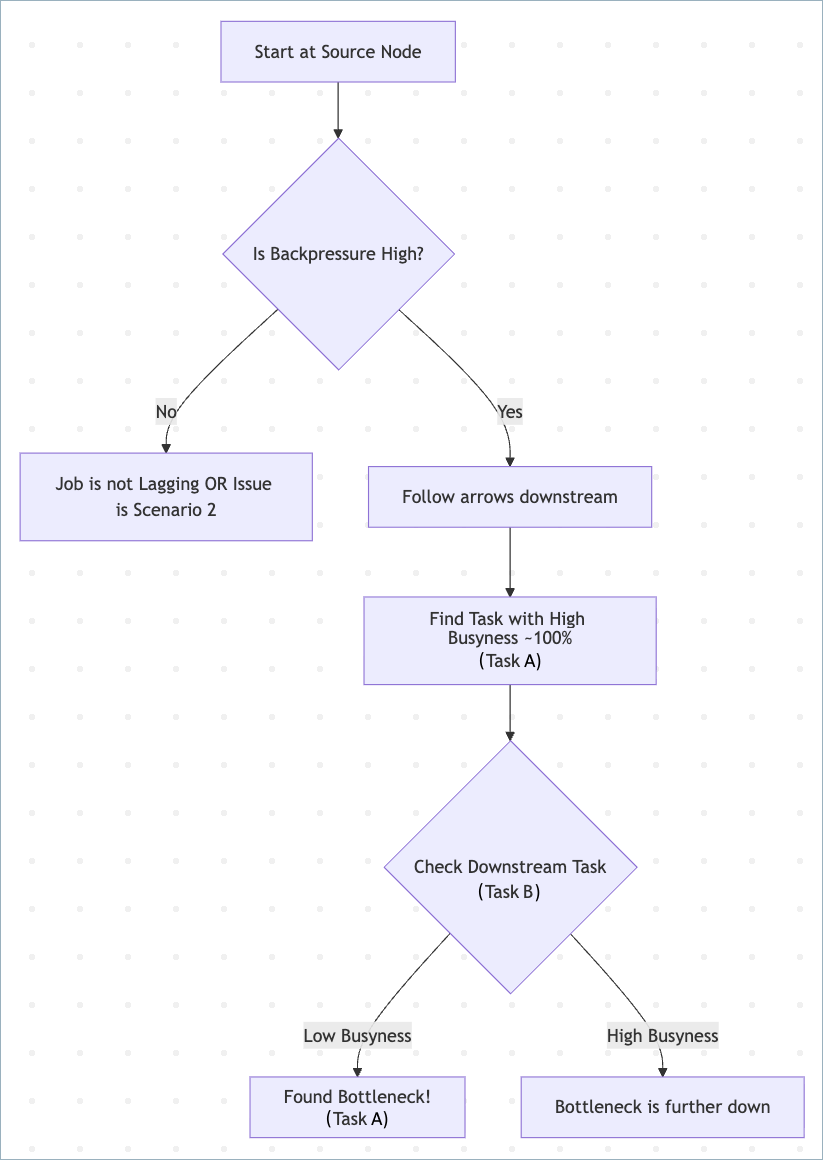

Step 1: Find the bottleneck

Use the following flowchart to identify the bottleneck.

Start at the source node. If lag exists, the source usually shows high backpressure.

Follow the graph downstream.

Look for the busiest task, with busyness near 100%.

The bottleneck is the task that performs heavy work and is near 100% busy, while its downstream neighbor is relatively idle, waiting for data.

Usually, the first upstream task with busyness near 100% is the bottleneck, even if a downstream task also shows high busyness, such as 90%.

If multiple tasks are 100% busy, focus on the one with the most complex logic like joins or aggregations.

Step 2: Analyze the bottleneck task

Click the bottleneck task and check its metrics.

Check the skew score.

Because busyness reports the MAX of subtasks, a task can report 100% busy even if only one subtask is overloaded.

Check for an uneven distribution, for example, one worker processing 10x more data than others.

For operators with significant state, look at the state size distribution first.

For operators with smaller state, independently check the data skew on numRecordsIn/numRecordsOut and numBytesIn/numBytesOut.

The Query Profiler presents skew for all of them, but skew may be less reliable when values are very low, for example, with very low state or very low throughput.

Identify the heavy operator.

You can see individual operator metrics in the operator tab.

Usual suspects: Joins, Aggregations, Window functions, and Sinks.

Unlikely: Calc, Filter, or Map (unless using complex UDFs/Regex).

Check for window firing (edge case).

If an upstream window operator and a downstream operator both show “Medium” busyness, like 60%, and fluctuating backpressure, the bottleneck might be moving. This happens when a window fires, momentarily flooding the downstream operator.

Step 3: Apply the fix

Fix the bottleneck task.

If skew is high:

Check your distribution keys (GROUP BY or PARTITION BY keys). Avoid keys with frequent NULL or default values.

Use a WHERE clause to filter bad data before it hits the aggregation.

If busyness is high (CPU-bound) and evenly distributed:

Scale up by increasing the MAX_CFU of your compute pool. Flink Autopilot will increase the parallelism of the bottleneck task.

Optimize logic. Look for expensive operations that can be simplified, like regular expressions, complex JSON parsing, or large UNNESTs.
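The skew fix above can be sketched as follows. This is a minimal example, assuming a hypothetical orders table whose customer_id column is sometimes NULL or set to a placeholder value. The 'UNKNOWN' literal is an assumption for illustration, not a value from any real schema. Because all rows with the same key are routed to the same subtask, filtering these rows before the GROUP BY keeps them from piling up on one subtask.

```sql
-- Hypothetical table, columns, and placeholder value.
SELECT customer_id, COUNT(*) AS order_count
FROM orders
WHERE customer_id IS NOT NULL        -- drop rows with a missing key
  AND customer_id <> 'UNKNOWN'       -- drop rows with an assumed default key
GROUP BY customer_id;
```

If the filtered rows still matter, consider handling them in a separate statement so they don't share a key with the main aggregation.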

Step 4: Verify the fix

Backpressure at the source should drop to 0% or fluctuate at low levels.

Consumer lag should decrease continuously.

Scenario 2: The “No Output” Problem (Liveness)

The statement is RUNNING and has available capacity, meaning that it’s not backpressured, but no data is appearing in the sink.

Ensure the job is not backpressured. If the job is backpressured, watermark flow is also obstructed.

Concepts and context

Watermarks: Markers inserted into the stream to measure event-time progress. For more information, see Time and Watermarks.

The Minimum Watermark Rule: If reading from multiple partitions, the global watermark is determined by the slowest partition.

Idle partition: A partition sending no data. If it sends no data, it advances no watermarks. This freezes the global time, preventing windows and interval joins from triggering.

Blocked partition (watermark alignment): A partition status meaning Flink has paused reading from a fast partition to let older data from other partitions catch up. This is usually a healthy symptom of Flink managing alignment, not a root cause requiring user intervention.
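To observe the combined watermark from inside a statement, the CURRENT_WATERMARK function can be queried next to the event-time column. The following is a minimal sketch, assuming a hypothetical orders table with an order_id column and an order_time event-time column that has a watermark defined.

```sql
-- Hypothetical table and columns.
-- CURRENT_WATERMARK returns NULL until a watermark has been emitted.
SELECT
  order_id,
  order_time,
  CURRENT_WATERMARK(order_time) AS current_wm
FROM orders;
```

If current_wm stays far behind order_time, or stays NULL, while rows keep arriving, a slow or silent partition is probably holding the watermark back.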

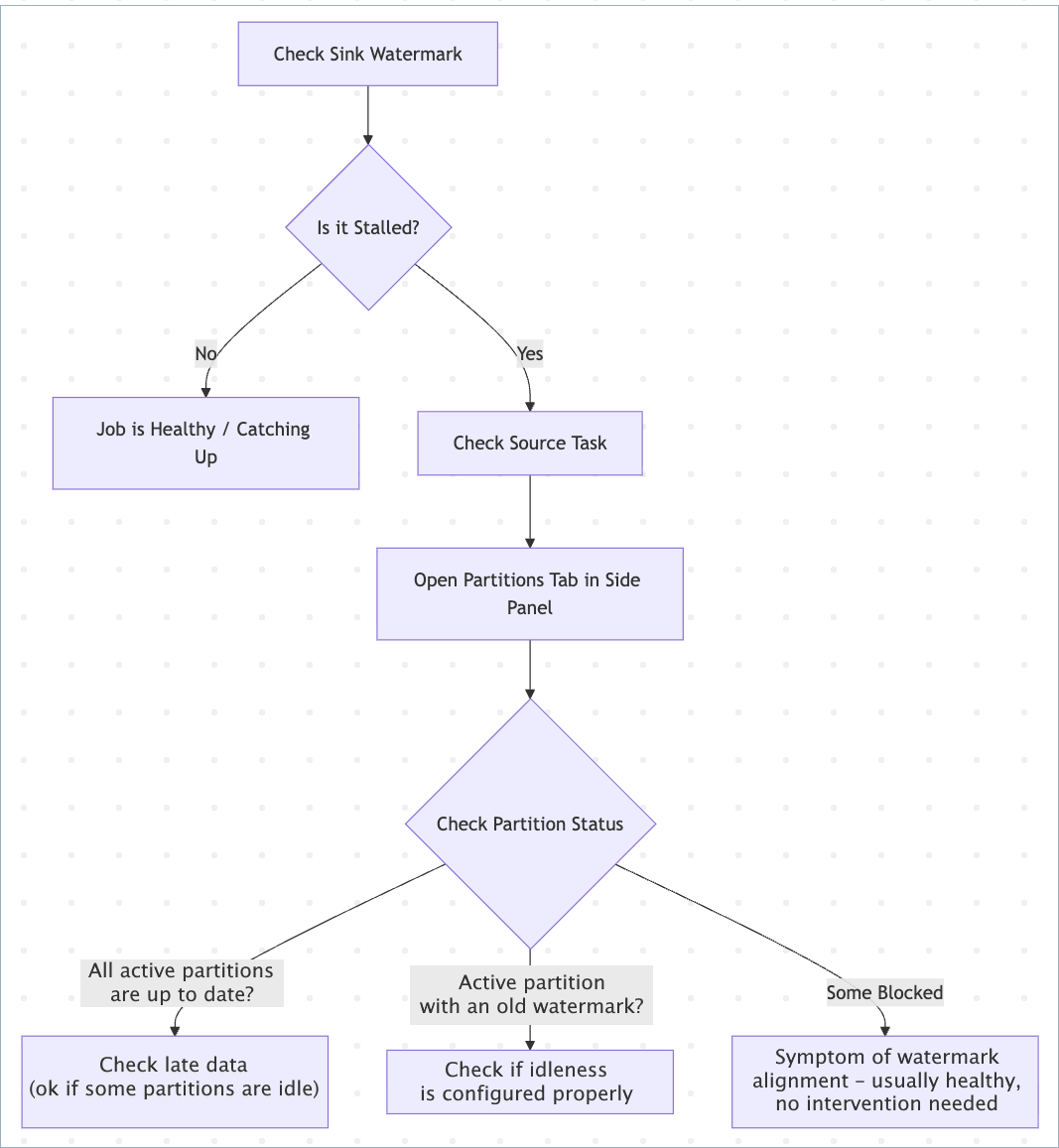

Step 1: Check the sink watermark

Use the following flowchart to identify the bottleneck.

Look at the Sink task card.

Healthy: Timestamp is near real-time.

Stalled: Timestamp is old, for example, 4 hours behind, or empty (-).

Inspect source partitions.

If a partition is marked as idle, this means idleness detection has kicked in, and the partition isn’t blocking watermarks.

Are watermarks stalled with no backpressure? If yes, check the active partitions that are holding back the combined watermark, which are the ones with the lowest watermark, and decide whether they should be marked as idle sooner.

This applies whether all partitions are active or some are idle or blocked. For stalled watermarks, always focus on active partitions with old watermarks.

A common problem occurs when you have “de-facto” idle or almost-idle partitions, but you haven’t configured idleness detection. In this case, all partitions are marked as active, with a large spread of watermark values between partitions. For example, truly active partitions would have watermarks that are nearly up to date, while de-facto idle partitions have a stalled watermark from the distant past.

Step 2: Apply the fix

Update your table definition to include the sql.tables.scan.idle-timeout property. This tells Flink to ignore partitions that haven’t sent data for a specific duration. This approach may lead to dropped late records when a more aggressive watermarking strategy is applied.

    CREATE TABLE orders (
      ...
      WATERMARK FOR order_time AS order_time - INTERVAL '5' SECOND
    ) WITH (
      'sql.tables.scan.idle-timeout' = '1 minute'
    );
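As a variant, the idle timeout might be applied to a single statement instead of the table definition. The sketch below assumes that the same option is accepted as a statement-level setting through SET. The windowed query and its five-minute window size are hypothetical and only serve to show a statement that depends on watermark progress.

```sql
-- Assumption: the option is accepted as a statement-level setting.
SET 'sql.tables.scan.idle-timeout' = '1 minute';

-- Hypothetical windowed query that only emits results when the watermark advances.
SELECT window_start, window_end, COUNT(*) AS order_count
FROM TABLE(
  TUMBLE(TABLE orders, DESCRIPTOR(order_time), INTERVAL '5' MINUTES))
GROUP BY window_start, window_end;
```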

Step 3: Verify the fix

Redeploy the statement.

Check the Watermark metric on the sink. It should advance steadily, even if some source partitions remain idle.

Scenario 3: High Cost or “Degraded” Status (Efficiency)

The statement is consuming excessive CFUs and increasing your bill, or it has entered a DEGRADED status and is failing to checkpoint.

Concepts and context

Unbounded state: Operations like JOIN without an interval and GROUP BY without windows must keep data in state indefinitely unless you tell Flink when to forget it.

State bloat: As state grows, checkpoints become slower and more expensive, eventually causing the job to degrade or crash.

Retractions and changelogs: Operators that handle updates or deletions, like SinkMaterializer and ChangelogNormalize, require significant state to manage the stream history correctly.
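The difference between unbounded and bounded state can be illustrated with two aggregations over the same input. This is a minimal sketch, assuming a hypothetical orders table with a customer_id column and an order_time event-time column. The one-hour window size is an arbitrary choice.

```sql
-- Unbounded: a count is kept for every customer_id ever seen,
-- unless a state TTL expires it.
SELECT customer_id, COUNT(*) AS order_count
FROM orders
GROUP BY customer_id;

-- Windowed: state for a window is no longer needed
-- once the watermark passes window_end.
SELECT window_start, window_end, customer_id, COUNT(*) AS order_count
FROM TABLE(
  TUMBLE(TABLE orders, DESCRIPTOR(order_time), INTERVAL '1' HOUR))
GROUP BY window_start, window_end, customer_id;
```

The two statements do not return the same results: the first produces a running total per customer, and the second produces one total per customer per hour.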

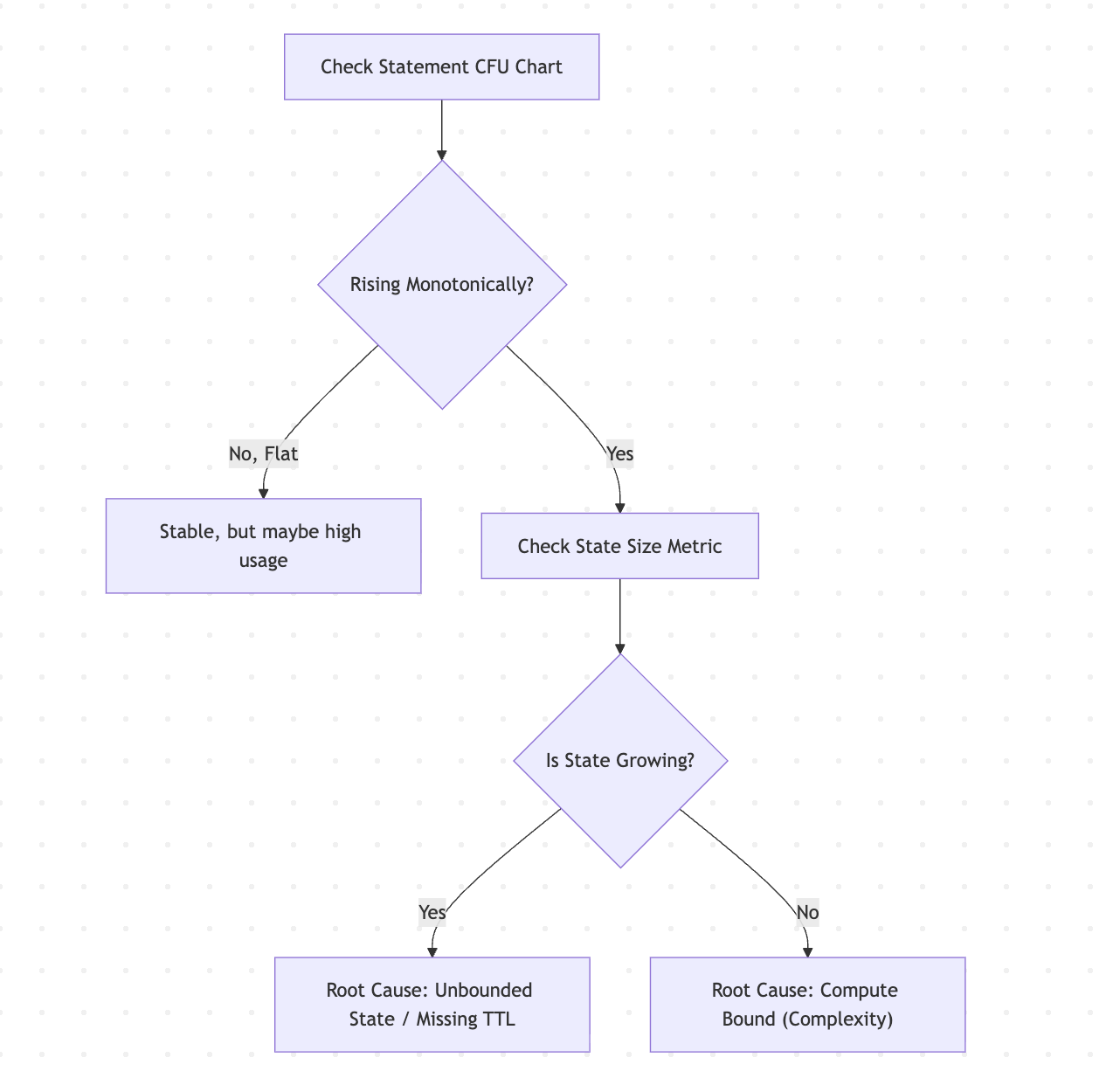

Step 1: Check resource trends

Use the following flowchart to check for unbounded state and state bloat.

Check resource trends.

Healthy: CFUs rise during startup, then plateau.

Unhealthy: CFUs continually rise over time until hitting the pool limit.

Identify the resource driver.

State Bloat: Click on the heavy operators and check the State Size.

Common culprits are join and aggregation operators.

Changelog operators: Look for SinkMaterializer or ChangelogNormalize. These operators maintain state to handle data retractions and updates.

Compute Bloat: Check Busyness. If Busyness is consistently 100% across all nodes, the query complexity is high for the cluster size.

Step 2: Apply the fix

Fix unbounded state

Consider the following approaches to fix unbounded state. This list is not exhaustive. Fixing efficiency issues may sometimes require completely rewriting the query logic to avoid heavy operations or unnecessary state retention.

Apply a state time-to-live (TTL) hint to specify operator-level Idle State Retention Time (TTL).

    SELECT /*+ STATE_TTL('posts'='6h', 'users'='2d') */ *
    FROM posts
    JOIN users ON posts.user_id = users.id;

Use interval joins instead of regular joins to limit the matching window.

    -- Efficient: Join only if events are within 1 hour of each other
    -- ... WHERE o.ts BETWEEN c.ts - INTERVAL '1' HOUR AND c.ts

Use temporal joins (lookup joins) against a versioned table. This can vastly reduce state usage, compared with standard stream-stream joins.
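The interval-join and temporal-join approaches above can be sketched as complete statements. All table and column names here are hypothetical. The interval join assumes that ts is an event-time attribute on both inputs. The temporal join assumes that currency_rates is a versioned table, with a primary key and a watermark, and that o.ts is an event-time attribute.

```sql
-- Interval join: match an order only with clicks from the preceding hour,
-- so older rows can be dropped from state.
SELECT o.order_id, c.click_id
FROM orders o, clicks c
WHERE o.user_id = c.user_id
  AND o.ts BETWEEN c.ts - INTERVAL '1' HOUR AND c.ts;

-- Temporal join: look up the rate that was valid at the order's event time.
SELECT o.order_id, o.price * r.rate AS converted_price
FROM orders AS o
JOIN currency_rates FOR SYSTEM_TIME AS OF o.ts AS r
  ON o.currency = r.currency;
```

Both forms give Flink a time bound for discarding state, which a regular join does not have.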

Fix resource exhaustion

If the query is optimized and TTL is applied, but the statement is still degraded, the workload requires more resources. Increase the compute pool size (Max CFUs).

Step 3: Verify the fix

Statement CFU usage should stabilize.

State size should stop growing monotonically. State size can still grow monotonically during the bootstrapping phase of the job, but growth should slow down and approach a reasonable limit.

State size can be unbounded and can initially grow very quickly, for example, 1 GB/hour, but after the job catches up, state should grow very slowly, for example, 1 GB/year.

The statement status should return to RUNNING.