FAQ for Confluent Cloud Metrics

Find answers to frequently asked questions about Confluent Cloud Metrics.

Can the Metrics API be used to reconcile my bill?

The Metrics API is intended to provide information for monitoring, troubleshooting, and capacity planning. It is not intended as an audit system for reconciling bills, because the metrics may not include request overhead for the Kafka protocol. For more details, see the billing documentation.

Why do queries for metrics other than retained_bytes return empty data sets for topics that exist?

If the queried time range contains only values of 0.0, the API returns an empty set. When the time range contains non-zero data, time slices with values of 0.0 are included in the results.
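A client that charts or alerts on these metrics may want to treat an empty response as a run of zeros. The sketch below assumes the query response carries a `data` list of objects with `timestamp` and `value` fields; verify that shape against your own responses. The helper name `fill_missing_with_zero` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

def fill_missing_with_zero(data, start, end, step):
    """Return one (timestamp, value) pair per step in [start, end).

    data: list of dicts with ISO-8601 "timestamp" and numeric "value" keys
    (assumed response shape). Timestamps absent from data are filled with 0.0.
    """
    observed = {
        # Accept a trailing "Z", which older fromisoformat versions reject.
        datetime.fromisoformat(point["timestamp"].replace("Z", "+00:00")): point["value"]
        for point in data
    }
    series = []
    ts = start
    while ts < end:
        series.append((ts, observed.get(ts, 0.0)))
        ts += step
    return series

start = datetime(2024, 1, 1, tzinfo=timezone.utc)
# An empty API response becomes three explicit zero-valued minutes.
zeros = fill_missing_with_zero([], start, start + timedelta(minutes=3), timedelta(minutes=1))
```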

Why didn’t retained_bytes decrease after I changed the retention policy for my topic?

The value of retained_bytes for each returned data point is the maximum over that data point’s time window. If data is deleted during the current window, you will not see the effect until the next window begins. For example, if you produced 4 GB of data per day for the last 30 days and queried retained_bytes over the last three days with a one-day granularity, the query would return 112 GB, 116 GB, and 120 GB as a time series. If you then deleted all data in the topic and stopped producing data, the query would keep returning the same values until the next day. When queried at the start of the next day, the same query would return 116 GB, 120 GB, and 0 GB.
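The arithmetic in this example can be reproduced with a toy model of max-per-window aggregation. This is not the Metrics API itself; the intraday readings below are hypothetical values in GB, chosen so the topic grows by 4 GB per day and is emptied late on day 30.

```python
def max_per_interval(readings_by_interval):
    """Return the value a max-aggregated metric reports for each time window."""
    return [max(readings) for readings in readings_by_interval]

# Hypothetical intraday readings in GB for a topic growing by 4 GB per day.
day_28 = [108, 110, 112]
day_29 = [112, 114, 116]
day_30 = [116, 118, 120, 0]  # all data deleted late on day 30
day_31 = [0, 0]              # production stopped, so the topic stays empty

print(max_per_interval([day_28, day_29, day_30]))  # [112, 116, 120]
print(max_per_interval([day_29, day_30, day_31]))  # [116, 120, 0]
```

Because the day-30 window still contains the 120 GB reading taken before the deletion, its maximum stays at 120 GB; only the day-31 window reflects the empty topic.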

What are the supported granularity levels?

Data is stored at a granularity of one minute. However, the allowed granularity for a query is restricted by the size of the query’s interval.

For the currently supported granularity levels and query restrictions, see the API Reference.
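Because data is stored per minute, a one-minute granularity (`PT1M` in ISO-8601 duration notation) is the finest level a query can ask for; whether a given granularity is accepted for a given interval is checked by the API, so the sketch below does not encode those limits. It builds a query body using field names (`aggregations`, `filter`, `granularity`, `intervals`) taken from public Metrics API v2 examples. Treat those field names, the example metric name, and the placeholder cluster ID as assumptions to verify against the API Reference.

```python
from datetime import datetime, timezone

def build_query(metric, cluster_id, start, end, granularity):
    """Build a Metrics API query body (field names assumed from public v2 examples)."""
    return {
        "aggregations": [{"metric": metric}],
        "filter": {"field": "resource.kafka.id", "op": "EQ", "value": cluster_id},
        "granularity": granularity,  # ISO-8601 duration such as "PT1M" or "PT1H"
        "intervals": [f"{start.isoformat()}/{end.isoformat()}"],  # "start/end"
    }

body = build_query(
    "io.confluent.kafka.server/received_bytes",
    "lkc-xxxxx",  # placeholder cluster ID
    datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc),
    datetime(2024, 1, 1, 6, 0, tzinfo=timezone.utc),
    "PT1M",
)
```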

What is the retention time of metrics in the Metrics API?

Metrics are retained for seven days.

Do not confuse retention time with the granularity levels and query intervals described in the API Reference. Confluent uses granularity and intervals to validate requests, while retention time is the length of time Confluent stores data. The largest interval Confluent supports is seven days. Requesting a longer interval gains nothing, because Confluent doesn’t retain data past seven days.
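A client can avoid asking for data that no longer exists by trimming the start of each interval to the seven-day retention window before sending the query. The following is a minimal sketch; the helper name `clamp_to_retention` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=7)  # retention period stated in this FAQ

def clamp_to_retention(start, end, now=None):
    """Move start forward so the interval never reaches past the retention window."""
    if now is None:
        now = datetime.now(timezone.utc)
    earliest = now - RETENTION
    if end <= earliest:
        raise ValueError("interval lies entirely outside the retention window")
    return max(start, earliest), end
```

For example, on January 10 a request starting on January 1 is trimmed to start on January 3, while a request starting on January 5 is left unchanged.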

How do I monitor consumer lag?

Query for the average consumer lag by using the Metrics API.

There are also several other ways to monitor consumer lag, including client metrics, the UI, the CLI, and the Admin API. All of these methods are available when using Confluent Cloud.
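A consumer lag query through the Metrics API can be sketched as a request body that filters on one cluster and one consumer group and groups results by topic. The metric name `io.confluent.kafka.server/consumer_lag_offsets`, the labels `metric.consumer_group_id` and `metric.topic`, and the compound `AND` filter shape are assumptions based on public examples; confirm them with the /descriptors/metrics endpoint and the API Reference before use.

```python
def consumer_lag_query(cluster_id, consumer_group_id, interval, granularity="PT1M"):
    """Query body for per-topic lag of one consumer group (names are assumptions)."""
    return {
        "aggregations": [{"metric": "io.confluent.kafka.server/consumer_lag_offsets"}],
        "filter": {
            "op": "AND",
            "filters": [
                {"field": "resource.kafka.id", "op": "EQ", "value": cluster_id},
                {"field": "metric.consumer_group_id", "op": "EQ", "value": consumer_group_id},
            ],
        },
        "granularity": granularity,
        "group_by": ["metric.topic"],  # one time series per topic
        "intervals": [interval],       # ISO-8601 "start/end" string
    }

body = consumer_lag_query("lkc-xxxxx", "my-group", "2024-01-01T00:00:00Z/2024-01-01T01:00:00Z")
```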

How do I know if a given metric is in preview or generally available (GA)?

You can find each metric’s lifecycle stage, for example, preview or generally available, in the response from the /descriptors/metrics endpoint. While a metric is in the preview stage, its labels may change in breaking ways without an API version change. This type of iteration is necessary for Confluent to stabilize changes and ensure the metric is suitable for most use cases.
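To find out which metrics you depend on are still in preview, you can group the descriptors by lifecycle stage. This sketch assumes the /descriptors/metrics response is JSON shaped like `{"data": [{"name": ..., "lifecycle_stage": ...}]}` with stage values such as `PREVIEW` and `GENERAL_AVAILABILITY`; the second sample metric name is hypothetical.

```python
import json

def split_by_lifecycle(descriptors_json):
    """Group metric names by lifecycle stage from a /descriptors/metrics response body."""
    stages = {}
    for descriptor in json.loads(descriptors_json)["data"]:
        stage = descriptor.get("lifecycle_stage", "UNKNOWN")
        stages.setdefault(stage, []).append(descriptor["name"])
    return stages

sample = json.dumps({"data": [
    {"name": "io.confluent.kafka.server/received_bytes", "lifecycle_stage": "GENERAL_AVAILABILITY"},
    {"name": "example/preview_metric", "lifecycle_stage": "PREVIEW"},  # hypothetical
]})
preview_only = split_by_lifecycle(sample).get("PREVIEW", [])
```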

What should I do if a query to Metrics API returns a timeout response (HTTP error code 504)?

If queries exceed the timeout (the maximum query time is 60 seconds), consider one or more of the following approaches:

Reduce the time interval.

Use a coarser granularity so that fewer data points are returned.

Break up the query on the client side to return fewer data points. For example, you can query for specific topics instead of all topics at once.

These approaches are especially important when querying for partition-level data over days-long intervals.
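Breaking a long query into shorter ones on the client side can be sketched as splitting the time range into consecutive chunks and sending one query per chunk. The helper names below are hypothetical, and the chunk size you need depends on how much data each query returns.

```python
from datetime import datetime, timedelta, timezone

def split_interval(start, end, chunk):
    """Yield consecutive (chunk_start, chunk_end) pairs covering [start, end)."""
    cursor = start
    while cursor < end:
        chunk_end = min(cursor + chunk, end)
        yield cursor, chunk_end
        cursor = chunk_end

def to_interval_string(pair):
    """Format a (start, end) pair as an ISO-8601 "start/end" interval string."""
    return f"{pair[0].isoformat()}/{pair[1].isoformat()}"

start = datetime(2024, 1, 1, tzinfo=timezone.utc)
# Two and a half days split into one-day chunks gives three smaller queries.
chunks = list(split_interval(start, start + timedelta(days=2, hours=12), timedelta(days=1)))
```

The last chunk is shortened so the chunks never extend past the requested end time.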

Why are my Confluent Cloud metrics displaying only 1 hour, 6 hours, or 24 hours of data?

This is a known limitation that occurs in some clusters with a partition count of more than 2,000. Work is ongoing to resolve this issue, but there is no fix at this time.

What should I do if a query returns a 5xx response code?

Confluent recommends that you retry these types of responses. A 5xx response usually indicates a transient server-side issue. Design your client implementations for querying the Metrics API to be resilient to these responses persisting for several minutes.
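One common way to make a client resilient to 5xx responses is to retry with capped exponential backoff and jitter. This is a minimal sketch, not Confluent’s client logic: `send` is a hypothetical zero-argument callable standing in for your HTTP request and returning `(status_code, body)`, and the default limits are illustrative.

```python
import random
import time

RETRYABLE_STATUSES = range(500, 600)  # any 5xx response

def call_with_retry(send, max_attempts=8, base_delay=1.0, max_delay=60.0, sleep=time.sleep):
    """Call send() until it returns a non-5xx status or attempts run out.

    With the defaults, the backoff caps sum to 123 seconds, so retries span
    up to about two minutes; raise max_attempts for longer outages.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        status, body = send()
        if status not in RETRYABLE_STATUSES or attempt == max_attempts - 1:
            return status, body
        # Exponential backoff with full jitter, capped at max_delay seconds.
        delay = min(max_delay, base_delay * 2 ** attempt)
        sleep(random.uniform(0, delay))
```

Non-5xx responses, including 4xx errors, are returned immediately, because retrying them would not change the outcome.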

How do I collect metrics for Confluent Cloud resources using Cloud Console?

From the Administration menu, select Metrics.

From Explore available metrics, select a Metric and a Resource. If there is data available for the metric you selected, the chart displays the data.

You can select a new time interval to meet your needs.

To copy a cURL template of the query used to display the selected data, select Copy cURL template. A template of the cURL command is added to your clipboard. Paste the template into a command prompt (Windows) or terminal (Mac, Linux). Edit the template to add an API key and secret to authenticate the request.

Does Cloud Console make use of all the metrics available through the Metrics API?

Cloud Console uses the Metrics API to provide metrics. However, if you have a high number of resources, Cloud Console may not be able to query all metrics. For example, if you have 1,000 or more topics or consumer groups, Cloud Console may not be able to query metrics for the topics list or consumer lag pages. In that situation, use the Metrics API directly to get the information that is unavailable through Cloud Console.
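When you query the API directly for many topics or consumer groups, grouped results can span several pages. The sketch below follows page tokens until none remain. It assumes each response carries `meta.pagination.next_page_token` when more pages exist; `fetch_page` is a hypothetical callable that sends the query (adding the token when it is not `None`) and returns the parsed JSON.

```python
def iterate_pages(fetch_page):
    """Yield every data point across paginated Metrics API responses."""
    token = None
    while True:
        response = fetch_page(token)
        yield from response.get("data", [])
        token = response.get("meta", {}).get("pagination", {}).get("next_page_token")
        if not token:
            return

# Hypothetical two-page response, keyed by the page token used to fetch it.
pages = {
    None: {"data": [{"metric.topic": "a"}, {"metric.topic": "b"}],
           "meta": {"pagination": {"next_page_token": "p2"}}},
    "p2": {"data": [{"metric.topic": "c"}]},
}
topics = [point["metric.topic"] for point in iterate_pages(pages.__getitem__)]
```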

How do I access notifications with Cloud Console?

Access the Manage notifications page by clicking the Alert bell icon in the upper right of the console. To learn more about notifications, see Notifications for Confluent Cloud.

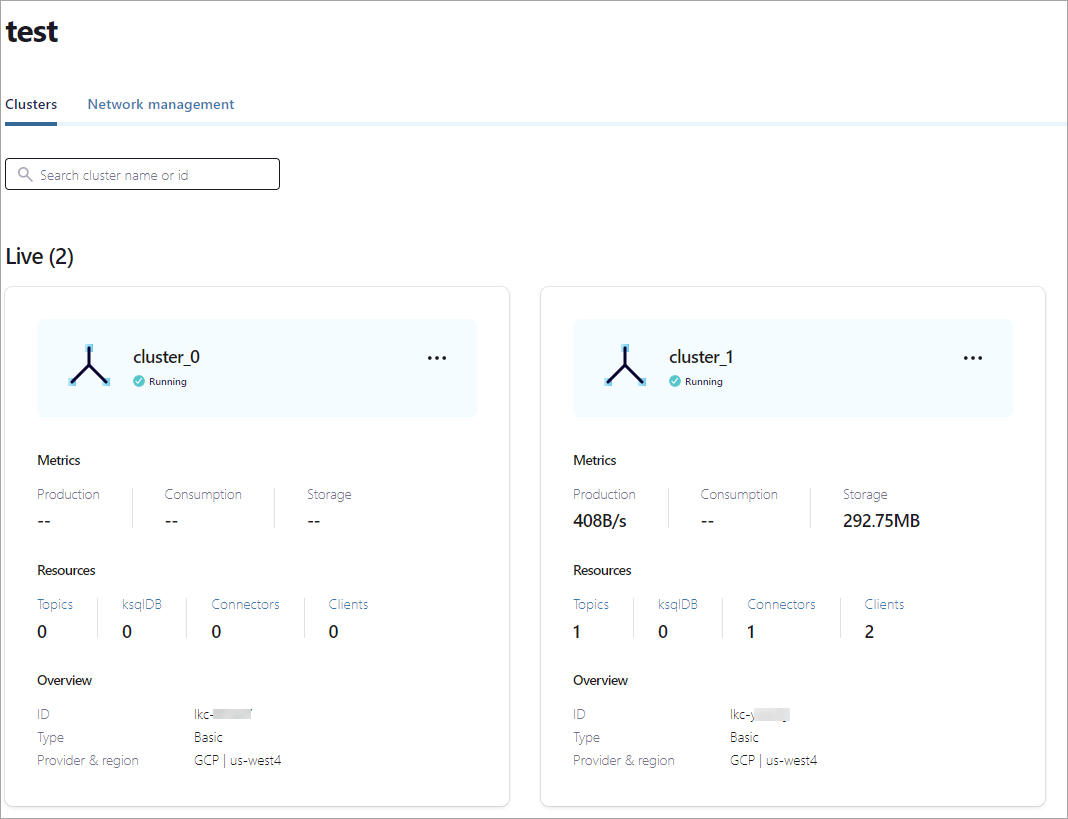

How do I monitor cluster activity with Cloud Console?

You can monitor cluster activity and usage from the Clusters page within each of your environments. To view the page, sign in to Confluent Cloud and choose an environment; the Clusters page then displays.

How do I integrate Confluent Cloud metrics with third-party monitoring tools?

Confluent Cloud supports integrations with several third-party monitoring tools including Datadog, Dynatrace, Grafana Cloud, Prometheus, and New Relic OpenTelemetry. These integrations allow you to monitor Confluent Cloud alongside the rest of your applications using out-of-the-box dashboards. For setup instructions and API key requirements, see Integrate Confluent Cloud Metrics API with Third-party Monitoring Tools.

What’s the difference between using the Metrics API directly vs. third-party integrations?

Third-party integrations provide pre-built dashboards and simplified setup through their UIs, while direct Metrics API usage offers more flexibility for custom queries and integrations. Use third-party integrations for quick setup with standard dashboards, or use the Metrics API directly for custom monitoring solutions and programmatic access. For more information, see Confluent Cloud Metrics.

Why don’t I see consumer lag metrics for my consumer group?

The Metrics API doesn’t include consumers that use the assign() method because the coordinator of a consumer group does not manage consumer assignment for these consumers. Additionally, consumer groups with no active consumers return no results, and lag values don’t update during rebalancing. For more details, see Use the Metrics API to monitor Kafka consumer groups.

What’s the difference between consumer lag in the Metrics API vs. kafka-consumer-groups command?

The Metrics API differs from the kafka-consumer-groups command output in two key ways: consumer groups do not update lag values during rebalancing, and consumer groups with no active consumers return no result. As a result, the two methods can report different values during rebalancing periods. For more information, see Use the Metrics API to monitor Kafka consumer groups.

How do I set up alerts for notifications?

You can configure notifications for account, billing, and service events using email, Slack, Microsoft Teams, or generic webhooks. Notifications are categorized by severity level and type. For setup instructions, see Notifications for Confluent Cloud.

What type of API key do I need for the Metrics API?

You must use an API key resource-scoped for resource management to communicate with the Metrics API. Cluster-scoped API keys do not work for accessing metrics. For information on creating the correct API key, see Confluent Cloud Metrics.

How do I troubleshoot authentication issues with the Metrics API?

Authentication failures are commonly caused by using the wrong API key scope. Ensure you’re using an API key resource-scoped for resource management, not one scoped to a Kafka cluster. The API key and secret, joined by a colon, must be Base64-encoded in the Authorization header (HTTP Basic authentication). For authentication requirements, see the API Reference.
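If you build the Authorization header yourself, the encoding follows standard HTTP Basic authentication: join the key and secret with a colon, Base64-encode the result, and prefix it with `Basic `. This is a minimal sketch with placeholder credentials; the helper name is hypothetical. Many HTTP clients do this for you, for example curl’s `-u KEY:SECRET` option.

```python
import base64

def metrics_api_auth_header(api_key, api_secret):
    """Return an HTTP Basic Authorization header value for a Cloud API key."""
    token = base64.b64encode(f"{api_key}:{api_secret}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"

headers = {
    "Authorization": metrics_api_auth_header("MY_API_KEY", "MY_API_SECRET"),  # placeholders
    "Content-Type": "application/json",
}
```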

What are KIP-714 client metrics and how do they differ from server-side metrics?

KIP-714 enables Kafka clients to push selected metrics directly to Confluent Cloud brokers for centralized observability. Unlike traditional server-side metrics, these metrics provide a client-side perspective on performance and are collected centrally without separate infrastructure. For more information, see KIP-714 Client Metrics.

Why are my client applications being throttled?

Throttling is normal in cloud services to prevent cluster outages. Check cluster load metrics to determine if throttling is due to server-side capacity limits or client-side implementation issues. Server-side throttling typically correlates with high cluster load, while client-side issues may involve unbalanced partition access. For analysis guidance, see Review client application throttles.

What should I do if I need metrics data older than seven days?

Metrics are retained for seven days in the Metrics API. For longer-term storage, you’ll need to implement your own collection and storage solution by regularly querying the API and storing the results. Consider using the export endpoint for Prometheus-compatible data collection. For more on the retention limit, see What is the retention time of metrics in the Metrics API?
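With the export endpoint, a scraper such as Prometheus polls a URL on a schedule and keeps the samples for as long as its own storage retention allows. The sketch below builds such a URL for several clusters. The base URL and the repeated `resource.kafka.id` query parameter are assumptions taken from public examples; check them against the API Reference.

```python
from urllib.parse import urlencode

# Assumed export endpoint; verify against the API Reference.
EXPORT_URL = "https://api.telemetry.confluent.cloud/v2/metrics/cloud/export"

def export_url(kafka_cluster_ids):
    """Build an export URL requesting Prometheus-format metrics for several clusters."""
    query = urlencode([("resource.kafka.id", cid) for cid in kafka_cluster_ids])
    return f"{EXPORT_URL}?{query}"

url = export_url(["lkc-aaaaa", "lkc-bbbbb"])  # placeholder cluster IDs
```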

How do I track usage by team or application on Dedicated clusters?

Assign each team or application its own service account, then use the Metrics API with the principal_id label to separate usage by service account. This enables accurate cost allocation and showback calculations based on actual resource consumption. This applies only to Dedicated clusters. For detailed implementation, see Track Usage by Team on Dedicated Clusters in Confluent Cloud.
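Once you have query results grouped by principal, a showback split is a matter of summing each principal’s values and dividing by the total. This sketch assumes each data point is a dict with `metric.principal_id` and `value` keys; the service account IDs and byte counts are hypothetical.

```python
from collections import defaultdict

def usage_share_by_principal(rows):
    """Return each principal's fraction of the total across all data points."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["metric.principal_id"]] += row["value"]
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {principal: 0.0 for principal in totals}
    return {principal: total / grand_total for principal, total in totals.items()}

rows = [
    {"metric.principal_id": "sa-team-a", "value": 300.0},  # hypothetical service accounts
    {"metric.principal_id": "sa-team-b", "value": 100.0},
    {"metric.principal_id": "sa-team-a", "value": 100.0},
]
print(usage_share_by_principal(rows))  # {'sa-team-a': 0.8, 'sa-team-b': 0.2}
```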

What metrics help me determine if I need to scale my Dedicated cluster?

Key metrics include cluster load percentage, CKU count, hot partition indicators, consumer lag, and client throttling metrics. Monitor these together to make informed scaling decisions. A comprehensive analysis approach is covered in Dedicated Cluster Performance and Expansion in Confluent Cloud. This applies only to Dedicated clusters.

When should I be concerned about cluster load percentage for my Dedicated cluster?

Cluster load is a percentage value between 0% and 100%, with 0% indicating no load and 100% representing a fully saturated cluster. Higher load values commonly result in higher latencies and client throttling; when cluster load exceeds 80%, expect higher latency and some throttling. For details on interpreting and using cluster load metrics, see Monitor cluster load. This applies only to Dedicated clusters.

How do I identify if my Dedicated cluster has data skew issues?

Data skew manifests as a significant difference between average and maximum cluster load values. If you see a low average cluster load but a much higher maximum cluster load, this indicates skew, where some nodes are overloaded while others are underutilized. Use the hot partition metrics to identify problematic partitions. For more information on identifying and addressing skew, see Monitor cluster load and Identify hot partitions. This applies only to Dedicated clusters.
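The pattern described here, a low average load paired with a much higher maximum, can be turned into a simple check on the two values. The thresholds below (`min_gap_pct` and `low_avg_pct`) are hypothetical and not Confluent guidance; tune them for your workload.

```python
def looks_skewed(avg_load_pct, max_load_pct, min_gap_pct=30.0, low_avg_pct=50.0):
    """Flag a low average cluster load with a much higher maximum (values 0-100)."""
    if not 0.0 <= avg_load_pct <= max_load_pct <= 100.0:
        raise ValueError("expected 0 <= average <= maximum <= 100")
    return avg_load_pct < low_avg_pct and (max_load_pct - avg_load_pct) >= min_gap_pct

skewed = looks_skewed(25.0, 85.0)    # low average, high maximum: likely skew
balanced = looks_skewed(60.0, 70.0)  # average and maximum close together
```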

When should I expand my Dedicated cluster vs. optimize my applications?

Use the cluster load metric to evaluate expansion needs. If cluster load consistently exceeds 70-80% or you see sustained high load with performance issues, expansion may help. However, consider the historical perspective: determine whether the load represents normal peaks or a sustained issue. For comprehensive guidance on performance evaluation and expansion decisions, see Dedicated Cluster Performance and Expansion in Confluent Cloud. This applies only to Dedicated clusters.
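Distinguishing sustained load from normal peaks can be sketched as counting how many readings in a window exceed a threshold. The 80% default comes from this FAQ; `min_fraction` is a hypothetical cutoff, and the window can be at most the seven days of data the Metrics API retains.

```python
def sustained_high_load(load_samples_pct, threshold_pct=80.0, min_fraction=0.9):
    """Return True when at least min_fraction of readings exceed threshold_pct.

    load_samples_pct: cluster load readings (0-100), for example hourly values
    over the past week. A single spike does not count as sustained load.
    """
    if not load_samples_pct:
        return False
    above = sum(1 for value in load_samples_pct if value > threshold_pct)
    return above / len(load_samples_pct) >= min_fraction

steady = sustained_high_load([85.0] * 10)          # consistently above 80%
one_peak = sustained_high_load([50.0] * 9 + [95.0])  # a single normal peak
```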

How does the Metrics API handle requests that include inaccessible resources?

The /export endpoint on the Metrics API supports partial success responses for requests that include deleted or inaccessible resources. The response body, in Prometheus format, includes metrics for all accessible resources. For any inaccessible resources, an error status metric is included in the response body, allowing you to identify individual resource failures without losing visibility into the rest of your fleet. For more information, see this support article.

Note

Partial success response is a Limited Availability feature in Confluent Cloud.

Why is my resource count inaccurate?

Resource counts are calculated using resources that are accessible from within Confluent Cloud and do not include data from private or restricted networks.