Manage Security for Cluster Linking on Confluent Cloud

This page describes security considerations, configurations, options, and use cases for Cluster Linking on Confluent Cloud.

RBAC roles and Kafka ACLs summary

A user needs role-based access control (RBAC) or Apache Kafka® ACLs on the destination cluster in order to create and manage cluster links and mirror topics.

The cluster link itself needs specific RBAC roles or Kafka ACLs on the source cluster to read data and metadata.

Note that RBAC role(s) and Kafka ACL(s) are not mutually exclusive. A principal may have a mix of RBAC roles and Kafka ACLs, if that would better suit your security model. In the table below, authentication options are API key or OAuth for all roles and operations.

| Principal | Operation | Cluster | Kafka RBAC Roles | or Kafka ACLs |
|---|---|---|---|---|
| User, or your tooling | Create or modify a cluster link | Destination cluster | | ALTER: Cluster |
| User, or your tooling | Create or modify a cluster link | Source cluster for a source-initiated link | | ALTER: Cluster |
| User, or your tooling | Create or modify a mirror topic | Destination cluster | | |
| User, or your tooling | Create or modify a mirror topic | Source cluster for a source-initiated link | | |
| User, or your tooling | List mirror topics and their statuses | Destination cluster | ClusterOperator | |
| User, or your tooling | | Destination cluster | ClusterOperator and ResourceOwner on Topics | |
| User, or your tooling | | Source cluster | CloudClusterAdmin and ResourceOwner on Topics | |
| Cluster link | | Source cluster | CloudClusterAdmin and ALTER:Cluster | |
| User, or your tooling | | Destination cluster | CloudClusterAdmin and ResourceOwner on Topics | |
| Cluster link | Mirror topics | Source cluster | | |
| Cluster link | Sync consumer offsets | Source cluster | | |
| Cluster link | Sync ACLs | Source cluster | | DESCRIBE: Cluster |
| Cluster link | Source-initiated cluster link to a Confluent Cloud cluster | Destination cluster | CloudClusterAdmin | ALTER: Cluster |
| Cluster link | Source-initiated cluster link to a Confluent Cloud cluster | Source cluster | CloudClusterAdmin | ALTER: Cluster |

Tip

To learn more about the commands reverse-and-start, reverse-and-pause, and truncate-and-restore, see Reverse a source and mirror topic and Convert a mirror topic to a normal topic.

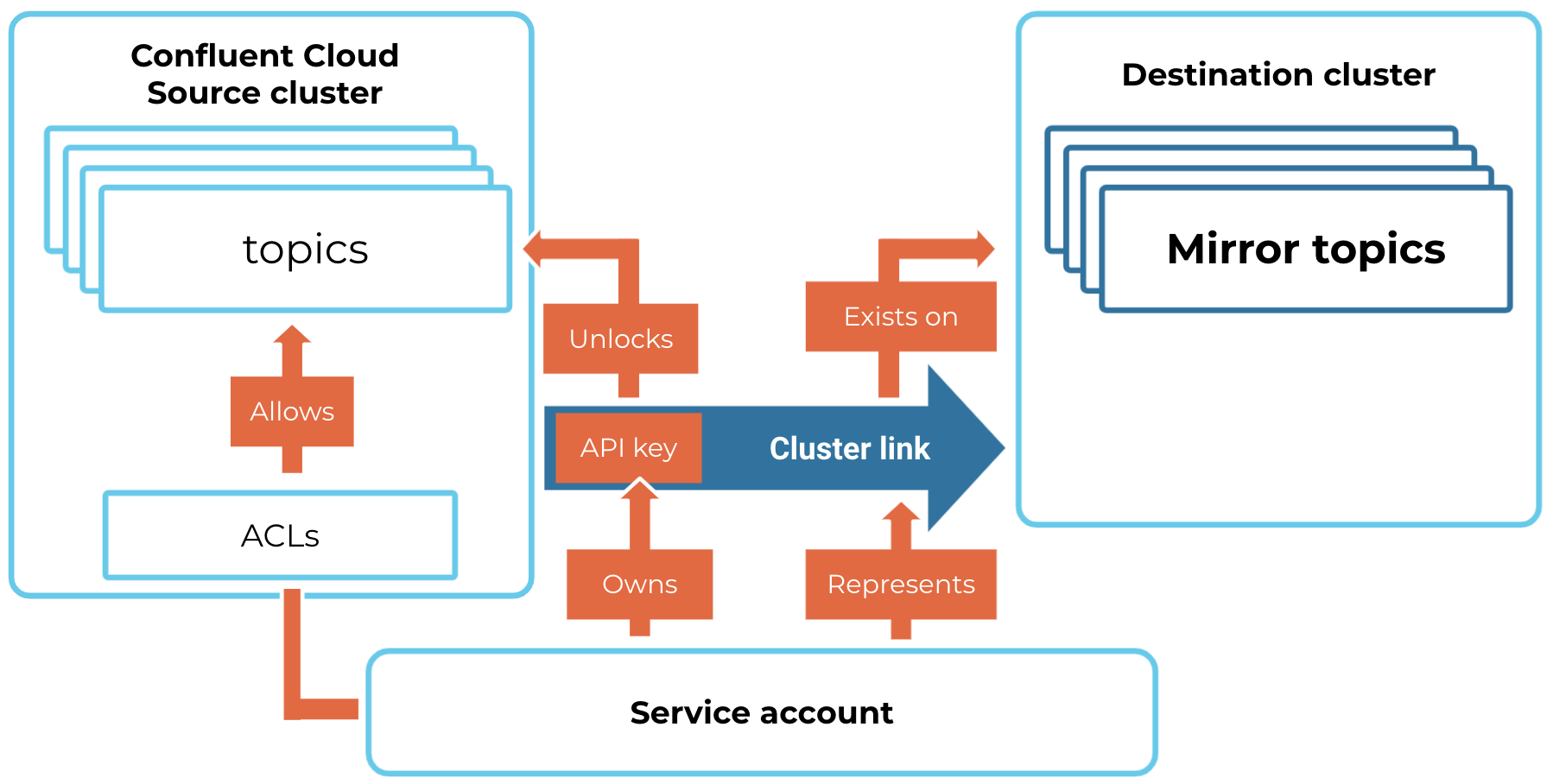

Security model

The Cluster Linking security model separates the permissions and credentials needed for each step of its lifecycle. This paradigm enables many teams to use cluster link operations on the topics that they already own. It also allows the owner of one cluster to share data over a cluster link with another team or organization, while giving that team or organization only READ access to the shareable mirror topics.

Advantages

The advantages of this model are:

Permission to create and manage a cluster link is controlled by role-based access control (RBAC) or access control lists (ACLs) on the destination cluster.

Cluster link access to the source cluster data is controlled, and can be limited, by RBAC or ACLs on the source cluster. This allows the source cluster owner to secure the data leaving the cluster.

Operations performed on a cluster link and mirror topics are available in Confluent Cloud Audit Logs.

The cluster link has its own credentials and its own RBAC role bindings or ACLs on the source cluster. You can manage its READ access to the source cluster just like any other Kafka consumer, so you can use your existing security workflows with cluster links.

If the source cluster is a Confluent Cloud cluster, Cluster Linking supports all valid authentication and authorization mechanisms on Confluent Cloud, and uses these concepts in the same manner as a Kafka client application:

An admin creates a service account for the cluster link, and creates a source cluster API key and secret for that service account.

Alternatively, the cluster link can be authenticated with OAuth and its principal will be an identity pool.

If your source cluster is a Confluent Platform or open-source Apache Kafka cluster, the cluster link can use SASL/PLAIN, SASL/SCRAM, SASL/OAUTHBEARER (OAuth), and/or mTLS. It cannot use Kerberos.

The cluster link itself requires only read ACLs on the source cluster. No ACLs or credentials are required on the destination cluster in Confluent Cloud. This simplifies management.

When sharing data between teams, the owner of the source cluster can limit READ access to the mirror topics on the destination cluster on a topic-by-topic basis.

When sending data between the source and destination clusters, Cluster Linking uses Transport Layer Security (TLS) to encrypt data in transit.

If configured to do so, Cluster Linking can also sync all client ACLs, or a configurable subset, from the source cluster to the destination cluster.

Building blocks

The building blocks of Cluster Linking are:

A cluster link, which connects a source cluster to a destination cluster

A mirror topic, which exists on the destination cluster, and receives a copy of all messages that go to its source topic

A source topic, which is a regular topic that exists on the source cluster and is the source of data for the mirror topic

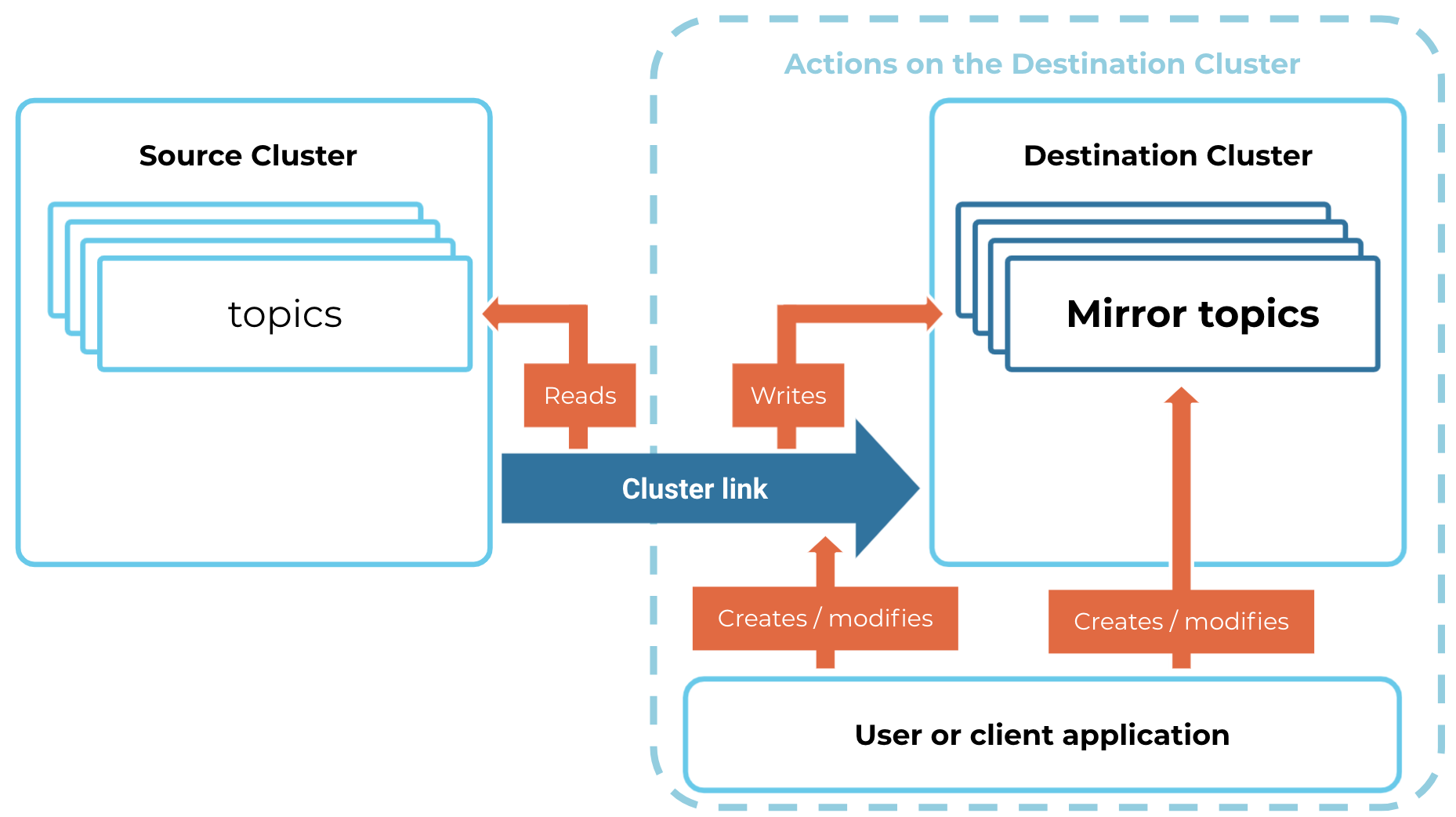

Cluster Linking lifecycle and permissions

Each phase of the Cluster Linking lifecycle requires different permissions. The phases are:

A user or client application creates a cluster link.

A user or client application creates a mirror topic using the cluster link.

The cluster link reads topic data, consumer offsets, and ACLs from the source cluster.

The cluster link writes topic data, consumer offsets, and ACLs to the destination cluster.

A user or client application modifies, promotes, or deletes the mirror topic.

A user or client application modifies or deletes the cluster link.

Creating or modifying a cluster link

To create or modify a cluster link, a user or client application must authenticate with either a Confluent Cloud user account or a service account, and must be authorized on the relevant resources.

If the user is authenticated with a Confluent Cloud user account, they must have the CloudClusterAdmin, EnvironmentAdmin, or OrganizationAdmin RBAC role over the destination cluster in order to be authorized to create, list, view, or modify cluster links.

If the user or client application is authenticated with a service account, the service account needs an ACL that allows ALTER on the destination cluster. To list the cluster links that exist on a destination cluster, the service account needs an ACL that allows DESCRIBE on the destination cluster.

When you create a cluster link, you must give it a way to authenticate with the source cluster. The permissions for that are described below.

Creating or modifying a mirror topic

Tip

If you want to make sure that particular topics cannot be mirrored by the cluster link under any circumstances, then do not give authorization (through RBAC or ACLs) for the cluster link’s principal on those topics.

To create or modify a mirror topic, a user or client application must be authenticated and authorized with either a service account or a Confluent Cloud user account.

If the user is authenticated with a Confluent Cloud user account, they must have the CloudClusterAdmin, EnvironmentAdmin, or OrganizationAdmin RBAC role over the destination cluster in order to be authorized to create or modify mirror topics.

If the user or client application is authenticated with a service account, then their service account needs these ACLs on the destination cluster:

An ACL to allow them to ALTER the destination cluster.

An ACL to allow them to ALTERCONFIGS on the destination cluster.

An ACL to allow them to CREATE the mirror topic. This ACL can be one that allows them to create all topics, or it can be scoped by topic name(s).

ACLs required to list and describe mirror topics

The list mirrors command lists the mirror topics on a cluster and/or a cluster link. You can call it through the Confluent Cloud REST API for Cluster Linking with `GET /kafka/v3/clusters/<cluster-id>/links/<link-name>/mirrors`, or from the Confluent CLI with `confluent kafka mirror list`.

The describe mirror command gives information about a specific mirror topic. You can call it through the Confluent Cloud REST API with `GET /kafka/v3/clusters/<cluster-id>/links/<link-name>/mirrors/<mirror-topic>`, or from the Confluent CLI with `confluent kafka mirror describe <mirror-topic>`.
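As a minimal sketch, the two REST paths above can be assembled from their parts in Python. The cluster ID, link name, and topic below are hypothetical placeholders. The sketch builds only the path, so you would still prefix it with your cluster's REST endpoint and send it with your own authenticated HTTP client.

```python
from urllib.parse import quote


def list_mirrors_path(cluster_id: str, link_name: str) -> str:
    """Path of the list mirrors call: GET .../links/<link-name>/mirrors."""
    return (
        f"/kafka/v3/clusters/{quote(cluster_id, safe='')}"
        f"/links/{quote(link_name, safe='')}/mirrors"
    )


def describe_mirror_path(cluster_id: str, link_name: str, mirror_topic: str) -> str:
    """Path of the describe mirror call for one mirror topic."""
    return list_mirrors_path(cluster_id, link_name) + "/" + quote(mirror_topic, safe="")


if __name__ == "__main__":
    # Hypothetical IDs and names, for illustration only.
    print(list_mirrors_path("lkc-abc123", "my-link"))
    print(describe_mirror_path("lkc-abc123", "my-link", "orders"))
```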

To list and describe mirror topics, you need one of the following ACLs:

The DESCRIBE ACL on a Cluster resource, which provides access to both list and describe for all topics on the cluster.

The DESCRIBE ACL on specific Topic(s), which provides access to both list and describe for the specified topics only.
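The rule above can be expressed as a small check. This is an illustrative sketch, not how Confluent Cloud evaluates ACLs: it models each ALLOW ACL as a hypothetical `(operation, resource_type, resource_name)` tuple and matches literal topic names only, ignoring prefixed patterns, wildcards, and DENY rules.

```python
from typing import Iterable, Tuple

# Hypothetical model of an ALLOW ACL: (operation, resource_type, resource_name).
Acl = Tuple[str, str, str]


def can_list_and_describe(acls: Iterable[Acl], topic: str) -> bool:
    """Return True if the ACLs allow listing and describing `topic`.

    DESCRIBE on the Cluster resource covers every topic on the cluster;
    DESCRIBE on a Topic covers only that (literally named) topic.
    """
    for operation, resource_type, resource_name in acls:
        if operation != "DESCRIBE":
            continue
        if resource_type == "Cluster":
            return True
        if resource_type == "Topic" and resource_name == topic:
            return True
    return False
```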

Permissions for the cluster link to read from the source cluster

For every mirror topic on a cluster link, the cluster link reads topic data from the respective source topic. If configured to sync consumer offsets or ACLs, the cluster link also reads those from the source cluster. To do this, the cluster link must authenticate with the source cluster and be authorized to perform these actions.

Note

ACL sync for Confluent Cloud is supported only between two Confluent Cloud clusters in the same Confluent Cloud organization, as detailed in ACL syncing.

Summary of ACLs a cluster link needs on the source cluster

| Capability | Required ACLs on Source Cluster |
|---|---|
| Mirror data from a source topic to a mirror topic | READ and DESCRIBE-CONFIGS for the source topic |
| Sync ACLs from source cluster to destination cluster | DESCRIBE on the source cluster |
| Sync a consumer group’s offsets from a source topic to a mirror topic | DESCRIBE on the source topic; READ and DESCRIBE on the consumer group on the source cluster |
| Enable both prefixing and auto-create mirror topics | DESCRIBE on the source cluster |
| Create or modify a cluster link (if this is a source-initiated link) | ALTER:Cluster on the source cluster |
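To see at a glance which ACLs a link's principal needs for a combination of features, the table can be transcribed into a lookup. The capability keys are hypothetical labels chosen for this sketch; the ACL pairs are copied from the table.

```python
# Required source-cluster ACLs per capability, transcribed from the table.
# Keys are hypothetical labels; values are (operation, resource) pairs.
REQUIRED_SOURCE_ACLS = {
    "mirror_data": {("READ", "source topic"), ("DESCRIBE-CONFIGS", "source topic")},
    "sync_acls": {("DESCRIBE", "source cluster")},
    "sync_consumer_offsets": {
        ("DESCRIBE", "source topic"),
        ("READ", "consumer group"),
        ("DESCRIBE", "consumer group"),
    },
    "prefixing_and_auto_create": {("DESCRIBE", "source cluster")},
    "source_initiated_link": {("ALTER", "source cluster")},
}


def required_acls(*capabilities: str) -> set:
    """Union of the ACLs needed for every requested capability."""
    needed = set()
    for capability in capabilities:
        needed |= REQUIRED_SOURCE_ACLS[capability]  # KeyError for unknown labels
    return needed


if __name__ == "__main__":
    for op, resource in sorted(required_acls("mirror_data", "sync_consumer_offsets")):
        print(f"{op:<17} {resource}")
```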

Providing the cluster link with an API key and secret

For testing or development, you can use a shorthand to create a cluster link with an API key and secret in the Confluent CLI:

```shell
confluent kafka link create <link_name> --source-api-key <api-key> --source-api-secret <api-secret>
```

This shorthand is not recommended for a cluster link in production, because the terminal history could reveal your API key and secret.

Rather, you should pass the following three properties to your cluster link’s configuration:

```properties
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username='<api-key>' password='<api-secret>';
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
```

If you create the cluster link with the CLI, you can put those three lines in a config file (along with any other properties you want to configure on the link) and pass the file name to the command with the `--config` flag. For example:

```shell
confluent kafka link create <link_name> --config <path/to/file.config> ...
```
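A minimal sketch of producing that config file without typing the secret on the command line, assuming Python 3 on a POSIX system. The helper names and the single-quote check are this example's own, and creating the file with mode `0o600` is a general precaution rather than a Cluster Linking requirement.

```python
import os


def plain_link_config(api_key: str, api_secret: str) -> str:
    """Render the three SASL/PLAIN properties as properties-file text."""
    for value in (api_key, api_secret):
        if "'" in value:
            # The JAAS value below wraps credentials in single quotes,
            # so a credential containing one would break the quoting.
            raise ValueError("credential contains a single quote")
    return (
        "sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule "
        f"required username='{api_key}' password='{api_secret}';\n"
        "security.protocol=SASL_SSL\n"
        "sasl.mechanism=PLAIN\n"
    )


def write_private_file(path: str, text: str) -> None:
    """Write `text` to `path`; a newly created file is owner read/write only."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(text)
```

You would then pass the file to `confluent kafka link create <link_name> --config <path/to/file.config> ...`. Note that `os.open` applies the mode only when it creates the file; an existing file keeps its current permissions.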

If you create the cluster link with a REST API call, add the three properties to your array of configs:

```json
{
  "source_cluster_id": "<source cluster's ID>",
  "configs": [
    {
      "name": "security.protocol",
      "value": "SASL_SSL"
    },
    {
      "name": "sasl.mechanism",
      "value": "PLAIN"
    },
    {
      "name": "sasl.jaas.config",
      "value": "org.apache.kafka.common.security.plain.PlainLoginModule required username='<api-key>' password='<api-secret>';"
    },
    ...
  ],
  ...
}
```
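If you generate the REST request body in code, the configs array is a direct transformation of a properties mapping. This sketch covers only the two fields shown in the example above; any other fields the request needs (the `...` in the example) are not filled in, and `lkc-source1` is a hypothetical ID.

```python
import json


def link_create_body(source_cluster_id: str, properties: dict) -> dict:
    """Build a create-link request body: source ID plus name/value configs."""
    return {
        "source_cluster_id": source_cluster_id,
        "configs": [{"name": name, "value": value} for name, value in properties.items()],
    }


if __name__ == "__main__":
    body = link_create_body(
        "lkc-source1",  # hypothetical source cluster ID
        {
            "security.protocol": "SASL_SSL",
            "sasl.mechanism": "PLAIN",
            "sasl.jaas.config": "org.apache.kafka.common.security.plain.PlainLoginModule "
            "required username='<api-key>' password='<api-secret>';",
        },
    )
    print(json.dumps(body, indent=2))
```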

The security credentials stored on the link are encrypted and cannot be retrieved by a user. There is no way for someone to query the cluster link to get its API key or secret.

Tip

As an advanced option, OAuth can be used for authentication rather than a service account and API key. To learn more, see the section on how to use OAuth to authenticate a cluster link to the source or remote cluster.

Changing or rotating the security credentials on a cluster link

If you need to change (or “rotate”) the security credentials on a cluster link, update the link’s sasl.jaas.config property with the new API key and secret. For example, create a new file called new-creds.config that contains the updated API key and secret:

```properties
# new-creds.config has this:
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username='<api-key>' password='<api-secret>';
```

Then run this command so that the cluster link uses the new credentials in new-creds.config:

```shell
confluent kafka link configuration update <link-name> --config new-creds.config
```
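The rotation steps can also be scripted. This sketch, with hypothetical helper names, renders the new-creds.config contents (only the changed property) and builds the update command as an argument list without running it. Passing such a list to something like `subprocess.run` avoids shell parsing, and the new secret stays in the file rather than on the command line.

```python
import shlex


def rotation_config(api_key: str, api_secret: str) -> str:
    """Contents of new-creds.config: only the property being changed."""
    return (
        "sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule "
        f"required username='{api_key}' password='{api_secret}';\n"
    )


def rotation_command(link_name: str, config_path: str = "new-creds.config") -> list:
    """Argument list for the configuration update command (not executed here)."""
    return ["confluent", "kafka", "link", "configuration", "update", link_name,
            "--config", config_path]


if __name__ == "__main__":
    print(shlex.join(rotation_command("my-link")))  # "my-link" is hypothetical
```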

Note

If the cluster link loses authorization to read data from the source cluster, the cluster link may become unavailable, but the mirror topics on the destination cluster remain in the ACTIVE mirroring state. When authorization is restored, the mirror topics are accessible (viewable) again.

Writing to the destination cluster

On Confluent Cloud, cluster links don’t need any extra permissions to write data to the destination cluster. Because they exist on the destination cluster, they already have the appropriate permissions.

Transport Layer Security (TLS)

For a cluster link between two Confluent Cloud clusters, data in transit is encrypted through one-way TLS 1.2 (in the Java community, this is referred to as one-way SSL).

For a cluster link from a Confluent Platform or Apache Kafka cluster to a Confluent Cloud cluster, data in transit must use one-way TLS (in the Java community, this is referred to as one-way SSL). It defaults to the latest version of TLS that both clusters have enabled. TLS 1.2 and 1.3 are enabled by default in Apache Kafka.

Older versions of SSL can be enabled, but this is discouraged due to known security vulnerabilities.

Mutual TLS (mTLS) authentication

Cluster Linking can use mTLS (two-way verification) for some, but not all, data exchanges:

Confluent Cloud to Confluent Cloud does not use mTLS; it uses TLS and SASL as described above in Transport Layer Security (TLS).

Data coming into Confluent Cloud from OSS Kafka can be configured to use mTLS.

Data coming into Confluent Cloud from Confluent Platform can be configured to use either mTLS or source-initiated cluster links with TLS+SASL. mTLS can be used for source-initiated cluster links only in hybrid scenarios where the source cluster is Confluent Platform and destination cluster is a Dedicated Confluent Cloud cluster.

When you use mTLS or certificates, you must configure the Confluent Cloud cluster to authenticate to the Confluent Platform or Kafka source cluster using a certificate, keystore, and truststore. These must be provided in the cluster link’s configuration properties in PEM format (Privacy Enhanced Mail, a Base64-encoded format). Pass this configuration when creating the cluster link in the Confluent Cloud Console, the REST API, or the Confluent CLI. To learn more about PEM files and possible configurations, see the Apache Kafka KIP that introduced PEM file support.

Use these configuration properties (not all may be required):

```properties
security.protocol=SSL
ssl.truststore.type=PEM
ssl.keystore.type=PEM
ssl.keystore.certificate.chain
ssl.keystore.key
ssl.key.password
ssl.truststore.certificates
```

Make sure your PEM values encode line breaks as `\n`. Here’s an example configuration:

```properties
security.protocol=SSL
ssl.keystore.type=PEM
ssl.keystore.certificate.chain=-----BEGIN CERTIFICATE-----\nxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX\nxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX ... xXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX\nxXxXxXxXxXxXxXx -----END CERTIFICATE-----
ssl.keystore.key=-----BEGIN ENCRYPTED PRIVATE KEY-----\nxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX\nxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX ... xXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX\nxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX\nxXxXxXxXxXxXxXxX -----END ENCRYPTED PRIVATE KEY-----
ssl.key.password=<REDACTED>
ssl.truststore.type=PEM
ssl.truststore.certificates=-----BEGIN CERTIFICATE-----\nxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX\nxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX ... xXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX\nxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxXxX\nxXxXxXxXxXxXx -----END CERTIFICATE-----
```
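Converting a multi-line PEM file by hand into the single-line `\n` form is error-prone, so here is a minimal sketch that does it, assuming the PEM files are ASCII text. The function names are hypothetical. It targets the properties-style config file passed with `--config`; for a JSON request body, we assume you would instead keep the real line breaks and let the JSON encoder escape them.

```python
def pem_to_property_value(pem_text: str) -> str:
    """Collapse PEM text into one line, joining lines with a literal \\n.

    Multiple PEM blocks (for example, a certificate chain) remain
    separated by \\n. Blank lines and stray whitespace are dropped.
    """
    lines = [line.strip() for line in pem_text.strip().splitlines()]
    return "\\n".join(line for line in lines if line)


def pem_property(name: str, path: str) -> str:
    """Read a PEM file and format it as `name=value` for a link config file."""
    with open(path, encoding="ascii") as handle:
        return f"{name}={pem_to_property_value(handle.read())}"
```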

Only PKCS #8 keys are supported for the private key specified in ssl.keystore.key. If the key is encrypted, the key password must be specified using ssl.key.password.
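A quick pre-flight check can catch the most common mistake: a PKCS #1 (`BEGIN RSA PRIVATE KEY`) key where PKCS #8 is required. This sketch, with a hypothetical function name, inspects only the PEM header line, so it does not validate the key material itself.

```python
# PEM header lines for PKCS #8 keys, mapped to "is the key encrypted?".
PKCS8_HEADERS = {
    "-----BEGIN PRIVATE KEY-----": False,
    "-----BEGIN ENCRYPTED PRIVATE KEY-----": True,
}


def check_private_key(pem_text: str, key_password: str = "") -> None:
    """Raise ValueError if the key is not PKCS #8, or is encrypted without a password."""
    stripped = pem_text.strip()
    first_line = stripped.splitlines()[0].strip() if stripped else ""
    if first_line not in PKCS8_HEADERS:
        # For example, "-----BEGIN RSA PRIVATE KEY-----" is PKCS #1.
        raise ValueError(f"not a PKCS #8 key: {first_line!r}")
    if PKCS8_HEADERS[first_line] and not key_password:
        raise ValueError("encrypted key: set ssl.key.password")
```

If the check rejects a PKCS #1 key, `openssl pkcs8 -topk8` can convert it to PKCS #8 (encrypted by default, or unencrypted with `-nocrypt`).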

Note

For Confluent CLI versions v3.51.0 or later, \n can be used in certificates, and \n is required in between multiple certificates. (In older versions of the Confluent CLI, before v3.51.0, there was an issue with using \n in certificates and the workaround was to replace the \n with a space.)

OAuth

As an advanced option, OAuth can be used to authenticate the cluster link to a source or remote Confluent Cloud, Confluent Platform, or Kafka cluster. This workflow works the same as it would for a Kafka consumer reading from the source cluster. To learn more, see Use OAuth/OIDC to Authenticate to Confluent Cloud.

Tip

Cluster links on Confluent Cloud that use OAuth must be created using either the Confluent CLI or REST API. Creating cluster links that use OAuth is not currently supported in the Confluent Cloud Console.

An existing cluster link can change its authentication mechanism from API key to OAuth, or vice versa. Make this change with the same tools that support creating OAuth cluster links: the Confluent CLI or the REST API.

Confluent CLI example

Create a file of link configurations (for example, `oauth-link.config`) with the OAuth authentication configuration details, along with any other cluster link configurations:

```properties
security.protocol=SASL_SSL
sasl.oauthbearer.token.endpoint.url=https://myidp.example.com/oauth2/default/v1/token
sasl.login.callback.handler.class=org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginCallbackHandler
sasl.mechanism=OAUTHBEARER
sasl.jaas.config= \
  org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required \
  clientId='<client-id>' scope='<requested-scope>' clientSecret='<client-secret>' extension_logicalCluster='<cluster-id>' extension_identityPoolId='<pool-id>';
# additional cluster link configurations, like consumer offset sync, can go here
```

Pass this file to the `--config` flag when creating the cluster link. For example:

```shell
confluent kafka link create oauth-link --config oauth-link.config \
  --source-cluster lkc-123456 \
  --source-bootstrap-server pkc-12345.us-west-2.aws.confluent.cloud:9092
```
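The same `oauth-link.config` contents can be generated from parameters. This is a sketch with a hypothetical function name. It writes `sasl.jaas.config` on a single line instead of using `\` continuations, which should be equivalent in a Java-style properties file, and the `extra` argument is where any other link configurations would go.

```python
def oauth_link_config(token_endpoint_url: str, client_id: str, client_secret: str,
                      scope: str, logical_cluster: str, identity_pool_id: str,
                      extra: dict = None) -> dict:
    """Return the OAuth properties from the example above as an ordered dict."""
    jaas = (
        "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required "
        f"clientId='{client_id}' scope='{scope}' clientSecret='{client_secret}' "
        f"extension_logicalCluster='{logical_cluster}' "
        f"extension_identityPoolId='{identity_pool_id}';"
    )
    props = {
        "security.protocol": "SASL_SSL",
        "sasl.oauthbearer.token.endpoint.url": token_endpoint_url,
        "sasl.login.callback.handler.class":
            "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginCallbackHandler",
        "sasl.mechanism": "OAUTHBEARER",
        "sasl.jaas.config": jaas,
    }
    props.update(extra or {})  # other cluster link configurations, if any
    return props


def to_properties_text(props: dict) -> str:
    """Render one `key=value` line per property."""
    return "".join(f"{key}={value}\n" for key, value in props.items())
```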

REST API example

In the POST request to create the cluster link, include the OAuth configurations in the standard format:

```json
{
  "name": "<config-name>",
  "value": <config-value>
}
```

For example:

```json
{
  "source_cluster_id": "<source-cluster-id>",
  "configs": [
    {
      "name": "security.protocol",
      "value": "SASL_SSL"
    },
    {
      "name": "bootstrap.servers",
      "value": "<source-bootstrap-server>"
    },
    {
      "name": "sasl.mechanism",
      "value": "OAUTHBEARER"
    },
    {
      "name": "sasl.oauthbearer.token.endpoint.url",
      "value": "https://myidp.example.com/oauth2/default/v1/token"
    },
    {
      "name": "sasl.login.callback.handler.class",
      "value": "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginCallbackHandler"
    },
    {
      "name": "sasl.jaas.config",
      "value": "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required clientId='<client-id>' scope='<requested-scope>' clientSecret='<client-secret>' extension_logicalCluster='<cluster-id>' extension_identityPoolId='<pool-id>';"
    }
  ]
}
```

Public internet exposure

Unless otherwise noted, Cluster Linking between two clusters on Confluent Cloud uses public IP addresses and public DNS. However, this does not necessarily mean that the cluster linked data goes over the public internet:

Traffic between two clusters that are both on Amazon Web Services (AWS) always stays on AWS and never goes to the public internet. Per the AWS documentation: “When using public IP addresses, all communication between instances and services hosted in AWS use AWS’s private network.”

Traffic between two clusters that are both on Microsoft Azure always stays on Azure and never goes to the public internet. Per the Azure documentation: “If the destination address is for one of Azure’s services, Azure routes the traffic directly to the service over Azure’s backbone network, rather than routing the traffic to the Internet. Traffic between Azure services doesn’t traverse the Internet.”

All traffic is encrypted in transit. See Transport Layer Security (TLS).

Note

Traffic between a non-Confluent Cloud cluster and a Confluent Cloud cluster might not use public IP addresses and/or public DNS.

Cluster Linking in Confluent Cloud supports both single-cloud and multi-cloud deployments for clusters with private networking. In a single-cloud environment, replication uses the cloud provider’s routed backbone (AWS, Azure, Google Cloud). When linking clusters across clouds, data replication is encrypted and transmitted over authenticated connections.

Connectivity is controlled by allow-listing trusted Confluent IP addresses, and only TLS traffic to known internal endpoints is permitted. For Apache Kafka® clusters deployed in private networks, Cluster Linking enforces IP filtering, mutual TLS, and SASL authentication (on Confluent Platform) to ensure that only authorized clusters within the same organization can replicate data. Source-initiated replication to public Kafka clusters is permitted only after verifying the originating network, mitigating the risk of data exfiltration.

Practical examples

To see practical examples of how you might model security for different Cluster Linking deployments, refer to these use case tutorials:

Audit logs

Whenever a user or application tries to create or delete a cluster link or a mirror topic, authentication and authorization events are created in Confluent Cloud Audit Logs. This allows you to monitor Cluster Linking access and activity throughout Confluent Cloud.

Using role-based access control (RBAC) with Cluster Linking

Below is a quick demo of using Cluster Linking with Confluent Cloud RBAC, followed by a summary of the roles needed on different resources.

Demo

This short video shows how to set up a cluster link across clusters with RBAC enabled, and make topic data available across clusters with different owners.

In the example, the owner of a Webstore cluster gives the Logistics cluster owner role-based access to all topics prefixed with public, such as orders, clicks, and products data.

The Logistics owner can then mirror Webstore topics on the Logistics cluster as if they were native, and get visibility into that external, streaming data.

Confluent Cloud RBAC roles

To enable secure Cluster Linking on Confluent Cloud, you must either grant RBAC roles to principals, as described in this section, or grant specific ACLs as previously described.

At least one of the following RBAC roles is required to create a cluster link:

ResourceOwner on the Cluster

CloudClusterAdmin on the Cluster

EnvironmentAdmin on the cluster’s Environment

OrganizationAdmin on the cluster’s Organization

The cluster link service account requires one of the following sets of Confluent Cloud RBAC roles:

(Recommended) DeveloperRead and DeveloperManage on the source cluster Topic(s) to be mirrored

(Not recommended for production): Any of the roles shown above for creating a cluster link

Tip

The cluster link does not need any roles on the destination cluster; it needs only the roles noted above for the source cluster.

ACLs and consumer offset sync

To sync ACLs, the cluster link must have the following role:

Operator on the Cluster

To sync consumer offsets, the cluster link must have one of the following roles:

Operator on the Cluster, or

DeveloperRead on the Consumer Groups
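The role lists in this section can be summarized as data, which may help when reviewing role bindings. This sketch is a simplification: it records resource types only (not specific topic or group names), and for the link's service account it includes only the recommended DeveloperRead plus DeveloperManage option. The task keys are hypothetical labels.

```python
# Each task maps to a list of acceptable options; an option is a set of
# (role, resource type) bindings that must all be present.
ROLE_OPTIONS = {
    "create_link": [
        {("ResourceOwner", "Cluster")},
        {("CloudClusterAdmin", "Cluster")},
        {("EnvironmentAdmin", "Environment")},
        {("OrganizationAdmin", "Organization")},
    ],
    "mirror_topics": [  # recommended option for the link's service account
        {("DeveloperRead", "Topic"), ("DeveloperManage", "Topic")},
    ],
    "sync_acls": [{("Operator", "Cluster")}],
    "sync_consumer_offsets": [
        {("Operator", "Cluster")},
        {("DeveloperRead", "Consumer Group")},
    ],
}


def satisfies(bindings, task: str) -> bool:
    """True if `bindings` contains every binding of at least one option."""
    held = set(bindings)
    return any(option <= held for option in ROLE_OPTIONS[task])
```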