Manage Key Policies on Confluent Cloud for Google Cloud

This document provides guidelines for managing key policies for self-managed encryption keys (BYOK) in Confluent Cloud for Google Cloud. You can manage Google Cloud key policies with a custom role or with VPC Service Controls. Using a Google Cloud custom role is the recommended approach.

Avoid key policy updates

Because key policy misconfigurations can cause immediate cluster unavailability and service disruption, avoid updating key policies where possible.

Manage key policies with a custom role (recommended)

You can manage the key policies using a Google Cloud custom role.

Create a custom role and grant it the following required permissions:

cloudkms.cryptoKeyVersions.useToDecrypt

cloudkms.cryptoKeyVersions.useToEncrypt

cloudkms.cryptoKeys.get

Add the Google Group ID provided in the Confluent Cloud Console as a new member and assign the custom role to it. The Google Group ID is unique per cluster and is created automatically during cluster creation. This ID remains static, but service accounts associated with the cluster can change over time.
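The two steps above could be scripted with the gcloud CLI roughly as follows. This is a minimal sketch, not a Confluent-provided script: the function name, the role title, and every argument value (project ID, role ID, key, key ring, location) are placeholders you would supply yourself. It assumes the role is created at the project level and bound directly on the key.

```shell
# Sketch: create a custom role with the three required permissions and
# bind it to the per-cluster Google Group on the KMS key.
# The function name and all argument values are hypothetical placeholders.
grant_confluent_key_access() {
  local project_id="$1"   # Google Cloud project that owns the key
  local role_id="$2"      # custom role ID of your choosing
  local group_email="$3"  # Google Group ID shown in the Confluent Cloud Console
  local key_name="$4"
  local keyring="$5"
  local location="$6"

  # Create the custom role with only the permissions listed above.
  gcloud iam roles create "$role_id" \
    --project="$project_id" \
    --title="Confluent BYOK key access" \
    --permissions="cloudkms.cryptoKeyVersions.useToDecrypt,cloudkms.cryptoKeyVersions.useToEncrypt,cloudkms.cryptoKeys.get" \
    --stage=GA || return 1

  # Grant the role to the Google Group on the key itself.
  gcloud kms keys add-iam-policy-binding "$key_name" \
    --keyring="$keyring" \
    --location="$location" \
    --project="$project_id" \
    --member="group:${group_email}" \
    --role="projects/${project_id}/roles/${role_id}"
}
```

A call might look like `grant_confluent_key_access my-project confluentByokKeyUser cck-12345@confluent.io my-key my-keyring us-central1`, where all six values are illustrative.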

Manage key policies with Google Cloud VPC Service Controls (advanced)

Confluent supports using Google Cloud VPC Service Controls to protect access to Google Cloud services such as Cloud Storage that are used by your Confluent Cloud clusters. Confluent supports granting the per-cluster Google Group ID in both ingress and egress policies. Other VPC Service Controls approaches are not supported.

To allow Confluent to access resources inside your service perimeter, add an ingress rule that allows the Confluent Google Group ID for your cluster. This is the same Google Group ID shown in the Confluent Cloud Console during cluster creation and is referenced above for the KMS key policy.

Configure VPC Service Controls with the Google Cloud portal

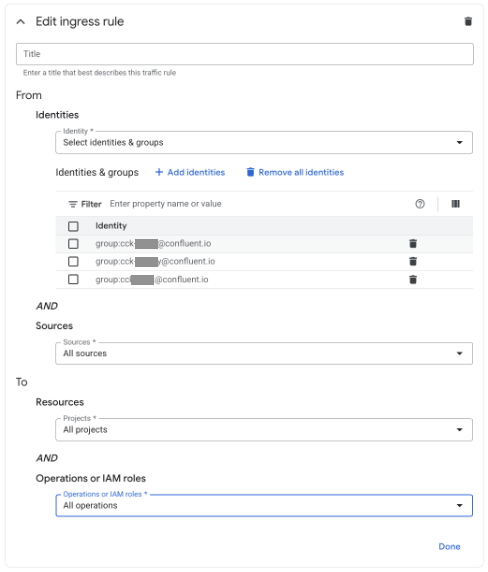

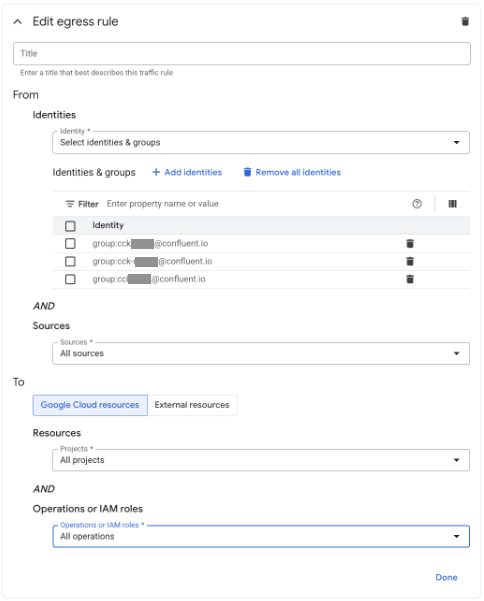

In Access Context Manager, edit your service perimeter and add an ingress rule.

Configure the ingress rule. Here is an example:

Create a matching egress rule that allows the same Confluent Google Group ID. Here is an example:

You can use Terraform to manage your VPC Service Controls configuration. Replace the placeholder values with your specific configuration:

<access-policy-id>: Access Context Manager policy ID

<service-perimeter-name>: Name for your service perimeter

<customer-project-id>: Google Cloud project ID

<cck-12345@confluent.io>: Cluster Google Group ID

    terraform {
      required_version = ">= 1.0"

      required_providers {
        google = {
          source  = "hashicorp/google"
          version = "~> 7.0"
        }
      }
    }

    resource "google_access_context_manager_service_perimeter" "confluent_vpc_sc" {
      parent      = "accessPolicies/<access-policy-id>"
      name        = "accessPolicies/<access-policy-id>/servicePerimeters/<service-perimeter-name>"
      title       = "confluent-cluster-vpc-sc"
      description = "VPC Service Controls perimeter for Confluent Cloud cluster access"

      status {
        # Protected project
        resources = [
          "projects/<customer-project-id>"
        ]

        # GCP services to restrict
        restricted_services = [
          "storage.googleapis.com",
          "cloudkms.googleapis.com"
        ]

        # Ingress Policy - Allow Confluent Cloud groups access
        ingress_policies {
          ingress_from {
            identities = [
              "group:<cck-12345@confluent.io>"
            ]

            sources {
              access_level = "*"
            }
          }

          ingress_to {
            resources = ["*"]

            operations {
              service_name = "*"
            }
          }
        }

        # Egress Policy - Allow Confluent Cloud groups access
        egress_policies {
          egress_from {
            identities = [
              "group:<cck-12345@confluent.io>"
            ]
          }

          egress_to {
            resources = ["*"]

            operations {
              service_name = "*"
            }
          }
        }
      }

      timeouts {}

      use_explicit_dry_run_spec = false
    }

- Recommendations:

Use terraform plan to preview changes before applying.

Consider using Terraform modules to reuse this configuration across multiple clusters.

The restricted_services list can be customized based on which Google Cloud services your workloads need to access.

For production deployments, consider using more restrictive resources and operations scoping instead of wildcards.

Replace * placeholders with explicit services and project resources if your organization requires stricter scoping.
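The recommendation to preview changes before applying could be followed with a small wrapper like the one below. It is a sketch under assumptions: the function name and the plan file name are arbitrary, and it is meant to run in the directory that holds the Terraform configuration. Saving the plan to a file and applying that same file means the reviewed changes are the ones that get applied.

```shell
# Sketch: preview the perimeter change, show the saved plan, and apply it
# only after explicit confirmation.
# The function name and default plan file name are hypothetical.
preview_and_apply_perimeter() {
  local plan_file="${1:-vpc-sc.tfplan}"
  local answer=""

  terraform init -input=false || return 1

  # Write the proposed changes to a plan file instead of applying directly.
  terraform plan -input=false -out="$plan_file" || return 1

  # Print the saved plan for review.
  terraform show "$plan_file" || return 1

  printf 'Apply this plan? [y/N] '
  read -r answer || answer=""
  if [ "$answer" != "y" ]; then
    echo "Aborted; nothing applied."
    return 0
  fi

  # Apply exactly the plan that was reviewed.
  terraform apply -input=false "$plan_file"
}
```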

For details on configuring ingress and egress policies, see Configure service perimeter rules. For more information about CEL expressions in VPC Service Controls, see Using CEL expressions.

Update a Google Cloud KMS key policy

Follow these steps to update your Google Cloud KMS key policy without disrupting your Confluent Cloud cluster operations:

- Prerequisites

Administrative access to your Google Cloud project and KMS service.

Current working backup of your key policy.

Planned maintenance window for policy updates.

Understanding of the required Confluent permissions.

Create a backup of your current working key policy. You can retrieve the current policy using the gcloud CLI:

    gcloud kms keys get-iam-policy \
      <your-key-name> \
      --keyring <your-keyring> \
      --location <your-location> \
      --format=json \
      > gcp-kms-key-policy-backup-$(date +%Y-%m-%d).json

Apply the policy update during your planned maintenance window:

    gcloud kms keys set-iam-policy \
      <your-key-name> \
      --keyring <your-keyring> \
      --location <your-location> \
      new-policy.json

For more information, see the gcloud kms keys set-iam-policy documentation.
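Before running the set-iam-policy command above, it can help to confirm that the new policy file still references the cluster's Google Group, since dropping that member is one way a policy update can cut off Confluent's access. The helper below is a coarse sketch: the function name is hypothetical, and it only checks that the string group:<email> appears somewhere in the file. It does not verify which role the group is bound to.

```shell
# Sketch: coarse pre-flight check that a policy file still mentions the
# Confluent Google Group. The function name is hypothetical.
# Returns 0 if the member string is found, 1 if it is not,
# and 2 if the file cannot be read.
policy_mentions_group() {
  local policy_file="$1"
  local group_email="$2"

  if [ ! -r "$policy_file" ]; then
    echo "cannot read $policy_file" >&2
    return 2
  fi

  # -F matches the member as a fixed string rather than a regular expression.
  grep -Fq "group:${group_email}" "$policy_file"
}
```

For example, `policy_mentions_group new-policy.json cck-12345@confluent.io || echo "group missing from new policy"` would flag a file that no longer contains the group before it is applied.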

Verify your cluster is still operational:

Check cluster status in the Confluent Cloud Console.

Verify producers and consumers are still functioning.

Monitor for any error messages or alerts.

Check Google Cloud audit logs for any access denied errors related to your KMS key:

    gcloud logging read \
      "resource.type=\"cloudkms_cryptokey\" AND protoPayload.methodName=\"Decrypt\" AND status.message!=\"OK\"" \
      --freshness=10m

Monitor your cluster health for at least 30 minutes after the policy update:

Watch cluster metrics and health indicators.

Check for any encryption-related errors.

Verify that automatic operations (like scaling) continue to work.

If you encounter problems after updating the key policy:

Immediately restore the backup policy.

Monitor cluster recovery for up to 30 minutes.

Contact Confluent Support if the cluster doesn’t recover after restoring the original policy.