Tableflow Quick Start with Delta Lake Tables in Confluent Cloud

Confluent Tableflow lets you expose Apache Kafka® topics as Delta Lake tables.

This quick start guides you through the steps to get up and running with Confluent Tableflow for Delta Lake tables.

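In this quick start, you perform the following steps:

Step 1: Create a topic and publish data
Step 2: Configure your S3 bucket and provider integration
Step 3: Enable Tableflow on your topic
Step 4: Create a read-only external location in Databricks
Step 5: Automatically publish the table as an external Delta Lake table to Unity Catalog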

Prerequisites

DeveloperWrite access on all schema subjects

CloudClusterAdmin access on your Kafka cluster

Assigner access on all provider integrations

Access to a Databricks workspace

For more information, see Grant Role-Based Access for Tableflow in Confluent Cloud.

Step 1: Create a topic and publish data

In this step, you use the Confluent Cloud Console to create a topic named stock-trades. Click Add topic, enter the topic name, and create the topic with the default settings. You can skip defining a data contract.

Publish data to the stock-trades topic by using the Datagen Source connector with the Stock Trades data set. When you configure the Datagen connector, click Additional configuration and proceed through the provisioning workflow. When you reach the Configuration step, under Select output record value format, select Avro. Click Continue and keep the default settings for the remaining steps. For more information, see Datagen Source Connector Quick Start.
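If you prefer to script the connector rather than click through the Console, the choices above can be expressed as a JSON connector configuration. The following Python sketch builds one. The key names (`connector.class`, `kafka.topic`, `output.data.format`, `quickstart`, and so on) reflect the managed Datagen Source connector's JSON configuration as we understand it; the connector name and the credential values are hypothetical placeholders. Check the Datagen Source Connector Quick Start before relying on these keys.

```python
import json


def datagen_stock_trades_config(topic: str, api_key: str, api_secret: str) -> dict:
    """Build a Datagen Source connector config that publishes Stock Trades data as Avro.

    Hypothetical helper: key names are assumed from the managed Datagen
    connector's JSON config format; verify them against the connector docs.
    """
    return {
        "name": "DatagenSourceConnector_stock_trades",  # hypothetical connector name
        "connector.class": "DatagenSource",
        "kafka.auth.mode": "KAFKA_API_KEY",
        "kafka.api.key": api_key,
        "kafka.api.secret": api_secret,
        "kafka.topic": topic,
        "output.data.format": "AVRO",  # corresponds to selecting Avro in the Console
        "quickstart": "STOCK_TRADES",  # the Stock Trades data set
        "tasks.max": "1",
    }


if __name__ == "__main__":
    # Placeholder credentials; substitute a real Kafka API key and secret.
    config = datagen_stock_trades_config("stock-trades", "<api-key>", "<api-secret>")
    print(json.dumps(config, indent=2))
```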

Step 2: Configure your S3 bucket and provider integration

Before you materialize your Kafka topic as a table, you must configure the storage bucket where the materialized tables are stored.

To allow Tableflow to access your S3 bucket and write materialized data into it, you must create a Confluent Cloud provider integration.

In the AWS Management Console, create an S3 bucket in your preferred AWS account. For this guide, name the bucket tableflow-quickstart-storage.
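S3 bucket names are globally unique across AWS, so if tableflow-quickstart-storage is already taken, choose another name and use it wherever this guide refers to the bucket. The following Python sketch checks a candidate name against a subset of the S3 general purpose bucket naming rules: 3 to 63 characters; only lowercase letters, digits, hyphens, and periods; starting and ending with a letter or digit; no adjacent periods; and not formatted as an IP address. It is not exhaustive, because the AWS rules also reserve certain prefixes and suffixes.

```python
import ipaddress
import re

# Subset of S3 general purpose bucket naming rules: 3-63 characters, lowercase
# letters, digits, hyphens, and periods, beginning and ending with a letter or digit.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if name passes the core S3 bucket naming checks (not exhaustive)."""
    if _BUCKET_RE.fullmatch(name) is None:
        return False
    if ".." in name:  # two adjacent periods are not allowed
        return False
    try:
        ipaddress.IPv4Address(name)  # names formatted like IP addresses are not allowed
    except ValueError:
        return True
    return False


if __name__ == "__main__":
    for candidate in ["tableflow-quickstart-storage", "Tableflow_Storage", "192.168.5.4"]:
        print(candidate, is_valid_bucket_name(candidate))
```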

In your Confluent Cloud environment, navigate to the Provider Integrations tab to create a provider integration and grant Confluent Cloud access to your S3 bucket.

Click Add Integration.

In the Configure role in AWS section, select the New Role option.

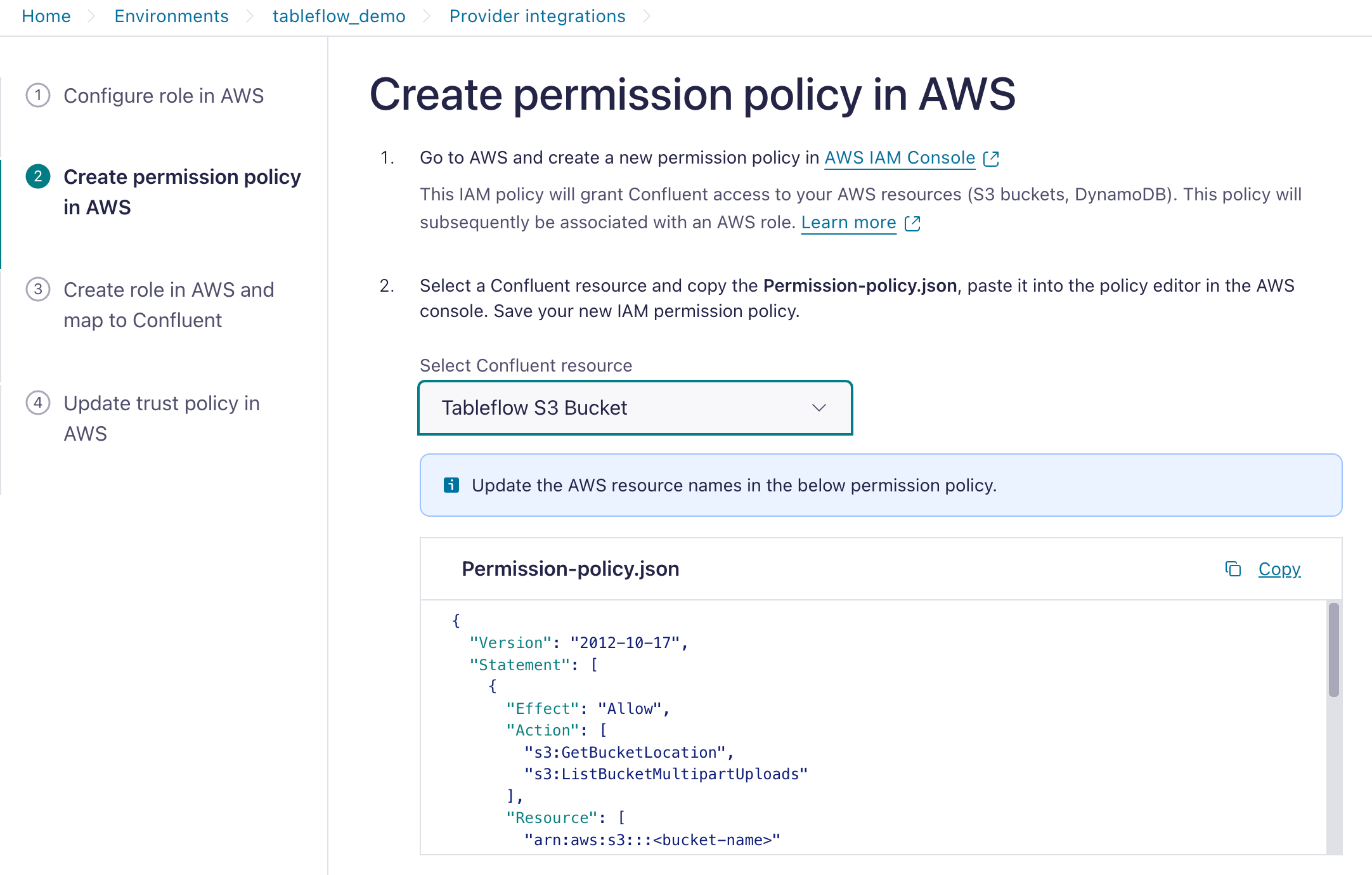

Select Tableflow S3 Bucket and copy the IAM Policy template.

In the AWS console, navigate to IAM.

In the Access Management section, click Policies, and in the Policies page, click Create Policy.

As a best practice, create a dedicated IAM policy that grants Confluent Cloud access to your S3 location.

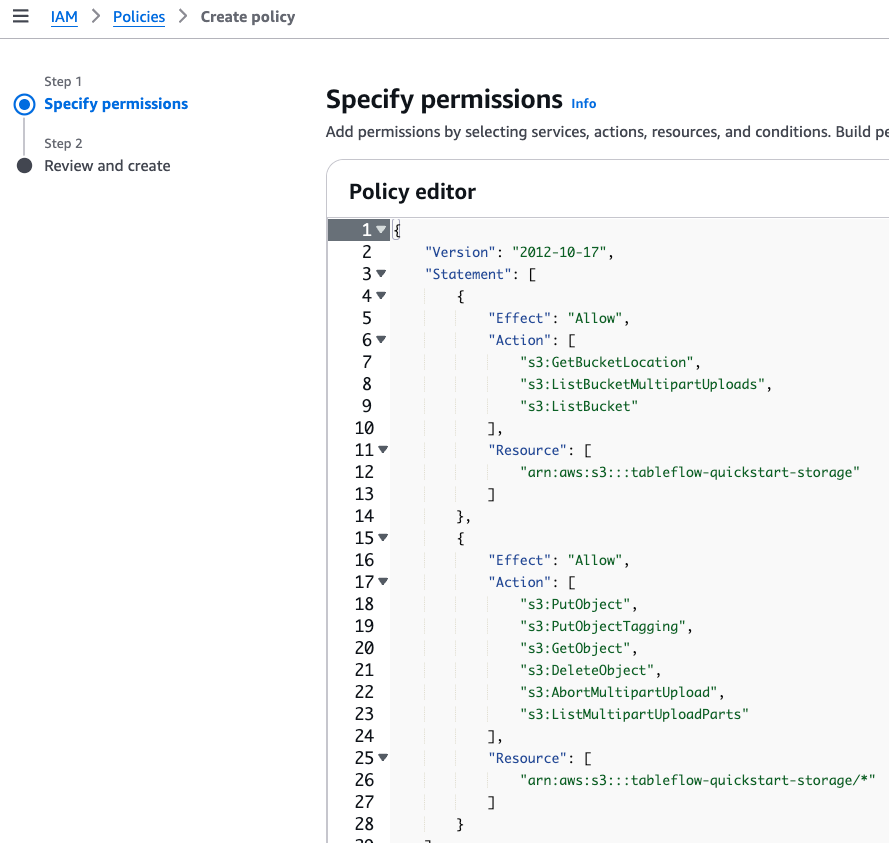

Paste the IAM policy template you obtained previously. Update it with the name of your S3 bucket, for example, tableflow-quickstart-storage, and create a new AWS IAM policy.
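Updating the template mostly means substituting your bucket name into the resource ARNs. The Python sketch below shows that substitution. The action lists are illustrative assumptions, not the contents of the Confluent template, so always start from the template copied from Cloud Console. Bucket-level actions such as s3:ListBucket apply to the bucket ARN (arn:aws:s3:::<bucket>), while object-level actions such as s3:GetObject apply to the objects under it (arn:aws:s3:::<bucket>/*), which is why the sketch uses two statements.

```python
import json

# Illustrative action lists only (assumption); use the actions from the
# template that Cloud Console provides.
BUCKET_ACTIONS = ["s3:GetBucketLocation", "s3:ListBucket", "s3:ListBucketMultipartUploads"]
OBJECT_ACTIONS = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject", "s3:AbortMultipartUpload"]


def tableflow_bucket_policy(bucket: str) -> dict:
    """Hypothetical helper: build an IAM policy scoped to one S3 bucket and its objects."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            # Bucket-level permissions target the bucket ARN itself.
            {"Effect": "Allow", "Action": BUCKET_ACTIONS, "Resource": bucket_arn},
            # Object-level permissions target every object in the bucket.
            {"Effect": "Allow", "Action": OBJECT_ACTIONS, "Resource": f"{bucket_arn}/*"},
        ],
    }


if __name__ == "__main__":
    print(json.dumps(tableflow_bucket_policy("tableflow-quickstart-storage"), indent=2))
```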

Navigate to AWS IAM Roles and click Create Role.

For the Trusted entity type, select Custom trust policy.

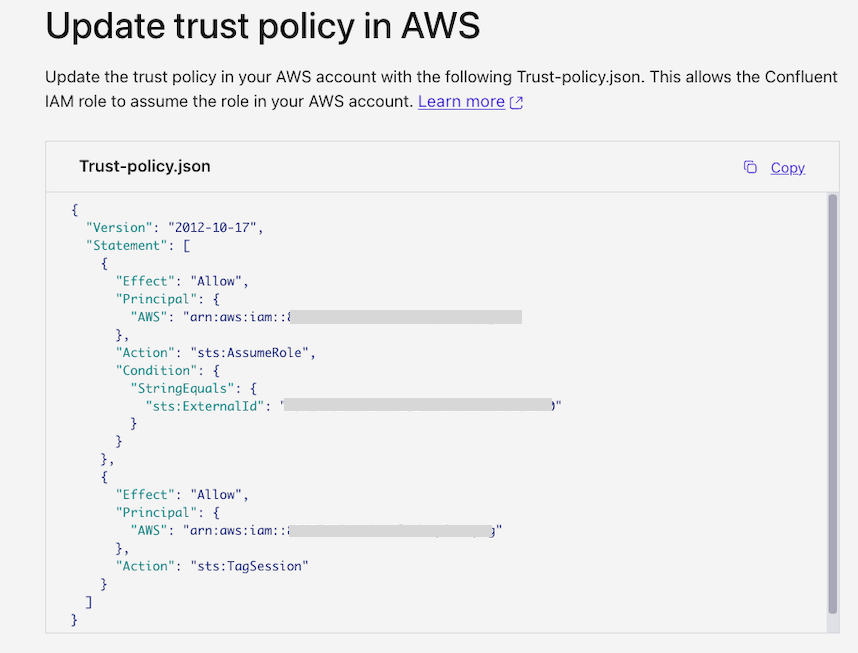

From the Tableflow UI in Cloud Console, copy the contents of Trust-policy.json and paste them into the policy editor in the AWS console.

Attach the permission policy you created previously and save your new IAM role, for example, tableflow-quickstart-role.

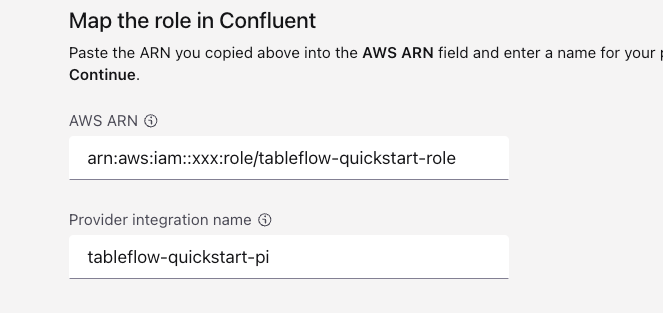

Copy the role ARN, for example, arn:aws:iam::<xxx>:role/tableflow-quickstart-role.

In the Cloud Console, locate the Map the role in Confluent section on the Provider Integration page. In the AWS ARN section, paste the ARN that you copied and click Continue.
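Pasting a malformed ARN, such as one that still contains a placeholder, is an easy mistake. This Python sketch is a hypothetical helper, not part of any Confluent or AWS tooling. It checks that a string has the general shape of an IAM role ARN (partition, 12-digit account ID, optional path, role name) and extracts the account ID and role name. The regular expression is a simplification of the full IAM ARN format.

```python
import re

# Simplified IAM role ARN shape:
#   arn:<partition>:iam::<12-digit account ID>:role/<optional/path/><role name>
_ROLE_ARN_RE = re.compile(
    r"arn:(aws|aws-cn|aws-us-gov):iam::(\d{12}):role/(?:[\w+=,.@-]+/)*([\w+=,.@-]+)"
)


def parse_role_arn(arn: str) -> tuple[str, str]:
    """Return (account_id, role_name), or raise ValueError if arn is not an IAM role ARN."""
    match = _ROLE_ARN_RE.fullmatch(arn.strip())
    if match is None:
        raise ValueError(f"not an IAM role ARN: {arn!r}")
    return match.group(2), match.group(3)


if __name__ == "__main__":
    print(parse_role_arn("arn:aws:iam::123456789012:role/tableflow-quickstart-role"))
```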

After creating the provider integration, update the trust policy of the AWS IAM role (tableflow-quickstart-role) using the trust policy displayed in Cloud Console.
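Assuming the updated trust policy adds an external ID condition (a common AWS pattern for granting a third party access to a role), you can confirm that the role was updated by checking for that condition. The following Python sketch lists the `sts:ExternalId` values required by `Allow` statements that grant `sts:AssumeRole`. It is simplified: it ignores wildcard actions and condition operators other than `StringEquals`, and the example policies in the test values are placeholders, not the policy that Cloud Console displays.

```python
import json


def assume_role_external_ids(trust_policy_json: str) -> list[str]:
    """List sts:ExternalId values required by Allow statements granting sts:AssumeRole.

    Simplified sketch: only exact "sts:AssumeRole" actions and StringEquals
    conditions are considered.
    """
    policy = json.loads(trust_policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be written as an object
        statements = [statements]
    external_ids: list[str] = []
    for statement in statements:
        actions = statement.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        if statement.get("Effect") != "Allow" or "sts:AssumeRole" not in actions:
            continue
        value = statement.get("Condition", {}).get("StringEquals", {}).get("sts:ExternalId")
        if isinstance(value, str):
            external_ids.append(value)
        elif isinstance(value, list):
            external_ids.extend(value)
    return external_ids


if __name__ == "__main__":
    with open("trust-policy.json") as f:  # hypothetical local copy of the role's trust policy
        print(assume_role_external_ids(f.read()))
```

An empty list suggests that the role still has the initial trust policy.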

Step 3: Enable Tableflow on your topic

With the provider integration configured, you can enable Tableflow on your Kafka topic to materialize it as a table in the storage bucket that you created in Step 2.

Navigate to your stock-trades topic and click Enable Tableflow.

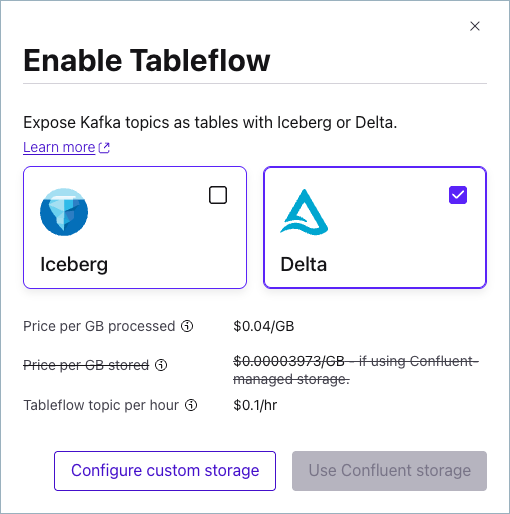

In the Enable Tableflow dialog, select Delta as the table format.

Click Configure custom storage.

In the Choose where to store your Tableflow data section, click Store in your bucket.

In the Provider integration dropdown, select the provider integration that you created in Step 2. Provide the name of the storage bucket that you created, which in this guide is tableflow-quickstart-storage.

Click Continue to review the configuration and launch Tableflow.

Materializing a newly created topic as a table can take a few minutes.

After enabling Tableflow, in the Monitor section, copy the storage location of the table.

For low-throughput topics in which Kafka segments have not been filled, Tableflow tries to publish data every 15 minutes. This interval is best-effort and not guaranteed.

Step 4: Create a read-only external location in Databricks

You can query Delta tables materialized by Tableflow in Databricks as external tables.

To create an external table in Databricks, you must first create an external location.

Log in to the Databricks workspace that you use to query Delta Lake tables.

Click Catalog to open Catalog Explorer.

On the Quick access page, click External data, then on the External locations tab, click Create external location.

In the Create a new external location dialog, select Manual and click Next.

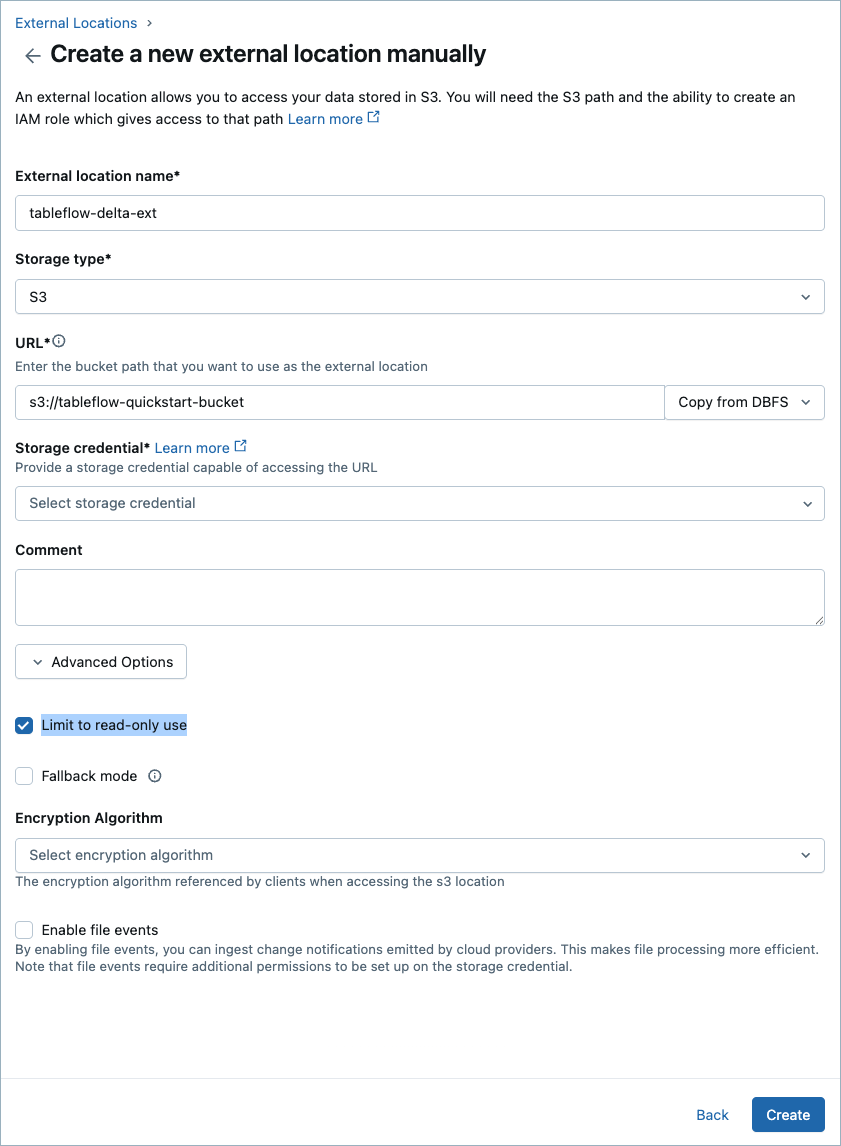

Create the external location by providing a name for it and the URL of the S3 bucket where the Delta tables are stored, for example, s3://tableflow-quickstart-storage. Then create a new storage credential by following the Databricks instructions for creating a storage credential.

Check Limit to read-only use to ensure that the external location is read-only.
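The external location URL needs to cover the table storage location that you copied in Step 3. The Python sketch below uses hypothetical helper names. It derives the bucket-level URL (s3://<bucket>/) from a storage location and checks whether a location falls under a given external location URL, matching on whole path segments so that, for example, s3://bucket/exam does not cover s3://bucket/example. The storage location in the usage example is a made-up path; the real one is the value shown in the Monitor section.

```python
from urllib.parse import urlparse


def bucket_root(storage_location: str) -> str:
    """Return the s3://<bucket>/ root of an S3 storage location."""
    parsed = urlparse(storage_location)
    if parsed.scheme not in ("s3", "s3a") or not parsed.netloc:
        raise ValueError(f"not an S3 location: {storage_location!r}")
    return f"s3://{parsed.netloc}/"


def is_covered_by(table_location: str, external_location_url: str) -> bool:
    """True if table_location lies under external_location_url (same bucket, path-segment prefix)."""
    table, external = urlparse(table_location), urlparse(external_location_url)
    if table.netloc != external.netloc:
        return False
    # Normalize both paths to end with "/" so prefixes match whole segments only.
    prefix = external.path.rstrip("/") + "/"
    return (table.path.rstrip("/") + "/").startswith(prefix)


if __name__ == "__main__":
    location = "s3://tableflow-quickstart-storage/example/path/stock-trades"  # made-up path
    root = bucket_root(location)
    print(root, is_covered_by(location, root))
```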

Step 5: Automatically publish the table as an external Delta Lake table to Unity Catalog

Once Tableflow is enabled on your Kafka topic, you can set up a Unity Catalog integration to publish Delta Lake tables to Unity Catalog automatically. For instructions, see Integrate Tableflow with Unity Catalog.