Integrate Tableflow with Unity Catalog in Confluent Cloud

Unity Catalog provides a fine-grained, unified governance solution for all data and AI assets on the Databricks Data Intelligence Platform.

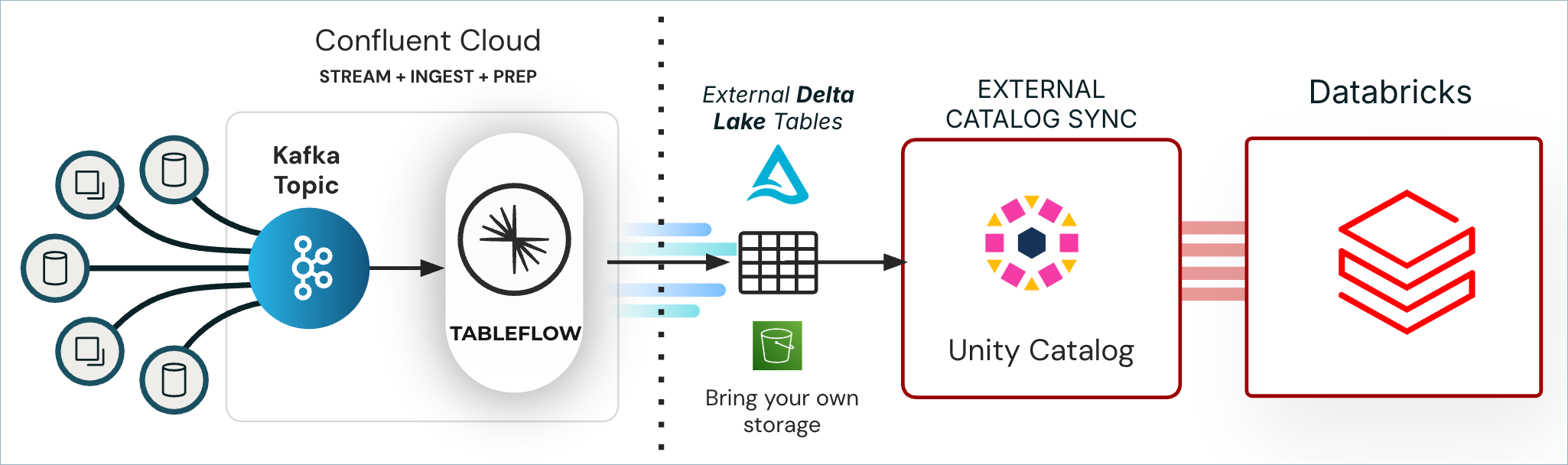

Tableflow’s Unity Catalog integration enables you to publish materialized tables to Unity Catalog, making them accessible as external Delta Lake tables in Databricks.

Databricks Unity Catalog integrates with Tableflow at the cluster level, enabling the automatic publication of all Delta Lake-enabled topics as tables within Unity Catalog.

Note

The Tableflow Unity Catalog integration uses the Databricks Unity Catalog Open Preview feature to create external tables through the Unity Catalog open APIs. For more information, see Create external Delta tables from external clients.

Tableflow creates a new schema in Unity Catalog named after your Kafka cluster ID. It then publishes every topic that has Delta Lake format enabled as an external Delta Lake table in that schema.
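The mapping described above determines where each published table lands: the catalog you configure, a schema named after the Kafka cluster ID, and, as assumed here, a table named after the topic. The following Python sketch builds the three-level Unity Catalog name from those parts and wraps each part in backticks, because names such as tableflow-quick-start-catalog and Kafka cluster IDs contain hyphens, which Databricks SQL accepts only in backtick-quoted identifiers. The cluster ID lkc-abc123 and topic orders are hypothetical, and the table-equals-topic assumption should be checked against what Catalog Explorer actually shows.

```python
def quote_identifier(name: str) -> str:
    """Backtick-quote one Databricks SQL identifier part.

    A backtick inside the name is escaped by doubling it.
    """
    return "`" + name.replace("`", "``") + "`"


def qualified_table_name(catalog: str, cluster_id: str, topic: str) -> str:
    """Build catalog.schema.table for a topic published by Tableflow.

    Assumption: the schema is the Kafka cluster ID and the table name
    equals the topic name. Verify this in Catalog Explorer.
    """
    return ".".join(quote_identifier(part) for part in (catalog, cluster_id, topic))


# Hypothetical cluster ID and topic, for illustration only.
name = qualified_table_name("tableflow-quick-start-catalog", "lkc-abc123", "orders")
print(name)
print(f"SELECT * FROM {name} LIMIT 10")
```

Quoting every part, rather than only the parts that contain hyphens, keeps the helper simple and is harmless for names that would also be valid unquoted.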

Prerequisites

DeveloperWrite access on all schema subjects

CloudClusterAdmin access on your Kafka cluster

Assigner access on all provider integrations

Access to a Databricks workspace.

An external Delta Lake table, like the one created in Quick Start with Delta Lake Tables.

Configure Unity Catalog

Follow these steps to enable Databricks Unity Catalog sync for Delta Lake tables in Tableflow.

Log in to your Databricks workspace and create or select the catalog that you want to use to sync Delta Lake tables created by Tableflow. For this guide, the catalog is named tableflow-quick-start-catalog.

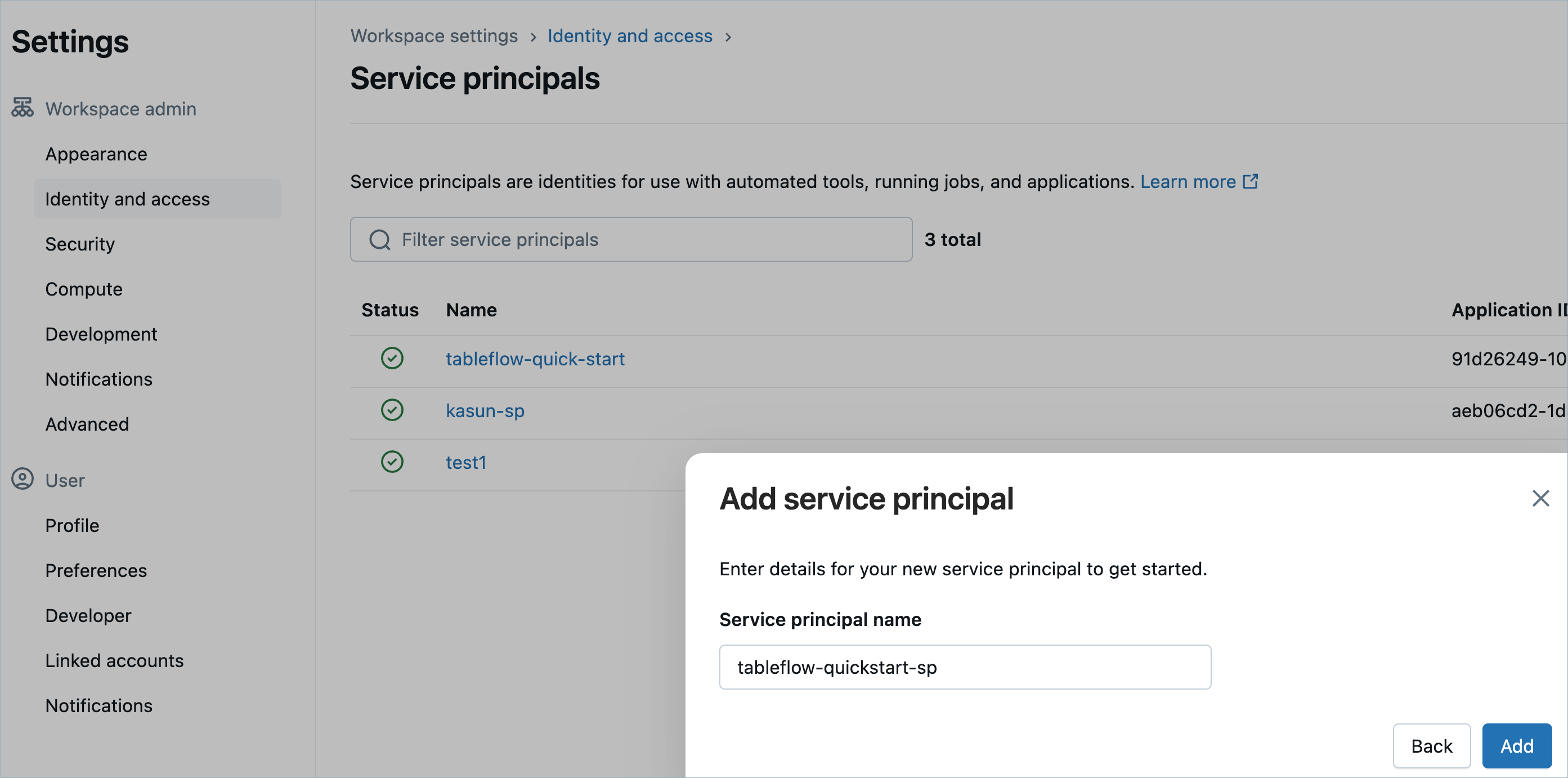

Navigate to the Databricks workspace settings and click Identity and Access.

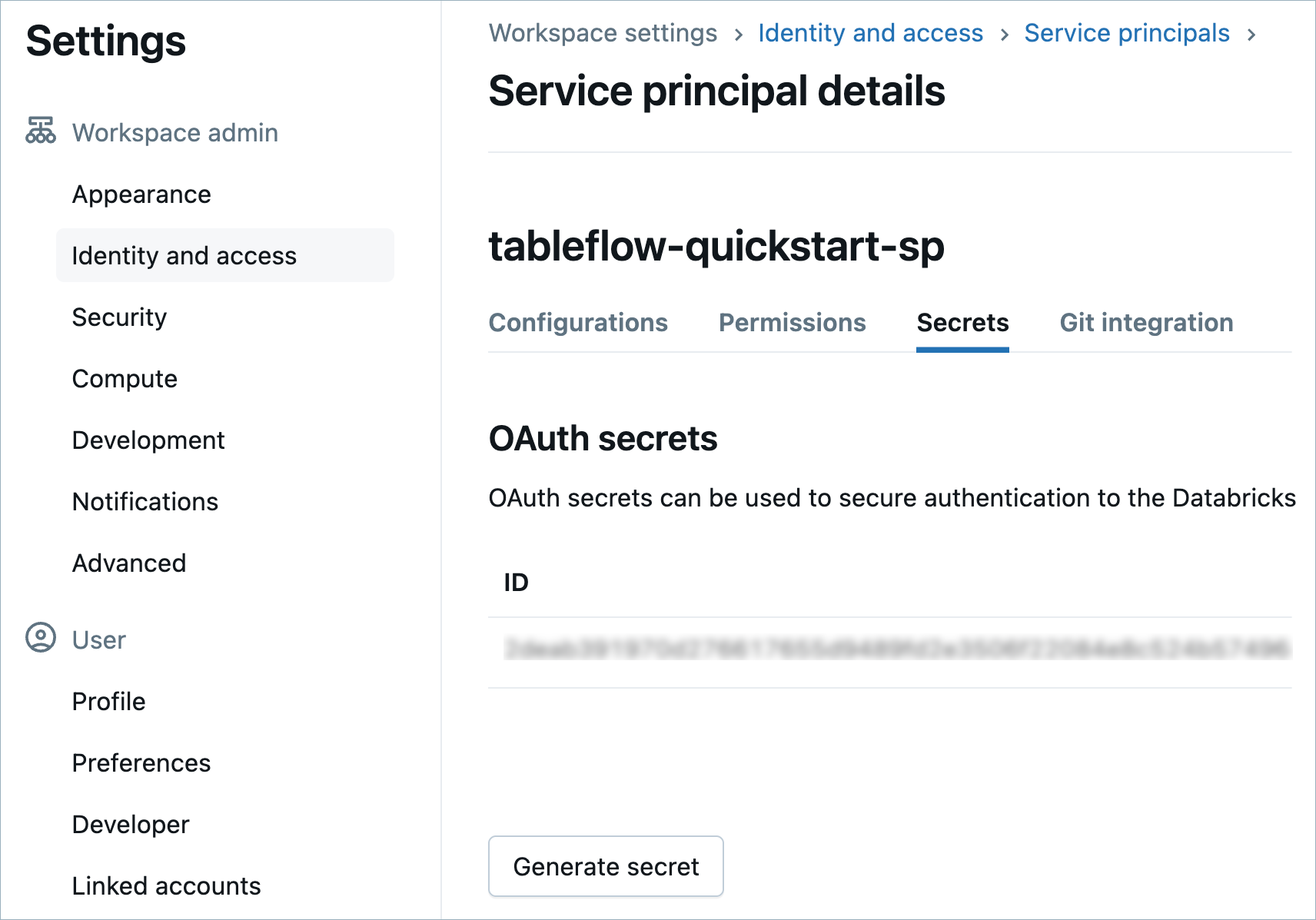

Create a new Service Principal named tableflow-quick-start-sp and generate a secret. Store the client ID and secret securely, because you will need them later to configure the Unity Catalog integration in Tableflow.

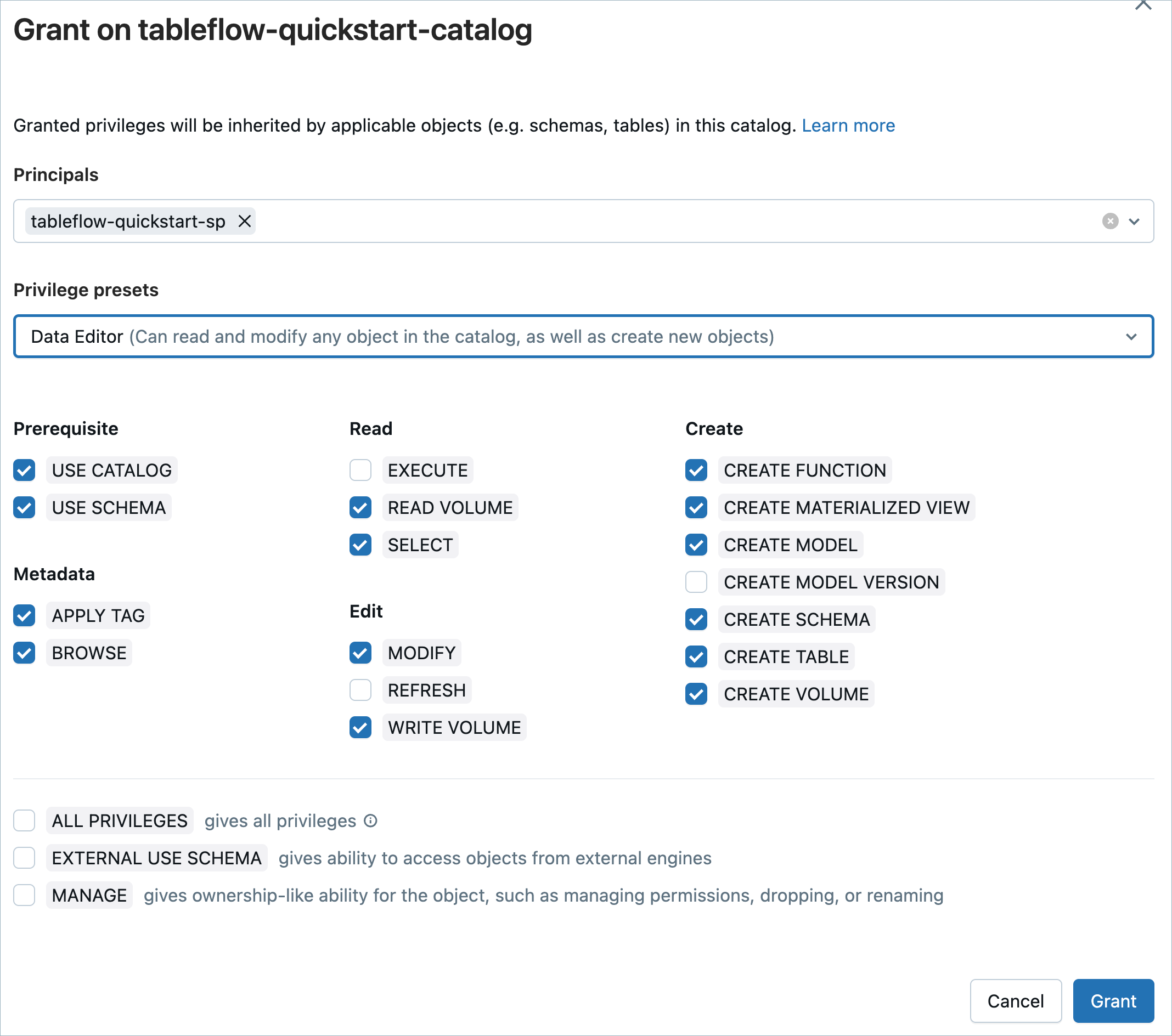

Navigate to tableflow-quick-start-catalog and grant tableflow-quick-start-sp Data Editor access on the catalog.

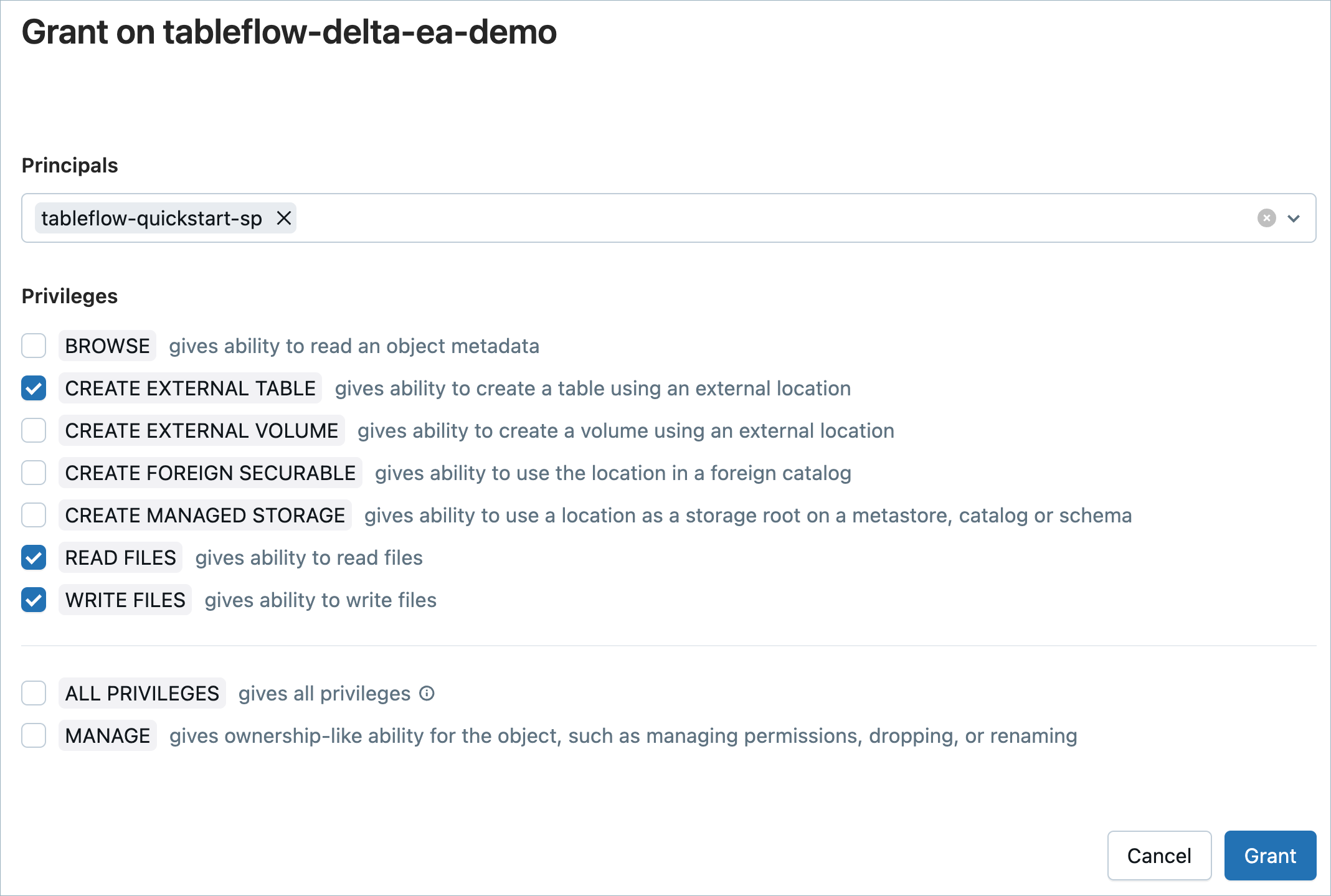

Navigate to the External Location you created previously and grant the following permissions to tableflow-quick-start-sp:

CREATE EXTERNAL TABLE

READ FILES

WRITE FILES
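If you prefer SQL to the Catalog Explorer UI, the three privileges listed above can also be granted with GRANT statements on the external location. The Python sketch below only generates the statement text for you to review and run in a Databricks SQL editor or notebook. The location name my_tableflow_location and the all-zero application ID are placeholders, and the sketch assumes that, in SQL, a service principal is referenced by its application (client) ID rather than by its display name.

```python
# Privileges the service principal needs on the external location,
# as listed in the step above.
EXTERNAL_LOCATION_PRIVILEGES = ("CREATE EXTERNAL TABLE", "READ FILES", "WRITE FILES")


def quote_identifier(name: str) -> str:
    """Backtick-quote a Databricks SQL identifier, doubling embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def grant_statements(location: str, principal_app_id: str) -> list[str]:
    """Return one GRANT statement per required privilege on the external location."""
    return [
        f"GRANT {privilege} ON EXTERNAL LOCATION {quote_identifier(location)} "
        f"TO {quote_identifier(principal_app_id)};"
        for privilege in EXTERNAL_LOCATION_PRIVILEGES
    ]


# Placeholder location name and application ID (hypothetical values).
for stmt in grant_statements("my_tableflow_location", "00000000-0000-0000-0000-000000000000"):
    print(stmt)
```

Generating one statement per privilege makes it easy to see which grant fails if your account lacks permission to assign one of them.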

Configure Unity Catalog integration with Tableflow

Log in to the Confluent Cloud Console.

Navigate to the Kafka cluster that has the topics you want to sync, and click Tableflow.

In the External Catalog Integrations section, click Add Integration.

In the Select integration type section, select Unity Catalog.

Provide a name to identify your catalog integration and click Continue.

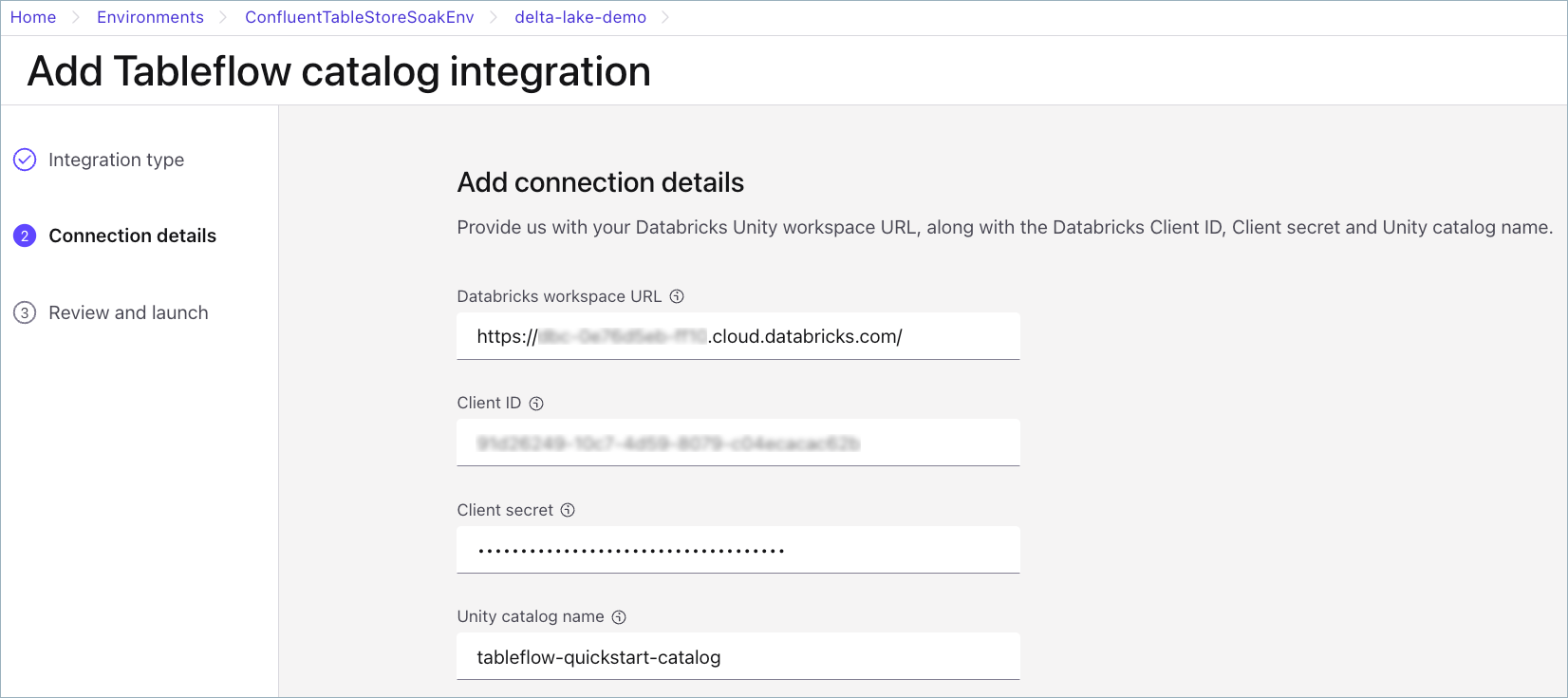

On the Add connection details page, provide the following inputs and click Continue.

Databricks Workspace URL

Client ID and secret of the tableflow-quick-start-sp service principal that you created earlier.

Name of the catalog in Unity Catalog to integrate with, for example, tableflow-quick-start-catalog.
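Before entering these values, you can optionally check that the client ID and secret are accepted by the workspace. Databricks documents an OAuth machine-to-machine flow in which a service principal sends a POST request to the workspace's /oidc/v1/token endpoint with HTTP Basic credentials, grant_type=client_credentials, and scope=all-apis. The standard-library Python sketch below builds that request and, separately, sends it. It is an independent sanity check under that assumption, not a description of how Tableflow itself authenticates; the function names are hypothetical.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_token_request(workspace_url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth M2M token request for a service principal."""
    parsed = urllib.parse.urlparse(workspace_url)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError("workspace URL must look like https://<workspace-host>")
    token_url = f"https://{parsed.netloc}/oidc/v1/token"
    # HTTP Basic authentication with the service principal's client ID and secret.
    credentials = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": "all-apis"}
    ).encode()
    return urllib.request.Request(
        token_url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


def fetch_access_token(request: urllib.request.Request) -> str:
    """Send the request and return the access token.

    An HTTP error here usually points to a wrong URL, client ID, or secret.
    """
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)["access_token"]
```

To run the check, call fetch_access_token(build_token_request(url, client_id, secret)) with your real values, preferably read from environment variables or a secrets manager rather than typed into source code. A successful call means the same credentials should be usable in the connection details form.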

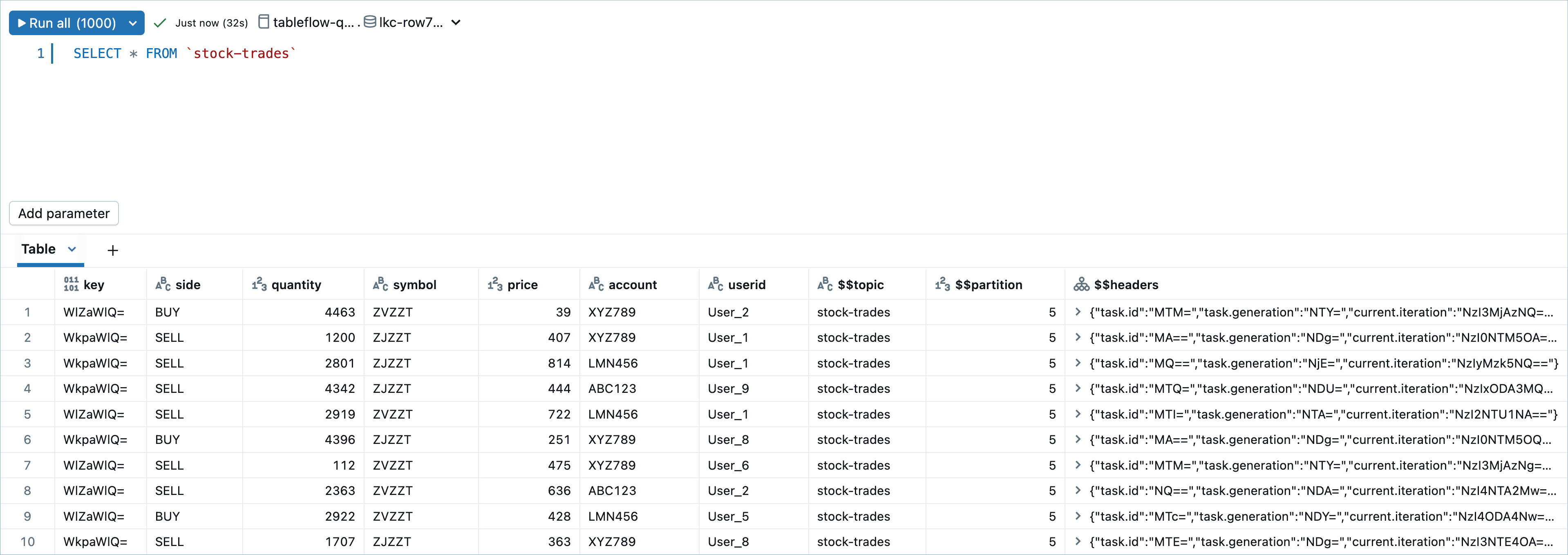

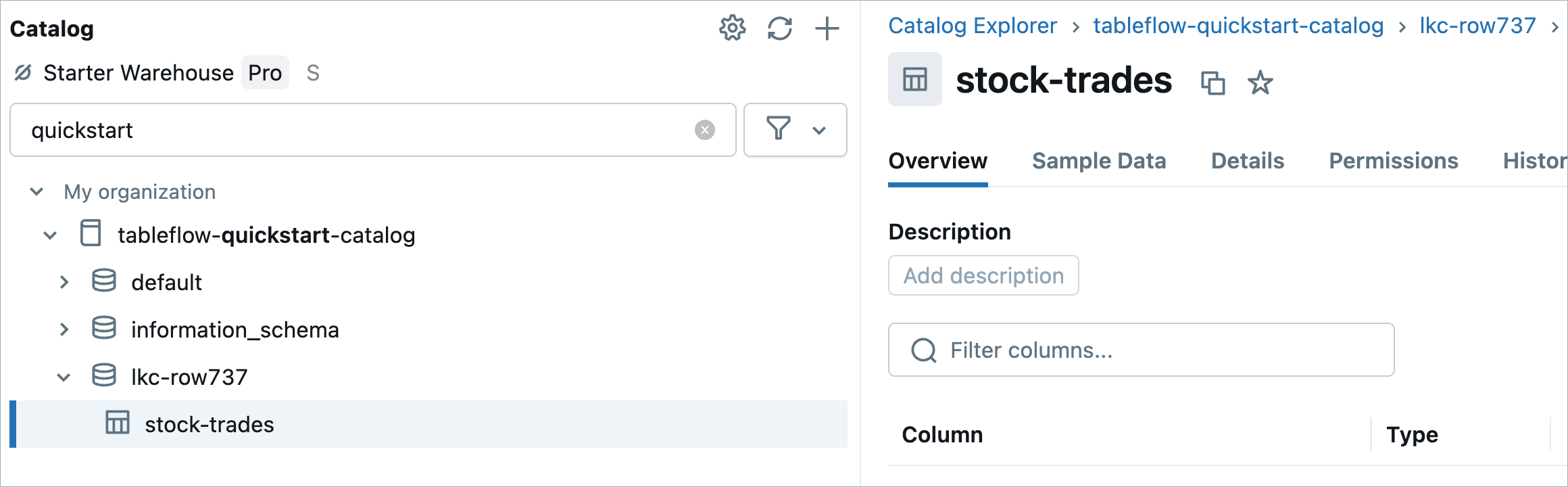

Return to your Databricks workspace and, in Catalog Explorer, confirm that the Delta table is published in the catalog under the schema named after your Kafka cluster ID.

Once the table is available in Databricks, you can query it and use it for your data analytics tasks. You can also govern it in Unity Catalog like any other asset.