Integrate Catalogs with Tableflow in Confluent Cloud

An Apache Iceberg™ catalog manages metadata for Iceberg tables. It provides an abstraction layer over schema, partitioning, and file locations so that analytics engines can seamlessly interact with the data. Iceberg catalogs can be backed by different services, such as Hive, AWS Glue, or REST APIs, depending on the use case.

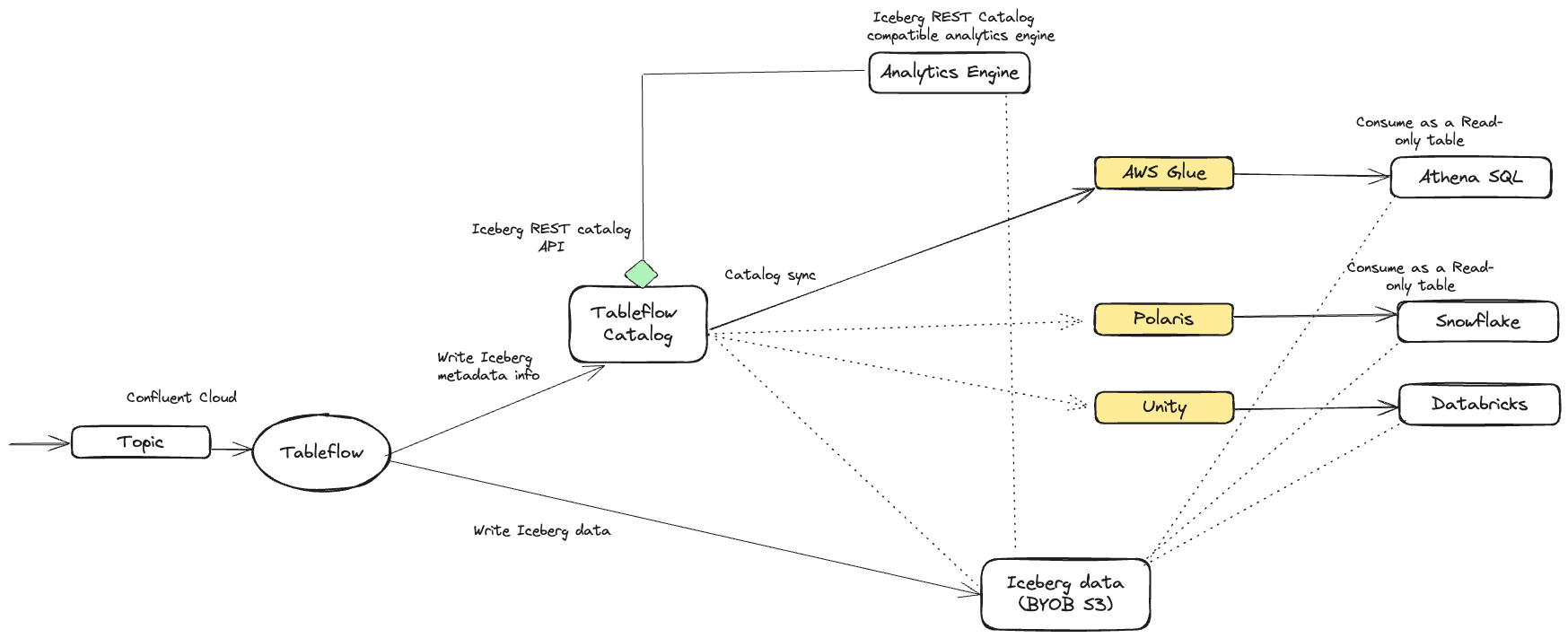

Tableflow offers a built-in Iceberg REST catalog service that enables you to access tables created by Tableflow. Also, you can configure catalog integrations with external services, such as AWS Glue Data Catalog, to keep external catalogs synchronized with Tableflow.

For Delta Lake tables, Tableflow also supports integration with Databricks through Unity Catalog. These Delta tables are available only through Unity Catalog integration.

The following diagram shows the integrations between Tableflow and the catalog services.

Tableflow Iceberg REST Catalog

Tableflow features an integrated Iceberg REST catalog (IRC), which enables seamless connections to any analytics or compute engine that supports the Iceberg REST catalog API.

Note

The Tableflow Iceberg REST Catalog doesn’t support credential vending for customer-managed storage buckets, so when you use Tableflow Catalog with your own storage, the analytics engine must have access to the storage location where you materialize Tableflow Iceberg tables.

To access the REST Catalog endpoint and obtain the necessary credentials, navigate to the Tableflow section in the Confluent Cloud Console. You can use this information within the analytics engine that supports the Iceberg REST catalog, enabling you to query Iceberg tables.

External catalog integration

Tableflow supports synchronizing Iceberg table metadata with multiple external catalogs per cluster, including AWS Glue, Snowflake (Polaris/Open Catalog), and Unity.

Each catalog type is supported through a specific Tableflow format, ensuring that external catalogs remain up-to-date and consistent while the Tableflow Catalog continues to serve as the single source of truth.

Tableflow enables multiple catalog syncs within a cluster, but only one integration is allowed per catalog type. For example, a Kafka cluster can connect only to one AWS Glue Data Catalog.

Key aspects of external catalog integration include:

Cluster-level integration: External catalogs can be integrated at the cluster level, allowing all topics materialized from the cluster to be published to the catalog service.

Metadata Synchronization: Tableflow synchronizes its metadata with the external catalog that is integrated with the Kafka cluster. When there’s a new update to a table, it’s immediately published in the external catalog. Delta Lake tables are published directly from Tableflow to the external catalog services (Unity Catalog).

Read-Only Tables: Iceberg tables exposed via external catalog synchronization are read-only. When working with Iceberg tables created by Tableflow, it’s essential to ensure that they are consumed as read-only tables.

Bring Your Own Storage (BYOS) support: Tableflow supports BYOS for all catalog integrations, providing flexibility in managing storage and infrastructure. External catalog integration is not supported with Tableflow Confluent-managed storage.

Note

Catalogs used with Tableflow must have access to the KMS key that encrypts the Tableflow data. This applies to both Confluent Managed Storage and Bring Your Own Storage (BYOS). For details on encryption behavior and required permissions, see Use self-managed encryption keys with Tableflow and Tableflow with KMS-encrypted S3 storage.

Tableflow supports the following catalog integrations and table formats.

AWS Glue Data Catalog — Apache Iceberg

Snowflake Open Catalog/Apache Polaris — Apache Iceberg

Databricks Unity Catalog — Delta Lake

Important

Topics must be materialized in order for catalog synchronization to complete. Enable Tableflow on a topic before enabling your external catalog provider. Catalog sync remains in the

pendingstate until at least one topic is enabled with Tableflow.