Monitor Confluent Platform topics

The Topics page in the Confluent Cloud Console provides a centralized view for monitoring all Kafka topics within your connected Confluent Platform cluster.

An Apache Kafka® topic is a category or feed that stores messages. Producers write data to topics, and consumers read data from them. Topics belong to clusters, and clusters are organized into environments. This interface lets you monitor the activity and high-level details of all your topics at a glance.

Configure topic reporting for large clusters

If your Confluent Platform cluster contains a large number of topics, the default packet size used to send the topic list to Confluent Cloud might be too small. This can cause the total topic count in the Confluent Cloud Console to appear inaccurate or fluctuate.

To prevent this, apply the following two settings on your Confluent Platform controllers:

`confluent.catalog.collector.max.bytes.per.snapshot`: Increases the maximum packet size to 2 MB (2,000,000 bytes), which allows a larger topic list to be sent in a single snapshot.

`confluent.telemetry.exporter._usm.events.request.timeout.ms`: Increases the event exporter request timeout to 180,000 ms (3 minutes), which prevents failures when exporting telemetry data from large clusters.

Apply these settings using the method that matches your cluster management.

For Confluent for Kubernetes deployments

In your Confluent Platform cluster resource YAML file, add the following `configOverrides` to the `spec`. Each `server` entry is a `key=value` string:

```yaml
spec:
  configOverrides:
    server:
      - confluent.catalog.collector.max.bytes.per.snapshot=2000000
      - confluent.telemetry.exporter._usm.events.request.timeout.ms=180000
```

Apply the configuration change to trigger a rolling restart of the controllers:

```shell
kubectl apply -f <your-confluent-platform.yaml>
```

For Ansible Playbooks for Confluent Platform deployments

In your Confluent Ansible variables file (for example, `hosts.yml`), add the following properties under `all.vars.kafka_controller_custom_properties`:

```yaml
all:
  vars:
    kafka_controller_custom_properties:
      confluent.catalog.collector.max.bytes.per.snapshot: 2000000
      confluent.telemetry.exporter._usm.events.request.timeout.ms: 180000
```

Run your Confluent Ansible playbook to apply the changes.

For manual installations

On each of your Confluent Platform controller hosts, open the `server.properties` file for editing. Add the following properties and set their values:

```properties
confluent.catalog.collector.max.bytes.per.snapshot=2000000
confluent.telemetry.exporter._usm.events.request.timeout.ms=180000
```
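Before restarting, you may want to confirm that each controller's file actually contains both settings. The following Python script is a minimal sketch, not a Confluent tool. The script name and its simplified properties parser are assumptions, and it checks for the exact values listed above.

```python
import sys

# Values this page recommends for controllers in large clusters.
REQUIRED = {
    "confluent.catalog.collector.max.bytes.per.snapshot": "2000000",
    "confluent.telemetry.exporter._usm.events.request.timeout.ms": "180000",
}


def parse_properties(text: str) -> dict[str, str]:
    """Parse simple key=value or key:value lines, skipping blanks and #/! comments.

    Simplified: line continuations, escapes, and whitespace-only separators
    from the full Java .properties format are not handled.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        # Split on whichever separator ('=' or ':') appears first.
        positions = [i for i in (line.find("="), line.find(":")) if i != -1]
        if not positions:
            continue
        sep = min(positions)
        # A later duplicate key overrides an earlier one.
        props[line[:sep].strip()] = line[sep + 1:].strip()
    return props


def check_settings(text: str) -> list[str]:
    """Return a list of problems; an empty list means both settings match."""
    props = parse_properties(text)
    problems = []
    for key, expected in REQUIRED.items():
        value = props.get(key)
        if value is None:
            problems.append(f"missing: {key}")
        elif value != expected:
            problems.append(f"unexpected value: {key}={value} (expected {expected})")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python check_controller_props.py /path/to/server.properties
    with open(sys.argv[1], encoding="utf-8") as f:
        issues = check_settings(f.read())
    for issue in issues:
        print(issue)
    sys.exit(1 if issues else 0)
```

Run it once per controller host, passing the path to that host's `server.properties` (the location depends on your installation). The script prints each problem and exits with status 1 if either setting is missing or has a different value.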

Restart your controllers for the configuration change to take effect.

After the controllers restart, the topic count in the Confluent Cloud Console should stabilize and accurately reflect the number of topics in your cluster.

Note

From the Confluent Cloud Console, you can only monitor Confluent Platform topics. To create, delete, or manage these topics, you must use the Confluent Control Center for your self-managed cluster.

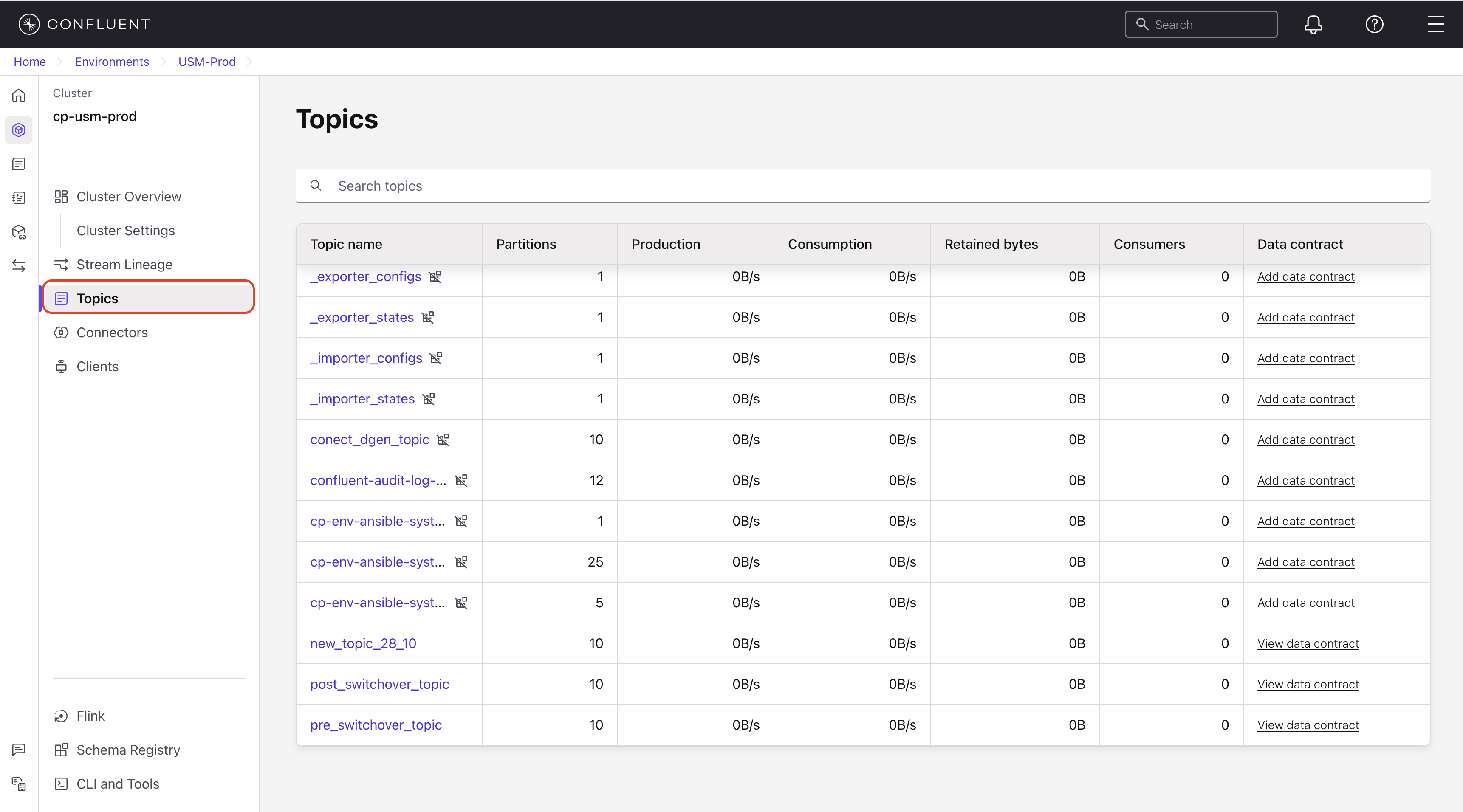

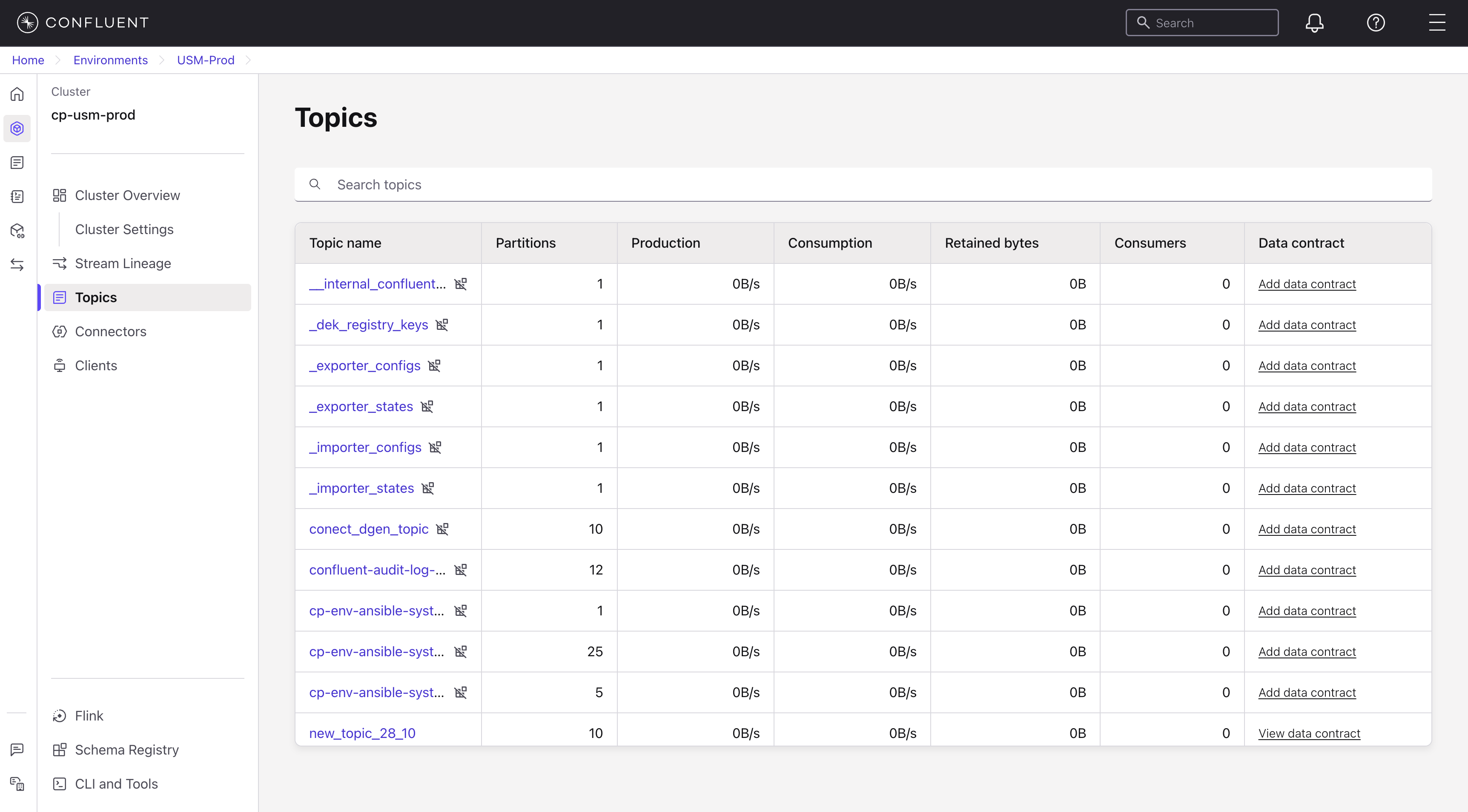

View the topics list

Understand the topics list

The Topics page provides a centralized view for all topics in your selected cluster. The list includes the following fields for each topic:

Topic name: The unique identifier for the topic.

Partitions: The number of partitions configured for the topic.

Production: The current rate of data written to the topic (bytes/sec).

Consumption: The current rate of data read from the topic (bytes/sec).

Retained bytes: The total size of data stored in the topic’s logs.

Consumer: The number of active consumer groups that read from the topic.

Data contract: A link to view the schema and data rules associated with the topic.

View individual topic details

To inspect the metrics, data contract, configuration, and metadata for a specific topic, select it from the topics list. This opens a detailed view with five tabs: Overview, Monitor, Data contract, Settings, and Details.

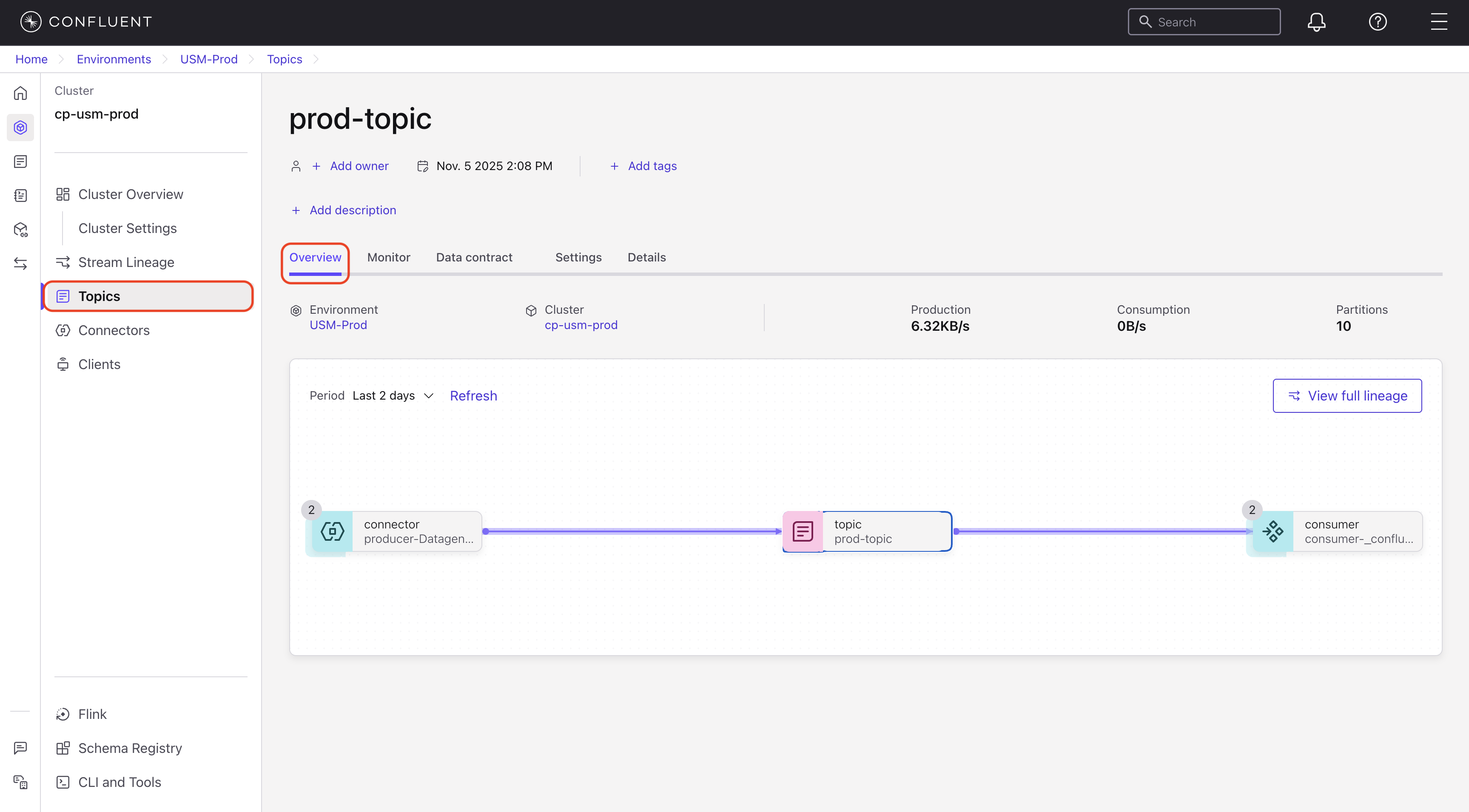

Overview tab

The Overview tab provides a high-level summary of the topic’s key metrics and stream lineage.

The tab displays the following information:

Environment: The environment where the topic is registered.

Cluster: The Confluent Platform cluster where the topic resides.

Production: The current rate of data written to the topic.

Consumption: The current rate of data read from the topic.

Partitions: The number of partitions configured for the topic.

The Overview tab includes a Stream Lineage visualization that shows the data flow for the topic. Click View full lineage to open the complete lineage diagram. For more information, see Track Data with Stream Lineage on Confluent Cloud.

Monitor tab

The Monitor tab provides a real-time summary of the message flow for the selected topic.

Production: The total rate of data produced (written) to this topic.

Consumption: The total rate of data consumed (read) from this topic.

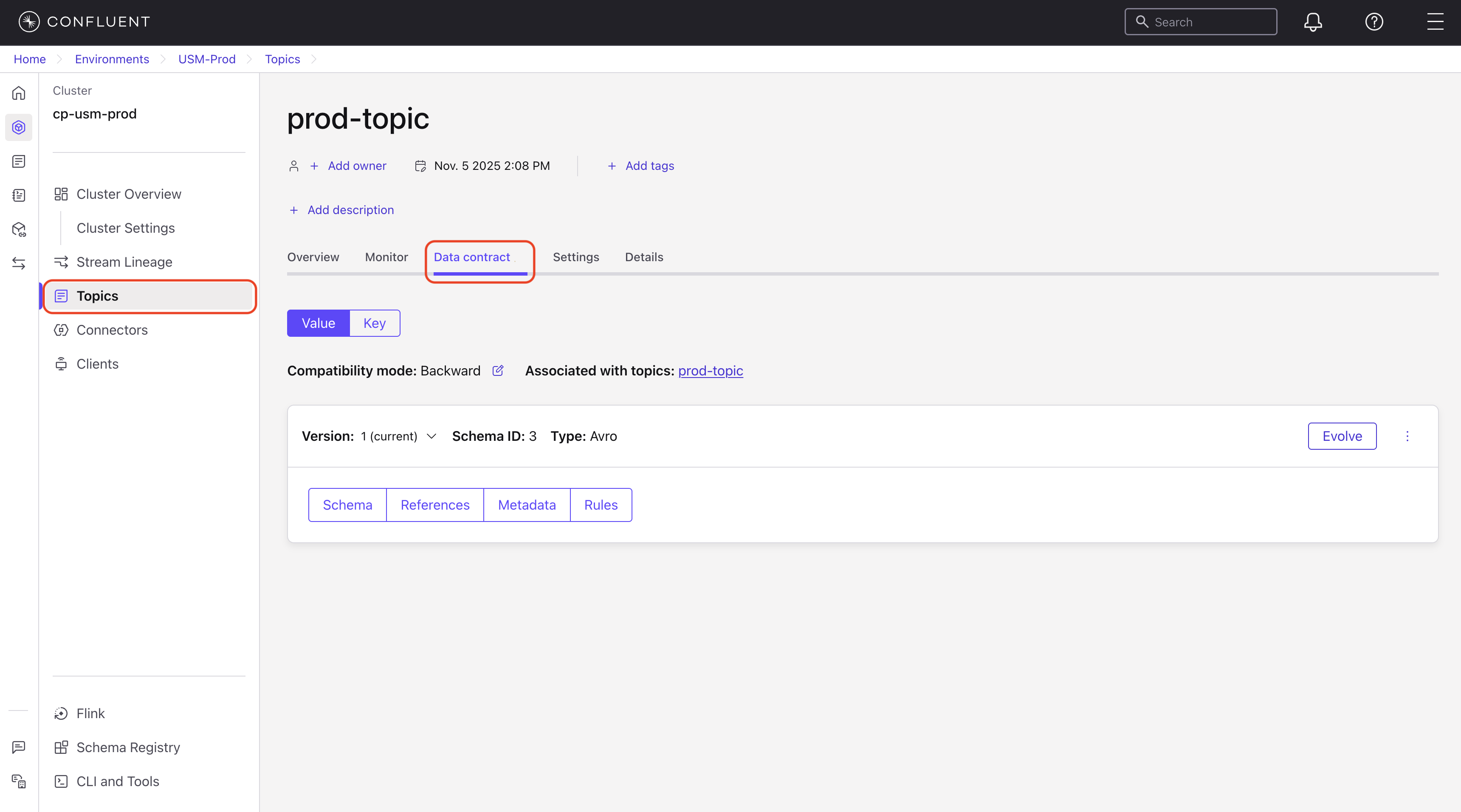

Data contract tab

The Data contract tab displays the schema and rules that govern data published to this topic, which help ensure data quality and compatibility. The tab shows the schema, references, metadata, and any rules applied to the data.

If the topic has no data contract, click Create Data Contract to add one. For instructions, see Create a schema (data contract).

The Data Contract tab displays the following specifications:

Schema: The schema of the topic's messages, which defines their structure and data types.

References: A list of any external schema references.

Metadata: Additional metadata about the schema.

Rules: Any rules or validations applied to the data.

To view a topic's data contract:

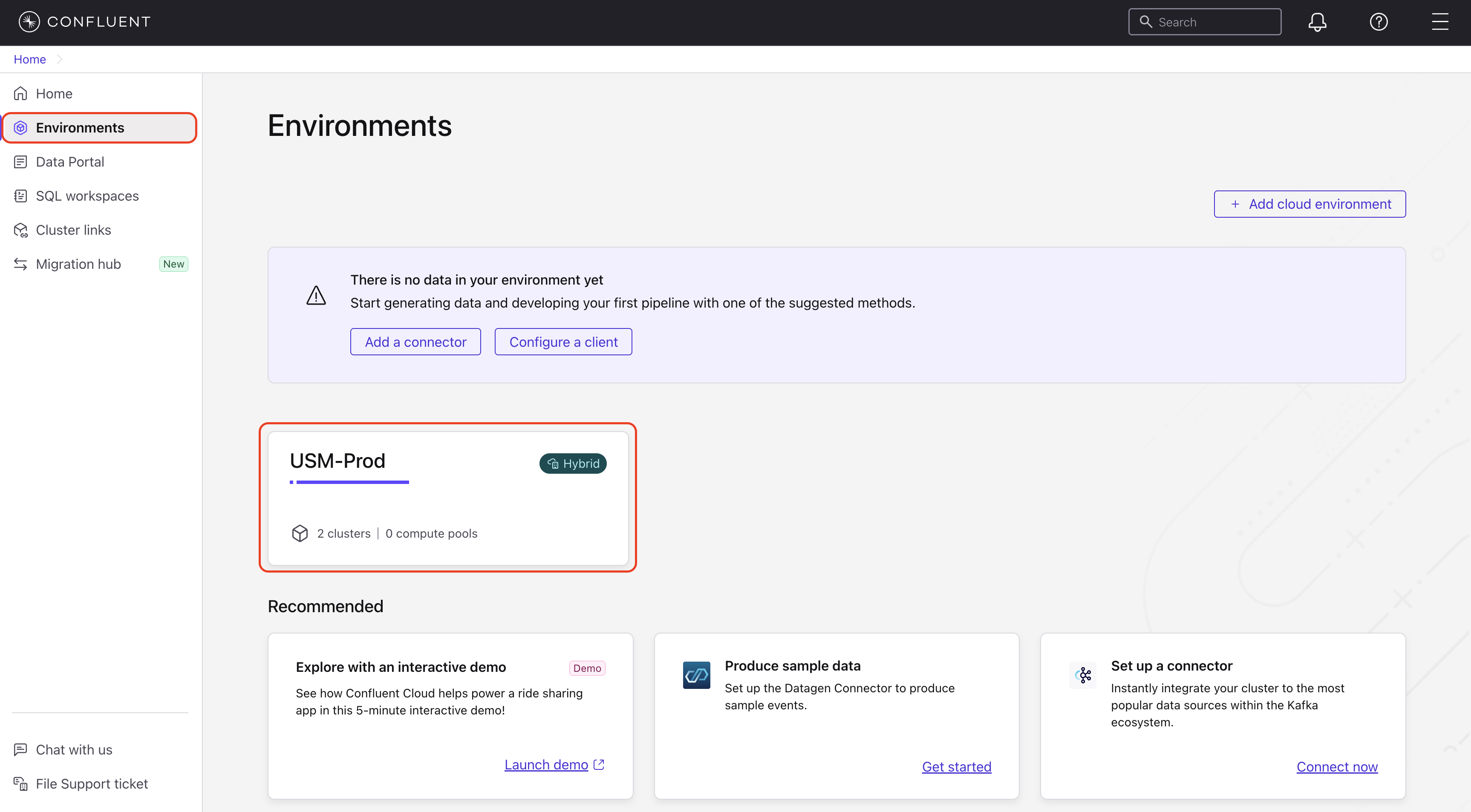

In the Confluent Cloud Console, navigate to Environments and select your environment.

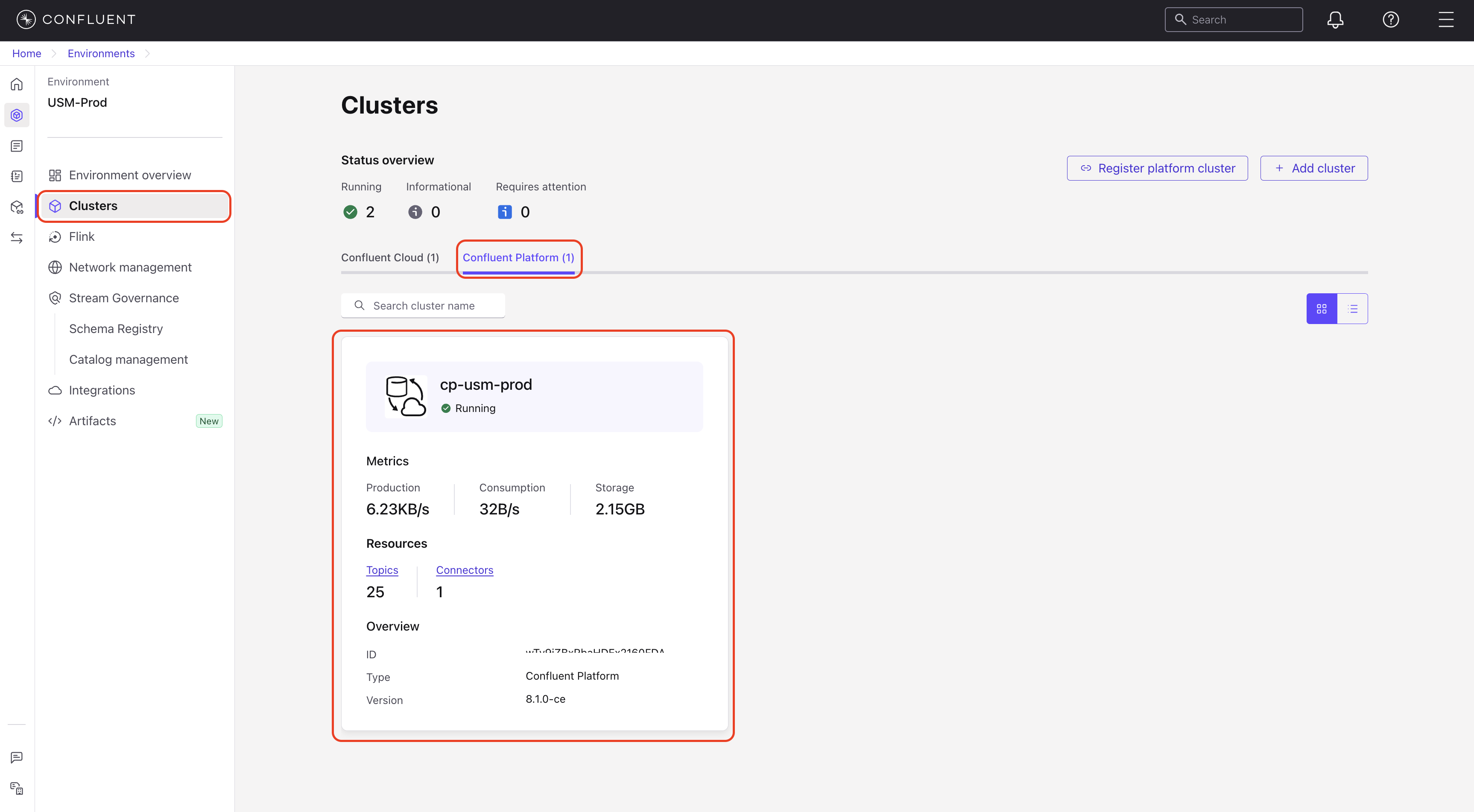

In the navigation menu, click Clusters and select a Confluent Platform cluster.

In the navigation menu, click Topics.

Select a topic from the list of topics.

Click Data Contract.

The data contract tab displays the schema and rules for the topic.

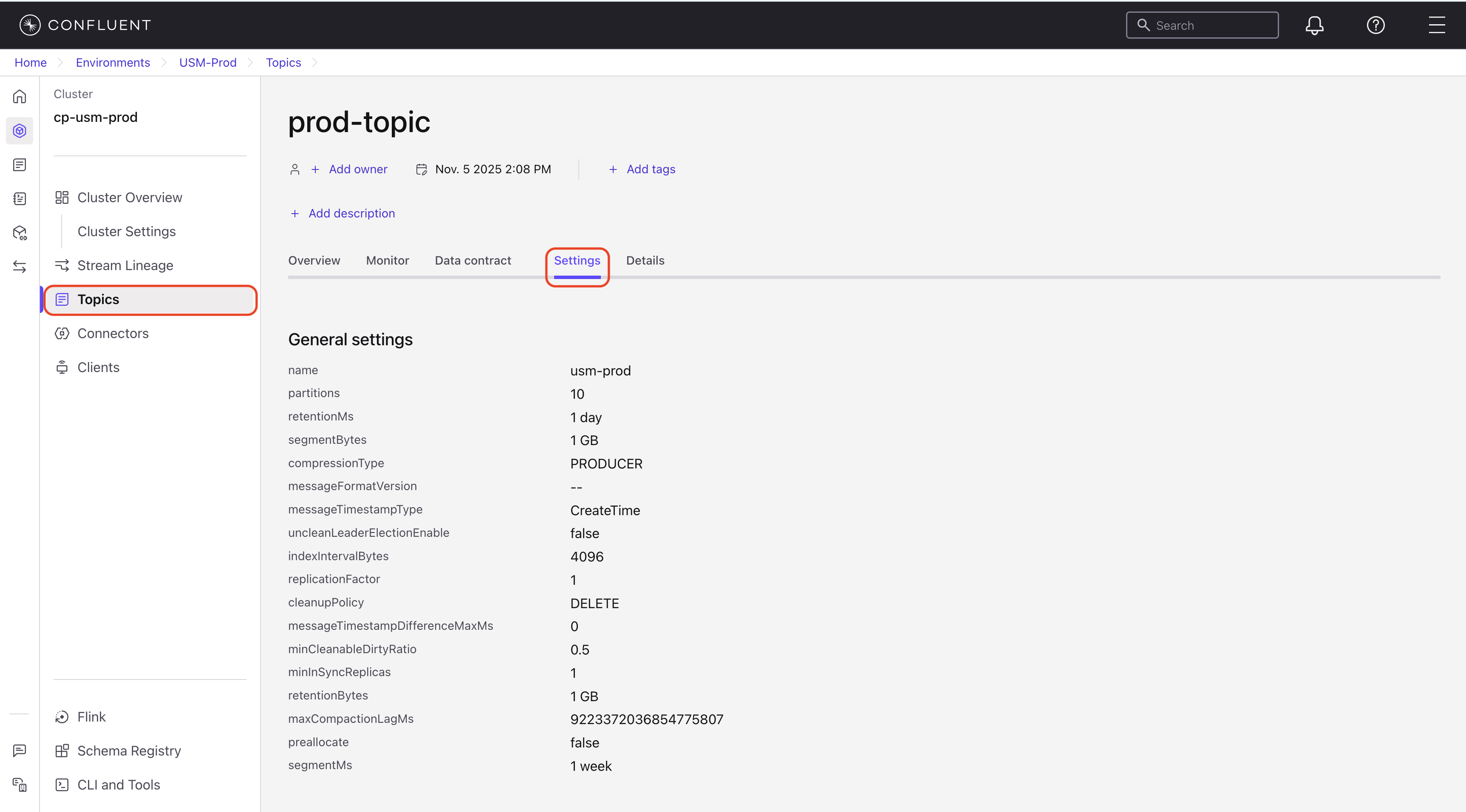

Settings tab

The Settings tab displays the topic’s configuration parameters. These settings are read-only in the Confluent Cloud Console and must be modified from your Confluent Platform environment.

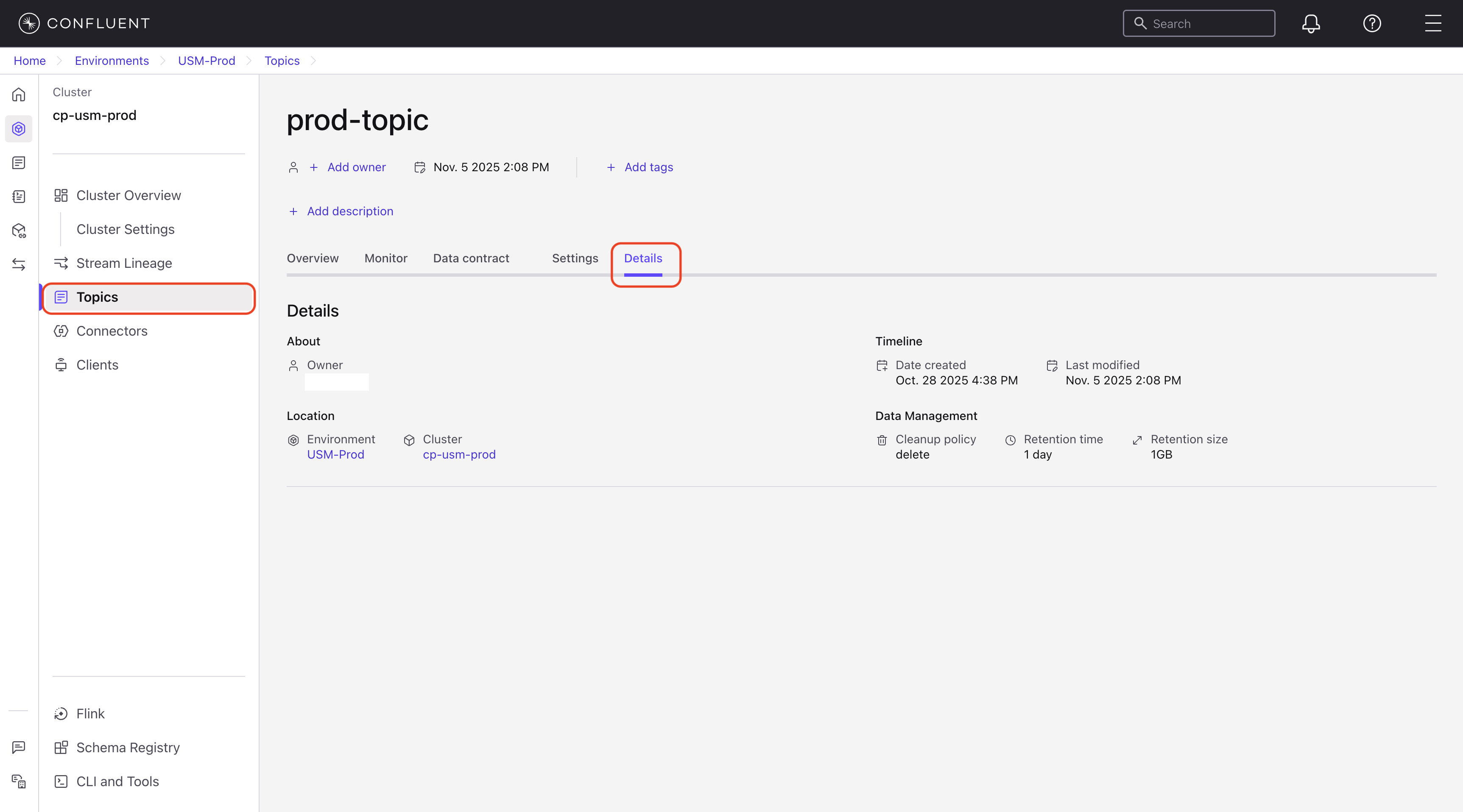

Details tab

The Details tab provides metadata and organizational information about the topic.

The Details tab includes the following sections:

About

Owner: User or team responsible for this topic.

Timeline

Date created: Creation date and time.

Last modified: Last update date and time.

Location

Environment: Confluent Cloud environment where the topic is registered.

Cluster: Confluent Platform cluster where the topic resides.

Data Management

Cleanup policy: Method for handling old log segments.

Retention time: Duration to retain messages.

Retention size: Maximum size for retained messages.
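The Data Management fields appear to correspond to the standard Kafka topic configurations `cleanup.policy`, `retention.ms`, and `retention.bytes`. This mapping is an assumption that this page does not confirm. Under that assumption, the following Python sketch shows how raw configuration values translate into those fields. The `DataManagement` class and the display strings (such as "Infinite") are hypothetical and may not match what the Console shows.

```python
from dataclasses import dataclass

MS_PER_DAY = 24 * 60 * 60 * 1000

# Kafka's stock topic defaults; your brokers may override them.
DEFAULTS = {
    "cleanup.policy": "delete",
    "retention.ms": "604800000",  # 7 days
    "retention.bytes": "-1",      # no size limit
}


@dataclass
class DataManagement:
    """Hypothetical container for the three Data Management fields."""
    cleanup_policy: str
    retention_time: str
    retention_size: str


def describe_retention_ms(value: int) -> str:
    """Render retention.ms; in Kafka, -1 means no time limit."""
    if value < 0:
        return "Infinite"
    if value > 0 and value % MS_PER_DAY == 0:
        days = value // MS_PER_DAY
        return f"{days} day" if days == 1 else f"{days} days"
    return f"{value} ms"


def describe_retention_bytes(value: int) -> str:
    """Render retention.bytes; -1 means no size limit. Kafka enforces it per partition."""
    if value < 0:
        return "Infinite"
    return f"{value} bytes per partition"


def data_management(config: dict[str, str]) -> DataManagement:
    """Build the Data Management fields, filling unset keys from DEFAULTS."""
    merged = {**DEFAULTS, **config}
    return DataManagement(
        cleanup_policy=merged["cleanup.policy"],
        retention_time=describe_retention_ms(int(merged["retention.ms"])),
        retention_size=describe_retention_bytes(int(merged["retention.bytes"])),
    )
```

For example, `data_management({"cleanup.policy": "compact", "retention.ms": "-1"})` yields a `compact` cleanup policy with infinite retention time, and infinite retention size from the default.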