Configure RBAC for Confluent Platform Using Confluent for Kubernetes

Confluent for Kubernetes (CFK) supports Role-Based Access Control (RBAC). RBAC is powered by Confluent’s Metadata Service (MDS), which acts as the central authority for authorization and authentication data. RBAC leverages role bindings to determine which users and groups can access specific resources and what actions the users can perform on those resources.

Confluent provides audit logs that record the runtime decisions of the permission checks that occur as users and applications attempt to take actions protected by ACLs and RBAC.

A set of principals and role bindings is required for the Confluent components to function. These are generated automatically when CFK is deployed.

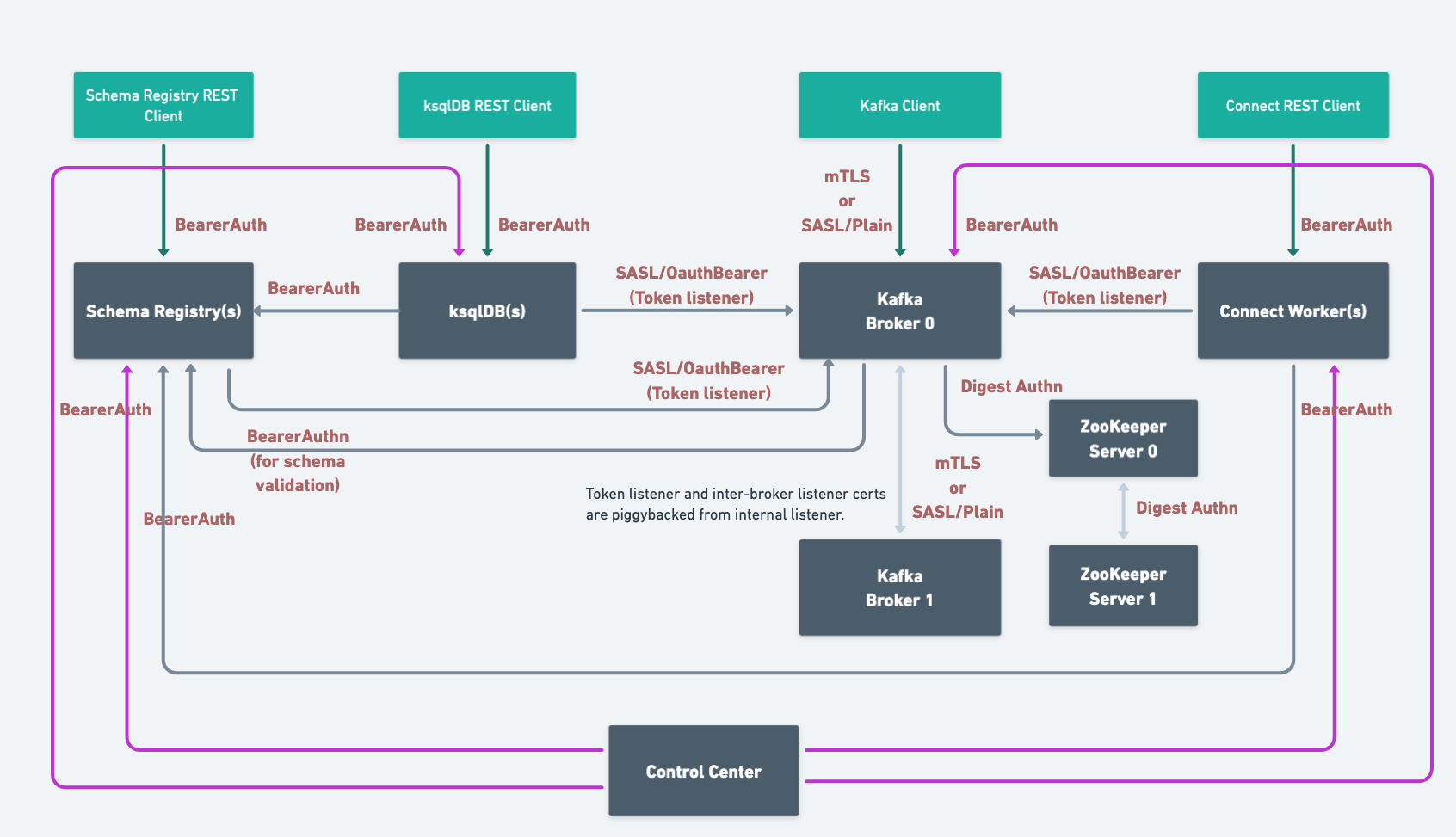

When you deploy Confluent Platform with RBAC enabled, CFK automates the security setup. Here’s the end-state architecture configured with LDAP:

Based on the components and features you use, you need to configure the following additional role bindings:

Requirements and considerations

The following are the requirements and considerations for enabling and using RBAC with CFK:

Confluent REST service is automatically enabled for RBAC and cannot be disabled when RBAC is enabled.

Use the Kafka bootstrap endpoint (same as the MDS endpoint) to access Confluent REST API.

RBAC with CFK can be enabled only for new installations. You cannot upgrade an existing cluster and enable it with RBAC.

When RBAC is enabled, CFK always uses the internal MDS endpoint. Even when you configure the external MDS listener endpoint and TLS settings, CFK does not use those settings.

The following are the requirements and considerations for enabling and using RBAC using LDAP:

You must have an LDAP server that Confluent Platform can use for authentication.

Currently, CFK only supports the GROUPS LDAP search mode. The search mode indicates if the user-to-group mapping is retrieved by searching for group or user entries. If you need to use the USERS search mode, specify it using the configOverrides setting in the Kafka CR as below:

    spec:
      configOverrides:
        server:
          - ldap.search.mode=USERS

See Sample Configuration for User-Based Search for more information.

You must create the user principals in LDAP that will be used by Confluent Platform components. These are the default user principals:

Kafka: kafka/kafka-secret

Confluent REST API: erp/erp-secret

Confluent Control Center (Legacy): c3/c3-secret

ksqlDB: ksql/ksql-secret

Schema Registry: sr/sr-secret

Replicator: replicator/replicator-secret

Connect: connect/connect-secret

Create the LDAP user/password for a user who has a minimum of LDAP read-only permissions to allow Metadata Service (MDS) to query LDAP about other users. For example, you’d create a user mds with password Developer!

Create a user for the Admin REST service in LDAP and provide the username and password.

Configure RBAC with CFK

The comprehensive security tutorial walks you through an end-to-end setup of role-based access control (RBAC) for Confluent with CFK. We recommend you take the CustomResource spec and the steps outlined in the scenario as a starting point and customize for your environment.

Enable RBAC for Kafka

To configure and deploy Kafka with the Confluent RBAC:

Specify the MDS and its provider settings in your Kafka Custom Resource (CR):

    kind: Kafka
    spec:
      services:
        mds:                     --- [1]
          tokenKeyPair:          --- [2]
          provider:              --- [3]
            oauth:               --- [4]
            ldap:                --- [5]

[1] Required.

[2] Required. The token key pair to authenticate to MDS.

For details, see MDS authentication token keys.

[3] The identity provider settings. Specify oauth or ldap for RBAC.

[4] Required for the OAuth authentication.

For the full list of the OAuth settings, see OAuth configuration.

[5] Required for the LDAP authentication.

Following are example configuration settings for LDAP:

    ldap:
      address: ldap://ldap.confluent.svc.cluster.local:389
      authentication:
        type: simple
        simple:
          secretRef: credential
      configurations:
        groupNameAttribute: cn
        groupObjectClass: group
        groupMemberAttribute: member
        groupMemberAttributePattern: CN=(.*),DC=test,DC=com
        groupSearchBase: dc=test,dc=com
        userNameAttribute: cn
        userMemberOfAttributePattern: CN=(.*),DC=test,DC=com
        userObjectClass: organizationalRole
        userSearchBase: dc=test,dc=com

Specify the RBAC settings in your Kafka Custom Resource (CR):

    kind: Kafka
    spec:
      authorization:
        type: rbac                       --- [1]
        superUsers:                      --- [2]
      dependencies:
        kafkaRest:                       --- [3]
          authentication:
            type:                        --- [4]
            jaasConfig:                  --- [5]
            jaasConfigPassThrough:       --- [6]
            oauthSettings:               --- [7]
              tokenEndpointUri:
              expectedIssuer:
              jwksEndpointUri:
              subClaimName:
            bearer:                      --- [8]
              secretRef:                 --- [9]
              directoryPathInContainer:  --- [10]

[1] Required.

[2] Required. The super users to be given the admin privilege on the Kafka cluster.

These users have no access to resources in other Confluent Platform clusters unless they are also configured with specific role bindings on those clusters.

This list is in the User:<user-name> format. For example:

    superUsers:
      - "User:kafka"
      - "User:testadmin"

[3] Required. The REST client configuration for MDS when RBAC is enabled.

[4] Required. Specify bearer or oauth for RBAC.

[5] Kafka client-side JAAS configuration. For details, see Create server-side SASL/PLAIN credentials using JAAS config.

[6] Kafka client-side JAAS configuration. For details, see Create server-side SASL/PLAIN credentials using JAAS config pass-through.

[7] Required for the authentication type oauth ([4]).

For details on the settings, see OAuth configuration.

[8] Required for the authentication type bearer ([4]).

[9] or [10] Required.

When RBAC is enabled (spec.authorization.type: rbac), CFK always uses the Bearer authentication for Confluent components, ignoring the spec.authentication setting. It is not possible to set the component authentication type to mTLS when RBAC is enabled.

[9] The username and password are loaded through secretRef.

The expected key is bearer.txt.

The value for the key is:

    username=<username>
    password=<password>

[10] Provide the path where required credentials are injected by Vault. See [9] for the expected key and the value.
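As a minimal sketch of how the annotated fields fit together for the bearer case, the fragment below fills in the RBAC settings with illustrative values. The secret name mds-client is hypothetical, the confluent namespace and the kafka/kafka-secret credentials are the sample values used elsewhere in this document, and all other Kafka CR fields are omitted.

```yaml
# Hypothetical Secret that stores the bearer credentials under the expected key, bearer.txt.
apiVersion: v1
kind: Secret
metadata:
  name: mds-client            # assumed name, chosen for illustration
  namespace: confluent
type: Opaque
stringData:
  bearer.txt: |
    username=kafka
    password=kafka-secret
---
# Fragment of the Kafka CR showing only the RBAC-related settings.
kind: Kafka
spec:
  authorization:
    type: rbac
    superUsers:
      - "User:kafka"
      - "User:testadmin"
  dependencies:
    kafkaRest:
      authentication:
        type: bearer
        bearer:
          secretRef: mds-client   # refers to the Secret sketched above
```

If the credentials are injected by Vault instead, directoryPathInContainer would replace secretRef in the bearer section.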

Configure the Admin REST Class CR.

For the rest of the configuration details for the Admin REST Class, see Manage Confluent Admin REST Class for Confluent Platform Using Confluent for Kubernetes.

    kind: KafkaRestClass
    spec:
      kafkaRest:
        authentication:
          type:                          --- [1]
          bearer:                        --- [2]
            secretRef:                   --- [3]
            directoryPathInContainer:    --- [4]
          oauth:                         --- [5]
            secretRef:                   --- [6]
            directoryPathInContainer:    --- [7]
            configuration:               --- [8]
              tokenEndpointUri:

[1] Required. Set to oauth or bearer for RBAC.

[2] Required for the bearer authentication type ([1]).

[3] or [4] Specify only one setting.

[3] The username and password are loaded through secretRef.

The expected key is bearer.txt.

The value for the key is:

    username=<username>
    password=<password>

[4] Provide the path where required credentials are injected by Vault. See [3] for the expected key and the value.

[5] Required for the oauth authentication type ([1]).

[6] or [7] Specify only one of the two.

[6] The secret that contains the OIDC client ID and the client secret for authorization and token request to the identity provider.

Create the secret that contains two keys with their respective values, clientId and clientSecret, as follows:

    clientId=<client-id>
    clientSecret=<client-secret>

[7] The path where the required OIDC client ID and client secret are injected by Vault.

See Provide secrets for Confluent Platform component CR for providing the credential and required annotations when using Vault.

[8] OAuth settings. For the full list of the OAuth settings, see OAuth configuration.

When RBAC is enabled (spec.authorization.type: rbac), CFK ignores the spec.authentication setting. It is not possible to set the component authentication type to mTLS when RBAC is enabled.

If enabling the Confluent RBAC for a KRaft-based deployment, configure the KRaftController CR as described in Enable RBAC for KRaft controller.
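For the bearer case, an Admin REST Class CR could be filled in roughly as follows. This is a sketch under stated assumptions: the secret name rest-credential and the confluent namespace are illustrative, and the name default is chosen because components fall back to the Admin REST Class named default when kafkaRestClassRef is not set.

```yaml
apiVersion: platform.confluent.io/v1beta1
kind: KafkaRestClass
metadata:
  name: default                 # picked up by components that omit kafkaRestClassRef
  namespace: confluent          # assumed namespace
spec:
  kafkaRest:
    authentication:
      type: bearer
      bearer:
        # Hypothetical Secret containing the bearer.txt key with
        # username=<username> and password=<password>.
        secretRef: rest-credential
```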

MDS authentication token keys

When using the Bearer authentication for RBAC, to sign the token generated by the MDS server, you can use the PKCS#1 or PKCS#8 PEM key format. When using PKCS#8, the private key can be unencrypted or encrypted with a passphrase.

To provide the MDS token keys:

Create a PEM key pair as described in Create a PEM key pair for MDS.

If using an encrypted private key:

Create a passphrase file with the name mdsTokenKeyPassphrase.txt.

In the mdsTokenKeyPassphrase.txt file, add the key-value pair with the mdsTokenKeyPassphrase key and the passphrase that the private key was encrypted with:

    mdsTokenKeyPassphrase=<passphrase>

Add the public key and the token key pair to a secret or inject it in a directory path in the container using Vault.

To use an encrypted private key, add the passphrase value to the secret or the directory path in the container, as well.

An example command to create a secret with an unencrypted private key:

    kubectl create secret generic mds-token \
      --from-file=mdsPublicKey.pem=mds-publickey.txt \
      --from-file=mdsTokenKeyPair.pem=mds-tokenkeypair.txt \
      --namespace confluent

An example command to create a secret with an encrypted private key:

    kubectl create secret generic mds-token \
      --from-file=mdsPublicKey.pem=mds-publickey.txt \
      --from-file=mdsTokenKeyPair.pem=mds-tokenkeypair.txt \
      --from-file=mdsTokenKeyPassphrase.txt=mdsTokenKeyPassphrase.txt \
      --namespace confluent

In the Kafka CR, specify the token key pair to authenticate to MDS:

    kind: Kafka
    spec:
      services:
        mds:
          tokenKeyPair:
            secretRef:                   --- [1]
            directoryPathInContainer:    --- [2]
            encryptedTokenKey:           --- [3]

[1] The name of the Kubernetes secret that contains the public key and the token key pair to sign the token generated by the MDS server.

[2] The directory path in the container where the required public key and the token key pair are injected by Vault.

[3] Optional. Set to true to use an encrypted private key. The default value is false.
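Putting the pieces together for an encrypted private key, the Kafka CR fragment might look like the sketch below. It assumes the mds-token secret was created with the passphrase file included, as in the second example command above. Only the token key pair settings are shown.

```yaml
kind: Kafka
spec:
  services:
    mds:
      tokenKeyPair:
        # Secret from the example command; expected to hold mdsPublicKey.pem,
        # mdsTokenKeyPair.pem, and mdsTokenKeyPassphrase.txt.
        secretRef: mds-token
        # Tells CFK that the private key is passphrase-protected.
        encryptedTokenKey: true
```

For an unencrypted key, encryptedTokenKey can be omitted because it defaults to false.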

Enable RBAC for KRaft controller

To enable the Confluent RBAC for a KRaft-based deployment:

After configuring MDS and Kafka as shown above in Enable RBAC for Kafka, configure the KRaftController CR.

For KRaft-based Kafka, the KRaftController CR must include the dependencies.mdsKafkaCluster section.

    kind: KRaftController
    spec:
      dependencies:
        mdsKafkaCluster:                   --- [1]
          bootstrapEndpoint:               --- [2]
          authentication:                  --- [3]
            type:                          --- [4]
            jaasConfig:                    --- [5]
            jaasConfigPassThrough:         --- [6]
            oauthbearer:                   --- [7]
              secretRef:                   --- [8]
              directoryPathInContainer:    --- [9]
            oauthSettings:                 --- [10]
          tls:                             --- [11]
            enabled:                       --- [12]

[1] Required.

[2] Required. Specify the MDS Kafka bootstrap endpoint.

[3] Specify the client-side authentication for the MDS Kafka cluster.

[4] Required. Specify the client-side authentication type for the MDS Kafka cluster. Valid options are plain, oauthbearer, mtls, and oauth.

[5] When the authentication type (type) is set to plain, specify the credential using JAAS configuration. For details, see Create client-side SASL/PLAIN credentials using JAAS config.

[6] When the authentication type (type) is set to plain, specify the credential using jaasConfigPassThrough. For details, see Create client-side SASL/PLAIN credentials using JAAS config pass-through.

[7] Use the OAuth Bearer token to provide principals to authenticate with MDS Kafka.

[8] The OAuth username and password are loaded through secretRef. The expected key is bearer.txt, and the value for the key is:

    username=<username>
    password=<password>

An example command to create a secret to use for this property:

    kubectl create secret generic oauth-client \
      --from-file=bearer.txt=/some/path/bearer.txt \
      --namespace confluent

[9] The directory in the container where the expected Bearer credentials are injected by Vault. See above ([8]) for the expected format.

See Provide secrets for Confluent Platform component CR for providing the credential and required annotations when using Vault.

[10] Required for the oauth authentication type.

For the full list of the OAuth settings, see OAuth configuration.

[11] The client-side TLS setting for the MDS Kafka cluster. For details, see Client-side mTLS authentication for Kafka.

[12] Required for mTLS authentication. Set to true.
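A filled-in KRaftController fragment for the oauthbearer type might look like the following sketch. The bootstrap endpoint value is an assumption made for illustration (substitute the bootstrap endpoint of your own MDS Kafka cluster), and oauth-client is the secret created by the example command for [8].

```yaml
kind: KRaftController
spec:
  dependencies:
    mdsKafkaCluster:
      # Assumed internal bootstrap address; not taken from this document.
      bootstrapEndpoint: kafka.confluent.svc.cluster.local:9071
      authentication:
        type: oauthbearer
        oauthbearer:
          # Secret holding bearer.txt with username=<username> and password=<password>.
          secretRef: oauth-client
      tls:
        # Shown enabled on the assumption that the MDS Kafka listener uses TLS.
        enabled: true
```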

Enable RBAC for other Confluent Platform components

To configure and deploy other non-Kafka components with the Confluent RBAC:

Specify the settings in the component CR:

    spec:
      authorization:
        type: rbac                         --- [1]
        kafkaRestClassRef:                 --- [2]
          name: default
      dependencies:
        mds:
          endpoint:                        --- [3]
          tokenKeyPair:                    --- [4]
            secretRef:
            directoryPathInContainer:
          authentication:
            type:                          --- [5]
            oauth:                         --- [6]
            bearer:                        --- [7]
              secretRef:                   --- [8]
              directoryPathInContainer:    --- [9]

[1] Required for RBAC.

[2] If kafkaRestClassRef is not configured, the kafkaRestClass with the name, default, in the current namespace is used.

[3] Required. MDS endpoint.

[4] Required. The token key pair and the public key to authenticate to the MDS. Use secretRef or directoryPathInContainer to specify.

You need to add the public key and the token key pair to the secret or directoryPathInContainer. For details, see Create a PEM key pair for MDS.

An example command to create a secret is:

    kubectl create secret generic mds-token \
      --from-file=mdsPublicKey.pem=mds-publickey.txt \
      --from-file=mdsTokenKeyPair.pem=mds-tokenkeypair.txt \
      --namespace confluent

[5] Required. Set to bearer or oauth.

[6] Required for the oauth authentication ([5]).

For the full list of the OAuth settings, see OAuth configuration.

[7] Required for the bearer authentication ([5]).

[8] or [9] Required.

When RBAC is enabled (spec.authorization.type: rbac), CFK always uses the Bearer authentication for Confluent components, ignoring the spec.authentication setting. It is not possible to set the component authentication type to mTLS when RBAC is enabled.

[8] The username and password are loaded through secretRef.

The expected key is bearer.txt.

The value for the key is:

    username=<username>
    password=<password>

[9] Provide the path where required credentials are injected by Vault. See [8] for the expected key and the value.

Use an existing Admin REST Class or create a new Admin REST Class CR as described in the previous section.
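To illustrate the component settings with concrete values, here is a sketch for Schema Registry using bearer authentication. The MDS endpoint URL and the secret name sr-mds-client are assumptions made for this example. The mds-token secret is the one created by the example command above, and the sr principal is one of the default user principals listed earlier.

```yaml
kind: SchemaRegistry
spec:
  authorization:
    type: rbac
    kafkaRestClassRef:
      name: default               # the Admin REST Class used for role binding creation
  dependencies:
    mds:
      # Assumed MDS address; replace with the endpoint of your MDS.
      endpoint: https://kafka.confluent.svc.cluster.local:8090
      tokenKeyPair:
        secretRef: mds-token
      authentication:
        type: bearer
        bearer:
          # Hypothetical Secret whose bearer.txt key holds the credentials
          # of the sr principal (username=sr, password=sr-secret).
          secretRef: sr-mds-client
```

The same shape applies to the other non-Kafka components, with the kind and the credential secret changed accordingly.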

Migrate LDAP-based RBAC to OAuth-based RBAC

Migrate RBAC from using LDAP to using both LDAP and OAuth

This section describes the steps to upgrade a Confluent Platform deployment configured with LDAP-based RBAC to LDAP and OAuth-based RBAC.

To migrate your Confluent Platform deployment to use OAuth, the Confluent Platform version must be 7.7.

Upgrading the Confluent Platform version and migrating to OAuth simultaneously is not supported.

Even though this upgrade can be done in one step, as described in this section, we recommend a two-step migration: MDS first, then the rest of the components. This reduces failed component restarts.

To migrate an existing Confluent Platform deployment from LDAP to LDAP and OAuth:

Upgrade the MDS with the required OAuth settings as described in Enable RBAC for Kafka and apply the CR with the kubectl apply command.

Following is a sample snippet of a Kafka CR with LDAP and OAuth:

    kind: Kafka
    spec:
      services:
        mds:
          provider:
            ldap:
              address: ldaps://ldap.operator.svc.cluster.local:636
              authentication:
                type: simple
                simple:
                  secretRef: credential
              tls:
                enabled: true
              configurations:
            oauth:
              configurations:
      dependencies:
        kafkaRest:
          authentication:
            type: oauth
            jaasConfig:
              secretRef: oauth-secret
            oauthSettings:

After the Kafka roll is complete, upgrade the rest of the Confluent Platform components.

Add the following annotation to the Schema Registry, Connect, and Confluent Control Center (Legacy) CRs:

    kind: <component>
    metadata:
      annotations:
        platform.confluent.io/disable-internal-rolebindings-creation: "true"

Add the OAuth settings to the rest of the Confluent Platform components as described in Enable RBAC for KRaft controller and Enable RBAC for other Confluent Platform components and apply the CRs with the kubectl apply command.

The following are sample snippets of the relevant settings in the component CRs.

    kind: KRaftController
    spec:
      dependencies:
        mdsKafkaCluster:
          bootstrapEndpoint:
          authentication:
            type: oauth
            jaasConfig:
              secretRef:
            oauthSettings:
              tokenEndpointUri:

    kind: KafkaRestClass
    spec:
      kafkaRest:
        authentication:
          type: oauth
          oauth:
            secretRef:
            configuration:

    kind: SchemaRegistry
    spec:
      dependencies:
        mds:
          authentication:
            type: oauth
            oauth:
              secretRef:
              configuration:

If you have existing connectors, add the following to the Connect CR to avoid possible downtime:

    kind: Connect
    spec:
      configOverrides:
        server:
          - producer.sasl.login.callback.handler.class=org.apache.kafka.common.security.oauthbearer.secured.OAuthBearerLoginCallbackHandler
          - consumer.sasl.login.callback.handler.class=org.apache.kafka.common.security.oauthbearer.secured.OAuthBearerLoginCallbackHandler
          - admin.sasl.login.callback.handler.class=org.apache.kafka.common.security.oauthbearer.secured.OAuthBearerLoginCallbackHandler

Log into Control Center (Legacy) and check if you can see Kafka, Schema Registry, and Connect.

Migrate RBAC from using LDAP and OAuth to using OAuth

After successful validation, you can remove LDAP from the RBAC configuration and only use OAuth in your deployment.

Migrate the clients using LDAP credentials to OAuth.

Remove the LDAP authentication settings. See Enable RBAC for Kafka for the LDAP settings you can remove.

Automated creation of role bindings for Confluent Platform component principals

CFK automatically creates all required role bindings for Confluent Platform components as ConfluentRoleBinding custom resources (CRs).

Review the role bindings created by CFK:

kubectl get confluentrolebinding

Grant role to Kafka user to access Schema Registry

Use the following ConfluentRolebinding CR to create the required role binding to access Schema Registry:

    apiVersion: platform.confluent.io/v1beta1
    kind: ConfluentRolebinding
    metadata:
      name: internal-schemaregistry-schema-validation
      namespace: <namespace>
    spec:
      principal:
        name: <user-id>
        type: user
      clustersScopeByIds:
        schemaRegistryClusterId: <schema-registry-group-id>
        kafkaClusterId: <kafka-cluster-id>
      resourcePatterns:
        - name: "*"
          patternType: LITERAL
          resourceType: Subject
      role: DeveloperRead

Grant roles to a Confluent Control Center (Legacy) user to administer Confluent Platform

Control Center (Legacy) users require separate roles for each Confluent Platform component and resource they wish to view and administer in the Control Center (Legacy) UI. Grant explicit permissions to the users as shown below.

In the following example, the testadmin principal is used as a Control Center (Legacy) UI user.

Grant permission to view and administer Confluent Platform components

The rolebinding CRs in the examples GitHub repo specify the permissions needed in CFK. Create the rolebindings with the following command:

    kubectl apply -f \
      https://raw.githubusercontent.com/confluentinc/confluent-kubernetes-examples/master/security/production-secure-deploy/controlcenter-testadmin-rolebindings.yaml

Check the roles created:

kubectl get confluentrolebinding
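For orientation, a role binding of the kind that file contains might look like the sketch below. This is an assumption about its general shape, not a copy of the file. The resource name testadmin-rb, the confluent namespace, and the ClusterAdmin role are illustrative. Only the testadmin principal comes from this document.

```yaml
apiVersion: platform.confluent.io/v1beta1
kind: ConfluentRolebinding
metadata:
  name: testadmin-rb            # hypothetical resource name
  namespace: confluent          # assumed namespace
spec:
  principal:
    type: user
    name: testadmin             # the Control Center (Legacy) UI user from this example
  role: ClusterAdmin            # illustrative role; grant only what the user needs
```

Additional bindings for Schema Registry, Connect, or ksqlDB would add a clustersScopeByIds section, as in the Schema Registry role binding shown earlier.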

Troubleshooting: Verify MDS configuration

Log into MDS to verify the correct configuration and to get the Kafka cluster ID. You need the Kafka cluster ID for component role bindings.

Replace https://<mds_endpoint> in the below commands with the value you set in the spec.dependencies.mds.endpoint in the Kafka CR.

Log into MDS as the Kafka super user as below:

    confluent login \
      --url https://<mds_endpoint> \
      --ca-cert-path <path-to-cacerts.pem>

You need to pass the --ca-cert-path flag if:

You have configured MDS to serve HTTPS traffic (kafka.spec.dependencies.mds.tls.enabled: true).

The CA used to issue the MDS certificates is not trusted by the system where you are running these commands.

Provide the Kafka username and password when prompted, in this example, kafka and kafka-secret.

You get a response to confirm a successful login.

Verify that the advertised listeners are correctly configured using the following command:

    curl -ik \
      -u '<kafka-user>:<kafka-user-password>' \
      https://<mds_endpoint>/security/1.0/activenodes/https

Get the Kafka cluster ID using one of the following commands:

    confluent cluster describe --url https://<mds_endpoint>

    curl -ik \
      https://<mds_endpoint>/v1/metadata/id