Configure Security for Confluent Gateway using Confluent for Kubernetes

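This section describes the following security configurations for Confluent Gateway deployed with Confluent for Kubernetes (CFK): security best practices, authentication (identity passthrough and authentication swapping), TLS/SSL, passwords, and secret stores.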

Security best practices and recommendations

Use separate Gateway-to-Broker credentials for each client

Confluent Gateway deployments typically involve two authentication layers:

Client → Gateway (SASL/PLAIN)

Gateway → Broker (SASL/PLAIN or SASL/OAUTHBEARER), with secrets stored in AWS Secrets Manager, HashiCorp Vault, Azure Key Vault, or a local directory.

The following are best practice recommendations:

Do not map multiple client users to a single Gateway-to-Broker credential.

Each client should ideally have its own Gateway-to-Broker SASL credential.

Avoid static “shared” credentials across multiple clients.
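As a rough way to audit these recommendations, the sketch below scans a directory laid out like the file-based secret store described under "Create a file-based secret store configuration" (one file per incoming client, containing the broker user, a / separator, and the broker secret) and reports any broker credential that is mapped to more than one client. The directory, client names, and credentials are hypothetical, and the check is only an illustration, not part of Confluent Gateway.

```shell
# Hypothetical scratch directory standing in for the secret store directory.
secrets_dir="$(mktemp -d)"

# One file per incoming client: <broker-user>/<broker-secret>.
printf '%s' 'sales-cc-app/sales-cc-secret-key'     > "${secrets_dir}/sales-cp-app"
printf '%s' 'billing-cc-app/billing-cc-secret-key' > "${secrets_dir}/billing-cp-app"
# This client reuses the sales credential, which the recommendations advise against.
printf '%s' 'sales-cc-app/sales-cc-secret-key'     > "${secrets_dir}/reports-cp-app"

# Collect the broker user from every file and keep only the duplicates.
shared="$(for f in "${secrets_dir}"/*; do
  entry="$(cat "$f")"
  printf '%s\n' "${entry%%/*}"
done | sort | uniq -d)"

if [ -n "${shared}" ]; then
  echo "Shared Gateway-to-Broker credentials: ${shared}"
fi
```

An empty result means every client maps to its own Gateway-to-Broker credential.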

Authentication configuration

Confluent Gateway supports two modes for authenticating clients and forwarding traffic to Kafka clusters: identity passthrough and authentication swapping.

Identity passthrough: Client credentials are forwarded directly to Kafka clusters without modification.

Use identity passthrough for environments where the authentication method is uniform and Confluent Gateway transparency is sufficient.

With identity passthrough, all SASL authentication mechanisms, such as SASL/PLAIN or SASL/OAUTHBEARER, are supported.

Authentication swapping: Client credentials are transformed into different credentials before connecting to Kafka clusters.

When enabled, Confluent Gateway authenticates incoming clients, optionally using a different authentication mechanism than the backing Kafka cluster. Confluent Gateway then swaps the client identity and credentials as it forwards requests to brokers.

With authentication swapping:

SASL/PLAIN or mTLS authentication is supported for client to Confluent Gateway authentication.

SASL/PLAIN or SASL/OAUTHBEARER authentication is supported for Confluent Gateway to Kafka cluster authentication.

Use authentication swapping when you need to:

Migrate clients between source and destination clusters that have different authentication requirements, without modifying client applications.

Share cluster access with external clients while maintaining your internal authentication standards, even when you cannot enforce those standards directly on the client side.

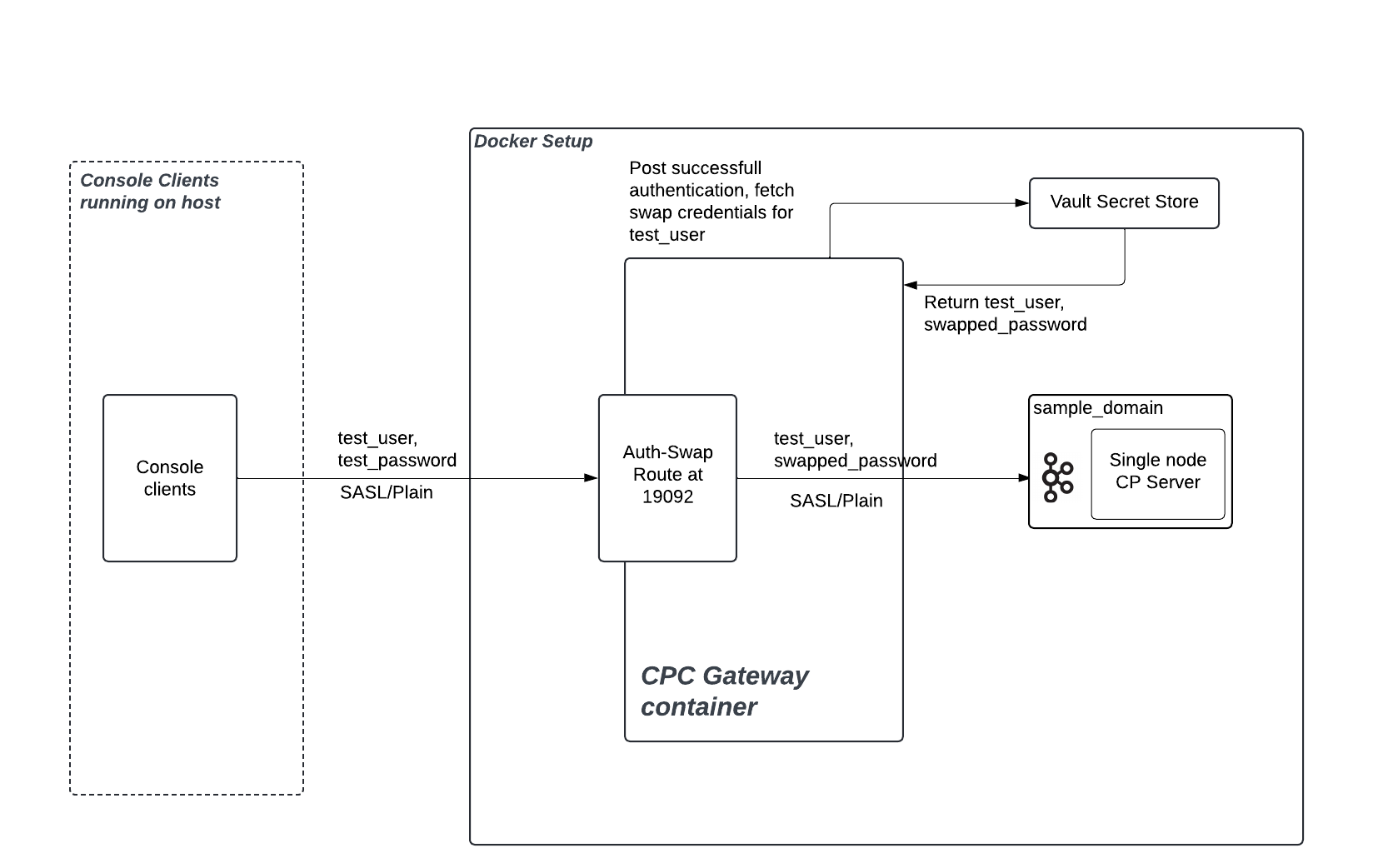

The following diagram shows a sample authentication flow for authentication swapping:

Some considerations when selecting the authentication mode in Confluent Gateway are:

You can configure either identity passthrough or authentication swapping for an individual route.

You cannot configure multiple authentication mechanisms for the same route while using authentication swapping.

Identity passthrough cannot be used for mTLS due to TLS termination at Confluent Gateway; currently, authentication swapping is mandatory for mTLS clients.

Authentication swapping requires more setup (identity stores, KMS, mappings) but adds flexibility and supports more complex enterprise scenarios.

Authentication swapping with multi-cluster streaming domains requires identical user identities and RBAC policies across all clusters. If clusters have different authentication systems or user permissions, use separate streaming domains instead.

Configure identity passthrough

When a route is configured for identity passthrough, Confluent Gateway forwards unaltered authentication information (no interception) directly to Kafka brokers, and it does not itself authenticate incoming clients. The brokers perform authentication and authorization checks.

Identity passthrough is supported for SASL authentication mechanisms, such as SASL/PLAIN or SASL/OAUTHBEARER.

To configure a route for identity passthrough in CFK:

kind: Gateway
spec:
  routes:
    - name:
      security:
        auth: passthrough                 --- [1]
        client:
          authentication:
            mtls:
              sslClientAuthentication:    --- [2]
          tls:                            --- [3]

[1] The authentication mode for the route. Set to passthrough to enable identity passthrough.

[2] If using TLS, set to required to require clients to present their certificates. Set to requested for optional certificate presentation.

[3] The TLS configuration for the client to Confluent Gateway communication. See TLS/SSL configuration for details.

Sample configurations for identity passthrough

TLS for Gateway to Client communication example:

kind: Gateway
spec:
  routes:
    - name:
      security:
        auth: passthrough
        client:
          authentication:
            type: PLAIN
          tls:
            secretRef: my-tls-secret

TLS for Gateway to Client communication example with certificates required:

kind: Gateway
spec:
  routes:
    - name:
      security:
        auth: passthrough
        client:
          authentication:
            type: PLAIN
            mtls:
              sslClientAuthentication: "required"
          tls:
            secretRef: my-tls-secret

Configure authentication swapping

When a route is configured for authentication swapping, Confluent Gateway authenticates incoming clients, optionally using a different authentication mechanism than the backing Kafka cluster. Confluent Gateway then swaps the client identity and credentials as it forwards requests to Kafka brokers.

Authentication swapping requires an identity mapping, which in practice means integrating with an external Key Management System (KMS) or secret store.

The following secret stores are supported for fetching the credentials:

HashiCorp Vault

AWS Secrets Manager

Azure Key Vault

To configure a route for authentication swapping in CFK:

kind: Gateway
spec:
  routes:
    - name:                 --- [1]
      security:
        auth: swap          --- [2]
        client:             --- [3]
        secretStore:        --- [4]
        cluster:            --- [5]

[1] The unique name for the route.

[2] The authentication mode for the route. Set to swap to enable authentication swapping.

[3] How clients authenticate to Confluent Gateway. See Client authentication for authentication swapping.

[4] Reference to a secret store for exchanging credentials. See Secret store configuration.

[5] How Confluent Gateway authenticates to the Kafka cluster after swapping. See Cluster authentication for authentication swapping.

Client authentication for authentication swapping

Configure how clients authenticate to Confluent Gateway for authentication swapping.

You can use SASL/PLAIN or mTLS authentication methods.

SASL authentication

kind: Gateway
spec:
  routes:
    - name:
      security:
        auth: swap
        client:
          authentication:
            type:                          --- [1]
            jaasConfig:                    --- [2]
              secretRef:                   --- [3]
            jaasConfigPassthrough:         --- [4]
              secretRef:                   --- [5]
              directoryPathInContainer:    --- [6]
            connectionsMaxReauthMs:        --- [7]

[1] The SASL mechanism to use. Set to plain for SASL/PLAIN authentication.

[2] [4] SASL credentials. Specify either jaasConfig or jaasConfigPassthrough.

[3] The Kubernetes secret that holds the credentials.

See Create client-side SASL/PLAIN credentials using JAAS config for creating the JAAS configuration secret.

[5] The Kubernetes secret that holds the credentials.

See Create client-side SASL/PLAIN credentials using JAAS config pass-through for creating the JAAS Passthrough credentials.

[6] The directory path in the container that has the JAAS configuration file.

See Create client-side SASL/PLAIN credentials using JAAS config pass-through for creating the JAAS Passthrough credentials.

[7] Maximum time in milliseconds before requiring client reauthentication. If the client does not reauthenticate within this time frame, the connection is closed. Default is 0 (infinite; no reauthentication is required).

mTLS Authentication

kind: Gateway
spec:
  routes:
    - name:
      security:
        auth: swap
        client:
          authentication:
            type:                         --- [1]
            mtls:
              principalMappingRules:      --- [2]
              sslClientAuthentication:    --- [3]
          tls:
            secretRef:

[1] The client authentication mechanism to use. Set to mtls for mTLS authentication.

[2] The pattern to read the principal name from the certificates. Required for authentication swapping with mTLS authentication.

[3] The SSL client authentication mode. Set to "required" to enable mandatory mTLS authentication from the client side and to enforce certificate presentation. Valid values are "requested" and "required".

Cluster authentication for authentication swapping

Configure how Confluent Gateway authenticates to the Kafka cluster for authentication swapping.

You can use SASL/PLAIN or SASL/OAUTHBEARER authentication methods.

SASL authentication

kind: Gateway
spec:
  routes:
    - name:
      security:
        auth: swap
        cluster:
          authentication:
            type:                          --- [1]
            jaasConfigPassthrough:         --- [2]
              secretRef:                   --- [3]
              directoryPathInContainer:    --- [4]
            oauthSettings:                 --- [5]
              tokenEndpointUri:            --- [6]

[1] The SASL mechanism to use. Set to plain for SASL/PLAIN authentication, or set to oauthbearer for SASL/OAUTHBEARER authentication.

[2] Required for SASL/PLAIN authentication. Only jaasConfigPassthrough is supported for cluster authentication.

[3] The Kubernetes secret that holds the credentials. Specify one of [3] or [4].

The file content of the secret should be in the following format:

org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required clientId="%s" clientSecret="%s";

See Create client-side SASL/PLAIN credentials using JAAS config pass-through for creating the JAAS Passthrough credentials.

[4] The directory path in the container that contains the JAAS Passthrough configuration file.

See Create client-side SASL/PLAIN credentials using JAAS config pass-through for creating the JAAS Passthrough credentials.

[5] Required only if cluster.authentication.type=oauthbearer ([1]).

[6] The URI for the OAuth token endpoint.
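The JAAS template above contains two %s placeholders. As a rough illustration, assuming they receive the client ID and client secret fetched from the secret store for the connecting client (an assumption about the substitution, with hypothetical values), printf can show what the rendered line would look like:

```shell
# Template in the format documented for the jaasConfigPassthrough secret.
jaas_template='org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required clientId="%s" clientSecret="%s";'

# Hypothetical swapped credentials, as they might be returned by the secret store.
client_id='sales-cc-app'
client_secret='sales-cc-secret-key'

# The template is deliberately used as the printf format string.
rendered="$(printf "${jaas_template}" "${client_id}" "${client_secret}")"

echo "${rendered}"
```

Keeping the placeholders in the stored template, rather than real credentials, lets one template serve every client mapped through the secret store.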

Sample configurations for authentication swapping

SASL/PLAIN to SASL/OAUTHBEARER example:

kind: Gateway
spec:
  routes:
    - name: route-name
      security:
        auth: swap
        client:
          authentication:
            type: plain
            jaasConfig:
              secretRef: plain-secrets
            connectionsMaxReauthMs:
          tls:
        secretStore: "oauth-secrets"
        cluster:
          authentication:
            type: oauthbearer
            jaasConfigPassthrough:
              secretRef: oauth-jaas-template
            oauthSettings:
              tokenEndpointUri: "https://idp.mycompany.io:8080/realms/cp/protocol/openid-connect/token"

mTLS to SASL/OAUTHBEARER example:

kind: Gateway
spec:
  routes:
    - name: route-name
      security:
        auth: swap
        client:
          authentication:
            type: mtls
            mtls:
              principalMappingRules: ["RULE:^CN=([a-zA-Z0-9._-]+),OU=.*$/$1/", "RULE:^UID=([a-zA-Z0-9._-]+),.*$/$1/","DEFAULT"]
              sslClientAuthentication: "required"
          tls:
            secretRef: tls-secrets
        secretStore: "oauth-secrets"
        cluster:
          authentication:
            type: oauthbearer
            jaasConfigPassthrough:
              secretRef: oauth-jaas-template
            oauthSettings:
              tokenEndpointUri: "https://idp.mycompany.io:8080/realms/cp/protocol/openid-connect/token"
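To see what the first principal mapping rule in the mTLS sample extracts, the sketch below applies its pattern and replacement to a certificate subject with sed. It assumes the rules follow the RULE:pattern/replacement/ convention used by Kafka's ssl.principal.mapping.rules; the subject string is hypothetical, and sed only approximates how Confluent Gateway evaluates the rule.

```shell
# Hypothetical certificate subject presented by an mTLS client.
subject='CN=sales-cp-app,OU=Sales,O=Example,C=US'

# Pattern and replacement taken from RULE:^CN=([a-zA-Z0-9._-]+),OU=.*$/$1/
# ($1 in the rule corresponds to \1 in sed).
principal="$(printf '%s\n' "${subject}" | sed -E 's/^CN=([a-zA-Z0-9._-]+),OU=.*$/\1/')"

echo "${principal}"
```

A subject that does not match the pattern passes through sed unchanged, which is a convenient way to spot certificates that would fall through to a later rule.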

TLS/SSL configuration

The following TLS/SSL configuration is supported for streaming domain configuration (streamingDomains.kafkaCluster.bootstrapServers.tls) and route configuration (routes.security.client.tls) with CFK.

tls:
  ignoreTrustStoreConfig:        --- [1]
  jksPassword:
    secretRef:                   --- [2]
  secretRef:                     --- [3]
  directoryPathInContainer:      --- [4]

[1] Skip certificate validation (not recommended for production). Essentially, setting this to true trusts all certificates.

Important

Ensure that ignoreTrustStoreConfig: false is set in all production configurations. Setting this to true disables TLS certificate validation, introducing critical security risks, such as:

Exposure to man-in-the-middle (MITM) attacks

Possibility of certificate spoofing

Compromise of encrypted traffic

Risk to data confidentiality

[2] Password that CFK uses for both the JKS keystore and truststore. The same password is used as the keystore’s key password.

[3] [4] Use either secretRef or directoryPathInContainer to provide the TLS/SSL certificates.

An example command to create a Kubernetes secret with the JKS type TLS/SSL certificates:

kubectl create secret generic kafka-tls \
  --from-file=keystore.jks=keystore.jks \
  --from-file=truststore.jks=truststore.jks \
  --from-file=jksPassword.txt=jksPassword.txt

For details on how to create the TLS/SSL certificates, see Provide TLS keys and certificates in PEM format and Provide TLS keys and certificates in Java KeyStore format.

Password configuration

Confluent Gateway supports file-based and inline password configurations. Provide only one of the two.

password:
  file:     --- [1]
  value:    --- [2]

[1] The path to the password file.

[2] The inline password value.

Secret store configuration

Confluent Gateway uses secret stores, such as AWS Secrets Manager, Azure Key Vault, HashiCorp Vault, or a file, to securely manage authentication credentials and sensitive information. This setup is critical for several key operations:

Storing Confluent Gateway to Kafka broker credentials

Supporting authentication swapping scenarios

In cases where Confluent Gateway translates or “swaps” authentication (such as from mTLS clients to SASL/OAUTHBEARER brokers), secret stores are needed to store and fetch the credentials used in the swap. This allows each client connection to use its mapped broker credential, enhancing security and enabling fine-grained access control.

Interaction with these secret stores should always occur over TLS for confidentiality and integrity.

As a security best practice, configure Confluent Gateway to assume IAM roles that follow the principle of least privilege. Avoid static IAM user credentials, which risk exposing sensitive credentials.

Ensure proper role trust policies are in place and limit permissions to only what Confluent Gateway needs. For example, in AWS:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "secretsmanager:GetSecretValue"
      ],
      "Resource": [
        "arn:aws:secretsmanager:us-east-1:123456789012:secret:gateway/*",
        "arn:aws:secretsmanager:us-east-1:123456789012:secret:confluent/*"
      ],
      "Condition": {
        "StringEquals": {
          "aws:ResourceTag/Environment": "production"
        }
      }
    }
  ]
}

Configure a secret store

Add the following configurations to the Confluent Gateway CR to configure a secret store:

kind: Gateway
spec:
  secretStores:
    - name:                    --- [1]
      provider:
        type:                  --- [2]
        configSecretRef:       --- [3]
        certificateRef:        --- [4]

[1] A unique name for the secret store.

[2] The type of the secret store. Set to AWS (AWS Secrets Manager), Azure (Azure Key Vault), Vault (HashiCorp Vault), or File (file).

[3] Required. The name of the Kubernetes secret that contains the secret store configuration.

[4] Optional. The name of the Kubernetes secret that contains the certificates to be volume mounted in the Gateway pod.

The following sections provide examples for creating secret store configurations to be specified in the gateway.spec.secretStores.provider.configSecretRef field.

Create a secret store configuration for HashiCorp Vault

Note that connecting to HashiCorp Vault using a certificate is not supported in Confluent Gateway.

The following fields are supported for HashiCorp Vault configuration:

address: Required. Sets the address (URL) of the Vault server instance to connect to.

authMethod: Required. The authentication method to use. You can set this to Token (the default value) or leave it empty.

authToken: The authentication token for the Vault server to connect using the authToken method.

path: Required. The path to the secret in Vault where the data is stored.

prefixPath: The prefix path to the secret store.

separator: The separator for the secret store. The default value is :.

role: The role to use to connect to the Vault server.

secret: The secret to use to connect to the Vault server.

username: The username to use to connect to the Vault server.

password: The password to use to connect to the Vault server.

An example command to create a Kubernetes secret for HashiCorp Vault using an authentication token configuration:

kubectl create secret generic vault-config \
  --from-literal=address="https://vault.prod.company.com" \
  --from-literal=authMethod="Token" \
  --from-literal=authToken="<auth-token>" \
  --from-literal=path="/secrets/prod/gateway/swap-creds" \
  --from-literal=prefixPath="" \
  --from-literal=separator=":"

An example command to create a Kubernetes secret for HashiCorp Vault using an AppRole configuration:

kubectl create secret generic vault-config \
  --from-literal=address="https://vault.prod.company.com" \
  --from-literal=authMethod="AppRole" \
  --from-literal=role="<app-role>" \
  --from-literal=secret="<app-role-secret>" \
  --from-literal=path="/secrets/prod/gateway/swap-creds" \
  --from-literal=prefixPath="" \
  --from-literal=separator=":"

An example command to create a Kubernetes secret for HashiCorp Vault using a username and password configuration:

kubectl create secret generic vault-config \
  --from-literal=address="https://vault.prod.company.com" \
  --from-literal=authMethod="UserPass" \
  --from-literal=username="<username>" \
  --from-literal=password="<password>" \
  --from-literal=path="/secrets/prod/gateway/swap-creds" \
  --from-literal=prefixPath="" \
  --from-literal=separator=":"

Create a secret store configuration for AWS Secrets Manager

If the environment (for example, an EC2 instance) has an IAM Role attached with sufficient permissions, you do not need to specify accessKey or secretKey. The provider automatically assumes the attached IAM Role through the default AWS credential provider chain.

The following fields are supported for AWS Secrets Manager configuration:

region of the AWS Secrets Manager.

accessKey of the AWS IAM Access Key ID. Only required when authenticating with IAM user credentials.

secretKey of the AWS IAM Secret Key corresponding to the accessKey. Only required when authenticating with IAM user credentials.

separator of the AWS Secrets Manager. Defaults to :.

An example command to create a Kubernetes secret for AWS Secrets Manager using IAM user credentials (omit accessKey and secretKey when an IAM Role is attached to the environment):

kubectl create secret generic aws-us-west-2-secret-mgr-config \
  --from-literal=region="us-west-2" \
  --from-literal=accessKey="<ACCESS_KEY>" \
  --from-literal=secretKey="<SECRET_KEY>" \
  --from-literal=separator="/"

Create a secret store configuration for Azure Key Vault

The following fields are supported for Azure Key Vault configuration:

vaultUrl of the Azure Key Vault to connect to.

credentialType of the Azure Key Vault.

tenantId of the Azure Key Vault.

clientId of the Azure Key Vault.

clientSecret of the Azure Key Vault.

prefixPath of the Azure Key Vault. The prefix path to the secret store. Defaults to an empty string.

username of the Azure Key Vault.

password of the Azure Key Vault.

certificateType of the Azure Key Vault. You can connect to Azure Key Vault using the Privacy-Enhanced Mail (PEM) or Personal Information Exchange (PFX) format certificates. Set to PFX for PFX certificates or PEM for PEM certificates.

certificatePath of the Azure Key Vault.

certificatePfxPassword of the Azure Key Vault.

certificateSendChain of the Azure Key Vault.

separator: The character or string used to split authentication data within the retrieved secret. Defaults to :.

An example command to create a Kubernetes secret for Azure Key Vault using Client ID and Secret configuration:

kubectl create secret generic azure-eu-vault-config \
  --from-literal=vaultUrl="https://authswap.vault.azure.net/" \
  --from-literal=credentialType="ClientCertificate" \
  --from-literal=tenantId="<TENANT_ID>" \
  --from-literal=clientId="<CLIENT_ID>" \
  --from-literal=certificateType="PFX" \
  --from-literal=certificatePfxPassword="password" \
  --from-literal=separator="/"

An example command to create a Kubernetes secret for Azure Key Vault using Username and Password configuration:

kubectl create secret generic azure-eu-vault-config \
  --from-literal=vaultUrl="https://authswap.vault.azure.net/" \
  --from-literal=credentialType="UsernamePassword" \
  --from-literal=tenantId="<TENANT_ID>" \
  --from-literal=clientId="<CLIENT_ID>" \
  --from-literal=username="<USERNAME>" \
  --from-literal=password="<PASSWORD>" \
  --from-literal=separator="/"

An example command to create a Kubernetes secret for Azure Key Vault using Client Certificate configuration:

kubectl create secret generic azure-keyvault \
  --from-literal=vaultUrl="https://authswap.vault.azure.net/" \
  --from-literal=credentialType="ClientCertificate" \
  --from-literal=tenantId="<TENANT_ID>" \
  --from-literal=clientId="<CLIENT_ID>" \
  --from-literal=certificateType="PEM" \
  --from-literal=certificatePath="/opt/ssl/client-cert.pem" \
  --from-literal=separator="/"

An example command to create a Kubernetes secret for Azure Key Vault using PFX certificate configuration:

kubectl create secret generic azure-keyvault \
  --from-literal=vaultUrl="https://authswap.vault.azure.net/" \
  --from-literal=credentialType="ClientCertificate" \
  --from-literal=tenantId="<TENANT_ID>" \
  --from-literal=clientId="<CLIENT_ID>" \
  --from-literal=certificateType="PFX" \
  --from-literal=certificatePath="/opt/ssl/client-cert.pfx" \
  --from-literal=certificatePfxPassword="<pfx-password>" \
  --from-literal=separator="/"

Create a file-based secret store configuration

In file-based secret store configuration, each file represents the incoming client’s username. For example, if an incoming username is sales-cp-app and you want to swap it to sales-cc-app, the /etc/secrets/sales-cp-app file should contain sales-cc-app/sales-cc-secret-key.
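The layout described above can be sketched locally before creating the Kubernetes secret. The snippet writes the entry for sales-cp-app into a scratch directory (a hypothetical stand-in for /etc/secrets) and splits it on the / separator; the split only illustrates the file format and is not Confluent Gateway's own lookup code.

```shell
# Hypothetical scratch directory standing in for /etc/secrets.
secrets_dir="$(mktemp -d)"
separator="/"

# The file name is the incoming username; the content is
# <swapped-username><separator><swapped-secret>.
printf '%s' "sales-cc-app${separator}sales-cc-secret-key" > "${secrets_dir}/sales-cp-app"

# Read the entry for an incoming username and split it on the separator.
incoming_user="sales-cp-app"
entry="$(cat "${secrets_dir}/${incoming_user}")"
swapped_user="${entry%%"${separator}"*}"
swapped_secret="${entry#*"${separator}"}"

echo "${incoming_user} -> ${swapped_user}"
```

Because the split happens on the first separator, a separator character that also appears in usernames would make the entry ambiguous, so choose one that does not occur in them.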

The following fields are supported for file-based secret store configuration:

file: The path to the file that contains the secret store configuration.

separator: The separator for the secret store. The default value is /.

An example command to create a Kubernetes secret for file-based secret store configuration:

kubectl create secret generic file-secret \
  --from-file=file=/etc/secrets/sales-cp-app