Tutorial: Move Active-Passive Data Center to Multi-Region in Confluent Platform

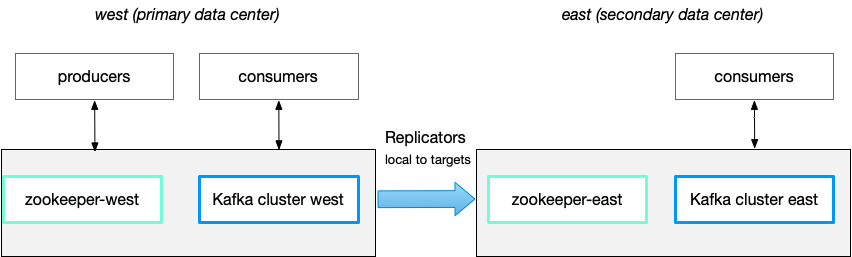

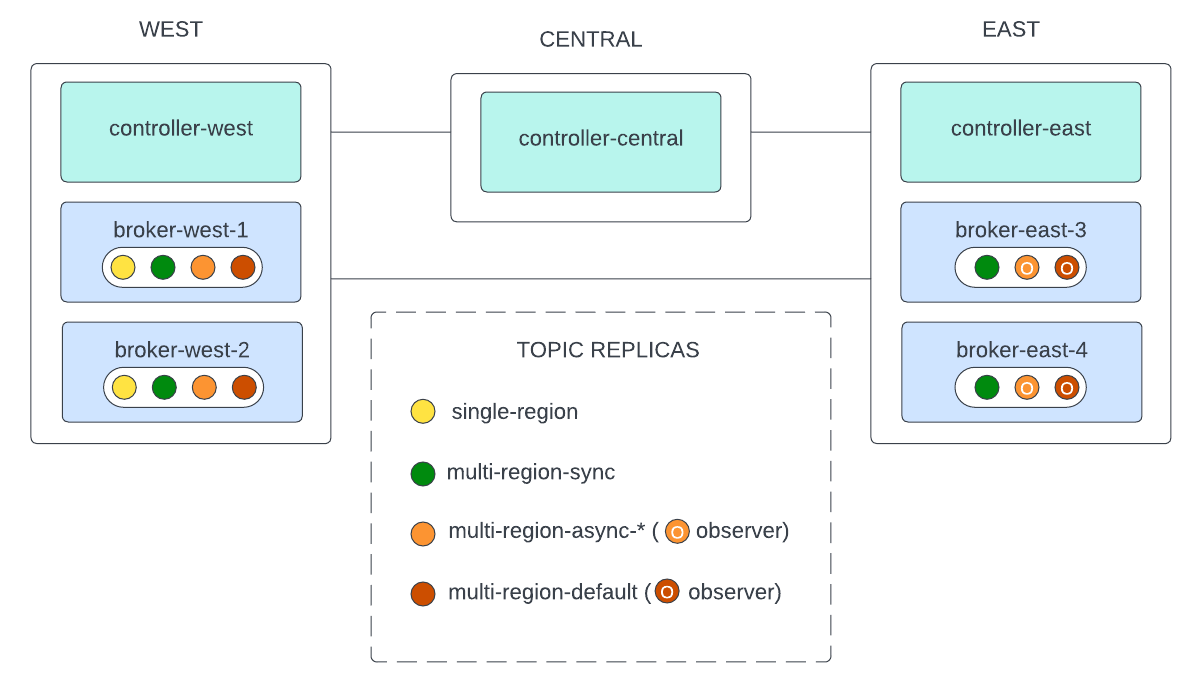

Confluent Platform provides a variety of technologies and architectures to create and manage clusters that span multiple regions. This tutorial shows you how to move a more traditional active-passive data center architecture that spans two regions to a multi-region stretched cluster that can take advantage of follower fetching, observers, and replica placement, as described in Configure Multi-Region Clusters in Confluent Platform.

What the tutorial covers

This tutorial shows you how to move an existing active-passive cluster setup that uses Replicator for syncing across regions to a multi-region stretched cluster. Replicator is not needed for the stretched cluster because it is effectively a single cluster operating across multiple regions.

A broad outline of best practices and a sequence of steps is provided for pausing clients and replication, reconfiguring the clusters, and bringing the new clusters back online. However, detailed guidance on commands and configurations is not included. To get that kind of detail, refer to Configure Multi-Region Clusters in Confluent Platform and Tutorial: Configure Multi-Region Clusters in Confluent Platform.

You’ll begin by pausing all consumers and producers, stopping replication, and freeing up the resources of the passive cluster. Then you’ll extend your original active cluster by pulling in those freed-up resources. In the process, you’ll reconfigure it as a multi-region cluster with the features and capabilities described in Configure Multi-Region Clusters in Confluent Platform.

A successful transition to a multi-region cluster means not only that everything works on the new deployment, but also that you have options to recover if something fails due to unforeseen circumstances. Therefore, each step includes a ROLLBACK option that explains how to return to the previous state in case of failure. Perform a ROLLBACK only if something goes wrong; otherwise, skip it.

Understanding the “Before” and “After” architectures

Your starting point is assumed to be a two-region active-passive architecture that uses Replicator to sync data between the two clusters.

The end state, after you’ve transitioned the data centers, will be a multi-region, rack-aware stretched cluster with a 2.5-region active-passive architecture like the one shown in the multi-region cluster tutorial example and demonstrated in the full tutorial (Tutorial: Configure Multi-Region Clusters in Confluent Platform). The new setup does not require Replicator to sync data.

The steps below refer to these data centers (DCs):

West (original, passive DC)

East (original, active DC)

Central (new tiebreaker ZooKeeper DC, added as part of the new multi-region configuration)

Prerequisites

These instructions assume you have:

Two Confluent Platform clusters with an active-passive setup

Confluent Enterprise version 6.0.0 or later

One extra node dedicated to be the “light” ZooKeeper node

Network latency of 50 ms or less between all instances

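The tutorial refers to $CONFLUENT_HOME, which represents your Confluent Platform install directory (the properties files used in this tutorial are under $CONFLUENT_HOME/etc/kafka). For example, to set this environment variable and add the Confluent Platform commands to your PATH: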

export CONFLUENT_HOME=$HOME/confluent-7.5.12

PATH=$CONFLUENT_HOME/bin:$PATH

Step 1. Pause all consumers and producers

When all consumers and producers are paused, there should be no data flow in the cluster.

Components that can produce or consume data include:

ksqlDB Queries

Connectors

Application Producers

Application Consumers
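Of these components, connectors can be paused in place through the Kafka Connect REST API (PUT /connectors/<name>/pause, with a matching /resume endpoint for rollback). The following is a minimal sketch, assuming a Connect worker at the placeholder URL in CONNECT_URL and connector names that you supply. ksqlDB queries and your own producer and consumer applications need to be stopped with their own tooling. If Replicator runs as a connector, leave it alone here, because it is stopped in Step 2.

```shell
#!/usr/bin/env bash
# Minimal sketch: pause Kafka Connect connectors through the Connect REST API
# (PUT /connectors/<name>/pause). CONNECT_URL is a placeholder default.
CONNECT_URL=${CONNECT_URL:-http://localhost:8083}

# pause_connectors NAME... : pause each named connector; stop at the first failure.
pause_connectors() {
  local name
  for name in "$@"; do
    # --fail makes curl return non-zero on HTTP errors (for example, 404 for an unknown connector).
    if ! curl -sS --fail -X PUT "$CONNECT_URL/connectors/$name/pause"; then
      echo "failed to pause connector: $name" >&2
      return 1
    fi
    echo "paused $name"
  done
}

# Example (hypothetical connector names):
# pause_connectors my-jdbc-source my-s3-sink
```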

Step 2. Pause replication

Ensure Replicator consumer lag is 0.

This confirms that both clusters are fully in sync. That is not possible while producers and consumers are online, because the 8-hour green zone does not stop data flow.

Stop replication.
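To confirm that lag is 0 before you stop replication, you can describe Replicator's consumer group with kafka-consumer-groups and add up the LAG column. This sketch assumes that Replicator commits its offsets to the source (East) cluster under a consumer group whose name you supply; check src.consumer.group.id in your Replicator configuration to find it. The host name and group name in the example are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: sum the LAG column of `kafka-consumer-groups --describe` output read
# from stdin. The column is located by its header name, not by position.
# Prints -1 if any partition reports "-" (lag unknown), otherwise the total lag.
total_lag() {
  awk '
    $1 == "GROUP" { col = 0; for (i = 1; i <= NF; i++) if ($i == "LAG") col = i; next }
    col > 0 && NF >= col {
      if ($col == "-") unknown = 1
      else if ($col ~ /^[0-9]+$/) sum += $col
    }
    END { if (unknown) print -1; else print sum + 0 }
  '
}

# Example (placeholders): stop Replicator only when this prints 0.
# kafka-consumer-groups --bootstrap-server east-1:9092 --describe \
#   --group my-replicator | total_lag
```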

Tip

Replicator is no longer needed in a Multi-Region Clusters setup.

ROLLBACK option: Resume Replicator.

Step 3. Install Confluent Platform on the ZooKeeper node (Central DC)

Have the Confluent Platform package and the ZooKeeper properties file ready on the tiebreaker node so that you can start ZooKeeper in a later step.

ROLLBACK option: Delete the ZooKeeper package and the property files from the tiebreaker node.
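A minimal sketch of preparing the tiebreaker's ZooKeeper files, assuming the Confluent Platform archive is already unpacked on that node. Besides zookeeper.properties, ZooKeeper reads its own server ID from a file named myid in its dataDir, and that ID must match the node's server.<id> entry. The paths, IDs, host names, and the clientPort, tickTime, initLimit, and syncLimit values are placeholders; copy the real values from your existing East ZooKeeper configuration.

```shell
#!/usr/bin/env bash
# Sketch: write zookeeper.properties and the myid file for one ZooKeeper node.
# stage_zk_node CONF_FILE DATA_DIR MY_ID SERVER_ENTRY...
#   Each SERVER_ENTRY is "id=host:2888:3888" and is written as server.<id>=...
stage_zk_node() {
  local conf=$1 data_dir=$2 my_id=$3 entry
  shift 3
  # Refuse to stage a node whose own ID is missing from the quorum list.
  case " $* " in
    *" $my_id="*) ;;
    *) echo "no server.$my_id entry in the quorum list" >&2; return 1 ;;
  esac
  mkdir -p "$data_dir" "$(dirname "$conf")" || return 1
  {
    echo "dataDir=$data_dir"
    echo "clientPort=2181"   # placeholder: match your existing ZooKeepers
    echo "tickTime=2000"     # placeholder
    echo "initLimit=5"       # placeholder
    echo "syncLimit=2"       # placeholder
    for entry in "$@"; do
      echo "server.$entry"
    done
  } > "$conf"
  # ZooKeeper identifies itself by the number in dataDir/myid.
  echo "$my_id" > "$data_dir/myid"
}

# Example (hypothetical hosts and IDs for the three-node quorum used in Step 10):
# stage_zk_node "$CONFLUENT_HOME/etc/kafka/zookeeper.properties" /var/lib/zookeeper 3 \
#   1=east-1:2888:3888 2=east-2:2888:3888 3=central-1:2888:3888
```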

Step 4. Gracefully stop DC West components

Stop everything in the DC West environment (your original passive cluster) in the following order. DO NOT DELETE them.

Broker

ZooKeeper

ROLLBACK option: Restart all of the above components.
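The shutdown order above can be scripted so that ZooKeeper is stopped only after every West broker process has exited. This is a sketch under stated assumptions: REMOTE runs a command on a host (ssh by default), the Confluent Platform commands are on the remote PATH, and the host lists are placeholders (four brokers and two ZooKeepers, matching Steps 11 and 12). kafka-server-stop only signals the broker and returns immediately, which is why the sketch polls for the broker JVM (main class kafka.Kafka) with pgrep before moving on.

```shell
#!/usr/bin/env bash
# Sketch: stop DC West brokers first, wait for them to exit, then stop ZooKeeper.
# Nothing is deleted. All defaults are placeholders.
REMOTE=${REMOTE:-ssh}
WEST_BROKERS=${WEST_BROKERS:-"west-1 west-2 west-3 west-4"}
WEST_ZOOKEEPERS=${WEST_ZOOKEEPERS:-"west-1 west-2"}
WAIT_RETRIES=${WAIT_RETRIES:-30}
WAIT_SECONDS=${WAIT_SECONDS:-5}

# Succeed once HOST no longer runs a broker JVM; give up after WAIT_RETRIES polls.
broker_gone() {
  local i
  for ((i = 0; i < WAIT_RETRIES; i++)); do
    $REMOTE "$1" pgrep -f 'kafka\.Kafka' > /dev/null || return 0
    sleep "$WAIT_SECONDS"
  done
  return 1
}

stop_dc_west() {
  local host
  for host in $WEST_BROKERS; do
    $REMOTE "$host" kafka-server-stop || return 1
  done
  for host in $WEST_BROKERS; do
    broker_gone "$host" || { echo "broker on $host is still running" >&2; return 1; }
  done
  for host in $WEST_ZOOKEEPERS; do
    $REMOTE "$host" zookeeper-server-stop || return 1
  done
}
```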

Step 5. Back up the data directories and log4j logs for the existing brokers and ZooKeepers on DC West

Create backup copies of both the data directories and the log4j logs:

Copy the data directories (log.dirs for brokers, dataDir for ZooKeeper) to another location.

Copy the log4j logs to another location.

ROLLBACK option: Delete the copied files. (No rollback actions are necessary.)
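One way to make these copies is a single archive per node. The following is a minimal sketch that reads the data directories from the node's properties files (log.dirs may list several comma-separated directories) and archives them together with the log4j log directory. It assumes paths without spaces; the log directory depends on LOG_DIR and your log4j configuration, so it is passed in explicitly, and all paths in the example are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: archive a DC West node's data directories and log4j logs.

# Print the value of a key (given as a sed regex) from a .properties file;
# the last assignment wins and trailing whitespace is dropped.
prop_value() {
  sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*//p" "$1" 2>/dev/null \
    | tail -n 1 | sed 's/[[:space:]]*$//'
}

# backup_node ARCHIVE LOG_DIR PROPERTIES_FILE...
backup_node() {
  local archive=$1 log_dir=$2 f dirs=""
  shift 2
  for f in "$@"; do
    # Brokers keep data in log.dirs (comma-separated); ZooKeeper uses dataDir.
    dirs="$dirs $(prop_value "$f" 'log\.dirs' | tr ',' ' ') $(prop_value "$f" dataDir)"
  done
  if [ -z "${dirs// /}" ]; then
    echo "no log.dirs or dataDir found in: $*" >&2
    return 1
  fi
  mkdir -p "$(dirname "$archive")" || return 1
  # shellcheck disable=SC2086  # intentional splitting: one tar argument per directory
  tar -czf "$archive" $dirs "$log_dir"
}

# Example (placeholder paths, on a West node running a broker and ZooKeeper):
# backup_node /backup/west-1.tar.gz "$CONFLUENT_HOME/logs" \
#   "$CONFLUENT_HOME/etc/kafka/server.properties" "$CONFLUENT_HOME/etc/kafka/zookeeper.properties"
```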

Step 6. Delete the data and log4j log folders of the DC West brokers and ZooKeepers

Because you will start the West ZooKeepers and brokers anew, delete their data:

Delete the data on the ZooKeepers

Delete the data on the brokers

Tip

You do not delete this data in the EAST DC, because that is the cluster you are expanding.

ROLLBACK option: Copy the files backed up in Step 5 back to their original locations.
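Because this step is destructive, a guarded sketch can refuse to delete anything unless the Step 5 archive exists and can be listed by tar. The function below empties each directory but keeps the directory itself, so ownership and permissions stay in place. Emptying the ZooKeeper dataDir also removes its myid file, which must be recreated with the correct server ID before the West ZooKeepers start in Step 11. The archive path and directories are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: empty data/log directories only if a readable backup archive exists.
# wipe_dirs ARCHIVE DIR...
wipe_dirs() {
  local archive=$1 d
  shift
  if ! tar -tzf "$archive" > /dev/null 2>&1; then
    echo "refusing to delete: no readable backup at $archive" >&2
    return 1
  fi
  for d in "$@"; do
    if [ ! -d "$d" ]; then
      echo "skipping missing directory: $d" >&2
      continue
    fi
    # Delete everything inside DIR (depth-first), but keep DIR itself.
    find "$d" -mindepth 1 -delete || return 1
  done
}

# Example (placeholder paths):
# wipe_dirs /backup/west-1.tar.gz /var/lib/kafka /var/lib/zookeeper "$CONFLUENT_HOME/logs"
```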

Step 7. Create backups of properties files for all DC West and DC East components

Back up the properties files for the following components:

West ZooKeepers

West Brokers

East ZooKeepers

ROLLBACK option: Delete the copied files. (No rollback actions are necessary.)
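A minimal sketch of these backups, assuming you run it on each host against that host's properties files (for example, $CONFLUENT_HOME/etc/kafka/server.properties and zookeeper.properties). The .bak-<timestamp> naming is a hypothetical convention; any naming works as long as you can find the copies for the Step 8 rollback.

```shell
#!/usr/bin/env bash
# Sketch: keep a timestamped copy next to each properties file.
# backup_props FILE...  -> creates FILE.bak-<YYYYmmddHHMMSS> and prints its path
backup_props() {
  local stamp f
  stamp=$(date +%Y%m%d%H%M%S)
  for f in "$@"; do
    # -p preserves mode, ownership (where permitted), and timestamps.
    cp -p "$f" "$f.bak-$stamp" || return 1
    echo "$f.bak-$stamp"
  done
}

# Example (placeholder paths):
# backup_props "$CONFLUENT_HOME/etc/kafka/server.properties" "$CONFLUENT_HOME/etc/kafka/zookeeper.properties"
```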

Step 8. Change all properties files in both environments to Multi-Region Clusters configuration

Change the properties file for these components:

Broker (Add two fields and change one field)

Make the following changes to your broker properties files (for example, in $CONFLUENT_HOME/etc/kafka/server.properties).

replica.selector.class=org.apache.kafka.common.replica.RackAwareReplicaSelector

broker.rack=<region>

zookeeper.connect <- this should now include the new zookeepers

Zookeeper

Ensure that every ZooKeeper properties file lists the target quorum. (For example, update $CONFLUENT_HOME/etc/kafka/zookeeper.properties.)

server.<your-id-on-east-1>=<your-east-1>:2888:3888

server.<your-id-on-east-2>=<your-east-2>:2888:3888

server.<your-id-on-gtdc>=<your-gtdc-host>:2888:3888

server.<your-id-on-west-1>=<your-west-1-host>:2888:3888

server.<your-id-on-west-2>=<your-west-2-host>:2888:3888
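The broker changes above can be applied with a small helper that replaces an existing assignment or appends a new one, so that running it twice still leaves a single entry. This is a sketch, not a Confluent tool; the rack name, host names, and client port 2181 in the example are placeholders, commented-out lines are left untouched, and values containing backslashes are not supported (awk -v would reinterpret them).

```shell
#!/usr/bin/env bash
# Sketch: set KEY=VALUE in a Java .properties file. The first uncommented
# assignment of KEY is replaced, later duplicates are dropped, and the
# assignment is appended if KEY was not set. File permissions are preserved.
# set_prop FILE KEY VALUE
set_prop() {
  local file=$1 key=$2 value=$3 tmp
  tmp=$(mktemp) || return 1
  if ! awk -v k="$key" -v v="$value" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)
      # Exact key match: the key must be followed by optional spaces and "=".
      if (index(line, k) == 1 && substr(line, length(k) + 1) ~ /^[ \t]*=/) {
        if (!done) { print k "=" v; done = 1 }
        next
      }
      print
    }
    END { if (!done) print k "=" v }
  ' "$file" > "$tmp"; then
    rm -f "$tmp"
    return 1
  fi
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example for an East broker (placeholder values):
# f=$CONFLUENT_HOME/etc/kafka/server.properties
# set_prop "$f" replica.selector.class org.apache.kafka.common.replica.RackAwareReplicaSelector
# set_prop "$f" broker.rack east
# set_prop "$f" zookeeper.connect east-1:2181,east-2:2181,central-1:2181,west-1:2181,west-2:2181
```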

ROLLBACK option: Copy the files backed up in Step 7 back to their original locations.

Step 9. Shut down one ZooKeeper on EAST DC

You need to move one ZooKeeper from the EAST DC to the new Central DC (tiebreaker), so shut down one of the East ZooKeepers.

ROLLBACK option: Restart this East ZooKeeper with the previous properties.
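Before continuing, you can confirm that the remaining East ZooKeepers still form a quorum. This sketch sends ZooKeeper's srvr four-letter-word command and reads the Mode line (leader, follower, or standalone). It assumes nc (netcat) is installed and that srvr is permitted by 4lw.commands.whitelist (srvr is the one command in ZooKeeper's default whitelist since 3.5.3). Host names and port 2181 are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: report a ZooKeeper server's role using the "srvr" four-letter word.

# Read srvr output on stdin and print the Mode value, if any.
parse_zk_mode() {
  sed -n 's/^Mode: *//p'
}

# zk_mode HOST [PORT]: prints leader, follower, or standalone; prints nothing if
# the server did not answer or is not currently serving requests.
zk_mode() {
  echo srvr | nc -w 5 "$1" "${2:-2181}" 2>/dev/null | parse_zk_mode
}

# Example (hypothetical hosts): each remaining East ZooKeeper should print
# leader or follower.
# for h in east-1 east-2; do echo "$h: $(zk_mode "$h")"; done
```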

Step 10. Start one ZooKeeper on CENTRAL DC (tiebreaker)

To keep the cluster running properly, start one ZooKeeper on the new Central DC with the Multi-Region Clusters configuration, and then perform a rolling restart of the two ZooKeeper instances on EAST DC.

Important

At this stage, each of the three ZooKeepers should (re)start with a zookeeper.properties file that contains the entries for all three ZooKeepers, as shown:

server.<your-id-on-east-1>=<your-east-1>:2888:3888

server.<your-id-on-east-2>=<your-east-2>:2888:3888

server.<your-id-on-gtdc>=<your-gtdc-host>:2888:3888

ZooKeepers cannot form a quorum with servers they do not know about, so the server entries must be the same in every ZooKeeper properties file.
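Because every ZooKeeper must list the same servers, a quick consistency check before each (re)start can catch typos. This sketch compares only the server.<id> lines, ignoring their order and spacing around "="; it is an illustration, not a Confluent tool, and it assumes you have first copied each host's zookeeper.properties to one place (file names are placeholders).

```shell
#!/usr/bin/env bash
# Sketch: check that several zookeeper.properties files list exactly the same
# server.<id>=... entries (order-insensitive, whitespace around "=" ignored).

# Print the normalized, sorted server.<id> entries of one file.
quorum_of() {
  sed -n 's/^[[:space:]]*\(server\.[0-9][0-9]*\)[[:space:]]*=[[:space:]]*/\1=/p' "$1" \
    | sed 's/[[:space:]]*$//' | sort
}

# same_quorum FILE... : returns 0 if all files match the first one.
same_quorum() {
  local ref f
  ref=$(quorum_of "$1")
  if [ -z "$ref" ]; then
    echo "no server.* entries in $1" >&2
    return 1
  fi
  for f in "${@:2}"; do
    if [ "$(quorum_of "$f")" != "$ref" ]; then
      echo "server entries in $f differ from $1" >&2
      return 1
    fi
  done
}

# Example (placeholder file names):
# same_quorum zk-east-1.properties zk-east-2.properties zk-central-1.properties
```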

ROLLBACK option: Gracefully shut down this ZooKeeper.

Step 11. Start two ZooKeepers on WEST DC

Start two ZooKeeper instances on WEST servers 1-2. Then perform a rolling restart of the three existing ZooKeeper instances (two in East, one in Central) with updated configurations that list all five ZooKeepers.

Each zookeeper.properties file should have these configurations:

server.<your-id-on-east-1>=<your-east-1>:2888:3888

server.<your-id-on-east-2>=<your-east-2>:2888:3888

server.<your-id-on-gtdc>=<your-gtdc-host>:2888:3888

server.<your-id-on-west-1>=<your-west-1-host>:2888:3888

server.<your-id-on-west-2>=<your-west-2-host>:2888:3888

ROLLBACK option: Gracefully shut down these two ZooKeepers.
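The rolling restarts in this step and in Step 10 can be scripted so that each ZooKeeper must rejoin the quorum before the next one is restarted. A sketch under stated assumptions: REMOTE runs a command on a host (ssh by default), the Confluent Platform commands are on the remote PATH, ZK_CONF is the updated zookeeper.properties path on every host, clients connect on port 2181, and nc is installed locally. All of these defaults are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: rolling restart of ZooKeeper servers, one at a time. Each server must
# report Mode: leader or Mode: follower (srvr four-letter word) before the next
# one is restarted.
REMOTE=${REMOTE:-ssh}
ZK_CONF=${ZK_CONF:-/opt/confluent/etc/kafka/zookeeper.properties}
WAIT_RETRIES=${WAIT_RETRIES:-30}
WAIT_SECONDS=${WAIT_SECONDS:-5}

# Succeed if HOST answers srvr and is part of a quorum.
zk_healthy() {
  echo srvr | nc -w 5 "$1" 2181 2>/dev/null | grep -Eq '^Mode: (leader|follower)$'
}

rolling_restart_zk() {
  local host i
  for host in "$@"; do
    $REMOTE "$host" zookeeper-server-stop || return 1
    sleep "$WAIT_SECONDS"   # zookeeper-server-stop only signals the process
    $REMOTE "$host" zookeeper-server-start -daemon "$ZK_CONF" || return 1
    for ((i = 0; i < WAIT_RETRIES; i++)); do
      zk_healthy "$host" && break
      sleep "$WAIT_SECONDS"
    done
    if ! zk_healthy "$host"; then
      echo "$host did not rejoin the quorum; stopping here" >&2
      return 1
    fi
    echo "$host rejoined the quorum"
  done
}

# Example (hypothetical hosts, the three existing ZooKeepers):
# rolling_restart_zk east-1 east-2 central-1
```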

Step 12. Start four brokers on WEST DC

Start four brokers on WEST servers 1-4.

Be sure to keep all previous working properties, and change only the following configurations:

replica.selector.class=org.apache.kafka.common.replica.RackAwareReplicaSelector

broker.rack=<region>

zookeeper.connect <- this should now include the new Zookeepers

broker.id <- this has to be unique since you are adding new brokers.

ROLLBACK option: Gracefully shut down these brokers.
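A new West broker that reuses an East broker's ID cannot register with the cluster, so it can help to compare the IDs of all brokers before starting the new ones. This sketch assumes you have gathered copies of every broker's server.properties into one place (paths are placeholders and must not contain spaces); files without an explicit numeric broker.id, for example when IDs are generated automatically, are skipped.

```shell
#!/usr/bin/env bash
# Sketch: print each broker.id value that appears in more than one of the given
# server.properties files. No output means all explicit IDs are unique.
duplicate_broker_ids() {
  local f id
  for f in "$@"; do
    id=$(sed -n 's/^[[:space:]]*broker\.id[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$f" | tail -n 1)
    if [ -n "$id" ]; then
      printf '%s %s\n' "$id" "$f"
    fi
  done | awk '
    { files[$1] = files[$1] " " $2; n[$1]++ }
    END { for (id in n) if (n[id] > 1) print "broker.id=" id ":" files[id] }
  '
}

# Example (placeholder file names):
# duplicate_broker_ids east-*.properties west-*.properties
```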

Step 13. Perform a rolling restart of the brokers on EAST DC

Now update these properties and restart the existing EAST DC brokers:

replica.selector.class=org.apache.kafka.common.replica.RackAwareReplicaSelector

broker.rack=<region>

zookeeper.connect <- this should now include the new Zookeepers

Do not change broker.id for these brokers: partition replica assignments refer to broker IDs, so the existing brokers must keep theirs.

ROLLBACK option: Change the properties back to their original configurations, and perform a rolling restart.
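A common way to pace a broker rolling restart is to wait until the cluster reports no under-replicated partitions before touching the next broker. The sketch below does that with kafka-topics --describe --under-replicated-partitions, which prints one line per under-replicated partition. Assumptions (placeholders): REMOTE runs a command on a host (ssh by default), the Confluent Platform commands are on the remote PATH, BROKER_CONF is the updated server.properties path on every host, and BOOTSTRAP points at a broker outside the set being restarted (here, a West broker from Step 12).

```shell
#!/usr/bin/env bash
# Sketch: rolling restart of the East brokers, one at a time.
REMOTE=${REMOTE:-ssh}
BROKER_CONF=${BROKER_CONF:-/opt/confluent/etc/kafka/server.properties}
BOOTSTRAP=${BOOTSTRAP:-west-1:9092}
WAIT_RETRIES=${WAIT_RETRIES:-60}
WAIT_SECONDS=${WAIT_SECONDS:-10}

# Print the number of under-replicated partitions; fail if kafka-topics fails.
urp_count() {
  local out
  out=$(kafka-topics --bootstrap-server "$BOOTSTRAP" --describe --under-replicated-partitions) || return 1
  printf '%s\n' "$out" | grep -c . || true
}

# Succeed once HOST no longer runs a broker JVM (main class kafka.Kafka).
wait_broker_stopped() {
  local i
  for ((i = 0; i < WAIT_RETRIES; i++)); do
    $REMOTE "$1" pgrep -f 'kafka\.Kafka' > /dev/null || return 0
    sleep "$WAIT_SECONDS"
  done
  return 1
}

# Succeed once urp_count reports 0, polling up to WAIT_RETRIES times.
wait_no_urp() {
  local i n
  for ((i = 0; i < WAIT_RETRIES; i++)); do
    n=$(urp_count) && [ "$n" -eq 0 ] && return 0
    sleep "$WAIT_SECONDS"
  done
  return 1
}

rolling_restart_brokers() {
  local host
  for host in "$@"; do
    $REMOTE "$host" kafka-server-stop || return 1
    wait_broker_stopped "$host" || { echo "broker on $host did not stop" >&2; return 1; }
    $REMOTE "$host" kafka-server-start -daemon "$BROKER_CONF" || return 1
    wait_no_urp || { echo "under-replicated partitions remain after $host" >&2; return 1; }
    echo "$host restarted; no under-replicated partitions"
  done
}

# Example (hypothetical hosts): rolling_restart_brokers east-1 east-2 east-3 east-4
```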

Step 16. Check that all components are working properly

Check the ZooKeeper logs to make sure everything is running properly.
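As a first pass over the logs, a sketch can count ERROR and FATAL lines in each file you pass in. Anything it flags still needs a human read, and a clean result does not by itself prove the quorum is healthy (each ZooKeeper's Mode from the srvr command is a useful second check). Log file locations depend on LOG_DIR and your log4j configuration, so the paths in the example are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: print "<count> <file>" for ERROR/FATAL lines in each log file and
# return 1 if any file has such lines or cannot be read.
log_problems() {
  local f n found=0
  for f in "$@"; do
    if [ ! -r "$f" ]; then
      echo "cannot read $f" >&2
      found=1
      continue
    fi
    # Match the level as a whole word, as written by the default log4j layouts.
    n=$(grep -cE '(^|[^A-Z])(ERROR|FATAL)([^A-Z]|$)' "$f")
    echo "$n $f"
    if [ "$n" -gt 0 ]; then found=1; fi
  done
  return "$found"
}

# Example (placeholder paths); review flagged lines with grep -E 'ERROR|FATAL' FILE:
# log_problems "$CONFLUENT_HOME/logs/zookeeper.out" "$CONFLUENT_HOME/logs/server.log"
```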

Step 17. Alter topic configurations so that they use Multi-Region Clusters capabilities

To alter the topic configurations to use the multi-region capabilities, refer to the examples shown in Replica Placement in the multi-region clusters tutorial.
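For orientation, a replica placement file of the kind used in those examples lists synchronous replicas and asynchronous observers, each with a count and a rack constraint, and is applied to an existing topic with the kafka-configs --replica-placement option. The sketch below only writes such a file; the rack names east and west, the counts, the topic name, and the bootstrap server are hypothetical, the rack values must match the broker.rack values set in Step 8, and the Replica Placement examples remain the authority on the file format and command.

```shell
#!/usr/bin/env bash
# Sketch: write a replica placement JSON file with R_COUNT synchronous replicas
# constrained to R_RACK and O_COUNT observers constrained to O_RACK.
# write_placement FILE R_RACK R_COUNT O_RACK O_COUNT
write_placement() {
  local file=$1 r_rack=$2 r_count=$3 o_rack=$4 o_count=$5
  cat > "$file" <<EOF
{
  "version": 1,
  "replicas": [
    { "count": $r_count, "constraints": { "rack": "$r_rack" } }
  ],
  "observers": [
    { "count": $o_count, "constraints": { "rack": "$o_rack" } }
  ]
}
EOF
}

# Example (hypothetical topic, racks, and bootstrap server):
# write_placement placement.json east 2 west 2
# kafka-configs --bootstrap-server east-1:9092 --entity-type topics \
#   --entity-name orders --alter --replica-placement placement.json
```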

Step 18. Rebalance the cluster

Either use the Confluent Rebalancer tool to rebalance the cluster based on the replica placement from the previous step, or enable Self-Balancing Clusters as described in Manage Self-Balancing Kafka Clusters in Confluent Platform (for Confluent Platform 6.0.0 and later clusters).
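If you choose Self-Balancing, it is turned on with the broker property confluent.balancer.enable=true. A minimal sketch that adds or updates that property in each broker's server.properties follows; the file paths are placeholders, and because the brokers read this file at startup, the change applies after each broker is restarted (for example, with a rolling restart like the one in Step 13).

```shell
#!/usr/bin/env bash
# Sketch: set confluent.balancer.enable=true in each given server.properties,
# replacing an existing uncommented assignment or appending one.
enable_self_balancing() {
  local f tmp
  for f in "$@"; do
    [ -f "$f" ] || { echo "missing $f" >&2; return 1; }
    tmp=$(mktemp) || return 1
    if grep -Eq '^[[:space:]]*confluent\.balancer\.enable[[:space:]]*=' "$f"; then
      sed -E 's/^[[:space:]]*confluent\.balancer\.enable[[:space:]]*=.*/confluent.balancer.enable=true/' "$f" > "$tmp"
    else
      # Add a newline first if the file does not end with one.
      { cat "$f"; [ -n "$(tail -c 1 "$f")" ] && echo; echo "confluent.balancer.enable=true"; } > "$tmp"
    fi || { rm -f "$tmp"; return 1; }
    cat "$tmp" > "$f"   # overwrite in place to keep the file's permissions
    rm -f "$tmp"
  done
}

# Example (placeholder path, run on every broker):
# enable_self_balancing "$CONFLUENT_HOME/etc/kafka/server.properties"
```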

Step 19. Update consumer applications

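Finally, you can gradually update the consumer applications with an additional property, client.rack, set to the rack (region) in which each consumer runs, so that consumers can fetch from the nearest replica instead of always fetching from the leader.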
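For example, a consumer running in the West region would set client.rack to the same value used for broker.rack on the West brokers; follower fetching depends on that match together with the RackAwareReplicaSelector configured in Step 8. This sketch adds or updates the property in a hypothetical consumer.properties file and shows how a console consumer would pick it up. The file name, rack value, topic, and bootstrap server are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: add or update client.rack in a consumer configuration file.
# set_client_rack FILE RACK
set_client_rack() {
  local file=$1 rack=$2 tmp
  tmp=$(mktemp) || return 1
  # Keep every line except existing client.rack assignments.
  if [ -f "$file" ]; then
    grep -Ev '^[[:space:]]*client\.rack[[:space:]]*=' "$file" > "$tmp"
  fi
  echo "client.rack=$rack" >> "$tmp"
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example (hypothetical values); the rack must equal a broker.rack value:
# set_client_rack consumer.properties west
# kafka-console-consumer --bootstrap-server west-1:9092 --topic orders \
#   --consumer.config consumer.properties
```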