Cluster Linking for Confluent Platform

The following sections provide an overview of Cluster Linking on Confluent Platform, including an explanation of what it is and how it works, use cases and architectures, best practices, and more.

What is Cluster Linking?

Cluster Linking enables you to directly connect clusters and mirror topics from one cluster to another. Cluster Linking makes it easy to build multi-datacenter, multi-region, and hybrid cloud deployments. It is secure, performant, tolerant of network latency, and built into Confluent Server and Confluent Cloud.

Unlike Replicator and MirrorMaker 2, Cluster Linking does not require running Connect to move messages from one cluster to another, and it creates identical “mirror topics” with globally consistent offsets. We call this “byte-for-byte” replication. Messages on the source topics are mirrored precisely on the destination cluster, at the same partitions and offsets. No duplicated records will appear in a mirror topic with respect to what the source topic contains.

Capabilities and comparisons

Cluster Linking replicates topics from one Kafka or Confluent cluster to another, providing the following capabilities:

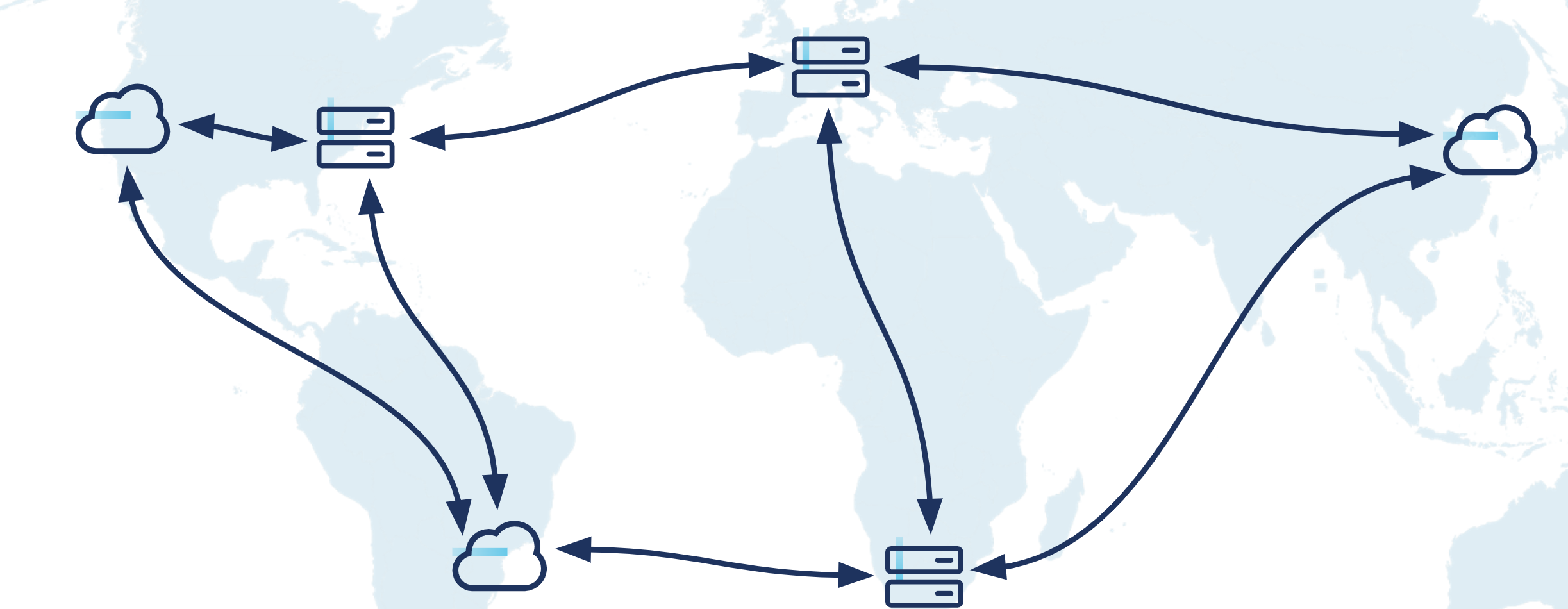

Global Replication: Unify data and applications from regions and continents around the world.

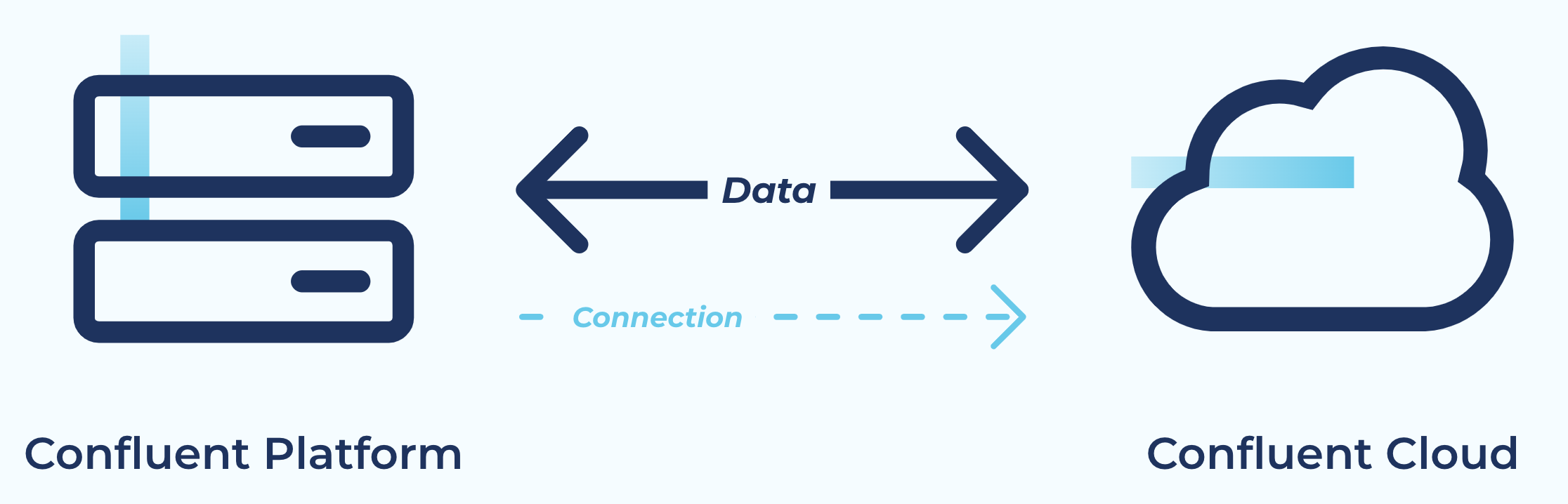

Hybrid cloud: Create a secure, scalable, and seamless bridge-to-cloud by linking an on-premise Confluent Platform cluster in a private cloud to a Confluent Cloud cluster in a public cloud.

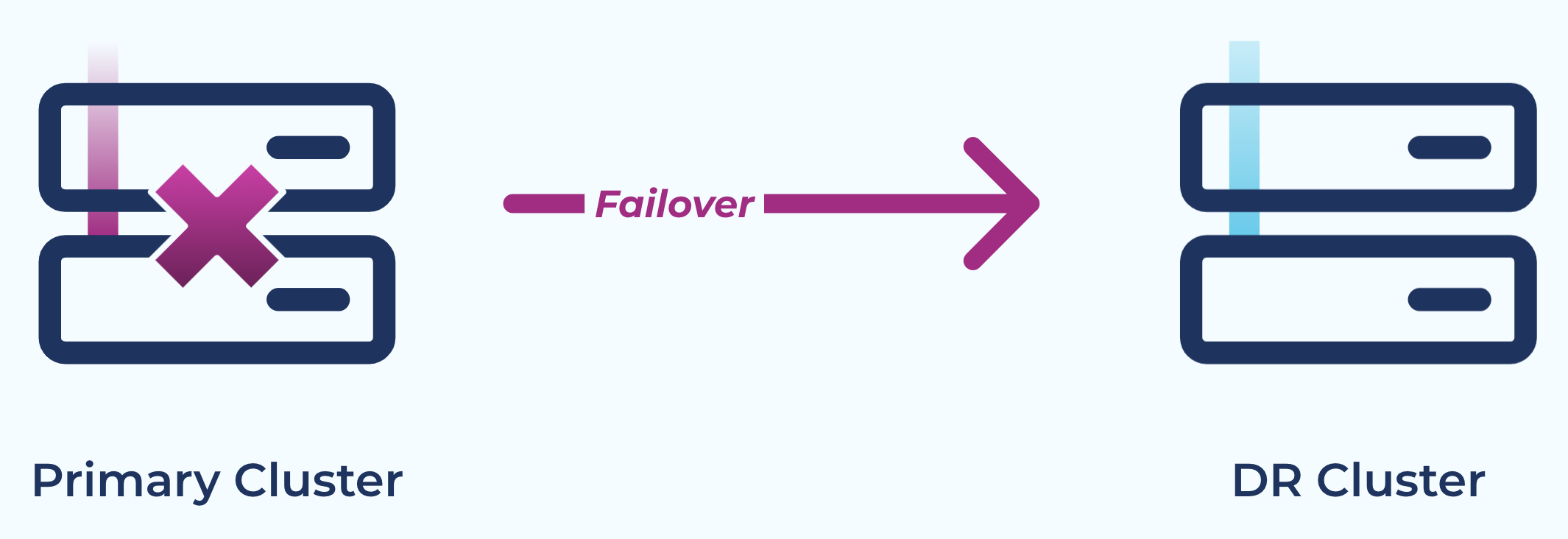

HA/DR: Build a multi-region high availability and disaster recovery (“HA/DR”) strategy that achieves low recovery times (RTOs) and minimal data loss (RPOs) by replicating topic data and metadata to another cluster.

Cluster migration: Migrate from an older cluster to one in a newer environment, region, or cloud.

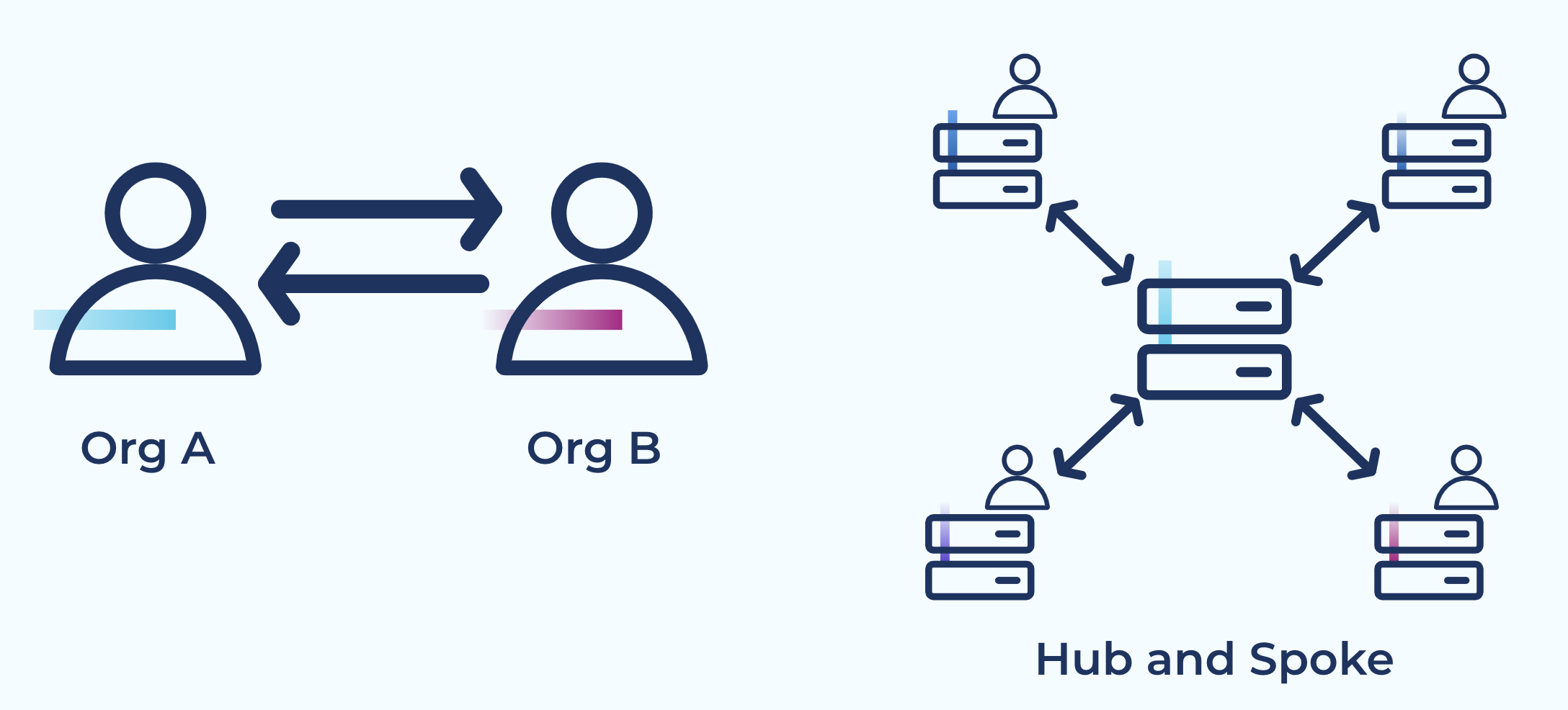

Aggregation: Combine data from many smaller clusters into one aggregate cluster.

Data sharing: Exchange data between different teams, lines-of-business, and organizations.

Compared to other Kafka replication options, Cluster Linking offers these advantages:

Built into Confluent Server and Confluent Cloud, so it does not depend on additional components, connectors, virtual machines, or custom processes.

Creates exact mirrors of topics, including offsets, to enable migration, failover, and reasoning about your system without offset translation or custom tooling.

Can be dynamically updated via REST APIs, CLIs, and Kubernetes CRDs.

For compressed messages, byte-for-byte replication achieves faster throughput by avoiding decompression and recompression.

What’s supported

Cluster Linking is included as a part of Confluent Server. There are no additional or other licensing costs for Cluster Linking on Confluent Platform outside of the cost of the Confluent Enterprise License subscription. Following are the requirements for and supported features of Cluster Linking.

Requires Confluent Server from Confluent Platform 7.x.x on the destination cluster.

Works with all clients.

Requires an inter-broker protocol (IBP) of 2.4 or higher on the source cluster, and an IBP of 2.7 or higher on the destination cluster. More specifically, for current Confluent Platform 7.x.x versions, if IBP of source cluster is 2.7 or lower, IBP of destination must be 2.7. If IBP of source cluster is 2.8 or higher, IBP of destination may be 2.7 or higher. What is not supported is for clusters using Confluent Platform 7.x.x to have a mismatch between source and destination IBP outside of these parameters. For a guide to upgrading, see Steps for upgrading to 7.6.x (ZooKeeper mode).

Requires password.encoder.secret to be set on the Confluent Platform destination cluster’s brokers. If using a source-initiated cluster link (for example, from CP 7.1+ to Confluent Cloud), then password.encoder.secret must be set on the source cluster brokers, too; in other words, on all brokers. To learn how to set this property dynamically, see Update password configurations dynamically.

Built-in custom resource in Confluent for Kubernetes.

Compatible with Ansible. To learn more, see Using Cluster Linking with Ansible.

Provides support for authentication and authorization, as described in Manage Security for Cluster Linking on Confluent Platform.

The source cluster can be Kafka or Confluent Server or Confluent Cloud; the destination cluster must be Confluent Server, which is bundled with Confluent Enterprise.

The destination cluster cannot be a lower Confluent Platform version than the source cluster, if the source cluster is also Confluent Platform.

Bidirectional links between two clusters are supported; but these must be established as two separate links, not a single link. The Hybrid tutorial gives an example of creating a bi-directional link between an on-premises Confluent Platform cluster and a Confluent Cloud cluster.

In addition to self-managed deployments on Confluent Platform, Cluster Linking is also available as a managed service on Confluent Cloud and in Hybrid cloud.
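The inter-broker protocol (IBP) rules listed above can be sketched as a small pre-flight check. This is only an illustration of the rule as stated in this section, not a Confluent tool: the function names `parse_ibp` and `ibp_compatible` are hypothetical, and the parser assumes IBP strings of the form `2.7` or `3.0-IV1`.

```python
from typing import Tuple

Version = Tuple[int, int]


def parse_ibp(value: str) -> Version:
    """Parse an IBP string such as '2.7' or '3.0-IV1' into (major, minor).

    Assumption: any '-IVn' suffix can be ignored for this comparison.
    """
    major, minor = value.split("-")[0].split(".")[:2]
    return int(major), int(minor)


def ibp_compatible(source_ibp: str, destination_ibp: str) -> bool:
    """Apply the IBP rules stated in this section (hypothetical helper)."""
    src = parse_ibp(source_ibp)
    dst = parse_ibp(destination_ibp)
    # Baseline: source must be 2.4 or higher, destination 2.7 or higher.
    if src < (2, 4) or dst < (2, 7):
        return False
    # Source at 2.7 or lower: destination must be exactly 2.7.
    if src <= (2, 7):
        return dst == (2, 7)
    # Source at 2.8 or higher: any destination of 2.7 or higher is allowed.
    return True


if __name__ == "__main__":
    for pair in [("2.4", "2.7"), ("2.7", "2.8"), ("2.8", "3.0-IV1")]:
        print(pair, ibp_compatible(*pair))
```

A check like this could run before creating a link, so that an IBP mismatch is caught before the link is created.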

| Source | Destination |
|---|---|
| Confluent Platform 7.0.x or later [1] | Confluent Platform 7.0.0 or later |
| Confluent Cloud | Confluent Platform 7.0.0 or later |
| Kafka 3.0.x or later [1] | Confluent Platform 7.0.0 or later |
| Confluent Platform 7.0.x or later [1] | Confluent Cloud [2] |
| Confluent Cloud | Confluent Cloud [2] |
| Kafka 3.0.x or later [1] | Confluent Cloud [2] |
| Confluent Platform 7.1.0 or later (source-initiated link) | Confluent Platform 7.1.0 or later |
| Confluent Platform 7.1.0 or later (source-initiated link) | Confluent Cloud |

Footnotes

Upgrade notes

When upgrading from Confluent Platform 6.2.0 to 7.0.0 or a later version, make sure that acl.sync.enabled is not set to true in $CONFLUENT_HOME/server.properties. (The default is false, so if this property is not specified, you can assume it is set to false.) If acl.sync.enabled is set to true during upgrade, existing cluster links will be marked as “failed” (state is LINK_FAILED).
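As a rough pre-upgrade aid, the check described above can be scripted. The sketch below is an assumption-laden illustration: `acl_sync_enabled` is a hypothetical helper, it only understands simple `key=value` lines (not every form a Java properties file allows), and it treats a missing property as false, matching the default stated above.

```python
def acl_sync_enabled(properties_text: str) -> bool:
    """Return True if acl.sync.enabled is explicitly set to true.

    Simplification: only 'key=value' lines are parsed; comments and blank
    lines are skipped; if the key appears more than once, the last one wins.
    """
    enabled = False  # default stated in the upgrade note
    for raw_line in properties_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep and key.strip() == "acl.sync.enabled":
            enabled = value.strip().lower() == "true"
    return enabled


if __name__ == "__main__":
    sample = "broker.id=0\nacl.sync.enabled=true\n"
    if acl_sync_enabled(sample):
        print("acl.sync.enabled is true: set it to false before upgrading")
```

In practice the text would come from reading the broker's server.properties file on each broker before starting the upgrade.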

Use cases and architectures

The following use cases can be achieved by the configurations and architectures shown.

Hybrid cloud

Use Case: Easily create a persistent and seamless bridge from on-premise environments to cloud environments. A cluster link between a Confluent Platform cluster in your datacenter and a Confluent Cloud cluster in a public cloud acts as a single secure, scalable hybrid data bridge that can be used by hundreds of topics, applications, and data systems. Cluster Linking can tolerate the high latency and unpredictable networking availability that you might have between on-premise infrastructure and the cloud, and recovers from reconnections automatically. Cluster Linking can replicate data bidirectionally between your datacenter and the cloud without any firewall holes or special IP filters because your datacenter always makes an outbound connection. Cluster Linking creates a byte-for-byte, globally consistent copy of your data that preserves offsets, making it easy to migrate on-premise applications to the cloud. Cluster Linking is built into Confluent Platform and does not require any additional components to manage.

Tutorial: Tutorial: Link Confluent Platform and Confluent Cloud Clusters

Disaster recovery

Use Case: Create a Disaster Recovery (“DR”) cluster that is available to fail over to should your primary cluster experience an outage or disaster. Cluster Linking keeps your DR cluster in sync with data, metadata, topic structure, topic configurations, and consumer offsets so that you can achieve low recovery point objectives (“RPOs”) and recovery time objectives (“RTOs”), often measured in minutes. Cluster Linking for DR does not require an expensive network, complicated management, or extra software components. And because Cluster Linking preserves offsets and syncs consumer offsets, consumer applications in all languages can fail over and pick up near the point where they left off, achieving low downtime without custom code or interceptors.

Global replication

Use Case: Stream data between the continents and regions where your business operates. Unify data from every region to create a global real-time event mesh. Aggregate data from different regions to drive the real-time applications and analytics that power your business. By making geo-local reads of real-time data possible, this can act like a content delivery network (CDN) for your Kafka events throughout the public cloud, private cloud, and at the edge.

Data sharing

Use Case: Share data between different teams, lines of business, or organizations in a pattern that provides high isolation between teams and efficient operational management. Cluster Linking keeps an in-sync mirror copy of relevant data on the consuming team’s cluster. This isolation empowers the consuming team to scale up hundreds of consumer applications, stream processing apps, and data sinks without impacting the producing team’s cluster: for the producing team, it’s the same load as one additional consumer. The producing team simply issues a security credential with access to the topics that the consuming team is allowed to read. Then the consuming team can create a cluster link, which they control, monitor, and manage.

Tutorial: Tutorial: Share Data Across Topics Using Cluster Linking for Confluent Platform

Customer Success Story (video): Real-Time Inter-Agency Data Sharing With Kafka describes how Kafka and Cluster Linking have transformed the way government agencies share data: in real time, with faster onboarding of new data sets, real-time event notification, reduced cost for data sharing, and enhanced and enriched data sets for improved data quality.

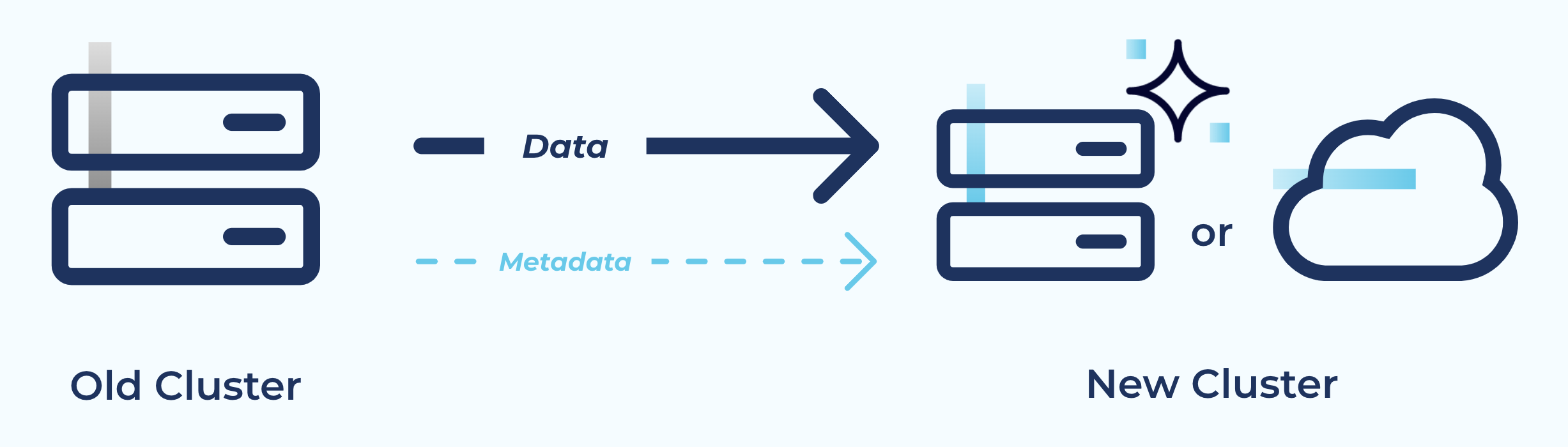

Cluster migration

Use Case: Seamlessly move from an on-premises Kafka or Confluent Platform cluster to a Confluent Cloud cluster, or from older infrastructure to new infrastructure, with low downtime and no data loss. Cluster Linking’s native offset preservation and consumer offset syncing allow every consumer application to switch from the old cluster to the new one when it’s ready. Topics can be migrated over one by one, or in a batch. Cluster Linking handles topic creation, configuration, and syncing.

Tutorial: Tutorial: Migrate Data with Cluster Linking on Confluent Platform

Customer Success Story: In SAS Powers Instant, Real-Time Omnichannel Marketing at Massive Scale with Confluent’s Hybrid Capabilities, the subtopic “A much easier migration thanks to Cluster Linking” describes how SAS used Cluster Linking to migrate to Confluent for Kubernetes and other cloud-native solutions.

Scaling Cluster Linking

Because Cluster Linking fetches data from source topics, the first scaling unit to inspect is the number of partitions in the source topics. Having enough partitions lets Cluster Linking mirror data in parallel. Having too few partitions can make Cluster Linking bottleneck on partitions that are more heavily used.

In a Confluent Platform or Apache Kafka® cluster, you can scale Cluster Linking throughput as follows:

On the cluster link configurations, change the number of fetcher threads or change the fetch size to get better batching.

Improve the cluster’s maximum throughput by scaling the brokers vertically or horizontally.

Use the options listed under Cluster Link Replication Configurations to tune cluster link performance, which helps scale cluster link throughput.

In Confluent Cloud, Cluster Linking scales with the ingress and egress quotas of your cluster. Cluster Linking is able to use all remaining bandwidth in a cluster’s throughput quota: 150 MB/s per CKU egress on a Confluent Cloud source cluster or 50 MB/s per CKU ingress on a Confluent Cloud destination cluster, whichever is hit first. Therefore, to scale Cluster Linking throughput, simply adjust the number of CKUs on either the source, the destination, or both.
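The “whichever is hit first” rule can be made concrete with a little arithmetic. The following sketch assumes both clusters are Confluent Cloud clusters and uses the per-CKU figures quoted above; the function name and the `*_in_use` parameters are hypothetical, added here only to model bandwidth already consumed by other clients.

```python
EGRESS_MBPS_PER_CKU = 150   # source-side egress figure quoted in this section
INGRESS_MBPS_PER_CKU = 50   # destination-side ingress figure quoted in this section


def link_throughput_ceiling(
    source_ckus: int,
    destination_ckus: int,
    source_egress_in_use: float = 0.0,
    destination_ingress_in_use: float = 0.0,
) -> float:
    """Estimate the bandwidth (MB/s) left for a cluster link.

    The link is limited by whichever quota has less headroom: egress on the
    source cluster or ingress on the destination cluster.
    """
    source_headroom = max(
        0.0, source_ckus * EGRESS_MBPS_PER_CKU - source_egress_in_use
    )
    destination_headroom = max(
        0.0, destination_ckus * INGRESS_MBPS_PER_CKU - destination_ingress_in_use
    )
    return min(source_headroom, destination_headroom)


if __name__ == "__main__":
    # 2 CKUs on the source (300 MB/s egress) and 4 CKUs on the destination
    # (200 MB/s ingress): the destination ingress quota is the limit.
    print(link_throughput_ceiling(2, 4))
```

With these figures, a destination cluster needs three times as many CKUs as the source before source egress becomes the limiting side.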

Note

On the destination cluster, Cluster Linking writes take lower priority than Kafka clients producing to that cluster; Cluster Linking will be throttled first.

Confluent proactively monitors all cluster links in Confluent Cloud and will perform tuning when necessary. If you find that your cluster link is not hitting these limits even after a full day of sustained traffic, contact Confluent Support.

To learn more, see recommended guidelines for Confluent Cloud.

Known limitations and best practices

When deleting a cluster link, first check that all mirror topics are in the STOPPED state. If any are in the PENDING_STOPPED state, deleting a cluster link can cause irrecoverable errors on those mirror topics due to a temporary limitation.

In Confluent Platform 7.1 and later, REST API calls to list and get source-initiated cluster links will have their destination cluster IDs returned under the parameter destination_cluster_id. (This is a change from previous releases, where these were returned under source_cluster_id.)

For Confluent Platform in general, you should not use unauthenticated listeners. For Cluster Linking, this is even more important because Cluster Linking can access the listeners. As a best practice, always configure authentication on listeners. To learn more, see the Enable Security for a ZooKeeper-Based Cluster in Confluent Platform, the Authentication Methods in Confluent Platform, and the listener configuration examples in the brokers for the various protocols such as Authenticate with SASL using JAAS in Confluent Platform and Encrypt and Authenticate with TLS in Confluent Platform. See also, Manage Security for Cluster Linking on Confluent Platform.
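The deletion precaution at the top of this list (all mirror topics must be STOPPED before a link is deleted) lends itself to a simple guard. This is a minimal sketch with a hypothetical helper: it assumes you have already collected each mirror topic's state into a dictionary by whatever tooling you use, and it does not call any Confluent API.

```python
from typing import Dict, List


def topics_blocking_link_deletion(mirror_states: Dict[str, str]) -> List[str]:
    """Return the mirror topics that are not yet in the STOPPED state.

    An empty result means the precondition described above is met.
    """
    return sorted(
        topic for topic, state in mirror_states.items() if state != "STOPPED"
    )


if __name__ == "__main__":
    # Hypothetical topic names and states, for illustration only.
    states = {"orders": "STOPPED", "payments": "PENDING_STOPPED"}
    blocking = topics_blocking_link_deletion(states)
    if blocking:
        print("Do not delete the link yet; waiting on:", blocking)
```

Treating every state other than STOPPED as blocking is a deliberately conservative choice for this sketch.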

All TLS/SSL key stores, trust stores and Kerberos keytab files must be stored at the same location on each broker in a given cluster. If not, cluster links may fail. Alternatively, you can configure a PEM certificate in-line on the cluster link configuration.

Cluster link configurations stored in files (TLS/SSL key stores, trust stores, Kerberos keytab files) should not be stored in /tmp because /tmp files may get deleted, leaving links and mirrors in a bad state on some brokers.

Confluent Control Center (Legacy) will only display mirror topics correctly if the Confluent Platform cluster and Control Center (Legacy) are connected to a REST Proxy API v3. If not connected to the v3 Confluent REST API, Control Center (Legacy) will display mirror topics as regular topics, which can lead to showing features that are not actually available on mirror topics; for example, producing messages or editing configurations. To learn how to configure these clusters for the v3 REST API, see Required Configurations for Control Center (Legacy).

Prerequisites are provided per tutorial or use case because these differ depending on the context. Tutorials are provided on topic data sharing and Tutorial: Link Confluent Platform and Confluent Cloud Clusters. Additional requirements for secure setups are provided in Manage Security for Cluster Linking on Confluent Platform.

Cluster Linking has not yet been fully tested to mirror topics that contain records produced using the Kafka transactions feature. Therefore, using Cluster Linking to mirror such topics is not supported and not recommended.

Cluster Linking for Confluent Platform between a source cluster running Confluent Platform 7.0.x or earlier (non-KRaft) and a destination cluster running in KRaft mode is not supported. Link creation may succeed, but the connection will ultimately fail (with a SOURCE_UNAVAILABLE error message). To work around this issue, make sure the source cluster is running Confluent Platform version 7.1.0 or later. If you have links from a Confluent Platform source cluster to a Confluent Cloud destination cluster, you must upgrade your source clusters to Confluent Platform 7.1.0 or later to avoid this issue.

ACL migration (ACL sync), previously available in Confluent Platform 6.0.0 through 6.2.x, was removed in Confluent Platform 7.0.0 due to a security vulnerability, then re-introduced in Confluent Platform 7.1.0 with the vulnerability resolved. If you are using ACL migration in your pre-7.1.0 deployments, you should disable it or upgrade to 7.1.x. To learn more, see Authorization (ACLs).

Any customer-owned firewall that allows the cluster link connection from source cluster brokers to destination cluster brokers must allow the TCP connection to persist in order for Cluster Linking to work.

Prefixing is not supported in 7.1.0. For more information, see the note at the top of this section: Prefix Mirror Topics and Consumer Group Names.

Cluster Linking cannot replicate messages that use the v0 or v1 message format from the earliest versions of Kafka. Cluster Linking can replicate messages in the v2 format (introduced in Apache Kafka® v0.11) and later. If Cluster Linking encounters a message with the v0 or v1 format, it will fail that mirror topic; that is, it will transition to a FAILED state and stop replication for that topic. To replicate a topic that contains messages in the v0 or v1 format, either begin replication for that topic after the last message in the v0 or v1 format, using the cluster link configuration mirror.start.offset.spec, or use Confluent Replicator to replicate topics and messages.

An issue exists where consumer group offsets that are deleted on the destination cluster (especially auto-deleted) persist, instead of being removed as expected. (Under the hood, the offsets are being re-replicated to the destination before retention settings delete the offsets from the source. This results in extended retention of inactive consumer group offsets.) To prevent this from happening, you can extend retention on the destination to make sure data is deleted on the source before it is deleted on the destination. To do this, increase offsets.retention.minutes on the destination cluster by at least double offsets.retention.check.interval.ms.
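The retention adjustment in the last item mixes units (minutes and milliseconds), so a small calculation may help. This sketch makes two assumptions that the text does not spell out: the baseline is the offsets.retention.minutes value you would otherwise use on the destination, and the increase is rounded up to whole minutes. The function name is hypothetical.

```python
import math


def min_destination_offsets_retention_minutes(
    current_retention_minutes: int, check_interval_ms: int
) -> int:
    """Return offsets.retention.minutes raised by twice the check interval.

    The check interval is given in milliseconds, so it is converted to
    minutes and rounded up before being added.
    """
    extra_minutes = math.ceil(2 * check_interval_ms / 60_000)
    return current_retention_minutes + extra_minutes


if __name__ == "__main__":
    # Apache Kafka defaults: offsets.retention.minutes=10080 (7 days) and
    # offsets.retention.check.interval.ms=600000 (10 minutes).
    print(min_destination_offsets_retention_minutes(10080, 600_000))
```

With the Kafka defaults shown in the comment, the increase is 20 minutes; any larger value on the destination also satisfies the “at least” condition.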