Important

You are viewing documentation for an older version of Confluent Platform.

Confluent Control Center

Confluent Control Center is a web-based tool for managing and monitoring Apache Kafka®. Control Center facilitates building and monitoring production data pipelines and streaming applications.

- Clusters home page

- View healthy and unhealthy clusters at a glance, search for a cluster being managed by Control Center, and click on a cluster tile to drill into the Brokers overview of critical metrics.

- Alerts

- Define the trigger criteria to detect anomalous events in monitoring data and perform actions that trigger an alert when those events occur. Set triggers, actions, and view alert history across all clusters being managed by Control Center. Configure notification actions for email, Slack, and PagerDuty. Pause and resume alert actions across all clusters when necessary.

- Brokers overview

- View essential Kafka metrics for brokers in a cluster.

- Topics

- Add and edit topics, view production and consumption metrics for a topic, browse and download messages, manage Schema Registry for a topic, and edit topic configuration settings.

- Connect

- Use Control Center to manage and monitor Kafka Connect, the toolkit for connecting external systems to Kafka. You can easily add new sources to load data from external data systems and new sinks to write data into external data systems. Additionally, you can manage, monitor, and configure connectors with Control Center. View the status of each connector and its tasks.

- ksqlDB

- Use Control Center to develop applications against ksqlDB, the streaming SQL engine for Kafka. You can apply schemas to your topics and treat them as streams or tables, and then author real-time queries against them to build applications. Use the ksqlDB GUI in Control Center to run, persist, view, and terminate SQL queries; browse and download messages from query results; add, describe, and drop streams and tables using the web interface; and view or search for the schemas of available streams and tables in a ksqlDB cluster.

- Consumers

- View all the consumer groups associated with a selected Kafka cluster, including the number of consumers per group and the number of topics being consumed. View consumer lag across all relevant topics. The Consumers feature contains the redesigned streams monitoring page.

- Data Streams

- Use Control Center to monitor your data streams end-to-end, from producer to consumer. Use Control Center to verify that every message sent is received (and received only once), and to measure system performance end-to-end. Drill down to better understand cluster usage, and identify any problems.

- System Health

- Control Center can monitor the health of your Kafka clusters. View trends for important broker and topic health metrics, and set alerts on important cluster key performance indicators (KPIs).

Architecture

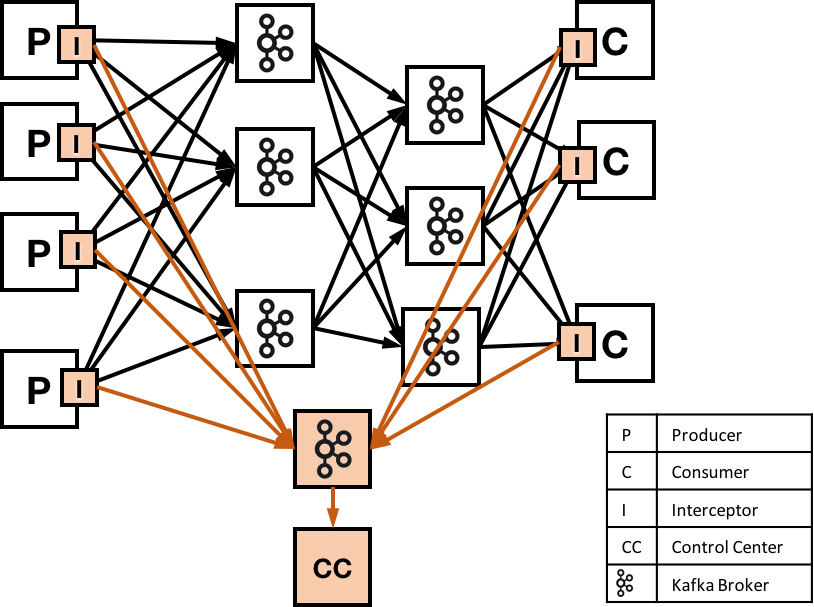

Control Center consists of these parts:

- Metrics interceptors that collect metric data on clients (producers and consumers).

- Kafka to move metric data.

- The Control Center application server for analyzing stream metrics.

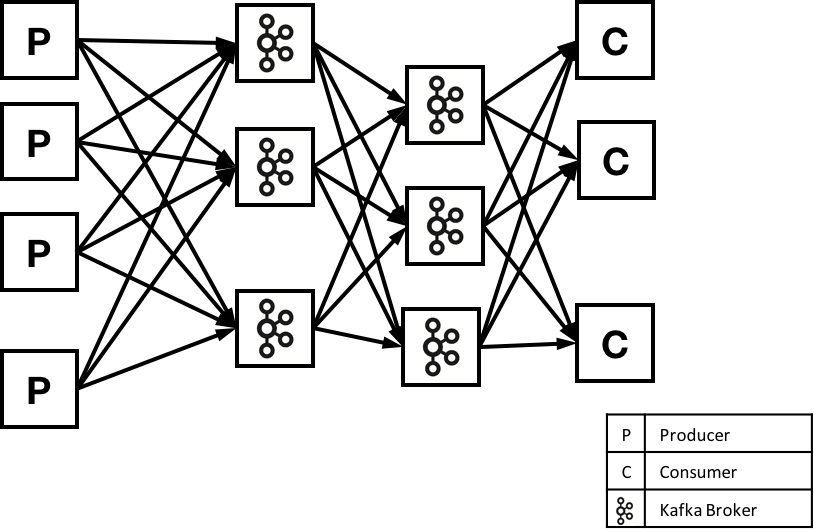

Here is a common Kafka environment that uses Kafka to transport messages from a set of producers to a set of consumers that are in different data centers, and uses Replicator to copy data from one cluster to another:

Confluent Control Center helps you detect any issues when moving data, including any late, duplicate, or lost messages. By adding lightweight code to clients, stream monitoring can count every message sent and received in a streaming application. By using Kafka to send metrics information, stream monitoring metrics are transmitted quickly and reliably to the Control Center application.
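The idea of counting every message sent and received can be illustrated with a small comparison of per-window counts. This is only a simplified sketch: the function name and the labels it returns are hypothetical, and Control Center's actual detection logic is not described here.

```python
def classify_delivery(produced: int, consumed: int) -> str:
    """Compare the number of messages a producer sent in a time window
    with the number a consumer group received for the same window.

    Hypothetical helper for illustration only.
    """
    if produced < 0 or consumed < 0:
        raise ValueError("message counts must be non-negative")
    if consumed < produced:
        # Fewer messages received than sent: some are late or lost.
        return "missing"
    if consumed > produced:
        # More messages received than sent: some were delivered more than once.
        return "duplicated"
    return "ok"


if __name__ == "__main__":
    for sent, received in [(100, 100), (100, 97), (100, 103)]:
        print(sent, received, classify_delivery(sent, received))
```

A count comparison alone cannot distinguish a late message from a lost one; that depends on how long you wait before treating a window as final.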

Time windows and metrics

Stream monitoring is designed to efficiently audit the set of messages that are sent and received. To do this, Control Center uses a set of techniques to measure and verify delivery.

The interceptors work by collecting metrics on messages produced or consumed on each client, and sending these to Control Center for analysis and reporting. Interceptors use Kafka message timestamps to group messages. Specifically, the interceptors collect metrics during a one-minute time window based on this timestamp. You can calculate the start of the window with a function like floor(messageTimestamp / 60) * 60, where messageTimestamp is expressed in seconds. Metrics are collected for each combination of producer, consumer group, consumer, topic, and partition. Currently, metrics include a message count and cumulative checksum for producers and consumers, and latency information from consumers.
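The windowing and grouping described above can be sketched as follows. This is a minimal illustration, not the interceptors' implementation: the function names, the record tuple layout, and the choice of an additive CRC32 checksum are all assumptions. Because Kafka record timestamps are epoch milliseconds, the sketch uses a 60,000 ms window, which is the same floor calculation expressed in milliseconds.

```python
import zlib
from collections import defaultdict

WINDOW_MS = 60_000  # one-minute window, in milliseconds


def window_start_ms(timestamp_ms: int) -> int:
    """Round a message timestamp down to the start of its one-minute window."""
    return (timestamp_ms // WINDOW_MS) * WINDOW_MS


def aggregate(records):
    """Group records by (client, topic, partition, window) and keep a
    message count and a cumulative checksum for each group.

    `records` is an iterable of hypothetical tuples:
    (client_id, topic, partition, timestamp_ms, payload_bytes).
    """
    metrics = defaultdict(lambda: {"count": 0, "checksum": 0})
    for client_id, topic, partition, timestamp_ms, payload in records:
        key = (client_id, topic, partition, window_start_ms(timestamp_ms))
        entry = metrics[key]
        entry["count"] += 1
        # Assumed checksum: sum of per-message CRC32 values modulo 2**32,
        # so the result does not depend on arrival order.
        entry["checksum"] = (entry["checksum"] + zlib.crc32(payload)) & 0xFFFFFFFF
    return dict(metrics)


if __name__ == "__main__":
    sample = [
        ("producer-1", "orders", 0, 125_000, b"a"),
        ("producer-1", "orders", 0, 130_000, b"b"),
        ("producer-1", "orders", 0, 185_000, b"c"),
    ]
    for key, value in aggregate(sample).items():
        print(key, value)
```

If a producer and a consumer both aggregate the same messages this way, matching counts and checksums for a window suggest that the same set of messages was sent and received.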

Latency and system clock implications

Latency is measured by calculating the difference between the system clock time on the consumer and the timestamp in the message. In a distributed environment, it can be difficult to keep clocks synchronized. If the clock on the consumer is running faster than the clock on the producer, then Control Center might show latency values that are higher than the true values. If the clock on the consumer is running slower than the clock on the producer, then Control Center might show latency values that are lower than the true values (and in the worst case, negative values).
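The effect of clock skew on the reported value can be illustrated with a short calculation. This sketch assumes the message timestamp is set from the producer's clock; the function and parameter names are hypothetical, and an offset is how far a machine's clock is ahead of true time (negative if it is behind).

```python
def observed_latency_ms(
    true_latency_ms: float,
    consumer_clock_offset_ms: float,
    producer_clock_offset_ms: float = 0.0,
) -> float:
    """Latency as it would be measured: consumer clock time at receipt
    minus the timestamp written into the message by the producer."""
    # The consumer reads (true receive time + consumer offset); the message
    # carries (true send time + producer offset). Their difference is the
    # true latency plus the difference between the two clock offsets.
    return true_latency_ms + consumer_clock_offset_ms - producer_clock_offset_ms


if __name__ == "__main__":
    # Consumer clock 50 ms fast: reported latency is too high.
    print(observed_latency_ms(100, 50))
    # Consumer clock 150 ms slow: reported latency goes negative.
    print(observed_latency_ms(100, -150))
```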

If your clocks are out of sync, you might notice some unexpected results in Confluent Control Center. Confluent recommends using a mechanism like NTP to synchronize time between production machines; this can help keep clocks synchronized to within 20 ms over the public internet, and to within 1 ms for servers on the same local network.

Tip

NTP practical example: In an environment where messages take one second or more to be produced and consumed, and NTP is used to synchronize clocks between machines, the latency information should be accurate to within 2%.
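One way to read the 2% figure is as the ratio of clock error to true latency. The following sketch assumes the figure corresponds to the 20 ms synchronization bound quoted for the public internet and a latency of one second; the function name is hypothetical.

```python
def relative_latency_error(latency_ms: float, clock_error_ms: float) -> float:
    """Worst-case relative error in measured latency for a given clock error."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return clock_error_ms / latency_ms


if __name__ == "__main__":
    # 20 ms of clock error on a 1000 ms latency is a 2% error.
    print(f"{relative_latency_error(1000, 20):.1%}")
    # The same clock error on a longer latency is proportionally smaller.
    print(f"{relative_latency_error(5000, 20):.1%}")
```

Under the same assumption, servers synchronized to within 1 ms on a local network would see a proportionally smaller error.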
