Important

You are viewing documentation for an older version of Confluent Platform.

Write Streaming Queries Against Apache Kafka® Using ksqlDB and Confluent Control Center

You can use ksqlDB in Confluent Control Center to write streaming queries against messages in Kafka.

Prerequisites:

- Confluent Platform is installed and running. This installation includes a Kafka broker, ksqlDB, Control Center, ZooKeeper, Schema Registry, REST Proxy, and Connect.

- If you installed Confluent Platform using TAR or ZIP, navigate into the installation directory. The paths and commands used throughout this tutorial assume that you are in this installation directory, indicated as $CONFLUENT_HOME.

- Consider installing the Confluent CLI to start a local installation of Confluent Platform.

- Java: Minimum version 1.8. Install Oracle Java JRE or JDK >= 1.8 on your local machine.

Create Topics and Produce Data

Create and produce data to the Kafka topics pageviews and users. These steps use the ksqlDB datagen tool that is included with Confluent Platform.

Create the pageviews topic and produce data using the data generator. The following example continuously generates data with a value in DELIMITED format.

$CONFLUENT_HOME/bin/ksql-datagen quickstart=pageviews format=delimited topic=pageviews msgRate=5

Produce Kafka data to the users topic using the data generator. The following example continuously generates data with a value in JSON format.

$CONFLUENT_HOME/bin/ksql-datagen quickstart=users format=json topic=users msgRate=1
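To make the difference between the two value formats concrete, the following minimal sketch serializes one hypothetical record each way. The pageviews field names match the schema used later in this tutorial. The users field names (registertime, userid, regionid, gender) and all sample values are assumptions for illustration, not output captured from ksql-datagen.

```python
import json

# Field order for the pageviews value, matching the schema used later in this tutorial.
PAGEVIEW_FIELDS = ["viewtime", "userid", "pageid"]


def to_delimited(record, fields):
    """Serialize a record as one comma-separated line, in the given field order."""
    return ",".join(str(record[name]) for name in fields)


def to_json(record):
    """Serialize a record as a JSON object, so field names travel with the values."""
    return json.dumps(record)


# Hypothetical sample records (assumed values, not real ksql-datagen output).
pageview = {"viewtime": 1, "userid": "User_1", "pageid": "Page_10"}
user = {"registertime": 1500000000000, "userid": "User_1",
        "regionid": "Region_1", "gender": "FEMALE"}

print(to_delimited(pageview, PAGEVIEW_FIELDS))
print(to_json(user))
```

A DELIMITED value carries no field names, which is why a stream declared over such a topic must list its columns in the same order as the values.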

Tip

You can also produce Kafka data using the kafka-console-producer CLI provided with Confluent Platform.

Launch the ksqlDB CLI

To launch the CLI, run the following command. It sets the LOG_DIR environment variable for the CLI process, which routes the CLI logs to the ./ksql_logs directory, relative to your current directory. By default, the CLI looks for a ksqlDB Server running at http://localhost:8088.

LOG_DIR=./ksql_logs $CONFLUENT_HOME/bin/ksql

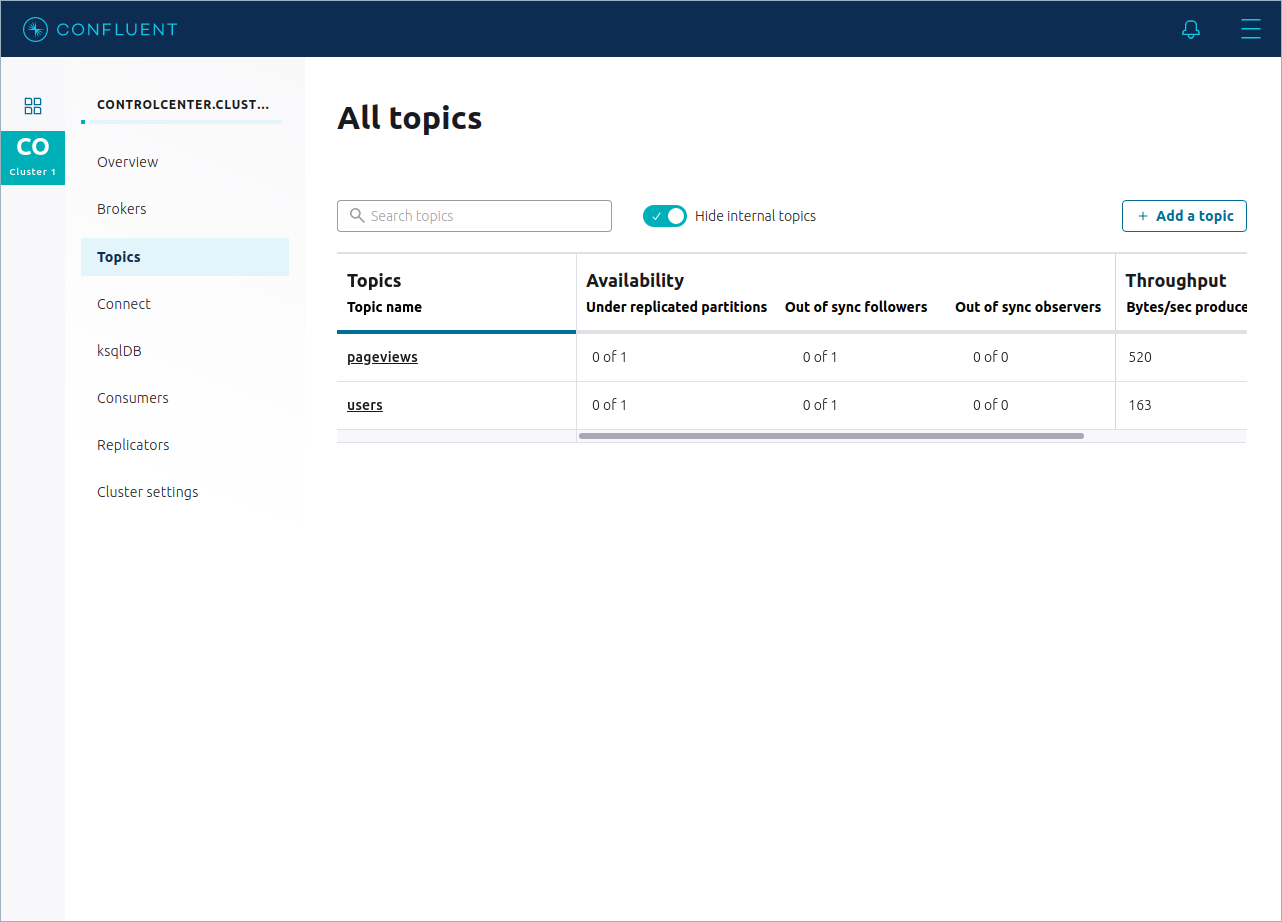
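If the CLI cannot connect, it can help to check that a ksqlDB Server is actually listening at the default address. The sketch below queries the server's /info REST endpoint using only the Python standard library. It assumes the default URL from this tutorial and a response body with a top-level KsqlServerInfo object; the helper names are hypothetical.

```python
import json
import urllib.request


def parse_server_info(body):
    """Extract (version, service ID) from an /info response body."""
    info = json.loads(body)["KsqlServerInfo"]
    return info.get("version"), info.get("ksqlServiceId")


def fetch_server_info(base_url="http://localhost:8088"):
    """Call GET /info on a running ksqlDB Server (assumed default address)."""
    with urllib.request.urlopen(base_url + "/info", timeout=5) as response:
        return parse_server_info(response.read())


if __name__ == "__main__":
    try:
        print(fetch_server_info())
    except (OSError, ValueError, KeyError) as error:
        # Connection failures raise URLError, a subclass of OSError.
        print("ksqlDB Server not reachable or unexpected response:", error)
```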

Inspect Topics By Using Control Center

Open your browser to http://localhost:9021/. Confluent Control Center opens, showing the Home page for your clusters. In the navigation bar, click the cluster that you want to use with ksqlDB.

In the navigation menu, click Topics to view the pageviews and users topics that you created previously.

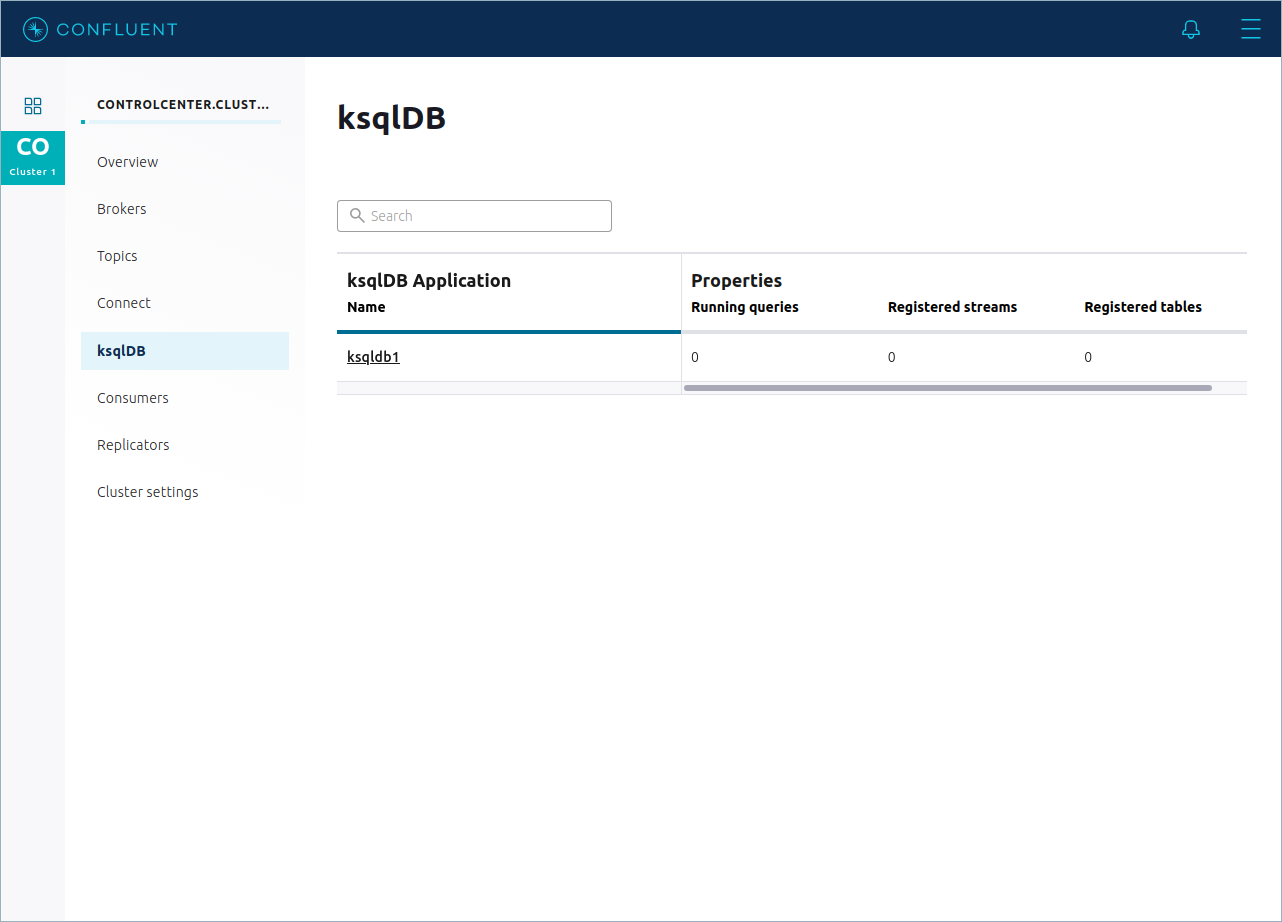

Inspect Topics By Using ksqlDB in Control Center

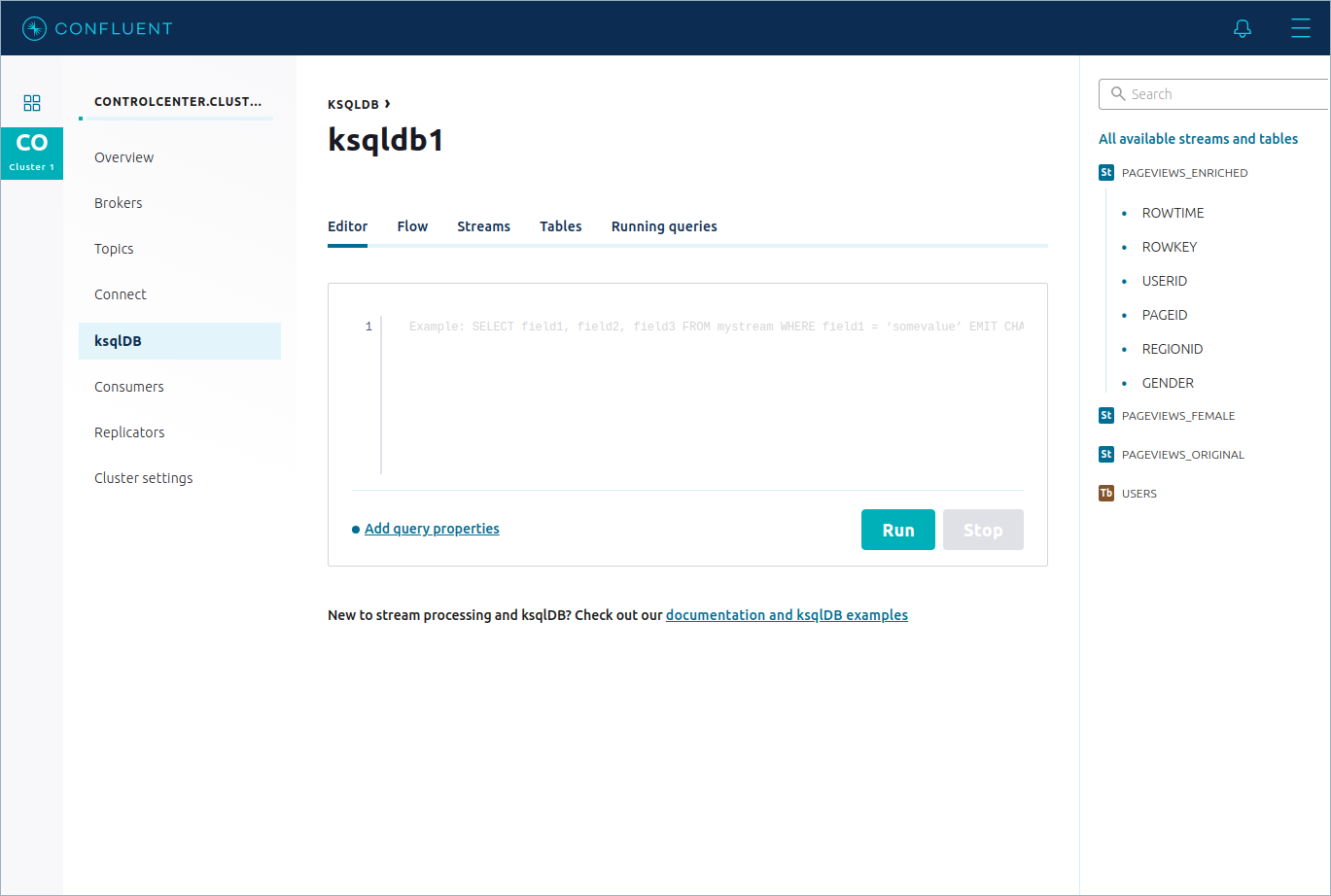

In the cluster submenu, click ksqlDB to open the ksqlDB clusters page, and click the default application to open the ksqlDB Editor.

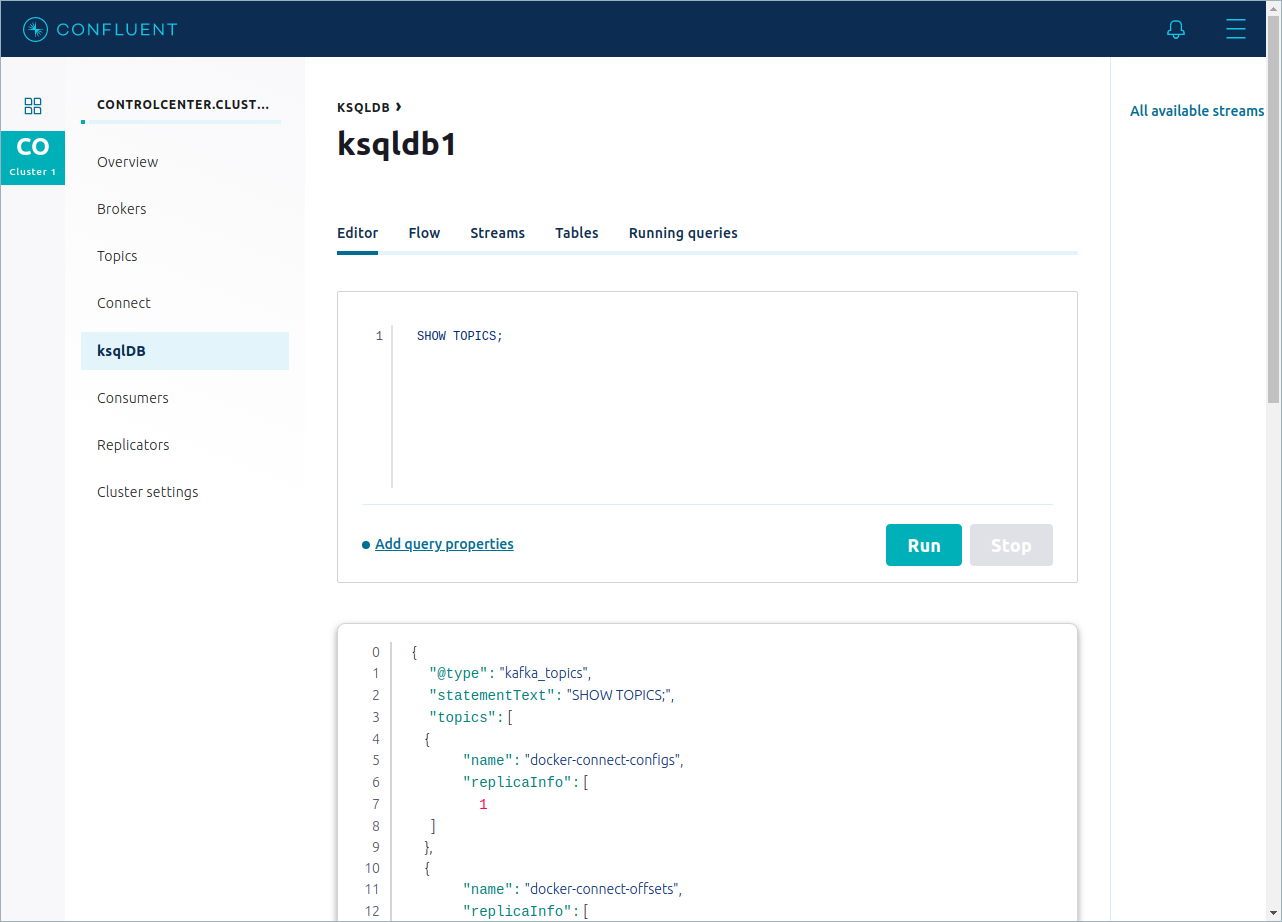

In the editing window, use the SHOW TOPICS statement to see the available topics on the Kafka cluster. Click Run to start the query.

SHOW TOPICS;

In the Query Results window, scroll to the bottom to view the pageviews and users topics that you created previously. Your output should resemble:

{ "name": "pageviews", "replicaInfo": [ 1 ] },
{ "name": "users", "replicaInfo": [ 1 ] }

To see the count of consumers and consumer groups, use the SHOW TOPICS EXTENDED command.
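The same statement can also be submitted outside the editor through the ksqlDB Server REST API. The following is a minimal sketch, not a definitive client: it assumes the server address used by the CLI above, and it assumes that the response is a JSON array whose entries carry a topics list shaped like the output shown above. The function names are hypothetical.

```python
import json
import urllib.request


def topic_names(response_body):
    """Collect topic names from a /ksql response to SHOW TOPICS (assumed shape)."""
    names = []
    for entity in json.loads(response_body):
        for topic in entity.get("topics", []):
            names.append(topic["name"])
    return names


def show_topics(base_url="http://localhost:8088"):
    """POST the SHOW TOPICS statement to a running ksqlDB Server."""
    body = json.dumps({"ksql": "SHOW TOPICS;", "streamsProperties": {}})
    request = urllib.request.Request(
        base_url + "/ksql",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/vnd.ksql.v1+json; charset=utf-8"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return topic_names(response.read())


# Parsing a hand-written sample response, so no server is needed for this part.
sample = json.dumps([{
    "statementText": "SHOW TOPICS;",
    "topics": [
        {"name": "pageviews", "replicaInfo": [1]},
        {"name": "users", "replicaInfo": [1]},
    ],
}])
print(topic_names(sample))
```

Calling show_topics() requires a running server, so the sketch only defines it and exercises the parsing step on sample data.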

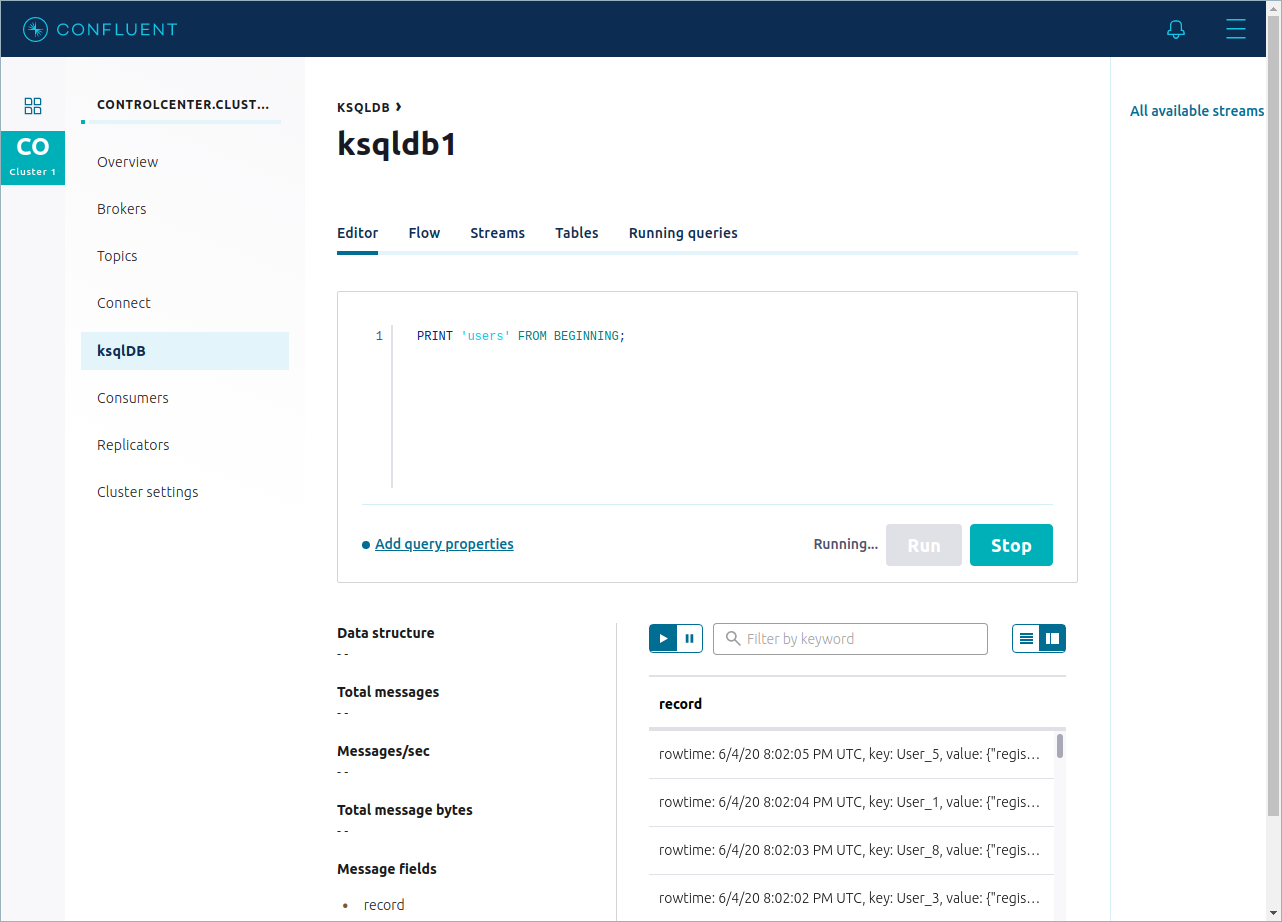

In the editing window, use the PRINT TOPIC statement to inspect the records in the users topic. Click Run to start the query.

PRINT 'users' FROM BEGINNING;

Your output should resemble:

The query continues until you end it explicitly. Click Stop to end the query.

Create a Stream and Table

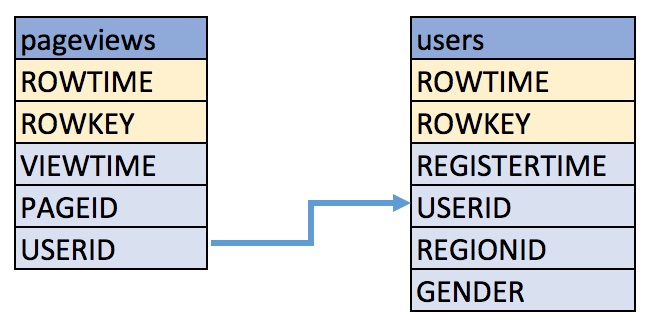

To write streaming queries against the pageviews and users topics, register the topics with ksqlDB as a stream and a table. You can use the CREATE STREAM and CREATE TABLE statements in the ksqlDB Editor, or you can use the Control Center UI.

These examples query records from the pageviews and users topics using the following schema.

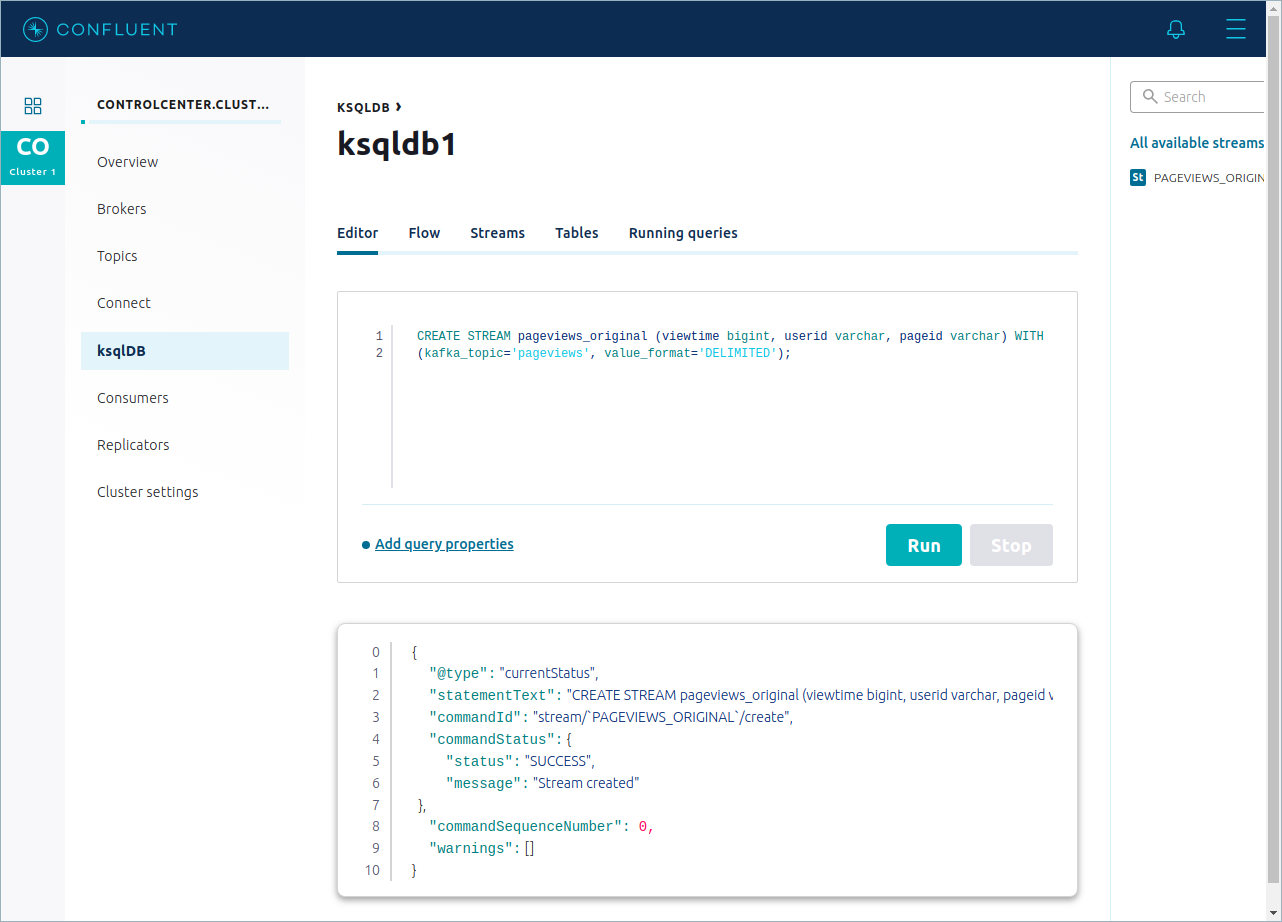

Create a Stream in the ksqlDB Editor

You can create a stream or table by using the CREATE STREAM and CREATE TABLE statements in ksqlDB Editor, just like you use them in the ksqlDB CLI.

Copy the following code into the editing window and click Run.

CREATE STREAM pageviews_original (viewtime bigint, userid varchar, pageid varchar) WITH (kafka_topic='pageviews', value_format='DELIMITED');

Your output should resemble:

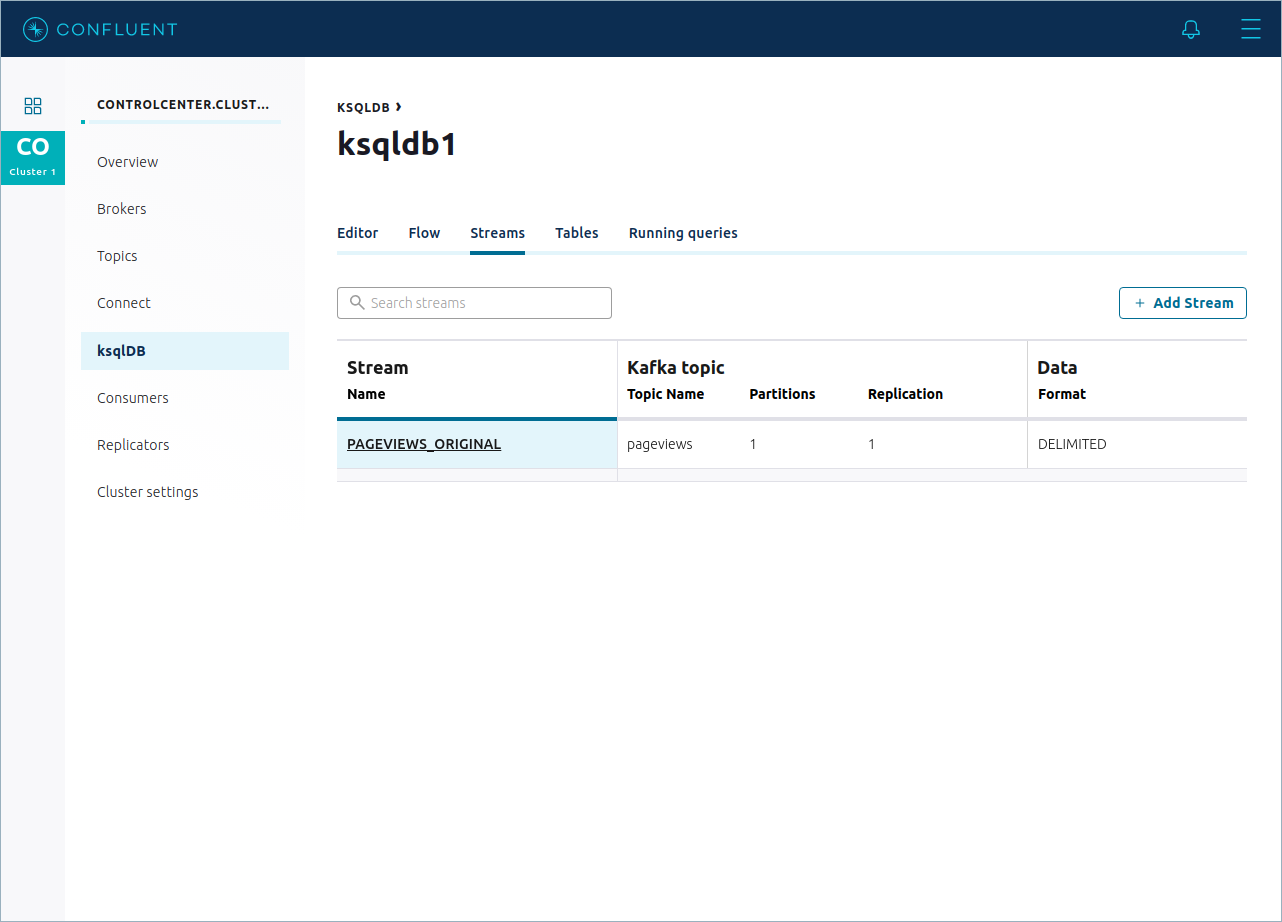

Click Streams to inspect the pageviews_original stream that you created.

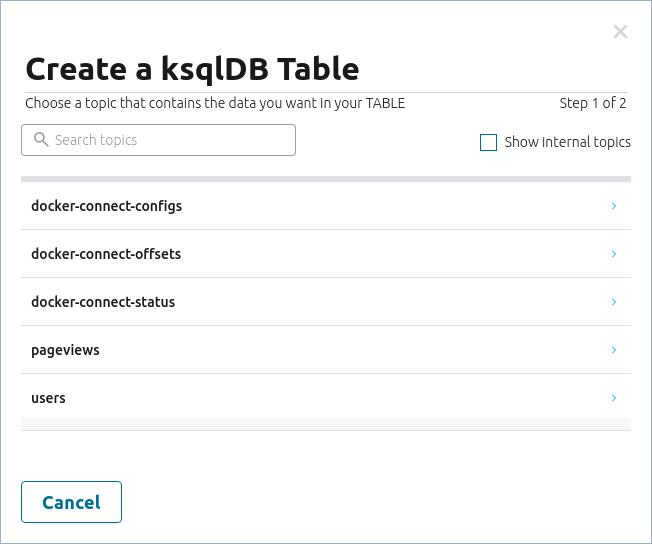

Create a Table in the Control Center UI

Confluent Control Center guides you through the process of registering a topic as a stream or a table.

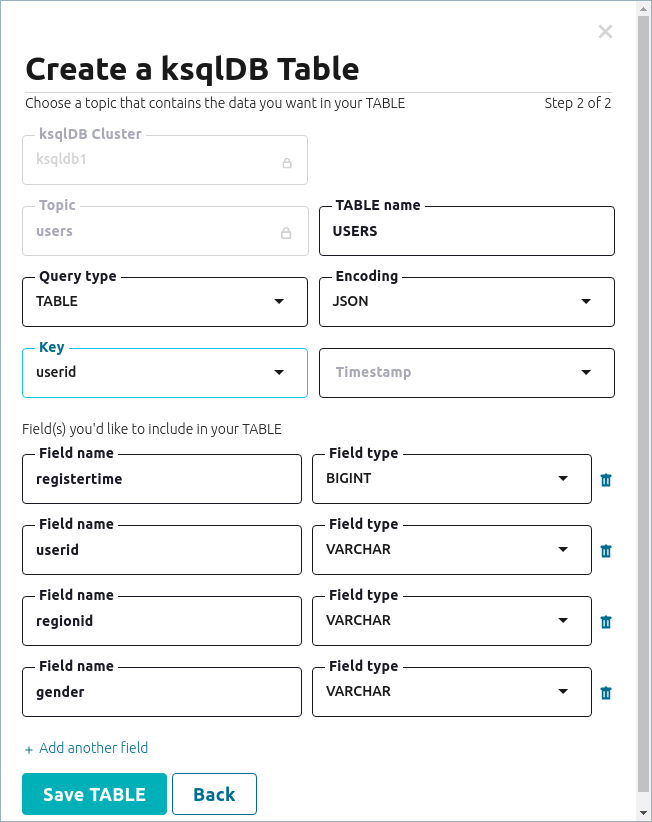

In the ksqlDB Editor, navigate to Tables and click Add a table. The Create a ksqlDB Table dialog opens.

Click users to fill in the details for the table. ksqlDB infers the table schema and displays the field names and types from the topic. You need to choose a few more settings.

- In the Encoding dropdown, select JSON.

- In the Key dropdown, select userid.

Click Save Table to create a table on the users topic.

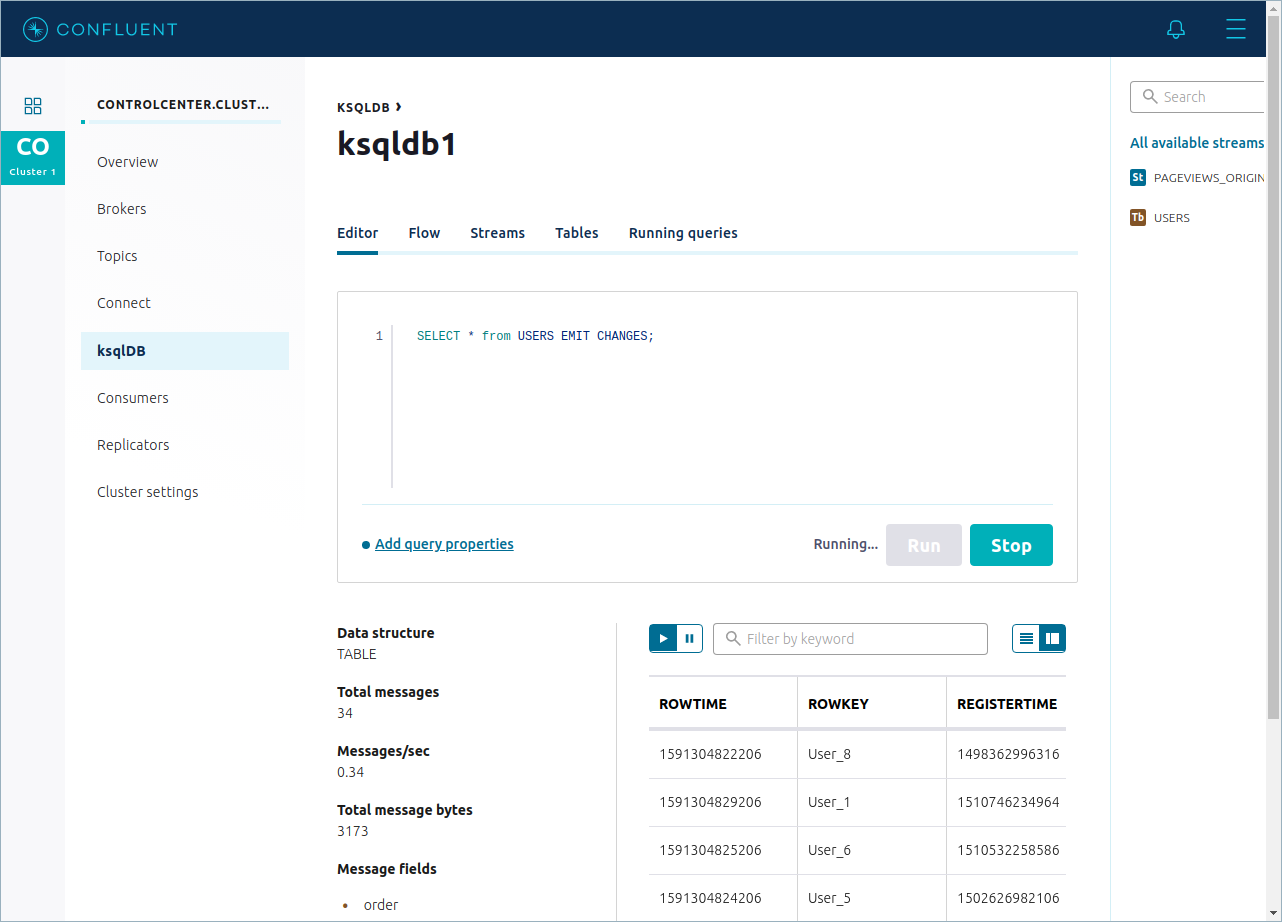

The ksqlDB Editor opens with a suggested query.

The Query Results pane displays query status information, like Messages/sec, and it shows the fields that the query returns.

The query continues until you end it explicitly. Click Stop to end the query.

Write Persistent Queries

With the pageviews topic registered as a stream, and the users topic registered as a table, you can write streaming queries that run until you end them with the TERMINATE statement.

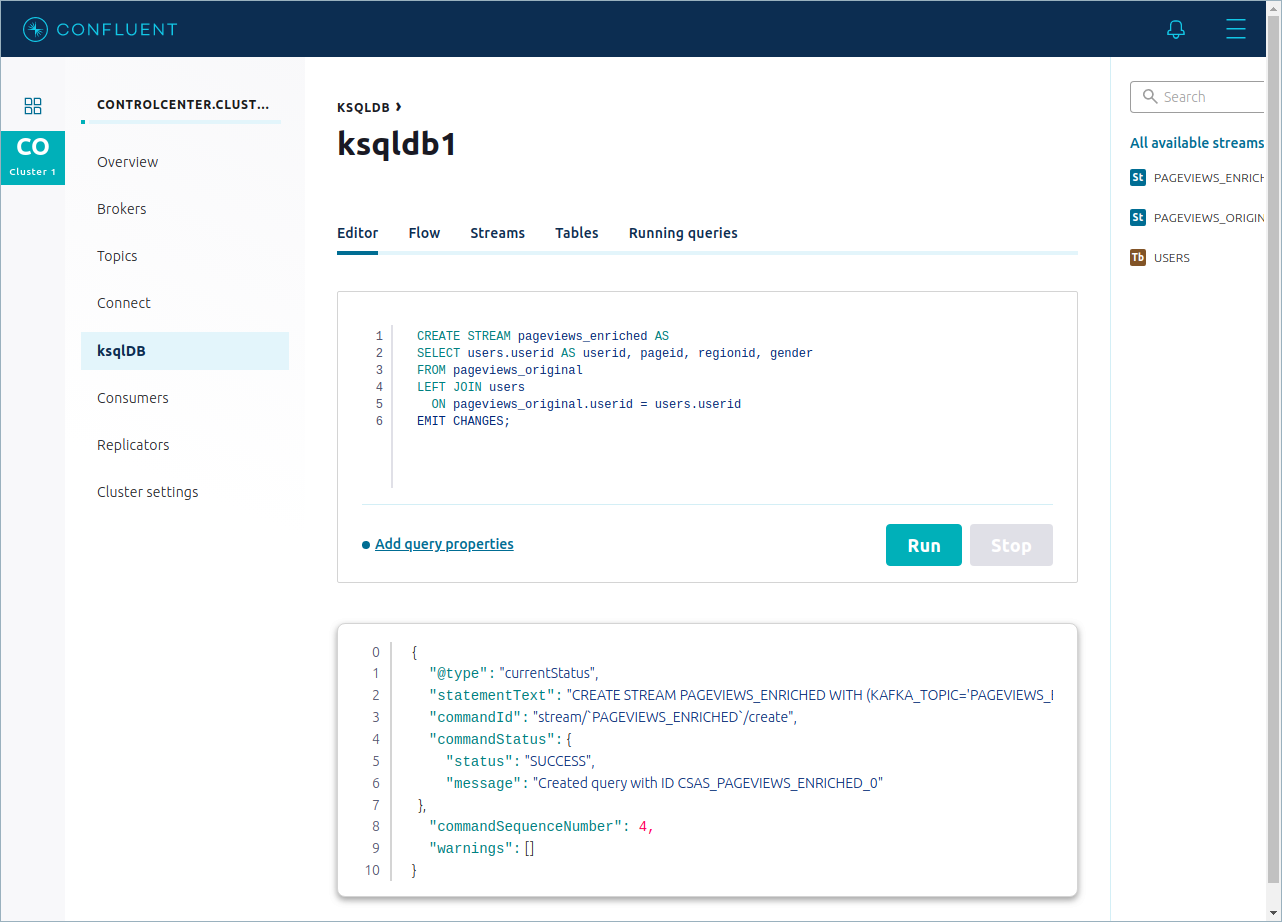

Copy the following code into the editing window and click Run.

CREATE STREAM pageviews_enriched AS SELECT users.userid AS userid, pageid, regionid, gender FROM pageviews_original LEFT JOIN users ON pageviews_original.userid = users.userid EMIT CHANGES;

Your output should resemble:

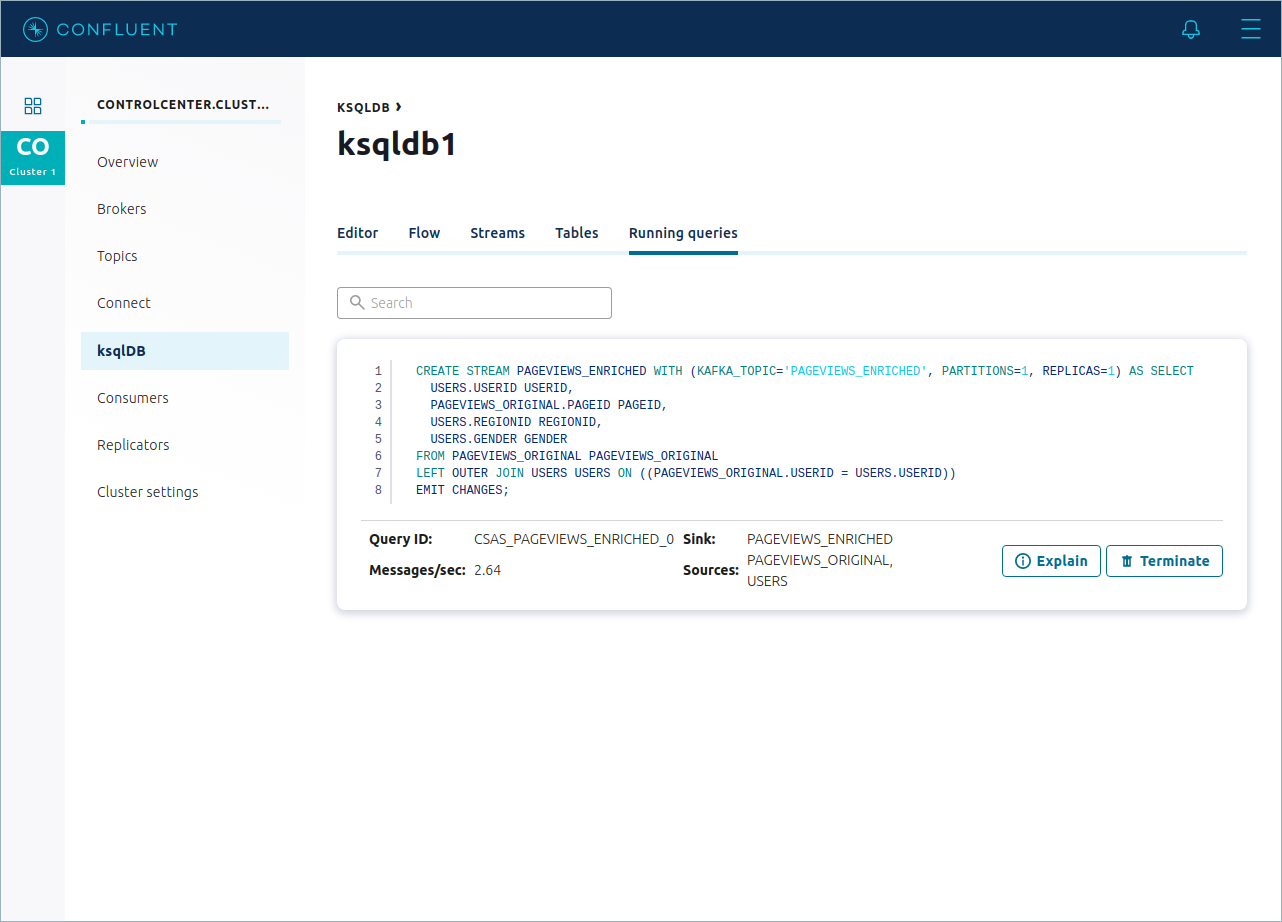
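The join in the pageviews_enriched query can be pictured as a lookup: each pageview event is matched against the current row for its userid in the users table. The sketch below is a simplified model of that behavior in plain Python, not how ksqlDB implements it. It ignores timing and partitioning, and the sample rows are assumed values.

```python
def enrich(pageview, users_table):
    """Model a LEFT JOIN of one pageview against the users table, keyed by userid."""
    user = users_table.get(pageview["userid"])  # None when no user row matches
    return {
        # The query selects users.userid, so an unmatched row yields None here too.
        "userid": user["userid"] if user else None,
        "pageid": pageview["pageid"],
        "regionid": user["regionid"] if user else None,
        "gender": user["gender"] if user else None,
    }


# Hypothetical table contents: the latest row for each userid key.
users_table = {
    "User_1": {"userid": "User_1", "regionid": "Region_1", "gender": "FEMALE"},
}

matched = enrich({"viewtime": 1, "userid": "User_1", "pageid": "Page_10"}, users_table)
unmatched = enrich({"viewtime": 2, "userid": "User_9", "pageid": "Page_11"}, users_table)
print(matched)
print(unmatched)
```

Because the join is a LEFT JOIN, a pageview with no matching user still produces an output row, with the user columns left empty.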

To inspect your persistent queries, navigate to the Running Queries page, which shows details about the pageviews_enriched stream that you created in the previous query.

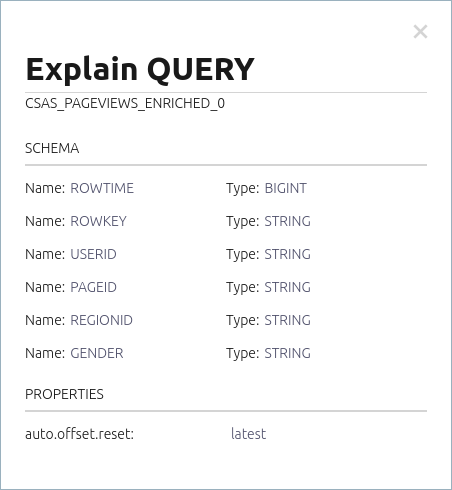

Click Explain to see the schema and query properties for the persistent query.

Monitor Persistent Queries

You can monitor your persistent queries visually by using Confluent Control Center.

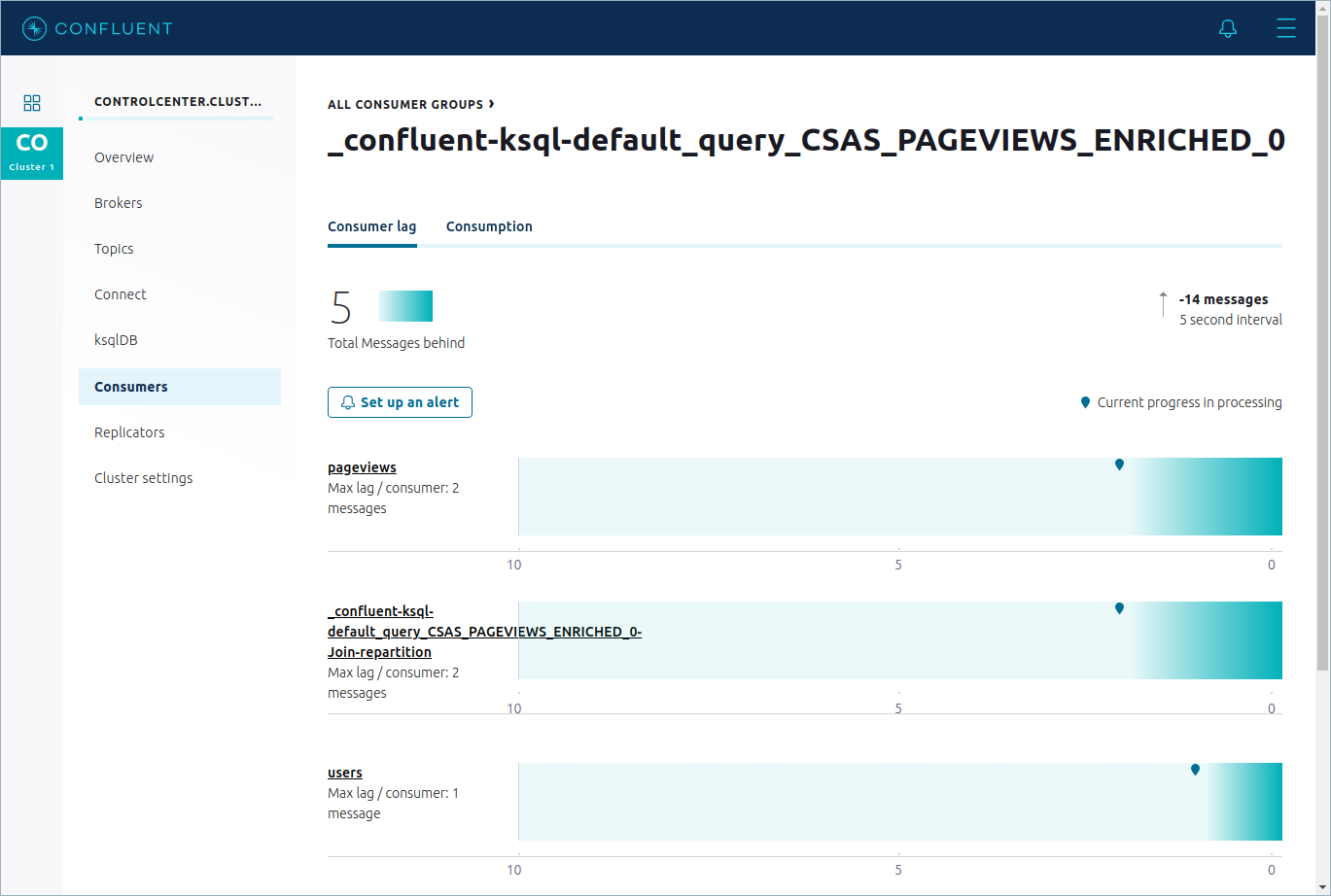

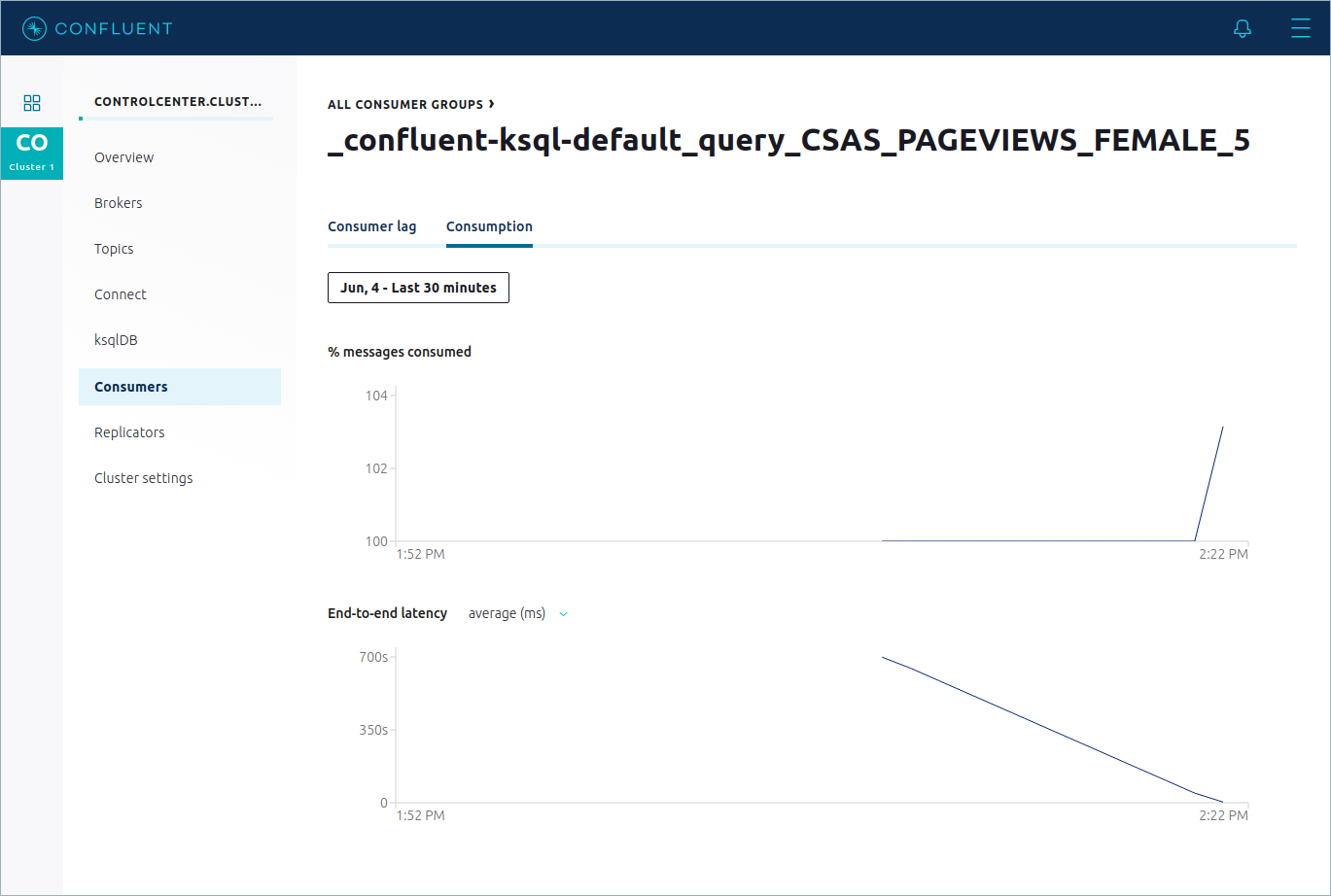

In the cluster submenu, click Consumers and find the consumer group for the pageviews_enriched query, which is named _confluent-ksql-default_query_CSAS_PAGEVIEWS_ENRICHED_0. The Consumer lag page opens.

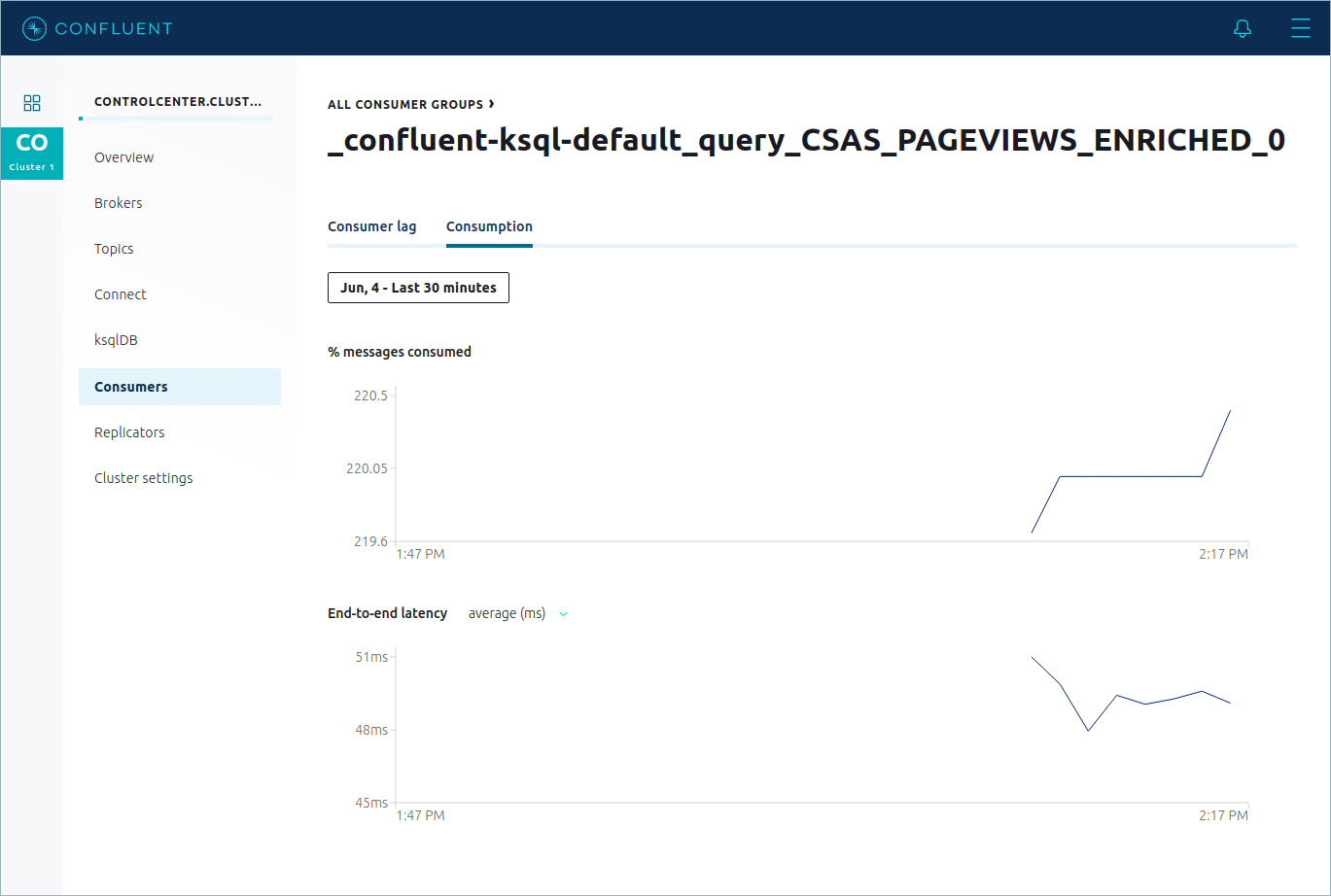
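The consumer group names in this tutorial follow a visible pattern: a fixed prefix, the ksqlDB service ID, the word query, and the query ID. The helper below is a hypothetical sketch of that pattern, inferred from the names shown in this tutorial. It assumes the service ID is default_, which may differ on your server.

```python
def consumer_group_for(query_id, service_id="default_"):
    """Build the consumer group name for a persistent query (pattern inferred from this tutorial)."""
    return "_confluent-ksql-" + service_id + "query_" + query_id


print(consumer_group_for("CSAS_PAGEVIEWS_ENRICHED_0"))
```

This can be handy when searching the Consumers page for a query whose ID you already know from the Running Queries page.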

Click Consumption to see the rate at which the pageviews_enriched query is consuming records. Change the time scale from Last 4 hours to Last 30 minutes.

Your output should resemble:

Query Properties

You can assign properties in the ksqlDB Editor before you run your queries.

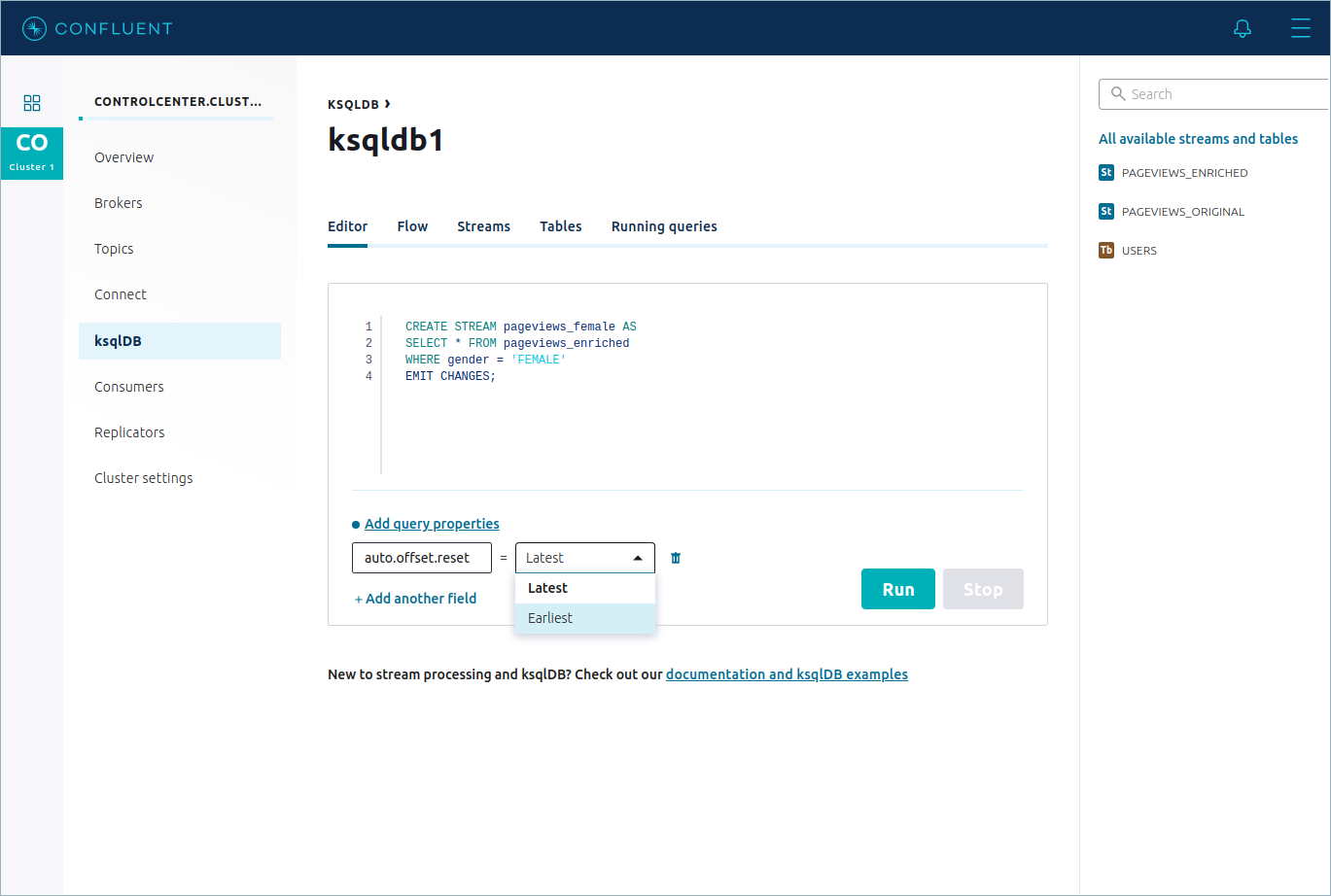

In the cluster submenu, click ksqlDB to open the ksqlDB clusters page, and click the default application to open the ksqlDB Editor.

Click Add query properties and set the auto.offset.reset field to Earliest.

Copy the following code into the editing window and click Run.

CREATE STREAM pageviews_female AS SELECT * FROM pageviews_enriched WHERE gender = 'FEMALE' EMIT CHANGES;

The pageviews_female stream starts with the earliest record in the pageviews topic, which means that it consumes all of the available records from the beginning.

Confirm that the auto.offset.reset property was applied to the pageviews_female stream. In the cluster submenu, click Consumers and find the consumer group for the pageviews_female stream, which is named _confluent-ksql-default_query_CSAS_PAGEVIEWS_FEMALE_1.

Click Consumption to see the rate at which the pageviews_female query is consuming records.

The graph is at 100 percent, because all of the records were consumed when the pageviews_female stream started.
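The effect of auto.offset.reset can be modeled with a few lines of Python. This is a simplified sketch of the general Kafka consumer rule, not ksqlDB code: the setting only matters when the consumer group has no committed offset, and it treats the earliest available offset as 0, which is an assumption that does not hold once older records have been deleted by retention.

```python
def starting_offset(log_length, committed_offset=None, auto_offset_reset="latest"):
    """Pick where a consumer starts reading a partition holding log_length records."""
    if committed_offset is not None:
        return committed_offset  # an existing position always wins
    if auto_offset_reset == "earliest":
        return 0  # simplification: assumes no records were removed by retention
    return log_length  # "latest": only records that arrive from now on


def backlog(log_length, committed_offset=None, auto_offset_reset="latest"):
    """Number of already-written records the consumer will read."""
    return log_length - starting_offset(log_length, committed_offset, auto_offset_reset)


# A new query over a partition that already holds 100 records (assumed number).
print(backlog(100, auto_offset_reset="earliest"))
print(backlog(100, auto_offset_reset="latest"))
```

With Earliest, a newly created query reads the whole backlog, which matches the behavior described for pageviews_female. With the other setting, it would only see records produced after it started.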

View Streams and Tables

You can see all of your persistent queries, streams, and tables in a single, unified view.

In the cluster submenu, click ksqlDB to open the ksqlDB clusters page, and click the default application to open the ksqlDB Editor.

Click Editor and find the All available streams and tables pane on the right side of the page.

Click PAGEVIEWS_ENRICHED to open the stream. The schema for the stream is displayed, including nested data structures.

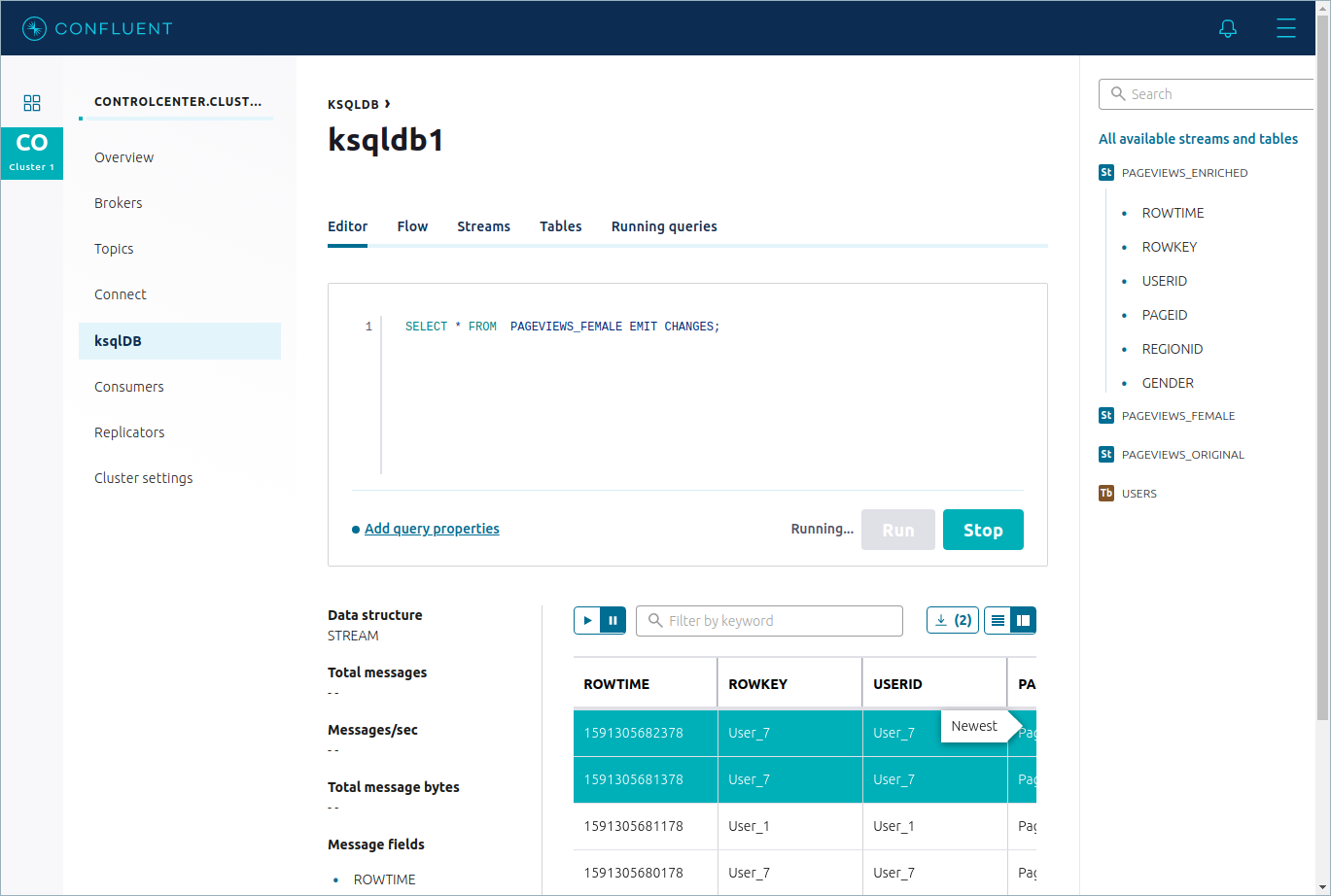

Download Selected Records

You can download records that you select in the query results window as a JSON file.

Copy the following code into the editing window and click Run.

SELECT * FROM PAGEVIEWS_FEMALE EMIT CHANGES;

In the query results window, select some records and click Download.

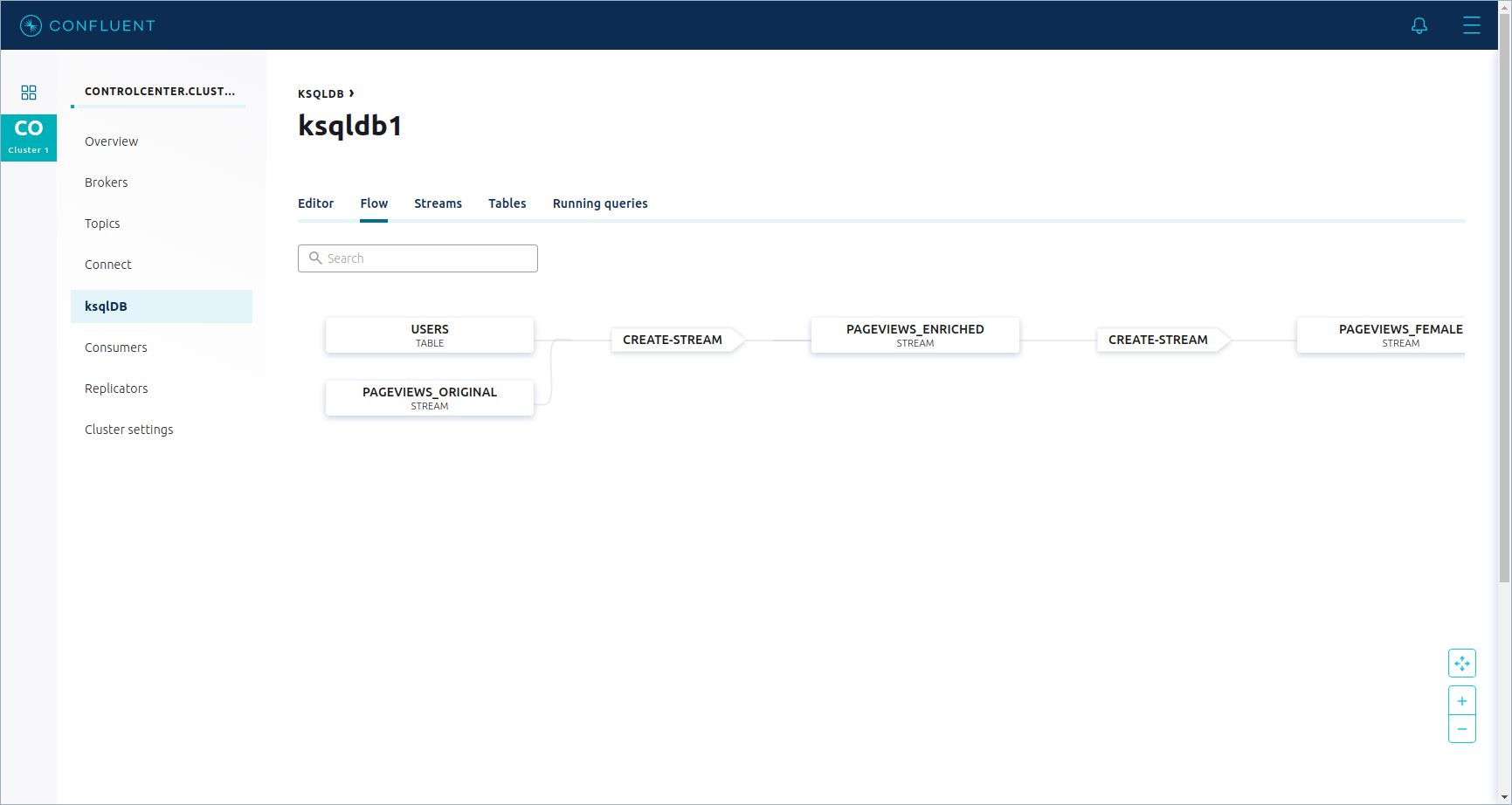

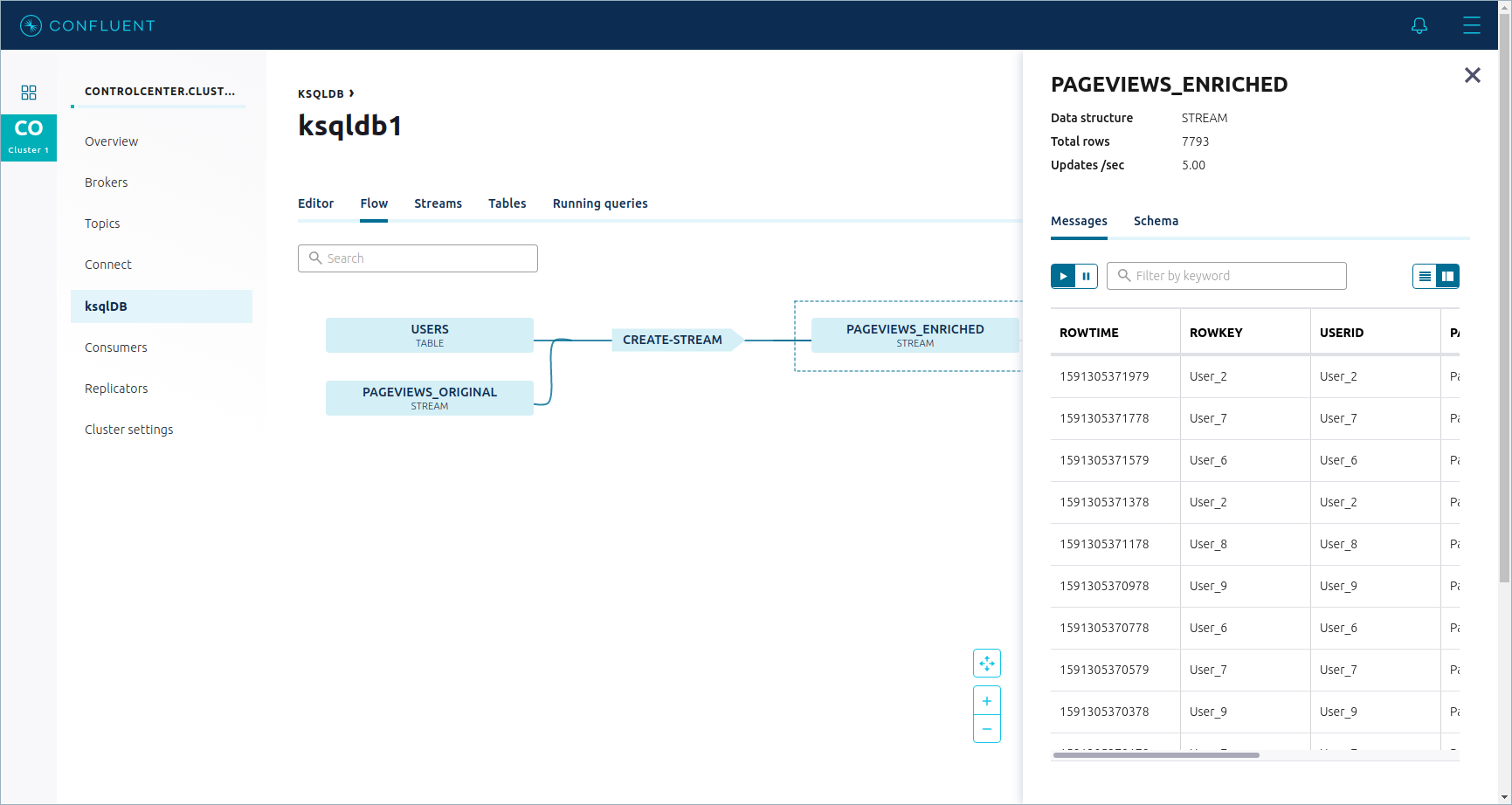

Flow View

Control Center enables you to see how events flow through your ksqlDB application.

In the ksqlDB page, click Flow.

Click the PAGEVIEWS_ENRICHED node in the graph to see details about the PAGEVIEWS_ENRICHED stream, including current messages and schema.

Click the other nodes in the graph to see details about the topology of your ksqlDB application.

Cleanup

Run shutdown and cleanup tasks.

- You can stop each of the running producers (sending data to the users and pageviews topics) by pressing Ctrl-C in their respective command windows.

- To stop Confluent Platform, type confluent local stop.

- If you would like to clear out existing data (topics, schemas, and messages) before starting again with another test, type confluent local destroy.