Migrate between Kafka Clusters using Confluent Private Cloud Gateway

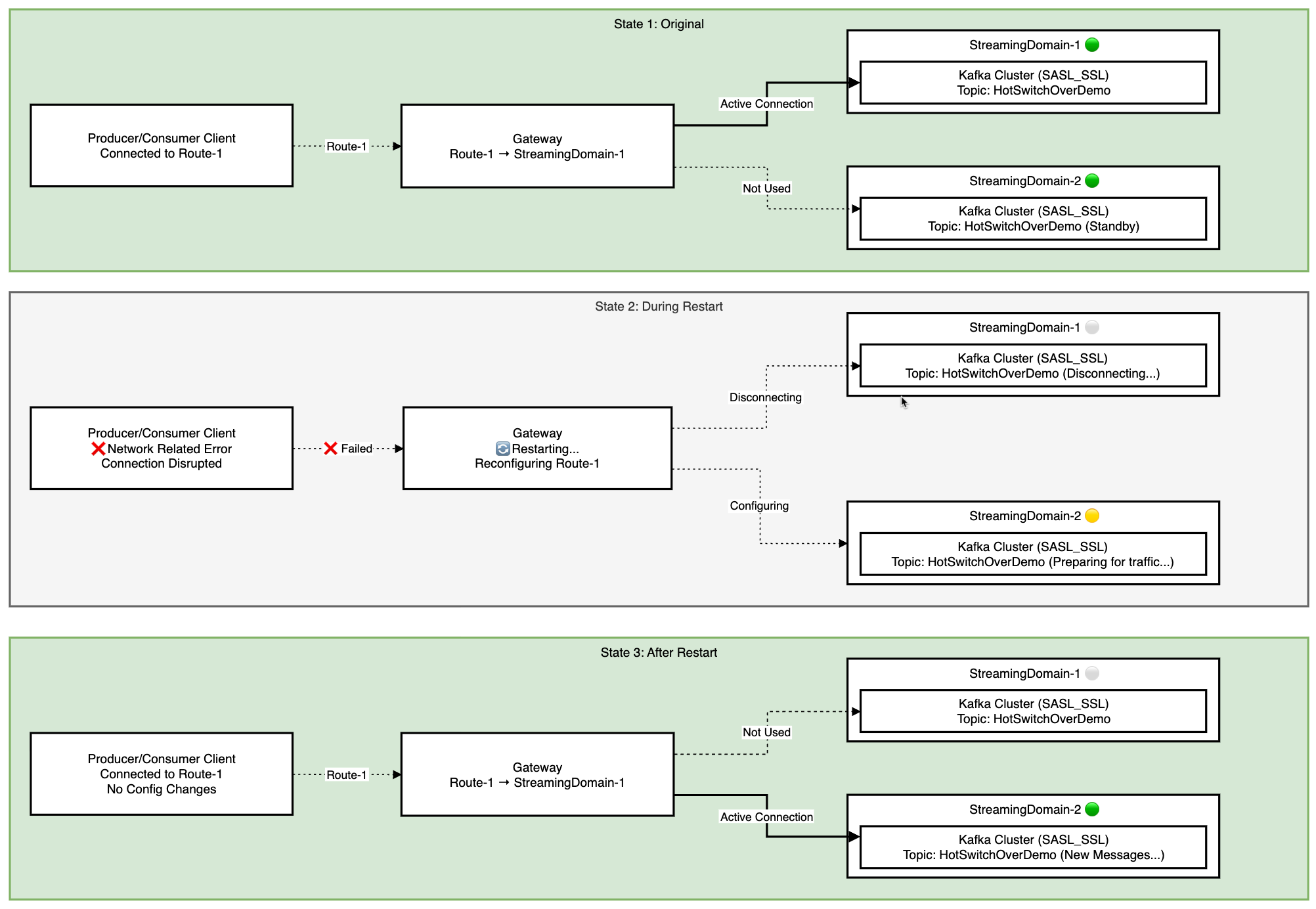

The Client Switchover feature of Confluent Private Cloud Gateway (Confluent Gateway) enables migrations from a source Kafka cluster to a destination Kafka cluster without requiring client-side changes. Confluent Gateway handles the routing and authentication mapping transparently, allowing producers and consumers to continue operating without any changes to their configurations.

Some use cases for client switchover are:

Enable disaster recovery by switching from an unhealthy cluster to a healthy cluster.

Simplify on-premises broker upgrades by enabling blue-green deployment strategies.

To switch over clients, change the switchover route to point to the destination Kafka cluster by updating the Streaming Domain and corresponding bootstrap server ID in the switchover route.

For a sample configuration, see the Switchover Example in the Gateway image GitHub repository.

Prerequisites and considerations

Review the requirements and potential implications of Client Switchover before performing the migration.

Data replication must be set up outside of Confluent Gateway based on your requirements using tools like Cluster Linking.

If ordering guarantees and data consistency are required, for example in Kafka Streams applications, do not use Client Switchover because it involves more than one Kafka cluster.

Client Switchover requires a restart of the Confluent Gateway service, which impacts:

- Consumer: When the Confluent Gateway restarts after a Client Switchover, it triggers consumer group rebalancing as connections are severed, especially if the restart exceeds session.timeout.ms, which has a default of 45 seconds. During rebalancing, consumers may reprocess messages if offsets weren’t committed before the restart. Applications should handle duplicate message processing or enable auto-commit with appropriate intervals:

  - Use isolation.level=read_committed to avoid reading aborted transactions.

  - Tune server properties (if required):

    - group.coordinator.rebalance.protocols: Set to eager, cooperative, or consumer.

    - group.coordinator.session.timeout.ms

- Producer: The primary risk during Confluent Gateway restart after a Client Switchover is message duplication when the broker acknowledges a message but the Confluent Gateway fails before relaying the acknowledgment to the producer. This causes producer retries, resulting in duplicate messages.

  Use the enable.idempotence=true Kafka configuration to prevent duplicates, or ensure your application can handle them.

- Transactions: Exactly Once Semantics (EOS) holds in Client Switchover. In-flight transactions commit if the outage is shorter than transaction.timeout.ms, and otherwise abort. On switchover, any open transaction on the source will abort and cannot be committed on the target. The producer application must reset the transaction state on failure and start a new transaction.
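The client-side settings named above, together with one way an application might tolerate duplicates, can be sketched as follows. This is an illustrative sketch, not part of Confluent Gateway: the property names are standard Kafka client settings, the bootstrap endpoint is the route endpoint used in the example later on this page, and the group ID, the commit interval, the `Deduplicator` class, and the idea of an application-level message ID are all assumptions made for the example.

```python
from collections import OrderedDict

# Client properties as plain dictionaries. The keys are standard Kafka client
# settings; the values for group.id and auto.commit.interval.ms are assumptions.
PRODUCER_CONFIG = {
    "bootstrap.servers": "host.docker.internal:19092",  # Gateway route endpoint
    "enable.idempotence": True,  # avoid duplicates caused by producer retries
}

CONSUMER_CONFIG = {
    "bootstrap.servers": "host.docker.internal:19092",
    "group.id": "example-group",          # hypothetical consumer group
    "isolation.level": "read_committed",  # skip records from aborted transactions
    "enable.auto.commit": True,
    "auto.commit.interval.ms": 5000,      # assumed interval; tune as needed
}


class Deduplicator:
    """Remember recently seen message IDs so reprocessed messages can be skipped.

    Assumes every message carries a unique application-level ID (hypothetical).
    """

    def __init__(self, capacity=10000):
        self._seen = OrderedDict()
        self._capacity = capacity

    def is_duplicate(self, message_id):
        if message_id in self._seen:
            # Refresh the entry so recently repeated IDs are evicted last.
            self._seen.move_to_end(message_id)
            return True
        self._seen[message_id] = None
        if len(self._seen) > self._capacity:
            # Evict the least recently seen ID to bound memory use.
            self._seen.popitem(last=False)
        return False


dedup = Deduplicator(capacity=2)
for message_id in ["m1", "m2", "m1"]:
    if dedup.is_duplicate(message_id):
        print("skipping duplicate", message_id)
    else:
        print("processing", message_id)
```

An in-memory cache like this only covers duplicates seen by a single consumer process within the cache window. Applications that need stronger guarantees would typically make the downstream write itself idempotent.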

Client Switchover between Kafka clusters

To perform Client Switchover:

Configure your Confluent Gateway with two Streaming Domains, one for each Kafka cluster.

For example:

    streamingDomains:
      - name: kafka1-domain
        type: kafka
        kafkaCluster:
          name: kafka-cluster-1
          bootstrapServers:
            - id: internal-plaintext-listener
              endpoint: "kafka-1:44444"
      - name: kafka2-domain
        type: kafka
        kafkaCluster:
          name: kafka-cluster-2
          bootstrapServers:
            - id: internal-plaintext-listener
              endpoint: "kafka-2:22222"

Reconfigure the Route to point to the destination Kafka cluster by updating the Streaming Domain and corresponding bootstrap server ID in the Route.

For example, the following configuration points the switchover-route to the kafka1-domain streaming domain, and the clients send and receive messages from the source Kafka cluster, kafka-cluster-1.

    streamingDomains:
      - name: kafka1-domain
        type: kafka
        kafkaCluster:
          name: kafka-cluster-1
          bootstrapServers:
            - id: internal-plaintext-listener
              endpoint: "kafka-1:44444"
      - name: kafka2-domain
        type: kafka
        kafkaCluster:
          name: kafka-cluster-2
          bootstrapServers:
            - id: internal-plaintext-listener
              endpoint: "kafka-2:22222"
    routes:
      - name: switchover-route
        endpoint: "host.docker.internal:19092"
        streamingDomain:
          name: kafka1-domain
          bootstrapServerId: internal-plaintext-listener

When you update the switchover-route to point to the kafka2-domain streaming domain, the clients will start sending and receiving new messages from the destination Kafka cluster, kafka-cluster-2.

    streamingDomains:
      - name: kafka1-domain
        type: kafka
        kafkaCluster:
          name: kafka-cluster-1
          bootstrapServers:
            - id: internal-plaintext-listener
              endpoint: "kafka-1:44444"
      - name: kafka2-domain
        type: kafka
        kafkaCluster:
          name: kafka-cluster-2
          bootstrapServers:
            - id: internal-plaintext-listener
              endpoint: "kafka-2:22222"
    routes:
      - name: switchover-route
        endpoint: "host.docker.internal:19092"
        streamingDomain:
          name: kafka2-domain
          bootstrapServerId: internal-plaintext-listener

Stop and restart the Confluent Gateway container.

When the Confluent Gateway container is restarted, the clients continue sending and receiving new messages from the destination Kafka cluster.

No changes are required on the producer or consumer side.
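The route update in the procedure above can be sketched as a small helper that validates the target before changing anything. This is a minimal sketch under stated assumptions: the configuration is held as plain Python data that mirrors the example YAML, and `switch_route` is a hypothetical helper, not a Confluent Gateway API. It does not write the configuration file or restart the Gateway container.

```python
import copy

# The example configuration from this page, expressed as plain Python data.
CONFIG = {
    "streamingDomains": [
        {
            "name": "kafka1-domain",
            "type": "kafka",
            "kafkaCluster": {
                "name": "kafka-cluster-1",
                "bootstrapServers": [
                    {"id": "internal-plaintext-listener", "endpoint": "kafka-1:44444"}
                ],
            },
        },
        {
            "name": "kafka2-domain",
            "type": "kafka",
            "kafkaCluster": {
                "name": "kafka-cluster-2",
                "bootstrapServers": [
                    {"id": "internal-plaintext-listener", "endpoint": "kafka-2:22222"}
                ],
            },
        },
    ],
    "routes": [
        {
            "name": "switchover-route",
            "endpoint": "host.docker.internal:19092",
            "streamingDomain": {
                "name": "kafka1-domain",
                "bootstrapServerId": "internal-plaintext-listener",
            },
        }
    ],
}


def switch_route(config, route_name, target_domain, bootstrap_server_id):
    """Return a copy of config with route_name pointing at target_domain.

    Raises ValueError if the domain, bootstrap server ID, or route is unknown,
    so a typo is caught before the Gateway is restarted.
    """
    domains = {d["name"]: d for d in config["streamingDomains"]}
    if target_domain not in domains:
        raise ValueError(f"unknown streaming domain: {target_domain}")

    servers = domains[target_domain]["kafkaCluster"]["bootstrapServers"]
    if bootstrap_server_id not in {s["id"] for s in servers}:
        raise ValueError(f"unknown bootstrap server ID: {bootstrap_server_id}")

    updated = copy.deepcopy(config)  # leave the caller's configuration untouched
    for route in updated["routes"]:
        if route["name"] == route_name:
            route["streamingDomain"] = {
                "name": target_domain,
                "bootstrapServerId": bootstrap_server_id,
            }
            return updated
    raise ValueError(f"unknown route: {route_name}")


switched = switch_route(
    CONFIG, "switchover-route", "kafka2-domain", "internal-plaintext-listener"
)
print(switched["routes"][0]["streamingDomain"]["name"])  # prints "kafka2-domain"
```

Returning a modified copy keeps the original configuration available, which makes it straightforward to switch back to the source cluster by calling the helper again with kafka1-domain.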