Important

You are viewing documentation for an older version of Confluent Platform.

Build Your Own Demos¶

This page provides resources for you to build your own Apache Kafka® demo or test environment using Confluent Cloud or Confluent Platform. Using these as a foundation, you can add any connectors or applications.

The examples bring up Kafka services with no preconfigured topics, connectors, data sources, or schemas. Once the services are running, you can provision whatever topics, connectors, and schemas you need.

Confluent Cloud¶

The first 20 users to sign up for Confluent Cloud and use promo code C50INTEG will receive an additional $50 of free usage.

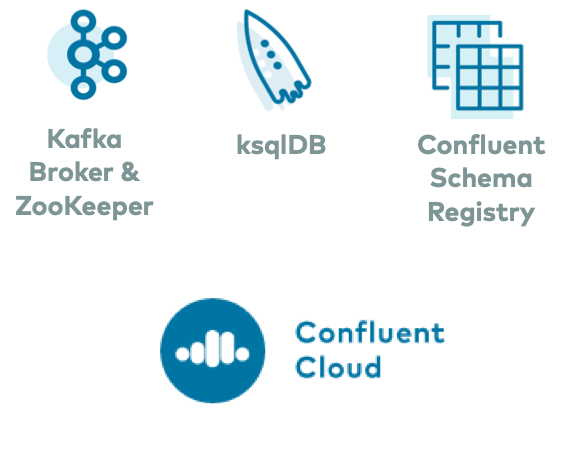

cp-all-in-one-cloud¶

Use cp-all-in-one-cloud to connect your self-managed services to Confluent Cloud. This Docker Compose file launches all services in Confluent Platform (except for the Kafka brokers), runs them in containers on your local host, and automatically configures them to connect to Confluent Cloud. You can optionally use it together with the ccloud-stack Utility for Confluent Cloud to quickly create new Confluent Cloud environments.

For an automated example of how to use cp-all-in-one-cloud, refer to the cp-all-in-one-cloud automated quickstart, which follows the Quick Start for Apache Kafka using Confluent Cloud.

Setup¶

By default, the example uses Schema Registry running in a local Docker container. If you prefer to use Confluent Cloud Schema Registry instead, you must first enable Confluent Cloud Schema Registry before running the example.

By default, the example uses ksqlDB running in a local Docker container. If you prefer to use Confluent Cloud ksqlDB instead, you must first enable Confluent Cloud ksqlDB before running the example.

Generate and source a file of environment variables that Docker uses to set the bootstrap servers and security configuration. This step requires you to first create a local configuration file with your Confluent Cloud connection information. (See Auto-Generating Configurations for Components to Confluent Cloud for more information on the script that generates this file.)
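After sourcing the generated file, you can confirm that the variables used by the validation commands on this page are actually set before going further. The following is a minimal bash sketch; the function name require_ccloud_env is hypothetical, and it checks only the four variables referenced on this page, although the generated file may define more.

```shell
# Check that the environment variables used by the validation commands on this
# page are set. The variable list is an assumption based on those commands.
require_ccloud_env() {
  local missing=0 var
  for var in SCHEMA_REGISTRY_URL SCHEMA_REGISTRY_BASIC_AUTH_USER_INFO \
             KSQLDB_ENDPOINT KSQLDB_BASIC_AUTH_USER_INFO; do
    # ${!var} is bash indirect expansion: the value of the variable named by $var.
    if [ -z "${!var:-}" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Run require_ccloud_env in the same shell where you sourced the file. It prints each missing variable name and returns a non-zero status if any of them are unset or empty.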

Validate your credentials to Confluent Cloud Schema Registry:

curl -u "$SCHEMA_REGISTRY_BASIC_AUTH_USER_INFO" "$SCHEMA_REGISTRY_URL/subjects"

Validate your credentials to Confluent Cloud ksqlDB:

curl -H "Content-Type: application/vnd.ksql.v1+json; charset=utf-8" -u "$KSQLDB_BASIC_AUTH_USER_INFO" "$KSQLDB_ENDPOINT/info"
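If you are scripting the setup, it can help to reduce the two curl checks above to their HTTP status codes. This bash sketch wraps the same requests in hypothetical helper functions (http_status and validate_ccloud_credentials). It treats 200 as success; a 401 generally indicates a wrong API key or secret, and 000 means curl could not connect at all.

```shell
# Print only the HTTP status code of a request; any curl options are passed through.
http_status() {
  # -s: no progress output, -o /dev/null: discard the body, -w: write the status code
  curl -s -o /dev/null -w '%{http_code}' "$@"
}

# Repeat the Schema Registry and ksqlDB checks and succeed only if both return 200.
validate_ccloud_credentials() {
  local sr ksql
  sr=$(http_status -u "$SCHEMA_REGISTRY_BASIC_AUTH_USER_INFO" "$SCHEMA_REGISTRY_URL/subjects")
  ksql=$(http_status -H "Content-Type: application/vnd.ksql.v1+json; charset=utf-8" \
    -u "$KSQLDB_BASIC_AUTH_USER_INFO" "$KSQLDB_ENDPOINT/info")
  echo "Schema Registry: HTTP $sr"
  echo "ksqlDB: HTTP $ksql"
  [ "$sr" = "200" ] && [ "$ksql" = "200" ]
}
```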

Bring up services¶

Make sure you have completed the steps in the Setup section above before proceeding.

Clone the confluentinc/cp-all-in-one GitHub repository.

git clone https://github.com/confluentinc/cp-all-in-one.git

Navigate to the cp-all-in-one-cloud directory.

cd cp-all-in-one-cloud

Check out the 5.5.15-post branch.

git checkout 5.5.15-post

To bring up all services locally, at once:

docker-compose up -d
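docker-compose up -d returns as soon as the containers are started, which can be well before the services inside them accept requests. A simple approach is to poll an HTTP endpoint until it responds. In this bash sketch, wait_for_http is a hypothetical helper, and the example port assumes the default mapping for Connect (8083); check docker-compose.yml for the ports actually exposed.

```shell
# Poll a URL until it answers with a non-error HTTP status, or give up.
# Arguments: URL, number of attempts (default 30), seconds between attempts (default 5).
wait_for_http() {
  local url=$1 attempts=${2:-30} delay=${3:-5} i
  for ((i = 1; i <= attempts; i++)); do
    # -f makes curl exit non-zero on HTTP 4xx/5xx responses as well as connection errors.
    if curl -sf -o /dev/null "$url"; then
      echo "ready: $url"
      return 0
    fi
    sleep "$delay"
  done
  echo "timed out waiting for $url" >&2
  return 1
}
```

For example, wait_for_http http://localhost:8083/connectors 60 5 waits up to about five minutes for the Connect REST API.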

To bring up just Schema Registry locally (if you are not using Confluent Cloud Schema Registry):

docker-compose up -d schema-registry

To bring up Connect locally: the docker-compose.yml file defines a container called connect that runs a custom Docker image, cnfldemos/cp-server-connect-datagen, which pre-bundles the kafka-connect-datagen connector. Start this Docker container:

docker-compose up -d connect
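Once the connect container is up, you configure connectors through the Kafka Connect REST API. This bash sketch submits a kafka-connect-datagen connector using the users quickstart. The helper names, connector name, topic, the CONNECT_URL override variable, and the http://localhost:8083 default address are all assumptions; with Confluent Cloud, the target topic may need to exist before the connector can write to it.

```shell
# Print a kafka-connect-datagen connector configuration as JSON.
# Arguments: topic name, quickstart schema (default "users").
datagen_config() {
  local topic=$1 quickstart=${2:-users}
  cat <<EOF
{
  "connector.class": "io.confluent.kafka.connect.datagen.DatagenConnector",
  "kafka.topic": "$topic",
  "quickstart": "$quickstart",
  "max.interval": "1000",
  "iterations": "10000000",
  "tasks.max": "1"
}
EOF
}

# Create or update a connector from a JSON config read on stdin.
# PUT /connectors/<name>/config creates the connector if it does not exist yet.
submit_connector() {
  local name=$1 connect_url=${CONNECT_URL:-http://localhost:8083}
  curl -s -X PUT -H "Content-Type: application/json" \
    --data @- "$connect_url/connectors/$name/config"
}
```

For example: datagen_config users | submit_connector datagen-users. The sketch uses PUT /connectors/<name>/config rather than POST /connectors so that rerunning it updates the existing connector instead of failing because the name is already taken.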

If you want to run Connect with any other connector, you must first build a custom Docker image that adds the desired connector to the base Kafka Connect Docker image (see Add Connectors or Software). Search Confluent Hub for the appropriate connector and set CONNECTOR_NAME, then build the custom Docker image using the provided Dockerfile:

docker build --build-arg CONNECTOR_NAME=${CONNECTOR_NAME} -t localbuild/connect_custom_example:latest -f ../Docker/Dockerfile .

Start this custom Docker container in one of two ways:

# Override the original Docker Compose file
docker-compose -f docker-compose.yml -f ../Docker/connect.overrides.yml up -d connect

# Run a new Docker Compose file
docker-compose -f docker-compose.connect.local.yml up -d
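A malformed CONNECTOR_NAME is only reported once the docker build reaches the step that installs the connector. This bash sketch checks the value up front, assuming the owner/name:version form used by confluent-hub install (for example, confluentinc/kafka-connect-jdbc:latest). The function name is hypothetical, and requiring an explicit version is a deliberate simplification.

```shell
# Succeed if the argument looks like owner/name:version,
# e.g. confluentinc/kafka-connect-jdbc:latest; otherwise print a hint and fail.
check_connector_name() {
  if [[ $1 =~ ^[A-Za-z0-9._-]+/[A-Za-z0-9._-]+:[A-Za-z0-9._-]+$ ]]; then
    return 0
  fi
  echo "CONNECTOR_NAME should look like owner/name:version, got: '$1'" >&2
  return 1
}
```

For example: check_connector_name "$CONNECTOR_NAME" && docker build --build-arg CONNECTOR_NAME=${CONNECTOR_NAME} -t localbuild/connect_custom_example:latest -f ../Docker/Dockerfile .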

To bring up Confluent Control Center locally:

docker-compose up -d control-center

To bring up ksqlDB locally (if you are not using Confluent Cloud ksqlDB):

docker-compose up -d ksqldb-server

To run the ksqlDB CLI locally against Confluent Cloud ksqlDB in a transient Docker container:

docker run -it --rm confluentinc/cp-ksqldb-cli:5.5.0 -u $(echo $KSQLDB_BASIC_AUTH_USER_INFO | awk -F: '{print $1}') -p $(echo $KSQLDB_BASIC_AUTH_USER_INFO | awk -F: '{print $2}') $KSQLDB_ENDPOINT

If you want to run a Docker container for the ksqlDB CLI from the Docker Compose file and connect to Confluent Cloud ksqlDB in a separate step:
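The docker run command above splits KSQLDB_BASIC_AUTH_USER_INFO, which holds API_KEY:API_SECRET, with two awk calls. Bash parameter expansion does the same without spawning extra processes, and it keeps everything after the first colon as the secret rather than only the second field. The helper names below are hypothetical.

```shell
# Split KSQLDB_BASIC_AUTH_USER_INFO ("API_KEY:API_SECRET") with parameter expansion.
# ${var%%:*} drops the longest ":..." suffix, leaving the key;
# ${var#*:} drops the shortest "...:" prefix, leaving the secret.
ksqldb_key() {
  printf '%s\n' "${KSQLDB_BASIC_AUTH_USER_INFO%%:*}"
}
ksqldb_secret() {
  printf '%s\n' "${KSQLDB_BASIC_AUTH_USER_INFO#*:}"
}
```

For example: docker run -it --rm confluentinc/cp-ksqldb-cli:5.5.0 -u "$(ksqldb_key)" -p "$(ksqldb_secret)" "$KSQLDB_ENDPOINT"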

docker-compose up -d ksqldb-cli

To bring up REST Proxy locally:

docker-compose up -d rest-proxy

ccloud-stack Utility¶

The ccloud-stack Utility for Confluent Cloud creates a stack of fully managed services in Confluent Cloud. Executed with a single command, it is a quick way to create fully managed components in Confluent Cloud, which you can then use for learning and building other demos. Do not use this in a production environment. The script uses the Confluent Cloud CLI to dynamically do the following in Confluent Cloud:

- Create a new environment.

- Create a new service account.

- Create a new Kafka cluster and associated credentials.

- Enable Confluent Cloud Schema Registry and associated credentials.

- Create a new ksqlDB app and associated credentials.

- Create wildcard ACLs for the service account.

- Generate a local configuration file with all above connection information, useful for other demos/automation.
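The generated configuration file uses Java properties syntax (key=value lines). If you want to pull individual values out of it in your own scripts, a small awk-based reader is enough. In this bash sketch, prop_value is a hypothetical helper; it splits on the first = only, because values such as sasl.jaas.config contain = themselves.

```shell
# Print the value of KEY from a Java-properties-style FILE.
# Only the first "=" separates key from value, so values containing "=" stay intact.
prop_value() {
  local file=$1 key=$2
  awk -v k="$key" 'index($0, k "=") == 1 { print substr($0, length(k) + 2); exit }' "$file"
}
```

For example, prop_value <config-file> bootstrap.servers prints the Kafka bootstrap endpoint, and prints nothing if the key is absent.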

For details on using ccloud-stack with Confluent Cloud, see ccloud-stack Utility for Confluent Cloud.

Generate Test Data with Datagen¶

Read the blog post Creating a Serverless Environment for Testing Your Apache Kafka Applications for a “Hello, World!” introduction to Confluent Cloud, plus different ways to generate more interesting test data for your Kafka topics.

On-Premises¶

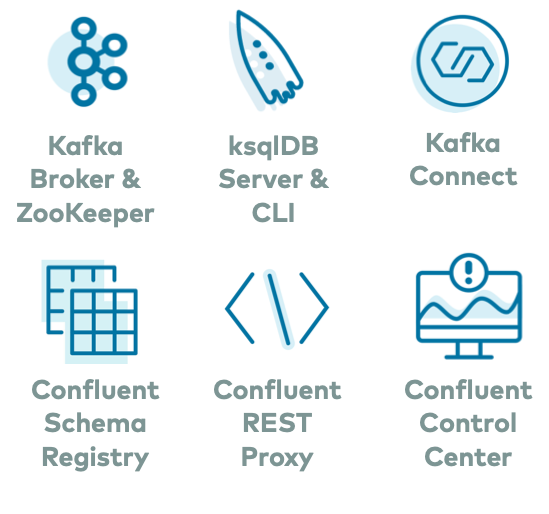

cp-all-in-one¶

Use cp-all-in-one to run the Confluent Platform stack on-premises. This Docker Compose file launches all services in Confluent Platform and runs them in containers on your local host.

For an automated example of how to use cp-all-in-one, refer to the cp-all-in-one automated quickstart, which follows the Quick Start for Apache Kafka using Confluent Platform (Docker).

Clone the confluentinc/cp-all-in-one GitHub repository.

git clone https://github.com/confluentinc/cp-all-in-one.git

Navigate to the cp-all-in-one directory.

cd cp-all-in-one

Check out the 5.5.15-post branch.

git checkout 5.5.15-post

To bring up all services:

docker-compose up -d
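Because the stack starts with no topics, one of the first things you will likely do is create one. This bash sketch runs kafka-topics inside the broker container, assuming the Compose service is named broker and the broker is reachable at localhost:9092 from inside that container, as in the cp-all-in-one Docker Compose file. The helper name and the COMPOSE override variable are hypothetical; the replication factor is 1 because the stack runs a single broker.

```shell
# Create a topic on the single cp-all-in-one broker.
# Arguments: topic name, partition count (default 1).
# COMPOSE is a hypothetical override for the Compose command (default: docker-compose).
create_topic() {
  local topic=$1 partitions=${2:-1} compose=${COMPOSE:-docker-compose}
  # $compose is left unquoted so an override may include extra arguments.
  $compose exec broker kafka-topics --create \
    --bootstrap-server localhost:9092 \
    --replication-factor 1 \
    --partitions "$partitions" \
    --topic "$topic"
}
```

For example: create_topic users 1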

cp-all-in-one-community¶

Use cp-all-in-one-community to run only the community services from Confluent Platform on-premises. This Docker Compose file launches all community services and runs them in containers on your local host.

For an automated example of how to use cp-all-in-one-community, refer to the cp-all-in-one-community automated quickstart, which follows the Quick Start for Apache Kafka using Confluent Platform Community Components (Docker).

Clone the confluentinc/cp-all-in-one GitHub repository.

git clone https://github.com/confluentinc/cp-all-in-one.git

Navigate to the cp-all-in-one-community directory.

cd cp-all-in-one-community

Check out the 5.5.15-post branch.

git checkout 5.5.15-post

To bring up all services:

docker-compose up -d

Generate Test Data with kafka-connect-datagen¶

Read the blog post Easy Ways to Generate Test Data in Kafka for a “Hello, World!” introduction to launching Confluent Platform, plus different ways to generate more interesting test data for your Kafka topics.