Configure Audit Logs using the Properties File

This topic describes how to configure audit logs using the server.properties file.

Configure audit logs

You can configure audit logs using the server.properties file as described in the following sections, or using the:

Before setting up audit logs on your system, be aware of the following default settings:

Enabled by default:

Topics: create and delete

ACLs: create and delete

Authorization requests related to RBAC

Every event in the MANAGEMENT category

Disabled by default:

Interbroker communication

Produce and consume-related events

Heartbeat and DESCRIBE events

Advanced audit log configuration

Most organizations have strict and specific security governance requirements and policies. In such cases, you can configure audit logs on a more granular level. Specifically, you can configure:

Which event categories you want to capture (including categories like produce, consume, and interbroker, which are disabled by default)

Multiple topics to capture logs of differing importance

Topic destination routes optimized for security and performance

Retention periods that serve the needs of your organization

Excluded principals, which ensures performance is not compromised by excessively high message volumes

The Kafka port over which to communicate with your audit log cluster

When enabling audit logging for produce and consume, be very selective about which events you want logged, and configure logging for only the most sensitive topics.

Note

It is very common for clients who don’t have READ or WRITE authorizations on a given topic to also not have DESCRIBE authorization. For details, see Produce and consume denials are not appearing in audit logs.

After enabling audit logs in Kafka server.properties, set the confluent.security.event.router.config to a JSON configuration similar to the one shown in the following example advanced audit log configuration:

{

"destinations": {

"bootstrap_servers": [

"audit.example.com:9092"

],

"topics": {

"confluent-audit-log-events_denied": {

"retention_ms": 2592000000

},

"confluent-audit-log-events_secure": {

"retention_ms": 7776000000

},

"confluent-audit-log-events": {

"retention_ms": 2592000000

}

}

},

"default_topics": {

"allowed": "confluent-audit-log-events",

"denied": "confluent-audit-log-events"

},

"excluded_principals": [

"User:Alice",

"User:service_account_id"

],

"routes": {

"crn://mds1.example.com/kafka=*/topic=secure-*": {

"produce": {

"allowed": "confluent-audit-log-events_secure",

"denied": ""

},

"consume": {

"allowed": "confluent-audit-log-events_secure",

"denied": "confluent-audit-log-events_denied"

}

}

}

}

Note that you can use Kafka Dynamic Configurations to update the confluent.security.event.router.config setting without restarting the cluster. Because the router configuration is a complex structure, you must create a .properties file with the desired value (exactly as you would in server.properties) and use kafka-configs to add the configuration:

bin/kafka-configs --bootstrap-server localhost:9092 --entity-type brokers --entity-default \
  --alter --add-config-file new.properties

Keep in mind that dynamic configurations take precedence over configurations in server.properties, so if you change the value in the server.properties file later and restart the cluster, the dynamic configuration will continue to be used until you delete the dynamic configuration using kafka-configs.
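As a rough illustration of preparing the file passed to --add-config-file, the following Python sketch serializes a router configuration onto a single line and writes it as one key=value entry. The helper name write_router_properties and the sample configuration are assumptions made for illustration; they are not part of Confluent Platform.

```python
import json
from pathlib import Path

ROUTER_KEY = "confluent.security.event.router.config"


def write_router_properties(router_config: dict, path: str) -> str:
    """Write the router config as one key=value line and return that line."""
    # Compact separators keep the JSON on a single line, so the file needs
    # no backslash line continuations.
    value = json.dumps(router_config, separators=(",", ":"))
    line = f"{ROUTER_KEY}={value}"
    Path(path).write_text(line + "\n", encoding="utf-8")
    return line


# Hypothetical minimal configuration, modeled on the examples in this topic.
example_config = {
    "destinations": {
        "bootstrap_servers": ["audit.example.com:9092"],
        "topics": {"confluent-audit-log-events": {"retention_ms": 7776000000}},
    },
    "default_topics": {
        "allowed": "confluent-audit-log-events",
        "denied": "confluent-audit-log-events",
    },
}
```

Calling write_router_properties(example_config, "new.properties") would produce a file suitable for the kafka-configs command shown above, assuming the configuration itself is valid for your cluster.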

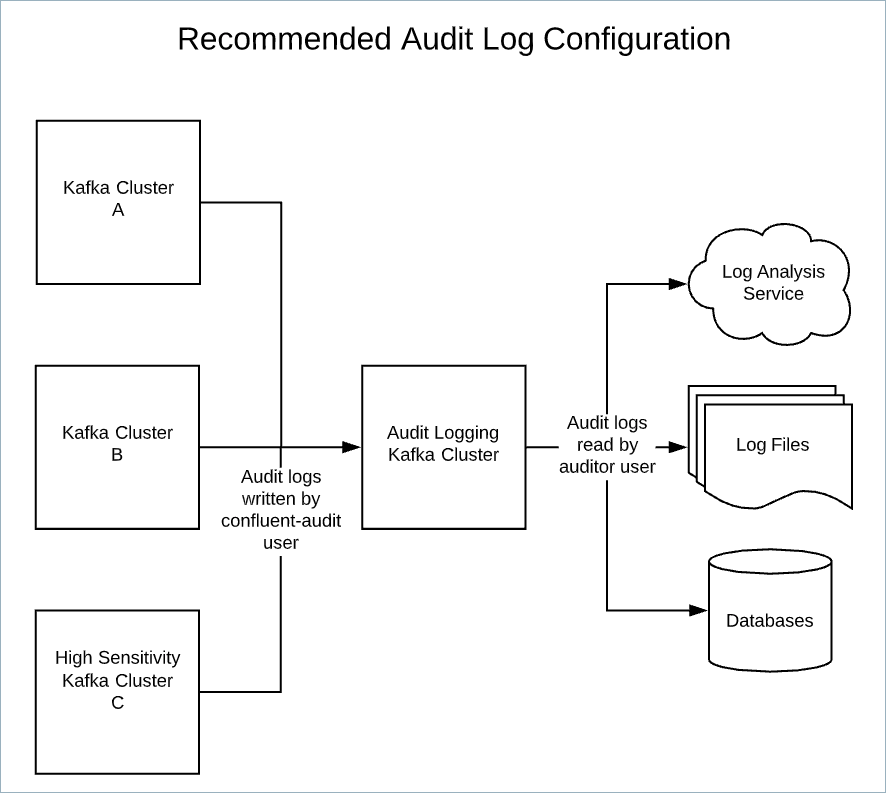

Configure audit log destination

The destinations option identifies the audit log cluster by its bootstrap servers. Use this setting to identify the communication channel between your audit log cluster and Kafka. You can use the bootstrap_servers setting to deliver audit log messages to a specific cluster set aside for the sole purpose of retaining them. This ensures that no one can access or tamper with your organization’s audit logs, and enables you to selectively conduct more in-depth auditing of sensitive data, while keeping log volumes down for less sensitive data.

If you deliver audit logs to another cluster, you must configure the connection to that cluster. Configure this connection as you would any producer writing to the cluster, using the prefix confluent.security.event.logger.exporter.kafka for the producer configuration keys, including the appropriate authentication information.

For example, if you have a Kafka cluster listening on port 9092 of the host audit.example.com, and that cluster accepts SCRAM-SHA-256 authentication and has a principal named confluent-audit that is allowed to connect and produce to the audit log topics, the configuration would look like the following:

confluent.security.event.router.config=\
  { \
    "destinations": { \
      "bootstrap_servers": ["audit.example.com:9092"], \
      "topics": { \
        "confluent-audit-log-events": { \
          "retention_ms": 7776000000 \
        } \
      } \
    }, \
    "default_topics": { \
      "allowed": "confluent-audit-log-events", \
      "denied": "confluent-audit-log-events" \
    } \
  }
confluent.security.event.logger.exporter.kafka.sasl.mechanism=SCRAM-SHA-256
confluent.security.event.logger.exporter.kafka.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \
  username="confluent-audit" \
  password="secretP@ssword123";

Bootstrap servers may be provided in either the router configuration JSON or a producer configuration property; if they appear in both places, the router configuration takes precedence.

Create audit log topics

Most organizations create topics for specific logging purposes. You can send different kinds of audit log messages to multiple topics on a single Kafka cluster and specify a retention period for each topic. For example, if you send produce log messages and management log messages to the same topic, you cannot set the retention for the produce messages to one day while setting the retention for the management messages to 30 days. To achieve those retentions, you must send the messages to different topics.

Important

The topic retention period you specify in the audit log configuration is enforced only if the audit log feature creates a topic that doesn’t already exist. Do not attempt to edit this value in the configuration for a topic that already exists; any retention updates will not apply. See Configure the replication factor and retention period for information on how to use the CLI to revise the retention period for an existing audit log topic.

You must use the prefix confluent-audit-log-events for all topics. The following topics are specified in the example:

confluent-audit-log-events_denied

All log messages for denied consume requests to topics with names starting with the secure- prefix are sent to this topic.

confluent-audit-log-events_secure

All log messages for allowed produce and consume requests to topics that use the secure- prefix are sent to this topic.

confluent-audit-log-events

All log messages for allowed and denied Management and Authorization categories are sent to this topic.

You can include topics that have not yet been created in the topic configuration. If you do so, they will be created automatically if or when the audit log principal has the necessary permissions, and will use the retention period specified in the configuration file. However, you cannot modify the retention period of existing topics merely by editing this configuration file. For details about changing the retention period for existing topics, see Configure the replication factor and retention period.
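The naming rule above can be checked before you deploy a configuration. The following Python sketch is one possible approach: it collects every topic named in a router configuration and reports names that lack the confluent-audit-log-events prefix. It also flags topics that are routed to but not declared under destinations.topics; treating that as a problem is an assumption of this sketch, not a documented requirement. The function name find_topic_problems is hypothetical.

```python
AUDIT_TOPIC_PREFIX = "confluent-audit-log-events"


def find_topic_problems(router_config: dict) -> list[str]:
    """Return human-readable problems found in the topic names a config uses."""
    declared = set(router_config.get("destinations", {}).get("topics", {}))

    # Gather every topic that default_topics or a route points at.
    referenced = set(router_config.get("default_topics", {}).values())
    for categories in router_config.get("routes", {}).values():
        for outcomes in categories.values():
            referenced.update(outcomes.values())
    referenced.discard("")  # an empty string means "route nowhere"

    problems = []
    for topic in sorted(declared | referenced):
        if not topic.startswith(AUDIT_TOPIC_PREFIX):
            problems.append(f"{topic}: missing required prefix")
    # Assumption of this sketch: routed-to topics should also be declared.
    for topic in sorted(referenced - declared):
        problems.append(f"{topic}: routed to but not declared in destinations.topics")
    return problems
```

An empty list means the sketch found nothing to report; it does not verify that the topics exist on the destination cluster or that the audit log principal can write to them.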

Route topics

You can flexibly route audit log messages to different topics based on subject, category, and whether authorizations were allowed or denied. You can also specify routes for specific subjects, or use prefixes (for example, _secure-* for sensitive authorization events) and wildcards to cover larger groups of resources.

The routing example above shows a specific CRN that contains wildcard patterns (crn://mds1.example.com/kafka=*/topic=secure-*) for Kafka (kafka=*) that is using a prefix created for highly sensitive topics (topic=secure-*). All allowed produce authorization event logs matching this CRN pattern will be routed to the topic confluent-audit-log-events_secure. All denied produce authorization event logs matching this CRN pattern will be routed nowhere, as specified by the empty string (""). All allowed consume authorization event logs matching this CRN pattern will be routed to the topic confluent-audit-log-events_secure, and all denied consume authorization event logs matching this CRN pattern will be routed to the topic confluent-audit-log-events_denied.
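To make the routing behavior described above easier to reason about, the following Python sketch models it in a simplified way. It is not the router's actual implementation: it assumes shell-style glob matching (fnmatch) for CRN patterns, takes the first matching route, and assumes that the categories listed earlier as disabled by default are logged only when a route names them. The function resolve_topic and the constant names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Optional

# Categories described earlier as disabled by default. In this sketch they
# are logged only when a matching route names them explicitly (assumption).
OPT_IN_CATEGORIES = {"produce", "consume", "interbroker", "heartbeat", "describe"}

EXAMPLE_CONFIG = {
    "default_topics": {
        "allowed": "confluent-audit-log-events",
        "denied": "confluent-audit-log-events",
    },
    "routes": {
        "crn://mds1.example.com/kafka=*/topic=secure-*": {
            "produce": {"allowed": "confluent-audit-log-events_secure", "denied": ""},
            "consume": {
                "allowed": "confluent-audit-log-events_secure",
                "denied": "confluent-audit-log-events_denied",
            },
        }
    },
}


def resolve_topic(config: dict, crn: str, category: str, granted: bool) -> Optional[str]:
    """Return the destination topic for an event, or None if it is not logged."""
    outcome = "allowed" if granted else "denied"
    for pattern, categories in config.get("routes", {}).items():
        if fnmatchcase(crn, pattern) and category in categories:
            # An empty string in a route means "route nowhere".
            return categories[category].get(outcome) or None
    if category in OPT_IN_CATEGORIES:
        return None
    return config.get("default_topics", {}).get(outcome) or None
```

Under these assumptions, a denied produce event for crn://mds1.example.com/kafka=abc/topic=secure-payments resolves to None, while a denied consume event for the same CRN resolves to confluent-audit-log-events_denied.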

Secure audit logs

Consider the following configuration recommendations for securing your audit logs:

For improved security, send audit logs to a different cluster. Limit permissions on the audit log cluster to ensure the audit log is trustworthy.

Create an audit log principal with limited permissions (for example, a user identified as confluent-audit). The default is to use the broker principal, which has expansive permissions.

To set up permissions for the audit log principal using an RBAC role binding:

bin/confluent iam rbac role-binding create \
  --principal User:confluent-audit \
  --role DeveloperWrite \
  --resource Topic:confluent-audit-log-events \
  --prefix \
  --kafka-cluster-id <cluster id>

Alternatively, set the permissions using an ACL:

bin/kafka-acls --bootstrap-server localhost:9092 \
  --add \
  --allow-principal User:confluent-audit \
  --allow-host '*' \
  --producer \
  --topic confluent-audit-log-events \
  --resource-pattern-type prefixed

Audit log configuration examples

This section includes additional audit log configuration examples for specific use cases.

Advanced topic routing use cases

The following configuration example shows how to route billing and payroll data in clusters. In this use case, all of the billing topics use the prefix billing- and all of the payroll topics use the prefix payroll-. These two departments have different auditors who require detailed audit log information. To ensure that audit log messages relating to the specific topics are routed only to the correct auditor, use:

{

"destinations": {

"bootstrap_servers": [

"audit.example.com:9092"

],

"topics": {

"confluent-audit-log-events": {

"retention_ms": 2592000000

},

"confluent-audit-log-events_payroll": {

"retention_ms": 2592000000

},

"confluent-audit-log-events_billing": {

"retention_ms": 2592000000

}

}

},

"default_topics": {

"allowed": "confluent-audit-log-events",

"denied": "confluent-audit-log-events"

},

"routes": {

"crn://mds1.example.com/kafka=*/topic=billing-*": {

"produce": {

"allowed": "confluent-audit-log-events_billing",

"denied": "confluent-audit-log-events_billing"

},

"consume": {

"allowed": "confluent-audit-log-events_billing",

"denied": "confluent-audit-log-events_billing"

},

"management": {

"allowed": "confluent-audit-log-events_billing",

"denied": "confluent-audit-log-events_billing"

}

},

"crn://mds1.example.com/kafka=*/topic=payroll-*": {

"produce": {

"allowed": "confluent-audit-log-events_payroll",

"denied": "confluent-audit-log-events_payroll"

},

"consume": {

"allowed": "confluent-audit-log-events_payroll",

"denied": "confluent-audit-log-events_payroll"

},

"management": {

"allowed": "confluent-audit-log-events_payroll",

"denied": "confluent-audit-log-events_payroll"

}

}

}

}

You cannot create a router configuration to capture messages for a specific event type only. For example, you cannot configure routing to capture all kafka.JoinGroup messages. However, if you know which category the event type appears in, you can create a router configuration at the category level, and then filter the results using a tool like ksqlDB, Spark, Elasticsearch, or Splunk.

To configure routing of all audit log messages for kafka.JoinGroup events, which fall into the consume category:

"routes": {

"crn:///kafka=*/group=*": {

"consume": {

"allowed": "confluent-audit-log-events_group_consume",

"denied": "confluent-audit-log-events_group_consume"

}

}

}

Note

crn:///kafka=*/group=* is used here because kafka.JoinGroup is for a group, not a topic.

This example shows a configuration where users are frequently making random updates in the test cluster test-cluster-1. To configure topic routing so that you don’t see or receive any logs from this test cluster, but still receive logs from other clusters:

{

"destinations": {

"bootstrap_servers": [

"audit.example.com:9092"

],

"topics": {

"confluent-audit-log-events": {

"retention_ms": 2592000000

}

}

},

"default_topics": {

"allowed": "confluent-audit-log-events",

"denied": "confluent-audit-log-events"

},

"routes": {

"crn://mds1.example.com/kafka=test-cluster-1": {

"management": {

"allowed": "",

"denied": ""

},

"authorize": {

"allowed": "",

"denied": ""

}

}

}

}

Note

Alternatively, you could specify confluent.security.event.logger.enable=false for this test cluster.

This configuration example is for a test automation cluster that creates and deletes topics using names like test-1234. You do not want to create any audit log messages for these topics, but still wish to generate audit log messages from other topics:

{

"destinations": {

"bootstrap_servers": [

"audit.example.com:9092"

],

"topics": {

"confluent-audit-log-events": {

"retention_ms": 2592000000

}

}

},

"default_topics": {

"allowed": "confluent-audit-log-events",

"denied": "confluent-audit-log-events"

},

"routes": {

"crn://mds1.example.com/kafka=*/topic=test-*": {

"management": {

"allowed": "",

"denied": ""

},

"authorize": {

"allowed": "",

"denied": ""

}

}

}

}

In cases where you have frequent malicious attacks, you can create a specific topic for attackers. In this example that topic name is honeypot. In this configuration, you set up audit logging exclusively for honeypot to see if you can find a usage pattern. While you might keep most logs for a day, in this case you are keeping logs with suspected hackers for one year:

{

"destinations": {

"bootstrap_servers": [

"audit.example.com:9092"

],

"topics": {

"confluent-audit-log-events_users": {

"retention_ms": 86400000

},

"confluent-audit-log-events_hackers": {

"retention_ms": 31622400000

}

}

},

"default_topics": {

"allowed": "confluent-audit-log-events_users",

"denied": "confluent-audit-log-events_users"

},

"routes": {

"crn://mds1.example.com/kafka=*/topic=honeypot": {

"produce": {

"allowed": "confluent-audit-log-events_hackers",

"denied": "confluent-audit-log-events_hackers"

},

"consume": {

"allowed": "confluent-audit-log-events_hackers",

"denied": "confluent-audit-log-events_hackers"

},

"management": {

"allowed": "confluent-audit-log-events_hackers",

"denied": "confluent-audit-log-events_hackers"

}

}

}

}

Partition audit logs

In cases where you want predictable ordering for messages on audit log topics, you can configure messages to go to a topic with a single partition:

bin/kafka-topics --bootstrap-server localhost:9093 \
  --command-config config/client.properties --create \
  --topic confluent-audit-log-events-ordered --partitions 1

Note

If message volume is very high (particularly if produce or consume are enabled), this configuration may not be sustainable. In this case, you should direct audit log messages to one or more topics with additional partitions. Be aware that in such cases, consumers reading those messages may not receive them in the order in which they were sent.

Suppress audit logs for internal topics

If you are considering audit logging for produce and consume, be sure to carefully consider the implications in terms of the number of audit log messages this may generate. If you can identify the topics or topic prefixes for which you require this level of detail, you can maintain a reasonable signal-to-noise ratio by specifying them and not auditing others. If you must enable audit logging for produce and consume, and are using a route similar to the following:

"crn:///kafka=*/topic=*": {

"produce": {

"allowed": "confluent-audit-log-events",

"denied": "confluent-audit-log-events"

},

"consume": {

"allowed": "confluent-audit-log-events",

"denied": "confluent-audit-log-events"

}

}

Be aware that Kafka and Confluent Platform use internal topics for a variety of purposes that you may not need to capture. Kafka internal topics are prefixed with a _, and Confluent Platform internal topics are prefixed with _confluent-. To exclude these prefixes, use the following configuration:

"crn:///kafka=*/topic=_*": {

"consume": {

"allowed": "",

"denied": ""

},

"produce": {

"allowed": "",

"denied": ""

},

"management": {

"allowed": "",

"denied": ""

}

}

Optionally, you can use:

"crn:///kafka=*/topic=_confluent-*": {

"consume": {

"allowed": "",

"denied": ""

},

"produce": {

"allowed": "",

"denied": ""

},

"management": {

"allowed": "",

"denied": ""

}

}

If your produce or consume audit logs are using topic=*, and are sending the audit logs to the same cluster (which we do not recommend), be sure to disable audit logging of the audit log topics themselves. Otherwise, your broker logs will return a large volume of warnings about feedback loops.

"crn:///kafka=*/topic=confluent-audit-log-events*": {

"consume": {

"allowed": "",

"denied": ""

},

"produce": {

"allowed": "",

"denied": ""

},

"management": {

"allowed": "",

"denied": ""

}

}
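Because the suppression entries above all share the same shape, they can be generated rather than written by hand. The following Python sketch shows one way to do so; the function name suppression_routes is hypothetical, and the output still has to be merged into the routes section of your own router configuration.

```python
import json

# The three categories suppressed in the examples above.
CATEGORIES = ("consume", "produce", "management")


def suppression_routes(topic_patterns: list[str]) -> dict:
    """Build route entries that send every listed category nowhere."""
    routes = {}
    for pattern in topic_patterns:
        crn = f"crn:///kafka=*/topic={pattern}"
        # An empty string for both outcomes disables logging for the category.
        routes[crn] = {c: {"allowed": "", "denied": ""} for c in CATEGORIES}
    return routes


routes = suppression_routes(["_*", "confluent-audit-log-events*"])
print(json.dumps(routes, indent=2))
```

Generating the entries from a single list of patterns keeps the internal-topic and audit-topic exclusions consistent when you add or remove a category.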

Configure the replication factor and retention period

The following example shows how to use the CLI to create a topic that will be logged using a specific replication factor and retention period:

bin/kafka-topics --bootstrap-server localhost:9092 \
  --create --topic confluent-audit-log-events \
  --replication-factor 3 \
  --config retention.ms=7776000000

In cases where the Kafka broker creates the audit log topic automatically, by default the replication factor used will be controlled by the cluster setting default.replication.factor.

If you need the replication factor for audit log topics to be different than the default, you can use the confluent.security.event.logger.exporter.kafka.topic.replicas option to specify the replication factor:

confluent.security.event.logger.exporter.kafka.topic.replicas=2

For details about automatic configuration of replication factors in Kafka brokers, refer to Configure replication factors.

If you create a topic manually with a retention.ms that conflicts with the retention specified in confluent.security.event.router.config, the manually created setting is used. The default retention period is 90 days (7776000000 ms).

Note

The retention period specified in the audit log configuration is only enforced if the audit log feature automatically creates a topic that doesn’t already exist. Editing this value in the audit log configuration for a topic that already exists will not change the retention settings for that topic.

The following example shows how to use the CLI to revise the retention period of an existing topic:

bin/kafka-configs --bootstrap-server localhost:9092 \
  --alter --topic confluent-audit-log-events \
  --add-config retention.ms=12096000000000
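Retention values are expressed in milliseconds, which are easy to mistype. As a small worked example, the following Python sketch converts between days and milliseconds so that you can check a value before using it in retention.ms or retention_ms. The function names are hypothetical.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000  # 86,400,000 ms in one day


def retention_ms(days: int) -> int:
    """Convert a retention period in whole days to milliseconds."""
    return days * MS_PER_DAY


def retention_days(ms: int) -> float:
    """Convert a retention period in milliseconds back to days."""
    return ms / MS_PER_DAY


print(retention_ms(30))  # 2592000000
print(retention_ms(90))  # 7776000000
```

Running retention_days on a value from an existing configuration is a quick way to confirm that it matches the period you intended.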

Audit log configuration reference

Confluent Platform audit logging supports the Kafka Producer configurations used for creating producers. You must include the prefix confluent.security.event.logger.exporter.kafka. when using any of the producer configuration options for audit logging.

confluent.security.event.logger.authentication.enable

Enables authentication event audit logging.

Type: Boolean

Default: false

Importance: high

confluent.security.event.logger.enable

Specifies whether or not the event logger is enabled.

Type: Boolean

Default: true

Importance: high

confluent.security.event.logger.exporter.kafka.topic.create

Creates the event log topic if it does not already exist.

Type: Boolean

Default: true

Importance: low

confluent.security.event.logger.exporter.kafka.topic.partitions

Specifies the number of partitions in the event log topic. Use this configuration option only when creating a topic (confluent.security.event.logger.exporter.kafka.topic.create=true) that does not already exist. If you attempt to implement this configuration after the topic already exists, no changes will occur.

Type: int

Default: 12

Importance: low

confluent.security.event.logger.exporter.kafka.topic.replicas

Specifies the replication factor for audit log topics. Use this configuration option only when creating a topic (confluent.security.event.logger.exporter.kafka.topic.create=true) that does not already exist. If you attempt to implement this configuration after the topic already exists, no changes will occur.

Type: int

Default: 3

Importance: low

confluent.security.event.logger.exporter.kafka.topic.retention.bytes

Specifies the retention bytes for the event log topic. Use this configuration option only when creating a topic (confluent.security.event.logger.exporter.kafka.topic.create=true) that does not already exist. If you attempt to implement this configuration after the topic already exists, no changes will occur.

Type: long

Default: -1 (unlimited)

Importance: low

confluent.security.event.logger.exporter.kafka.topic.retention.ms

Specifies the retention time for the event log topic. Use this configuration option only when creating a topic (confluent.security.event.logger.exporter.kafka.topic.create=true) that does not already exist. If you attempt to implement this configuration after the topic already exists, no changes will occur.

Type: long

Default: 2592000000 ms (30 days)

Importance: low

confluent.security.event.logger.exporter.kafka.topic.roll.ms

Specifies the log rolling time for the event log topic. This option corresponds to the segment.ms topic configuration property, which is used when creating an event log topic. Use this configuration option only when creating a topic (confluent.security.event.logger.exporter.kafka.topic.create=true) that does not already exist. If you attempt to implement this configuration after the topic already exists, no changes will occur.

Type: long

Default: 14400000 ms (4 hours)

Importance: low

confluent.security.event.router.cache.entries

Specifies the number of resource entries that the router cache should support. If audit logs are enabled for Produce or Consume for some topics, specify a value greater than the number of such topics. Otherwise, the volume of log messages should be small enough that caching will not substantially affect performance.

Type: int

Default: 10000

Importance: low

confluent.security.event.router.config

Identifies the JSON configuration used to route events to topics.

Type: string

Default: “”

Importance: low

Disabling audit logs

To disable audit logging in Kafka clusters, set the following in server.properties:

confluent.security.event.logger.enable=false

Note that this disables all the structured audit logs, including for MANAGEMENT events. Authorization logs will still be written to log4j in Kafka format. For details, refer to Kafka Logging.

Configure audit logs in Docker

To configure Confluent Docker containers, pass properties to them using environment variables where the Docker container is run (typically in docker-compose.yml or in a Kubernetes configuration). For details about running the Confluent Kafka configuration in Docker, refer to Kafka configuration.

To configure audit logging in Docker containers, determine which server.properties options you want to set in your audit logging configuration, and then modify them from lowercase dotted to uppercase with underscore characters, and prefix with KAFKA_. The only difference between configuring audit logging in Confluent Platform and Docker is the naming convention used. For example, the following fragment from docker-compose.yml shows how to configure confluent.security.event.router.config in a Docker container:

version: '2'
services:
  broker:
    image: confluentinc/cp-server:5.4.x-latest
    ...
    environment:
      KAFKA_AUTHORIZER_CLASS_NAME: io.confluent.kafka.security.authorizer.ConfluentServerAuthorizer
      KAFKA_CONFLUENT_SECURITY_EVENT_ROUTER_CONFIG: "{\"routes\":{\"crn:///kafka=*/group=*\":{\"consume\":{\"allowed\":\"confluent-audit-log-events\",\"denied\":\"confluent-audit-log-events\"}},\"crn:///kafka=*/topic=*\":{\"produce\":{\"allowed\":\"confluent-audit-log-events\",\"denied\":\"confluent-audit-log-events\"},\"consume\":{\"allowed\":\"confluent-audit-log-events\",\"denied\":\"confluent-audit-log-events\"}}},\"destinations\":{\"topics\":{\"confluent-audit-log-events\":{\"retention_ms\":7776000000}}},\"default_topics\":{\"allowed\":\"confluent-audit-log-events\",\"denied\":\"confluent-audit-log-events\"},\"excluded_principals\":[\"User:kafka\",\"User:ANONYMOUS\"]}"
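The naming convention described above can be expressed in a few lines of Python. This sketch covers only the rule stated in this section (uppercase, dots replaced with underscores, KAFKA_ prefix); it does not attempt to handle property names that themselves contain underscores or hyphens. The function names are hypothetical.

```python
import json


def to_docker_env_name(property_name: str) -> str:
    """Map a dotted server.properties key to its KAFKA_ environment variable."""
    return "KAFKA_" + property_name.upper().replace(".", "_")


def to_docker_env_value(router_config: dict) -> str:
    """Serialize a router config compactly for use as the variable's value."""
    return json.dumps(router_config, separators=(",", ":"))


print(to_docker_env_name("confluent.security.event.router.config"))
# KAFKA_CONFLUENT_SECURITY_EVENT_ROUTER_CONFIG
```

When you paste the serialized value into docker-compose.yml as a double-quoted string, the inner double quotes must still be escaped, as in the fragment above.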

Troubleshoot audit logging

If the audit logging mechanism tries to write to the Kafka topic and doesn’t succeed for any reason, it writes the JSON audit log message to log4j instead. You can specify where this message goes by configuring io.confluent.security.audit.log.fallback in Kafka’s broker log4j.properties in ce-kafka/config/.

Here is an example of the relevant log4j.properties content:

log4j.appender.auditLogAppender=org.apache.log4j.DailyRollingFileAppender

log4j.appender.auditLogAppender.DatePattern='.'yyyy-MM-dd-HH

log4j.appender.auditLogAppender.File=${kafka.logs.dir}/metadata-service.log

log4j.appender.auditLogAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.auditLogAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

# Fallback logger for audit logging. Used when the Kafka topics are initializing.

log4j.logger.io.confluent.security.audit.log.fallback=INFO, auditLogAppender

log4j.additivity.io.confluent.security.audit.log.fallback=false

If you find that audit log messages are not being created or routed to topics as you have configured them, check to ensure that the:

Audit log principal has been granted the required permissions

Principal can connect to the target cluster

Principal can create and produce to all of the topics

You may need to temporarily increase the log level: log4j.logger.io.confluent.security.audit=DEBUG.

View audit logs on the fly

During initial setup or troubleshooting, you can quickly and iteratively examine recent audit log entries using simple command line tools. This can aid in audit log troubleshooting, and also when troubleshooting role bindings for RBAC.

First pipe your audit log topics into a local file. Grep works faster on your local file system.

If you have the kafka-console-consumer installed locally and can directly consume from the audit log destination Kafka cluster, your command should look similar to the following:

./kafka-console-consumer --bootstrap-server auditlog.example.com:9092 --consumer-config ~/auditlog-consumer.properties --whitelist '^confluent-audit-log-events.*' > /tmp/streaming.audit.log

If you don’t have direct access and must instead connect using a “jump box” (a machine or server on a network that you use to access and manage devices in a separate security zone), use a command similar to the following:

ssh -tt -i ~/.ssh/theuser.key theuser@jumpbox './kafka-console-consumer --bootstrap-server auditlog.example.com:9092 --consumer-config ~/auditlog-consumer.properties --whitelist '"'"'^confluent-audit-log-events.*'"'"' ' > /tmp/streaming.audit.log

Regardless of which method you use, at this point you can open another terminal and locally run tail -f /tmp/streaming.audit.log to view audit log messages on the fly. After you’ve gotten the audit logs, you can use grep and jq (or another utility) to examine them. For example:

tail -f /tmp/streaming.audit.log | grep 'connect' | jq -c '[.time, .data.authenticationInfo.principal, .data.authorizationInfo.operation, .data.resourceName]'
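If jq is not available, a short script can do similar filtering. The following Python sketch reads audit log lines, keeps those containing a keyword, and extracts the same four fields as the jq expression above. It assumes each line is one JSON object; the function name summarize is hypothetical.

```python
import json
from typing import Iterable, Iterator


def summarize(lines: Iterable[str], keyword: str) -> Iterator[list]:
    """Yield [time, principal, operation, resourceName] for matching lines."""
    for line in lines:
        if keyword not in line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip partial or non-JSON lines
        if not isinstance(event, dict):
            continue
        data = event.get("data") or {}
        yield [
            event.get("time"),
            (data.get("authenticationInfo") or {}).get("principal"),
            (data.get("authorizationInfo") or {}).get("operation"),
            data.get("resourceName"),
        ]
```

For example, you could open /tmp/streaming.audit.log and pass the file object to summarize(f, "connect"), printing each result as it is produced. Missing fields appear as None rather than causing an error.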

Produce and consume denials are not appearing in audit logs

When a client goes to produce to or consume from a topic, it must first get the metadata for the topic to determine what partitions are available. This is accomplished using a Kafka API metadata operation. It is very common for clients who don’t have READ or WRITE authorizations on a given topic to also not have DESCRIBE authorization. In such cases, the metadata operation fails and the Kafka API produce or consume operation is never even attempted, and therefore doesn’t appear in the audit logs.

If you want to suppress allowed describes because they are very noisy, but capture denials because they may indicate a misconfiguration or unauthorized access attempt, add routing instructions to your configuration, similar to the following:

"crn://mds1.example.com/kafka=*/topic=secure-*": {

"produce": {

"allowed": "confluent-audit-log-events_secure",

"denied": "confluent-audit-log-events_denied"

},

"consume": {

"allowed": "confluent-audit-log-events_secure",

"denied": "confluent-audit-log-events_denied"

},

"describe": {

"allowed": "",

"denied": "confluent-audit-log-events_denied"

}

}