Manage Schemas in Confluent Platform

Use the Schema Registry feature in Control Center (Legacy) to manage Confluent Platform topic schemas.

You can:

create, edit, and view schemas

compare schema versions

download schemas

The Schema Registry performs validations and compatibility checks on schemas.

Tip

Review the step-by-step tutorial for using Schema Registry.

For comprehensive information on Schema Registry, see the Schema Registry Documentation.

The Schema Registry feature in Control Center (Legacy) is enabled by default. Disabling the feature disables both viewing and editing of schemas.

Create a topic schema in Control Center (Legacy)

Create key and value schemas. Value schemas are typically created more frequently than key schemas.

Best practices:

Provide default values for fields to facilitate backward compatibility, if pertinent to your schema.

Document at least the more obscure fields for human-readability of a schema.

Create a topic value schema

Select a cluster.

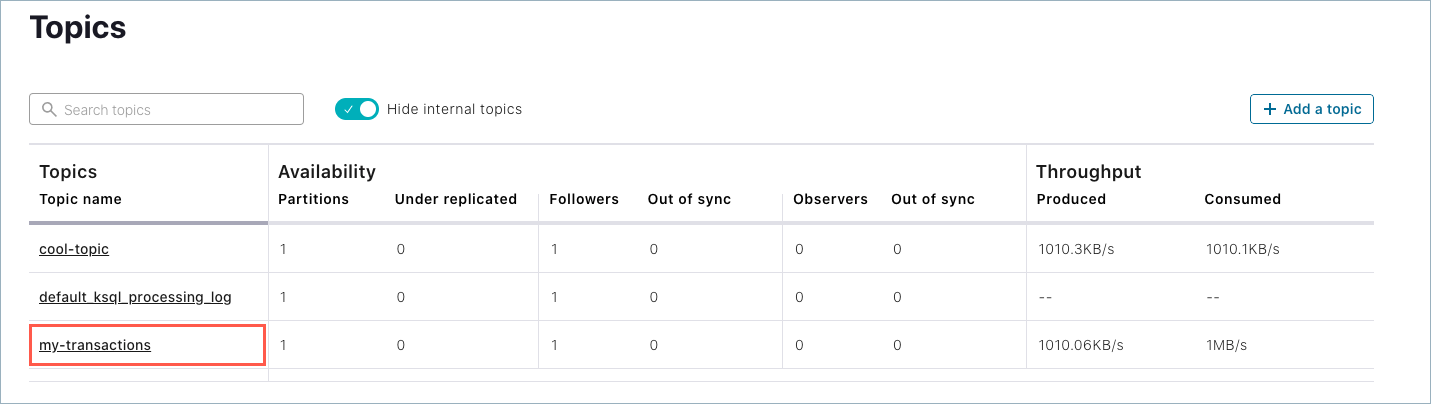

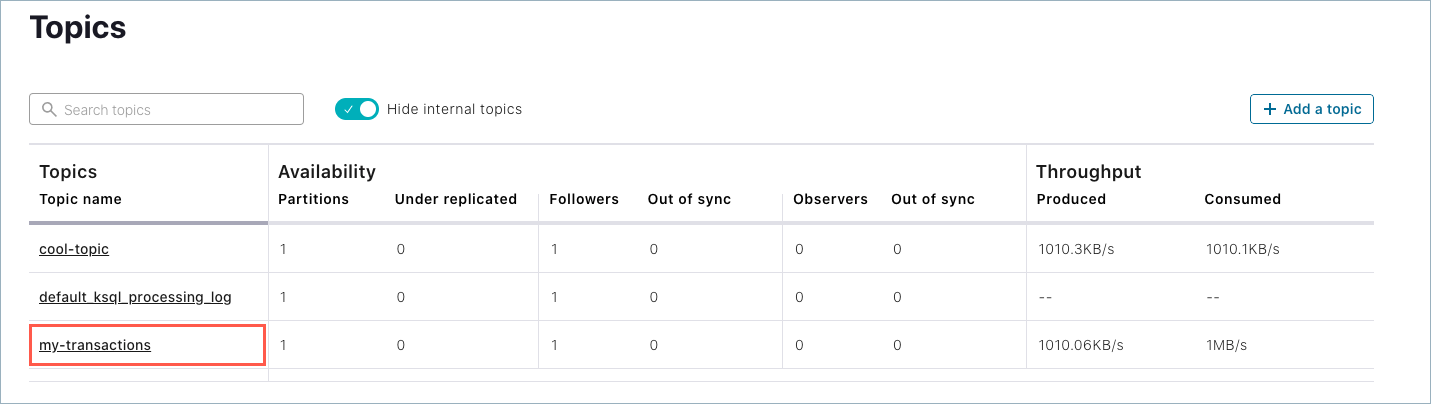

Click Topics on the menu. The Topics page appears (see Manage Topics Using Control Center (Legacy) for Confluent Platform).

Select a topic.

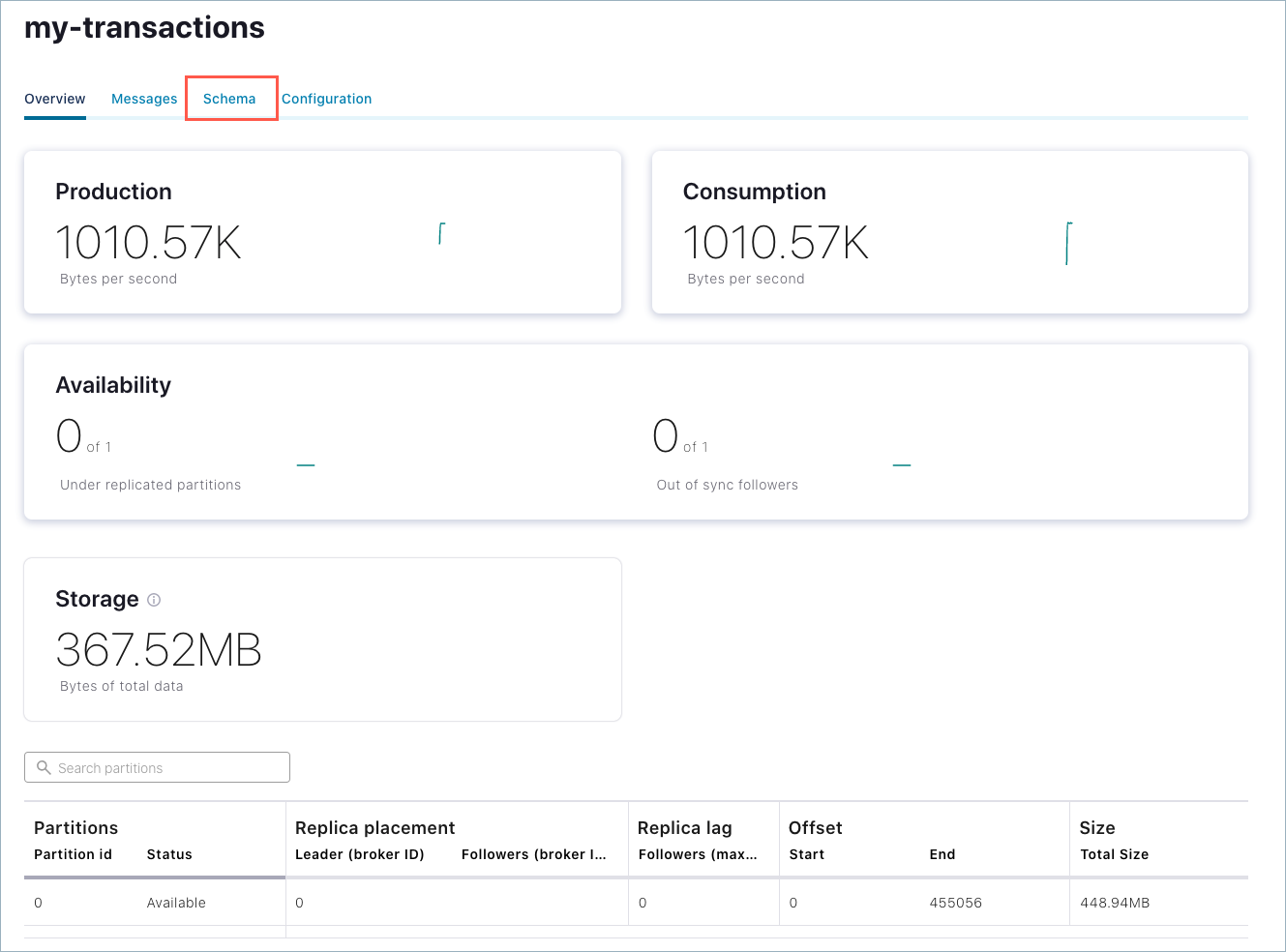

The topic overview page is displayed.

Click the Schema tab.

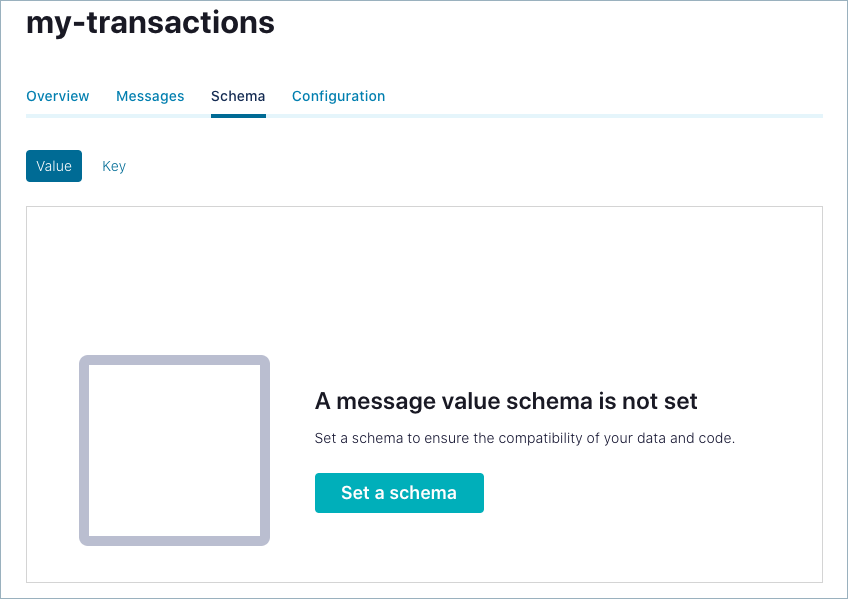

You are prompted to set a message value schema.

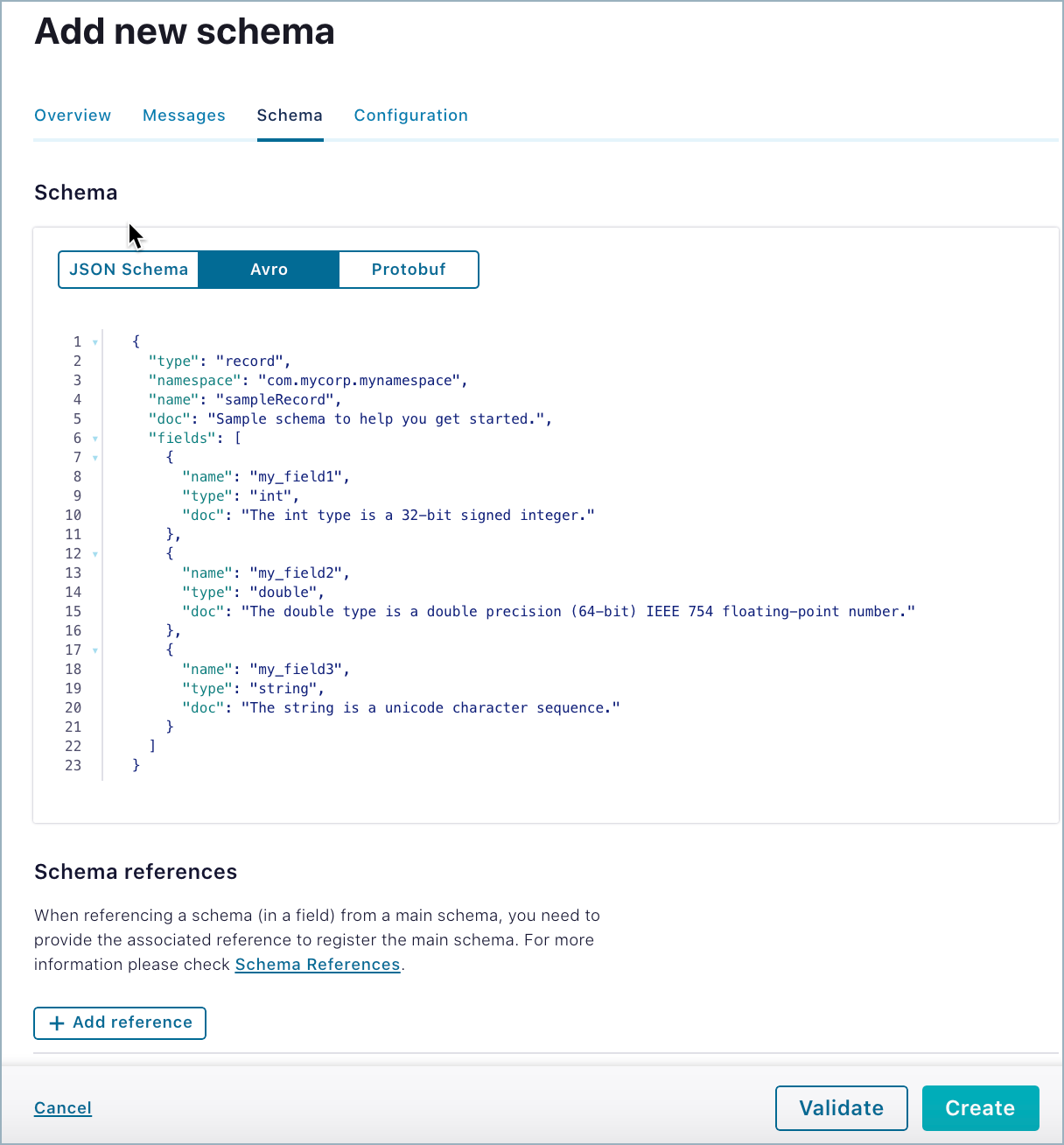

Click Set a schema. The Schema editor appears pre-populated with the basic structure of an Avro schema to use as a starting point, if desired.

Select a schema format type:

Avro

JSON

Protobuf

Choose Avro if you want to try out the code examples provided in the next steps.

Tip

To learn more about each of the schema types, see Supported formats.

Enter the schema in the editor:

name: Enter a name for the schema if you do not want to accept the default, which is determined by the subject name strategy. The default is schema_type_topic_name. Required.

type: Either record, enum, union, array, map, or fixed. (The type record is specified at the schema’s top level and can include multiple fields of different data types.) Required.

namespace: Fully qualified name to prevent schema naming conflicts. String that qualifies the schema name. Optional but recommended.

fields: JSON array listing one or more fields for a record. Required.

Each field can have the following attributes:

name: Name of the field. Required.

type: Data type for the field. Required.

doc: Field metadata. Optional but recommended.

default: Default value for a field. Optional but recommended.

order: Sorting order for a field. Valid values are ascending, descending, or ignore. Default: ascending. Optional.

aliases: Alternative names for a field. Optional.
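The required attributes listed above can be checked mechanically before you paste a schema into the editor. The following Python sketch uses a hypothetical helper, missing_attributes (not part of any Confluent tool), that covers only the record attributes and per-field attributes described here; it does not replace the editor's Validate option, which applies the full Avro rules.

```python
import json

# Hypothetical helper: covers only the attributes described above,
# not the full Avro specification.
REQUIRED_RECORD_ATTRS = ("name", "type", "fields")
REQUIRED_FIELD_ATTRS = ("name", "type")
VALID_ORDERS = {"ascending", "descending", "ignore"}


def missing_attributes(schema_text: str) -> list[str]:
    """Return problems found in a record schema (an empty list if none)."""
    schema = json.loads(schema_text)
    problems = [f"record is missing '{a}'" for a in REQUIRED_RECORD_ATTRS if a not in schema]
    for index, field in enumerate(schema.get("fields", [])):
        label = field.get("name", f"#{index}")
        problems += [f"field {label} is missing '{a}'" for a in REQUIRED_FIELD_ATTRS if a not in field]
        # order is optional, but when present it must be one of the valid values
        if "order" in field and field["order"] not in VALID_ORDERS:
            problems.append(f"field {label} has invalid order '{field['order']}'")
    return problems


payment = '{"type": "record", "namespace": "my.examples", "name": "Payment", "fields": [{"name": "id", "type": "string"}, {"name": "amount", "type": "double"}]}'
print(missing_attributes(payment))  # []
print(missing_attributes('{"type": "record", "fields": [{"name": "id"}]}'))
```

The second call reports both the missing record name and the missing field type, which the editor would also reject.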

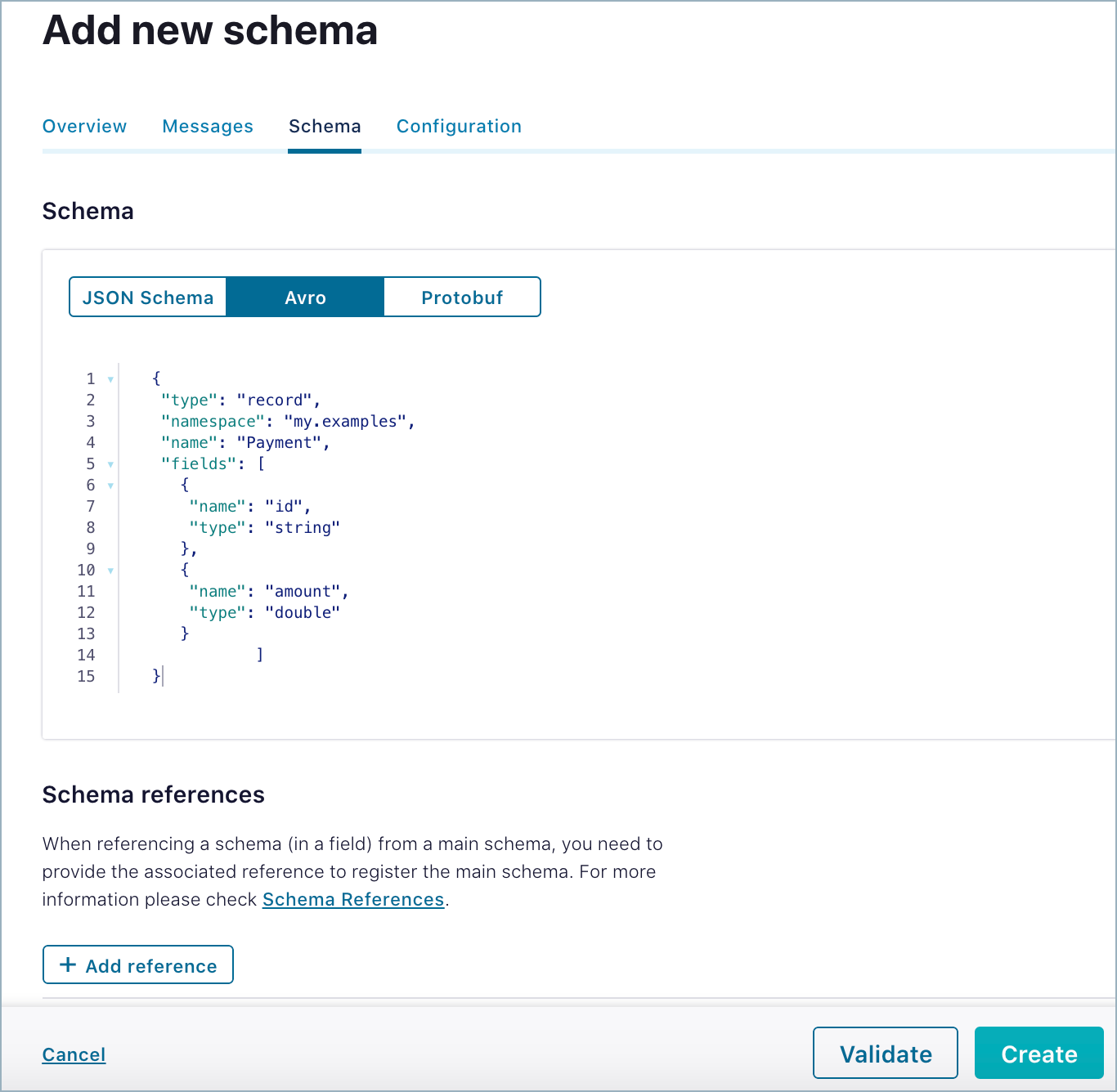

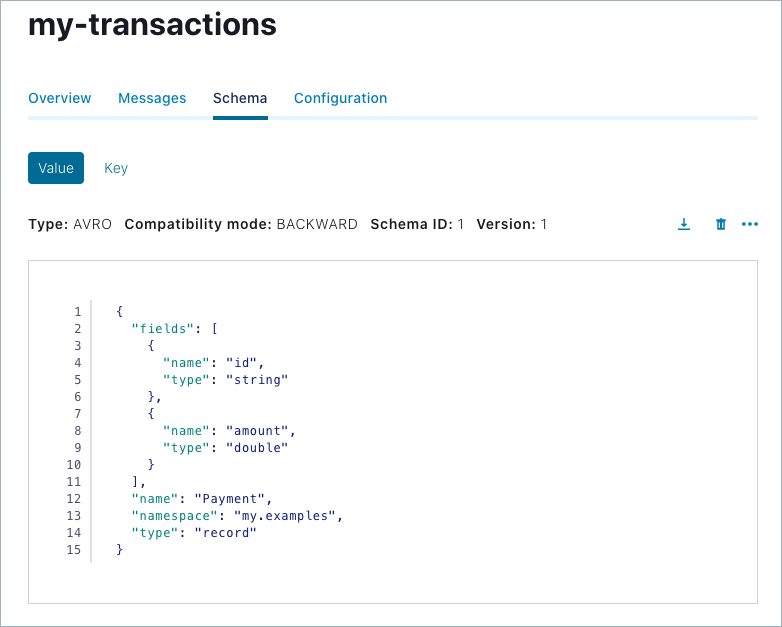

Copy and paste the following example schema.

{ "type": "record", "namespace": "my.examples", "name": "Payment", "fields": [ { "name": "id", "type": "string" }, { "name": "amount", "type": "double" } ] }

In edit mode, you have options to:

Validate the schema for syntax and structure before you create it.

Add schema references with a guided wizard.

Click Create.

If the entered schema is valid, the Schema updated message is briefly displayed in the banner area.

If the entered schema is not valid, an Input schema is an invalid Avro schema error is displayed in the banner area.

If applicable, repeat the procedure for the topic key schema.

Working with schema references

You can add a reference to another schema, using the wizard to help locate available schemas and versions.

The Reference name you provide must match the target schema, based on the guidelines for the schema format you are using:

In JSON Schema, the name is the value of the $ref field of the referenced schema.

In Avro, the name is the value of the type field of the referenced schema.

In Protobuf, the name is the value of the import statement for the referenced schema.

First, locate the schema you want to reference, and get the reference name for it.

Add a schema reference to the current schema in the editor

Click Add reference.

Provide a Reference name per the rules described above.

Select the schema from the Subject list.

Select the Version of the schema you want to use.

Click Validate to check whether the reference is valid.

Click Save to save the reference.

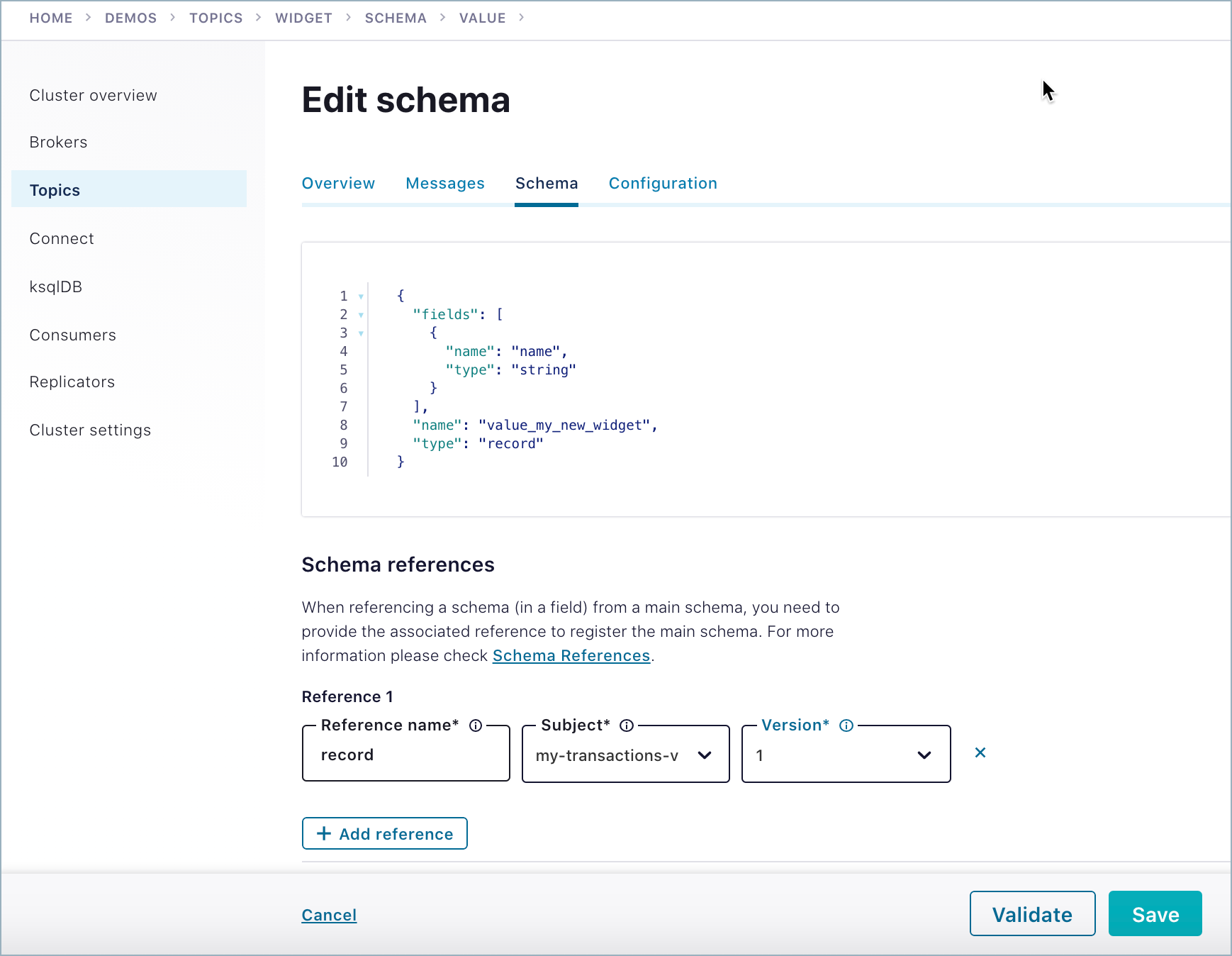

For example, to reference the schema for the my-transactions topic (my-transactions-value) from the widget schema, you can configure a reference to type, record as shown.
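A reference pairs the reference name with a subject and a version. As a sketch, assuming you registered the referencing schema through the Schema Registry REST API (POST /subjects/{subject}/versions) instead of the wizard, the request body could be built as follows. The widget schema contents, the type name my.examples.Payment, and version 1 are illustrative assumptions.

```python
import json

# Illustrative sketch: the widget schema and referenced version are assumptions.


def build_registration_body(schema: dict, references: list[dict], schema_type: str = "AVRO") -> str:
    """Serialize a schema and its references into a POST /subjects/{subject}/versions body."""
    return json.dumps({
        "schemaType": schema_type,
        # The schema is sent as a string, so it is JSON-encoded twice.
        "schema": json.dumps(schema),
        "references": references,
    })


widget_schema = {
    "type": "record",
    "namespace": "my.examples",
    "name": "Widget",
    "fields": [
        # In Avro, the reference name is the fully qualified type used here.
        {"name": "payment", "type": "my.examples.Payment"},
    ],
}

payment_reference = {
    "name": "my.examples.Payment",       # must match the type name used above
    "subject": "my-transactions-value",  # subject of the referenced schema
    "version": 1,                        # assumed version of the referenced schema
}

body = build_registration_body(widget_schema, [payment_reference])
print(body)
```

The wizard asks for the same three values (Reference name, Subject, and Version) and fills them in for you.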

To learn more, see Schema references in the schema formats developer documentation.

View, edit, or delete schema references for a topic

Existing schema references show up on editable versions of the schema where they are configured.

Navigate to a topic's schema; for example, the widget-value schema associated with the widget topic in the previous example.

Click into the editor as if to edit the schema.

If this schema references other schemas, the references are displayed in the Schema references list below the editor.

You can also add references to this schema, or modify or delete existing references, from this view.

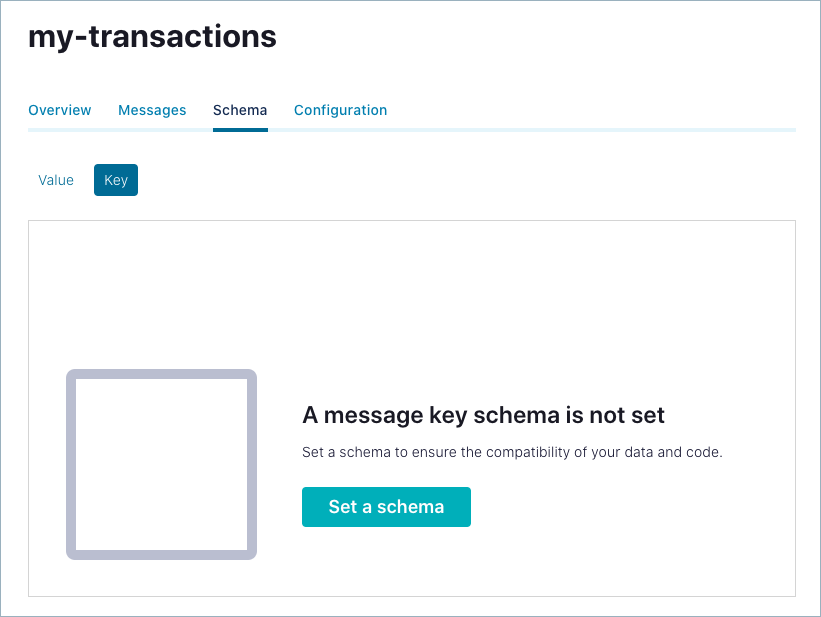

Create a topic key schema

Click the Key option. You are prompted to set a message key schema.

Click Set a schema.

Choose Avro format and/or delete the sample formatting and simply paste in a string UUID.

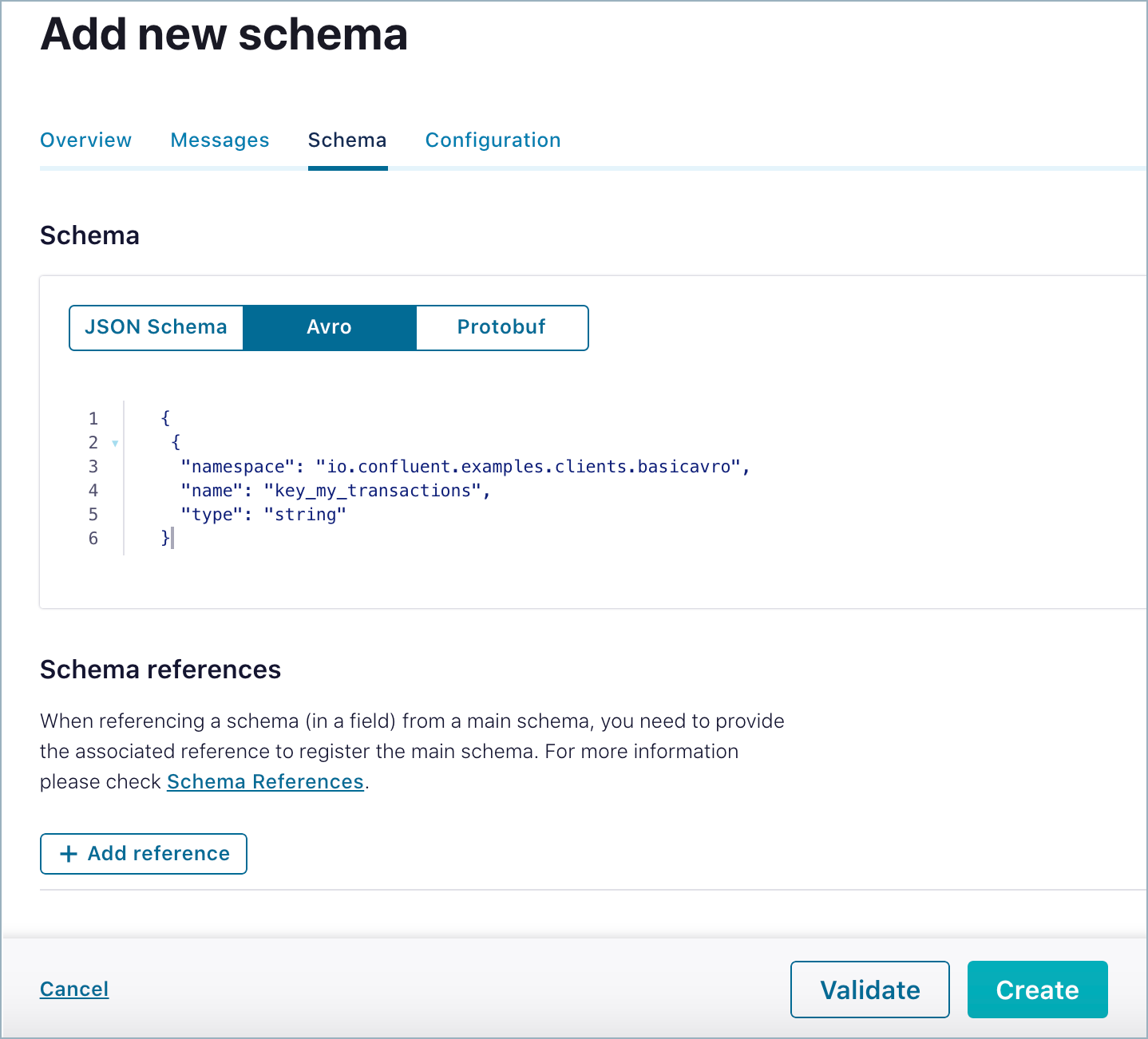

Enter the schema into the editor and click Save.

Copy and paste the following example schema, and save it.

{ "namespace": "io.confluent.examples.clients.basicavro", "name": "key_my_transactions", "type": "string" }

In edit mode, you have options to:

Validate the schema for syntax and structure before you create it.

Add schema references with a guided wizard.

Best Practices and Pitfalls for Key Values

Kafka messages are key-value pairs. Message keys and message values can be serialized independently. For example, the value may be using an Avro record, while the key may be a primitive (string, integer, and so forth). Typically message keys, if used, are primitives. How you set the key is up to you and the requirements of your implementation.

As a best practice, keep key schemas as simple as possible. Use either a simple primitive data type, such as a string UUID or a long ID, or an Avro record that does not use maps or arrays as fields. Do not use Protobuf messages or JSON objects as keys. Avro does not guarantee deterministic serialization for maps or arrays, and the Protobuf and JSON Schema formats do not guarantee deterministic serialization for any object. Using these formats for keys can break topic partitioning, because the same logical key may serialize to different bytes and therefore be assigned to a different partition. If you do decide to use a complex format for a key schema, set auto.register.schemas=false to prevent the registration of new valid and compatible schemas that, because of the complex key format, will break your partitioning. To learn more, see Auto Schema Registration in the On-Premises Schema Registry Tutorial, and Partitioning gotchas in the Confluent Community Forum.
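To see why non-deterministic key serialization matters, recall that Kafka's default partitioner assigns a keyed record to a partition by hashing the serialized key bytes with murmur2 and taking the result modulo the partition count. The sketch below is an illustrative Python port of that hash (not a Kafka or Confluent API). It shows that two JSON encodings of the same logical key are different byte strings, so they can be routed to different partitions.

```python
import json

MASK_32 = 0xFFFFFFFF


def murmur2(data: bytes) -> int:
    """32-bit murmur2 as used by Kafka's default partitioner (illustrative port)."""
    length = len(data)
    m, r = 0x5BD1E995, 24
    h = (0x9747B28C ^ length) & MASK_32
    # Process the input four bytes at a time, little-endian.
    for i in range(0, length - length % 4, 4):
        k = int.from_bytes(data[i:i + 4], "little")
        k = (k * m) & MASK_32
        k ^= k >> r
        k = (k * m) & MASK_32
        h = ((h * m) & MASK_32) ^ k
    # Mix in the remaining one to three bytes.
    tail, remaining = length & ~3, length % 4
    if remaining == 3:
        h ^= data[tail + 2] << 16
    if remaining >= 2:
        h ^= data[tail + 1] << 8
    if remaining >= 1:
        h ^= data[tail]
        h = (h * m) & MASK_32
    h ^= h >> 13
    h = (h * m) & MASK_32
    h ^= h >> 15
    return h


def partition_for(key_bytes: bytes, num_partitions: int) -> int:
    """Clear the sign bit, then take the hash modulo the partition count."""
    return (murmur2(key_bytes) & 0x7FFFFFFF) % num_partitions


# The same logical key, serialized with two different member orders.
key_a = json.dumps({"region": "us", "id": 42}).encode()
key_b = json.dumps({"id": 42, "region": "us"}).encode()
print(key_a == key_b)  # False: different bytes for the same logical key
print(partition_for(key_a, 6), partition_for(key_b, 6))
```

A string UUID or long ID always serializes to the same bytes, so it always hashes to the same partition.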

For detailed examples of key and value schemas, see the discussion under Formats, Serializers, and Deserializers.

Viewing a schema in Control Center (Legacy)

View the schema details for a specific topic.

Select a cluster from the navigation bar.

Click the Topics menu. The Topics page appears (see Manage Topics Using Control Center (Legacy) for Confluent Platform).

Select a topic.

The topic overview page appears.

Click the Schema tab.

The Value schema is displayed by default.

Tip

The Control Center (Legacy) schema display may reorder metadata about the schema. Compared with the example in the previous section, the schema name, namespace, and record type are shown below the field definitions. This is an artifact of the display in Control Center (Legacy); the schema definition is the same.

Click the Key tab to view the key schema if present.
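The reordering described in the tip changes only the layout, not the meaning: JSON object members are unordered, so a schema whose name, namespace, and type appear after the fields parses to the same object. A quick Python check, using the example Payment schema from Create a topic value schema:

```python
import json

# Schema as entered in the editor (metadata first).
as_entered = '{"type": "record", "namespace": "my.examples", "name": "Payment", "fields": [{"name": "id", "type": "string"}, {"name": "amount", "type": "double"}]}'
# Same schema with metadata after the field definitions, as in the display.
# The order of entries inside "fields" still matters in Avro and is unchanged.
as_displayed = '{"fields": [{"name": "id", "type": "string"}, {"name": "amount", "type": "double"}], "name": "Payment", "namespace": "my.examples", "type": "record"}'

# Dict equality ignores member order, so the two definitions compare equal.
print(json.loads(as_entered) == json.loads(as_displayed))  # True
```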

Editing a schema in Control Center (Legacy)

Edit an existing schema for a topic.

Select a cluster from the navigation bar.

Click the Topics menu. The Topics page appears (see Manage Topics Using Control Center (Legacy) for Confluent Platform).

Select a topic.

Click the Schema tab.

Select the Value or Key tab for the schema.

Click anywhere in the schema to enable edit mode and make changes in the schema editor.

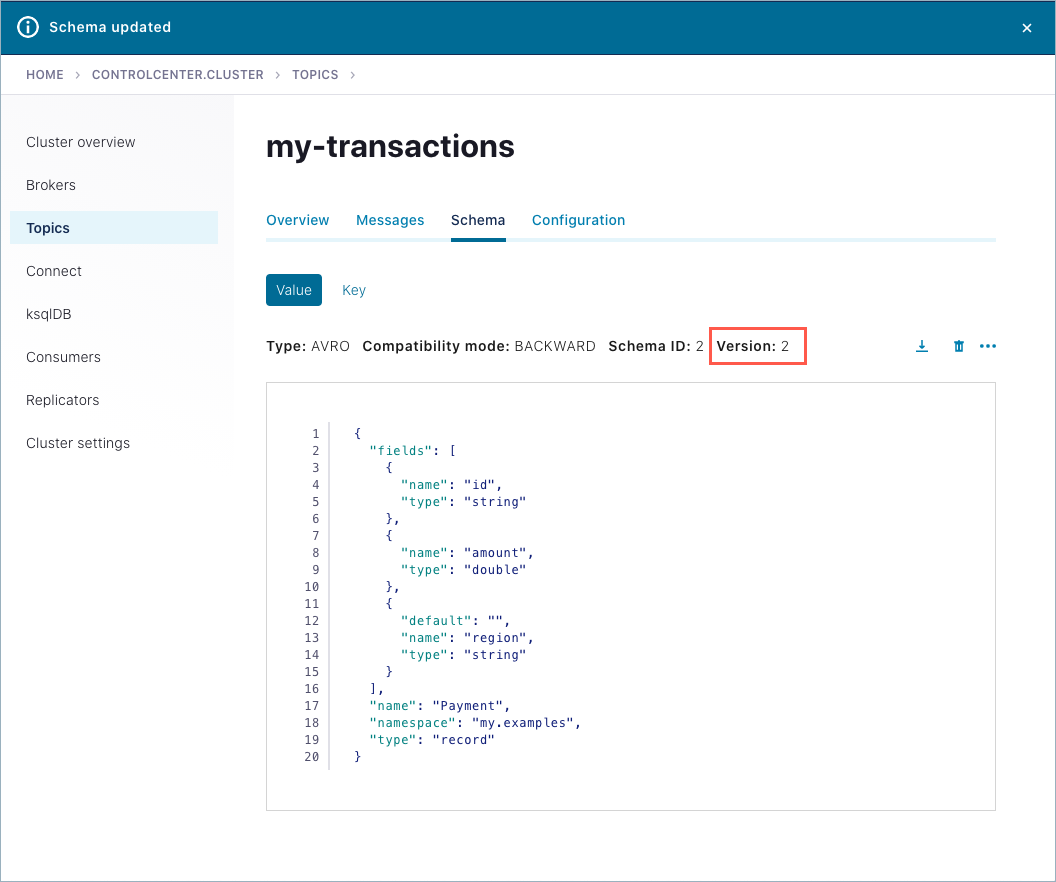

For example, if you are following along with the example:

Select the topic my-transactions, click Schema, and select the Value tab.

Edit the schema by copying and pasting the following definition for a new region field after the id and amount fields. Precede your new definition with a comma, per the JSON syntax.

{ "name": "region", "type": "string", "default": "" }

Note that the new region field includes a default value, which makes the change backward compatible. Because of the default, consumers using the new schema can read data written by producers that use the older schema (without the region field).

Click Save.

If the schema update is valid and compatible with its prior versions (assuming a backward-compatible mode), the schema is updated and the version count is incremented. You can compare the different versions of a schema.

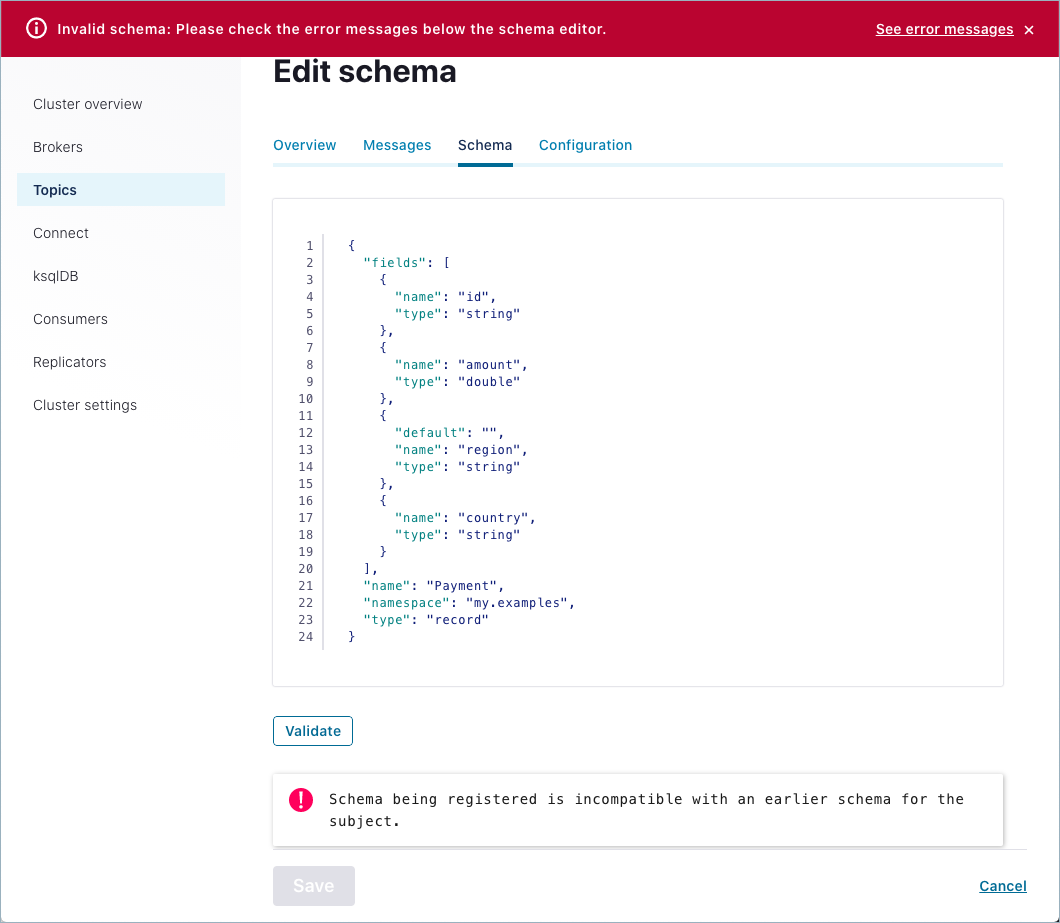

If the schema update is invalid or incompatible with an earlier schema version, an error is displayed.

The example below shows the addition of another new field, country, with no default value. Without a default, the change is not backward compatible, so an error is displayed.

Tip

You can also add schema references as a part of editing a schema, as described in Working with schema references.

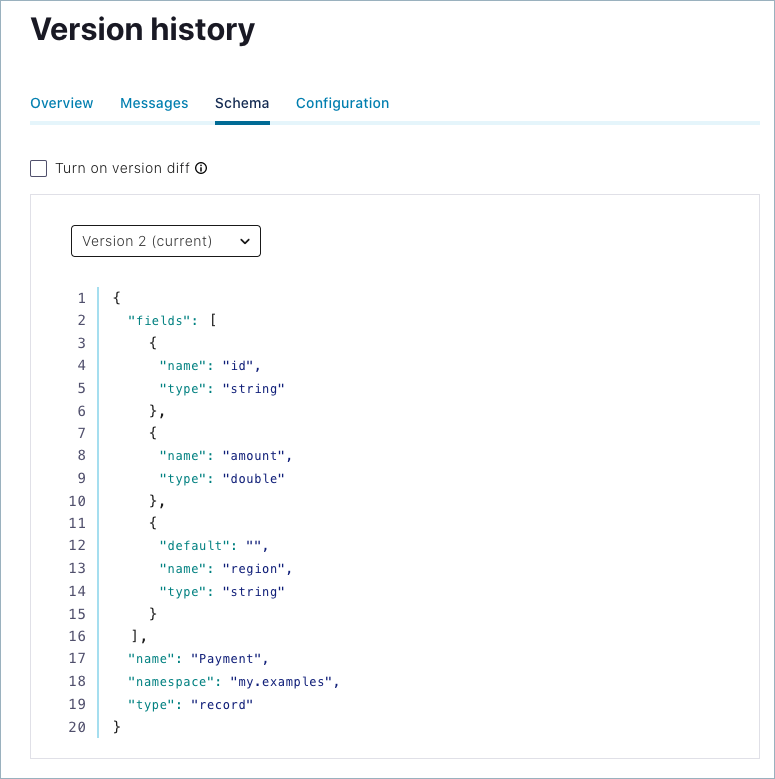

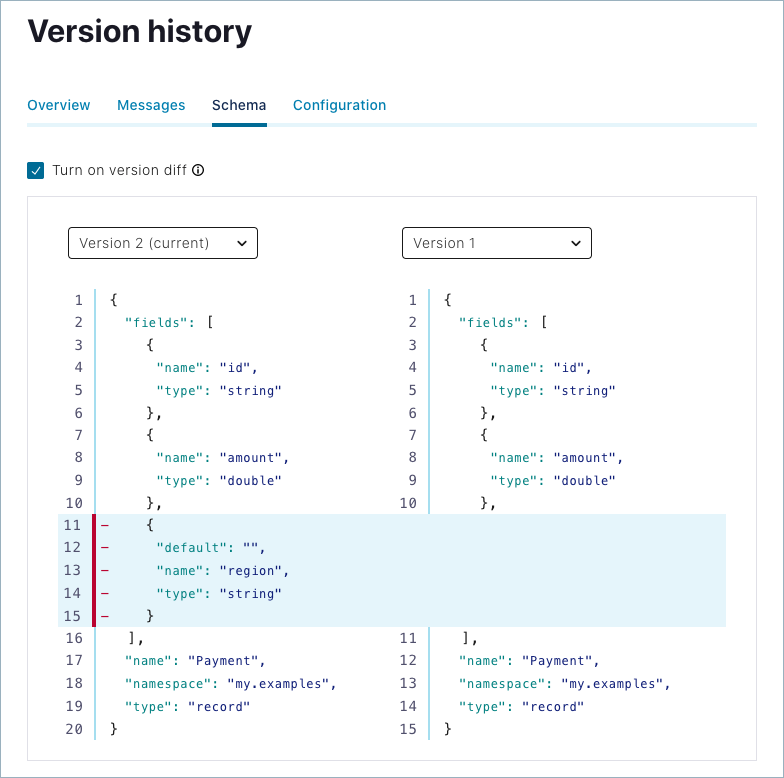
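The rule behind the region and country examples can be sketched in a few lines: under backward compatibility, a consumer using the new schema must be able to read records written with the old one, so every field the new schema adds needs a default. The hypothetical function below is a deliberately simplified check that looks only at added fields; Schema Registry's actual compatibility checker applies many more rules (type changes, removed fields, and so on).

```python
import json


def added_fields_without_default(old_schema: str, new_schema: str) -> list[str]:
    """Return names of fields added in new_schema that lack a default (simplified check)."""
    old_names = {f["name"] for f in json.loads(old_schema)["fields"]}
    return [
        f["name"]
        for f in json.loads(new_schema)["fields"]
        if f["name"] not in old_names and "default" not in f
    ]


base_fields = [{"name": "id", "type": "string"}, {"name": "amount", "type": "double"}]
region = {"name": "region", "type": "string", "default": ""}
country = {"name": "country", "type": "string"}  # no default

v1 = json.dumps({"type": "record", "name": "Payment", "fields": base_fields})
v2 = json.dumps({"type": "record", "name": "Payment", "fields": base_fields + [region]})
v3 = json.dumps({"type": "record", "name": "Payment", "fields": base_fields + [region, country]})

print(added_fields_without_default(v1, v2))  # []: region has a default
print(added_fields_without_default(v2, v3))  # ['country']: would be rejected
```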

Comparing schema versions in Control Center (Legacy)

Compare versions of a schema to view its evolutionary differences.

Select a cluster from the navigation bar.

Click the Topics menu. The Topics page appears (see Manage Topics Using Control Center (Legacy) for Confluent Platform).

Select a topic.

Click the Schema tab.

Select the Key or Value tab for the schema.

Select Version history from the inline menu.

The current version number of the schema is indicated on the version menu.

Select the Turn on version diff check box.

Select the versions to compare from each version menu. The differences are highlighted for comparison.

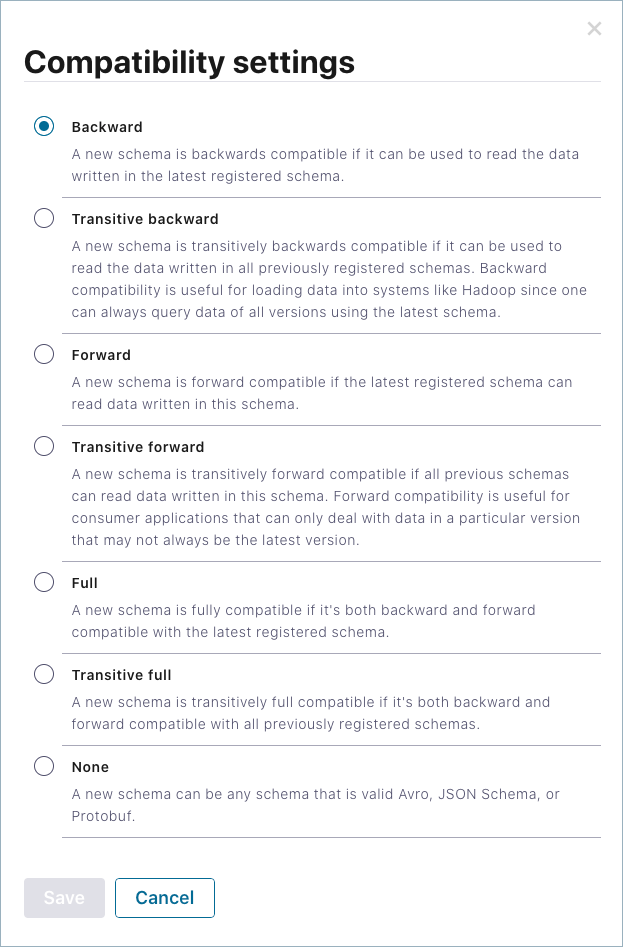

Changing the compatibility mode of a schema in Control Center (Legacy)

The default compatibility mode is Backward. The mode can be changed for the schema of any topic if necessary.

Caution

If you change the compatibility mode of an existing schema already in production use, be aware of any possible breaking changes to your applications.

Select a cluster from the navigation bar.

Click the Topics menu. The Topics page appears (see Manage Topics Using Control Center (Legacy) for Confluent Platform).

Select a topic.

Click the Schema tab.

Select the Key or Value tab for the schema.

Select Compatibility setting from the inline menu.

The Compatibility settings are displayed.

Select a mode option:

Descriptions indicate the compatibility behavior for each option. For more information, including the changes allowed for each option, see Schema Evolution and Compatibility for Schema Registry on Confluent Platform.

Click Save.
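The compatibility mode for a subject can also be set outside the UI through the Schema Registry REST API, which accepts PUT /config/{subject} with a JSON body naming the mode. The sketch below only builds the request with Python's standard library; the registry URL http://localhost:8081 and the subject my-transactions-value are assumptions, and nothing is sent until you pass the request to urllib.request.urlopen.

```python
import json
import urllib.parse
import urllib.request

# Assumed registry location; adjust for your environment.
REGISTRY_URL = "http://localhost:8081"


def compatibility_request(subject: str, mode: str) -> urllib.request.Request:
    """Build (but do not send) a request that sets the compatibility mode for one subject."""
    url = f"{REGISTRY_URL}/config/{urllib.parse.quote(subject, safe='')}"
    body = json.dumps({"compatibility": mode}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={"Content-Type": "application/vnd.schemaregistry.v1+json"},
    )


request = compatibility_request("my-transactions-value", "BACKWARD")
# urllib.request.urlopen(request) would send it to a running Schema Registry.
```

The same caution applies as in the UI: changing the mode of a subject already in production can affect your applications.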

Downloading a schema from Control Center (Legacy)

Select a cluster from the navigation bar.

Click the Topics menu. The Topics page appears (see Manage Topics Using Control Center (Legacy) for Confluent Platform).

Select a topic.

Click the Schema tab.

Select the Key or Value tab for the schema.

Click Download. A schema JSON file for the topic is downloaded into your Downloads directory.

Example filename:

schema-transactions-v1-Ry_XaOGvTxiZVZ5hbBhWRA.json

Example contents:

{"subject":"transactions-value","version":1,"id":2,"schema":"{\"type\":\"record\",\"name\":\"Payment\", \"namespace\":\"io.confluent.examples.clients.basicavro\", \"fields\":[{\"name\":\"id\",\"type\":\"string\"},{\"name\":\"amount\",\"type\":\"double\"}, {\"name\":\"region\",\"type\":\"string\"}]}"}

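This is the first version (version 1) of the schema in the transactions-value subject, and it has a schema ID of 2. (Schema IDs are unique across the registry, while version numbers count within a subject.) The schema is escaped JSON: a backslash precedes each double quote.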
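Because the schema field is itself a JSON document stored as a string, reading a downloaded file takes two decoding steps. A minimal Python sketch, using the example contents above inline instead of reading the file from disk:

```python
import json

downloaded = r'''{"subject":"transactions-value","version":1,"id":2,"schema":"{\"type\":\"record\",\"name\":\"Payment\", \"namespace\":\"io.confluent.examples.clients.basicavro\", \"fields\":[{\"name\":\"id\",\"type\":\"string\"},{\"name\":\"amount\",\"type\":\"double\"}, {\"name\":\"region\",\"type\":\"string\"}]}"}'''

envelope = json.loads(downloaded)        # first pass: subject, version, id, schema string
schema = json.loads(envelope["schema"])  # second pass: the schema definition itself

print(envelope["subject"], envelope["version"], envelope["id"])  # transactions-value 1 2
print([field["name"] for field in schema["fields"]])             # ['id', 'amount', 'region']
```

For a real download, replace the inline string with json.load on the opened file.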

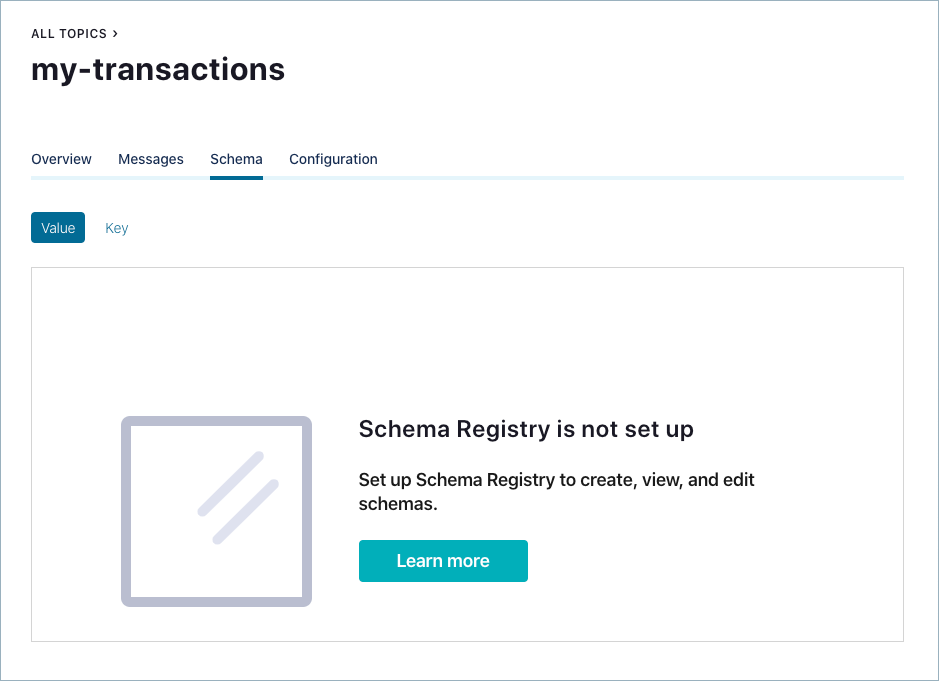

Troubleshoot error “Schema Registry is not set up”

If you get an error message on Control Center (Legacy) when you try to access a topic schema (“Schema Registry is not set up”), first make sure that Schema Registry is running. Then verify that the Schema Registry listeners configuration matches the Control Center (Legacy) confluent.controlcenter.schema.registry.url configuration. Also check the HTTPS configuration parameters.
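The listeners check can be scripted. The sketch below uses hypothetical helpers with a deliberately minimal properties parser (it handles only key=value lines and ignores continuations and escapes). It treats a 0.0.0.0 listener as matching any host and falls back to http://localhost:8081, the default for confluent.controlcenter.schema.registry.url. It cannot detect cases where Control Center reaches the registry through a different hostname or proxy.

```python
from urllib.parse import urlsplit


def parse_properties(text: str) -> dict[str, str]:
    """Minimal .properties parser: key=value lines; skips blanks and # or ! comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def registry_url_matches(schema_registry_props: str, control_center_props: str) -> bool:
    """True if a Schema Registry listener matches the URL Control Center (Legacy) uses."""
    listeners = parse_properties(schema_registry_props).get("listeners", "")
    target = urlsplit(parse_properties(control_center_props).get(
        "confluent.controlcenter.schema.registry.url", "http://localhost:8081"))
    for listener in listeners.split(","):
        candidate = urlsplit(listener.strip())
        # A 0.0.0.0 listener binds every interface, so treat it as matching any host.
        same_host = candidate.hostname in ("0.0.0.0", target.hostname)
        if candidate.scheme == target.scheme and candidate.port == target.port and same_host:
            return True
    return False


sr_props = "listeners=http://0.0.0.0:8081\n"
c3_props = "confluent.controlcenter.schema.registry.url=https://localhost:8081\n"
print(registry_url_matches(sr_props, c3_props))  # False: http listener, https URL
```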

For more information, see A schema for message values has not been set for this topic, and start-up procedures for Quick Start for Confluent Platform, or Install Confluent Platform On-Premises, depending on which one of these you are using to run Confluent Platform.

Enabling and disabling Schema Registry in Control Center (Legacy)

The feature that allows working with schemas in Control Center (Legacy) is enabled by default. The feature can be disabled if an organization does not want any users to access the feature. After disabling the feature, the Topics Schema menu and the Schema tab are no longer visible in the Control Center (Legacy) UI. The ability to view and edit schemas is disabled.

To disable the Schema Registry feature in Control Center (Legacy):

Set the confluent.controlcenter.schema.registry.enable option in your control-center.properties file to false.

confluent.controlcenter.schema.registry.enable=false

Note

Make the change in the appropriate Control Center (Legacy) properties file or files configured for your environments, including control-center-dev.properties or control-center-production.properties. The properties files are located in /path-to-confluent/etc/confluent-control-center/.

Restart Control Center (Legacy) from your Confluent Platform installation directory and pass in the properties file for the configuration to take effect:

./bin/control-center-stop

./bin/control-center-start etc/confluent-control-center/control-center.properties

Tip

If you are using a Confluent Platform development environment with confluent local, stop and start Control Center (Legacy) as follows:

confluent local services control-center stop

confluent local services control-center start ../etc/confluent-control-center/control-center-dev.properties

To enable the feature again, set the option back to true and restart Control Center (Legacy) with the updated properties file.

Enabling Multi-Cluster Schema Registry

Confluent Platform supports the ability to run multiple schema registries and associate a unique Schema Registry to each Kafka cluster in multi-cluster environments.

The ability to scale up schema registries in conjunction with Kafka clusters is useful for evolving businesses, and particularly supports data governance, organization, and management across departments in large enterprises.

When multi-cluster Schema Registry is configured and running, you can create and manage schemas per topic in Control Center (Legacy) as usual.

Configuration Properties and Files

Multiple Schema Registry clusters may be specified with confluent.controlcenter.schema.registry.{name}.url in the appropriate Control Center (Legacy) properties file. To use a Schema Registry cluster identified in this way, add or verify the following broker and Control Center (Legacy) configurations.

A new endpoint /v1/metadata/schemaRegistryUrls has been exposed by Kafka to return the confluent.schema.registry.url field from the Kafka broker configurations. Control Center (Legacy) uses this field to look up the registries from Kafka broker configurations. To use this, you must configure unique listener endpoints for each cluster:

In the broker server.properties files (unique for each Kafka cluster), specify the REST endpoint with the confluent.http.server.listeners field, which defaults to http://0.0.0.0:8090.

In the appropriate Control Center (Legacy) properties file, use confluent.controlcenter.streams.cprest.url to define the REST endpoint for controlcenter.cluster.

For additional clusters, define REST endpoints using confluent.controlcenter.kafka.{name}.cprest.url. The {name} should be consistent with the Kafka cluster name used for other Kafka Control Center (Legacy) configurations; for example, confluent.controlcenter.kafka.{name}.bootstrap.servers.
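The naming rule above is mechanical: controlcenter.cluster uses confluent.controlcenter.streams.cprest.url, and every additional cluster gets confluent.controlcenter.kafka.{name}.cprest.url. A hypothetical Python helper that renders these lines, using the example names and ports from this page:

```python
def cprest_properties(control_center_url: str, other_clusters: dict[str, str]) -> list[str]:
    """Render cprest.url lines; other_clusters maps cluster name -> broker REST URL."""
    lines = [f"confluent.controlcenter.streams.cprest.url={control_center_url}"]
    for name, url in other_clusters.items():
        # {name} must match the name used in confluent.controlcenter.kafka.{name}.* settings.
        lines.append(f"confluent.controlcenter.kafka.{name}.cprest.url={url}")
    return lines


# Each URL must match confluent.http.server.listeners on that cluster's brokers.
for line in cprest_properties("http://localhost:8090", {"AK1": "http://localhost:8091"}):
    print(line)
```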

A minimal viable configuration touches the following files, and includes settings for these properties (example names and ports are given):

Control Center (Legacy) properties file

The Control Center (Legacy) properties file includes the following (see Control Center (Legacy) Configuration Examples for Confluent Platform):

confluent.controlcenter.schema.registry.url=http://localhost:8081
confluent.controlcenter.schema.registry.sr-1.url=http://localhost:8082
confluent.controlcenter.streams.cprest.url=http://localhost:8090
confluent.controlcenter.kafka.AK1.cprest.url=http://localhost:8091

See Control Center configuration reference for a full description of confluent.controlcenter.schema.registry.url.

Broker configuration file for the Control Center (Legacy) cluster

The Kafka broker configuration file for controlcenter.cluster, such as server0.properties, includes:

confluent.http.server.listeners=http://localhost:8090
confluent.schema.registry.url=http://localhost:8081

Broker configuration file for the Kafka cluster

The Kafka broker configuration file for AK1, such as server1.properties, includes:

confluent.http.server.listeners=http://localhost:8091
confluent.schema.registry.url=http://localhost:8082

With these configurations, editing the schema through the Control Center (Legacy) UI will connect to http://localhost:8081 for controlcenter.cluster and http://localhost:8082 for AK1.

Defaults and Fallback

If a cluster's brokers do not specify confluent.schema.registry.url, the Schema Registry URL in confluent.controlcenter.schema.registry.url is applied to that cluster. For example, if the Schema Registry URL were not provided for AK1, AK1’s associated Schema Registry cluster would also be http://localhost:8081. If confluent.controlcenter.schema.registry.url is not explicitly specified in the Control Center (Legacy) properties file, it defaults to http://localhost:8081.
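The fallback order can be summarized as a small function. This is a simplified model for illustration only (registry_url_for is hypothetical, not Control Center code), using the example values from this section with properties given as Python dictionaries.

```python
DEFAULT_REGISTRY_URL = "http://localhost:8081"  # default described above


def registry_url_for(broker_props: dict[str, str], control_center_props: dict[str, str]) -> str:
    """Return the Schema Registry URL that applies to a cluster, per the fallback rules."""
    # 1. The brokers' own setting wins.
    if "confluent.schema.registry.url" in broker_props:
        return broker_props["confluent.schema.registry.url"]
    # 2. Otherwise use Control Center's default registry, which itself defaults to localhost:8081.
    return control_center_props.get("confluent.controlcenter.schema.registry.url", DEFAULT_REGISTRY_URL)


control_center = {
    "confluent.controlcenter.schema.registry.url": "http://localhost:8081",
    "confluent.controlcenter.schema.registry.sr-1.url": "http://localhost:8082",
}
server0 = {"confluent.schema.registry.url": "http://localhost:8081"}
server1 = {"confluent.schema.registry.url": "http://localhost:8082"}

print(registry_url_for(server0, control_center))  # http://localhost:8081
print(registry_url_for(server1, control_center))  # http://localhost:8082
print(registry_url_for({}, control_center))       # http://localhost:8081 (AK1 without a URL)
```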

Example

The following is a detailed example of a functional multi-cluster Schema Registry setup with two Kafka clusters connected to Control Center (Legacy), each with one broker: one is controlcenter.cluster, and the other is named AK1. Example instructions refer to the location of your Confluent Platform installation as $CONFLUENT_HOME.

To run the example, copy default configuration files to new files per the example filenames below, add/modify properties as shown, and run the components as described in Run the Example.

Important

As of Confluent Platform 7.5, ZooKeeper is deprecated for new deployments. Confluent recommends KRaft mode for new deployments. To learn more about running Kafka in KRaft mode, see KRaft Overview for Confluent Platform, the KRaft steps in the Platform Quick Start, and Settings for other components. The following example provides both KRaft (combined mode) and ZooKeeper configurations. Another example of running multi-cluster Schema Registry on either KRaft or ZooKeeper mode is shown in the Schema Linking Quick Start for Confluent Platform.

Key Configurations

The example properties files are based on the defaults. You can copy the default properties files to use as a basis for the specialized versions of them shown here. The example assumes the new files are in the same directories as the originals.

The KRaft server file is $CONFLUENT_HOME/etc/kafka/kraft/server.properties (KRaft combined mode). Copy this to create server0.properties and server1.properties.

The Schema Registry properties file is $CONFLUENT_HOME/etc/schema-registry/schema-registry.properties. Copy this to create schema-registry0.properties and schema-registry1.properties.

The Control Center (Legacy) properties file is $CONFLUENT_HOME/etc/confluent-control-center/control-center-dev.properties. Copy this to create $CONFLUENT_HOME/etc/confluent-control-center/control-center-multi-sr.properties. In addition to the configurations shown below for this file, we recommend commenting out the zookeeper.connect line, as it doesn’t apply in this mode.

| File | Properties |
| --- | --- |
| server0.properties | |
| server1.properties | |
| schema-registry0.properties | |
| schema-registry1.properties | |
| control-center-multi-sr.properties | cpcrest.url, confluent.controlcenter.kafka.AK1.cprest.url, and confluent.controlcenter.schema.registry.SR-AK1.url are new properties, specific to multi-cluster Schema Registry. |

Tip

The values for kafkastore.topic and schema.registry.group.id must be unique for each Schema Registry properties file because in this example the two registries are colocated on localhost. If the Schema Registry clusters were on different hosts, you would not need to make these changes.
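The uniqueness requirement in the tip can be checked before you start the registries. The hypothetical Python helper below compares the two settings across Schema Registry properties files given as dictionaries. The values shown are illustrative assumptions (kafkastore.topic=_schemas and schema.registry.group.id=schema-registry are the usual defaults a copied file would carry), not the contents of the example files.

```python
from collections import Counter

# Settings that must differ between registries colocated on one host (per the tip above).
MUST_BE_UNIQUE = ("kafkastore.topic", "schema.registry.group.id")


def duplicated_settings(configs: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Map each setting in MUST_BE_UNIQUE to the values shared by more than one file."""
    problems = {}
    for key in MUST_BE_UNIQUE:
        counts = Counter(props[key] for props in configs.values() if key in props)
        shared = sorted(value for value, n in counts.items() if n > 1)
        if shared:
            problems[key] = shared
    return problems


configs = {
    "schema-registry0.properties": {
        "kafkastore.topic": "_schemas",
        "schema.registry.group.id": "schema-registry",
    },
    "schema-registry1.properties": {
        "kafkastore.topic": "_schemas",  # copied unchanged: a conflict
        "schema.registry.group.id": "schema-registry-ak1",
    },
}
print(duplicated_settings(configs))  # {'kafkastore.topic': ['_schemas']}
```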

The example properties files are based on the defaults. You can copy the default properties files to use as a basis for the specialized versions of them shown here. The example assumes the new files are in the same directories as the originals.

ZooKeeper

The zookeeper.properties file is $CONFLUENT_HOME/etc/kafka/zookeeper.properties. Copy this to create zookeeper0.properties and zookeeper1.properties.

The Kafka server.properties file is in $CONFLUENT_HOME/etc/kafka/. Copy this to create server0.properties and server1.properties.

The Schema Registry properties file is $CONFLUENT_HOME/etc/schema-registry/schema-registry.properties. Copy this to create schema-registry0.properties and schema-registry1.properties.

The Control Center (Legacy) properties file is $CONFLUENT_HOME/etc/confluent-control-center/control-center-dev.properties. Copy this to create $CONFLUENT_HOME/etc/confluent-control-center/control-center-multi-sr.properties.

| File | Properties |
| --- | --- |
| zookeeper0.properties | |
| zookeeper1.properties | |
| server0.properties | |
| server1.properties | |
| schema-registry0.properties | |
| schema-registry1.properties | |
| control-center-multi-sr.properties | cpcrest.url, confluent.controlcenter.kafka.AK1.cprest.url, and confluent.controlcenter.schema.registry.SR-AK1.url are new properties, specific to multi-cluster Schema Registry. |

Tip

The values for kafkastore.topic and schema.registry.group.id must be unique for each Schema Registry properties file because in this example the two registries are colocated on localhost. If the Schema Registry clusters were on different hosts, you would not need to make these changes.

Run the Example

To run the example in KRaft mode:

Configure cluster IDs and format log directories for the Kafka clusters.

Start the Kafka brokers in dedicated command windows, one per broker.

Start the Schema Registry clusters in dedicated command windows, one per Schema Registry cluster.

Start Confluent Control Center (Legacy) in its own dedicated command window.

Configure KRaft specific settings for server0 and server1

The following configuration commands must be run from $CONFLUENT_HOME (the top level directory where you installed Confluent Platform). Assuming you configured your example KRaft servers in the same directory as the default server.properties file, this would be $CONFLUENT_HOME/etc/kafka/kraft.

In a new command window where you plan to run server0, generate a random-uuid for server0 using the kafka-storage tool.

KAFKA_CLUSTER_ID="$(bin/kafka-storage random-uuid)"

Format the log directories for server0:

./bin/kafka-storage format -t $KAFKA_CLUSTER_ID -c $CONFLUENT_HOME/etc/kafka/kraft/server0.properties --ignore-formatted

This is the dedicated window in which you will run server0.

In a new command window where you plan to run server1, generate a random-uuid for server1 using the kafka-storage tool.

KAFKA_CLUSTER_ID="$(bin/kafka-storage random-uuid)"

Format the log directories for server1:

./bin/kafka-storage format -t $KAFKA_CLUSTER_ID -c $CONFLUENT_HOME/etc/kafka/kraft/server1.properties --ignore-formatted

This is the dedicated window in which you will run server1.

Start the Kafka brokers

kafka-server-start etc/kafka/kraft/server0.properties

kafka-server-start etc/kafka/kraft/server1.properties

Start Schema Registry clusters

schema-registry-start etc/schema-registry/schema-registry0.properties

schema-registry-start etc/schema-registry/schema-registry1.properties

Start Control Center

control-center-start etc/confluent-control-center/control-center-multi-sr.properties

To run the example in ZooKeeper mode:

Start the ZooKeepers in dedicated command windows, one per ZooKeeper.

Start the Kafka brokers in dedicated command windows, one per broker.

Start the Schema Registry clusters in dedicated command windows, one per Schema Registry cluster.

Start Confluent Control Center (Legacy) in its own dedicated command window.

Start ZooKeepers

zookeeper-server-start etc/kafka/zookeeper0.properties

zookeeper-server-start etc/kafka/zookeeper1.properties

Start the Kafka brokers

kafka-server-start etc/kafka/server0.properties

kafka-server-start etc/kafka/server1.properties

Start Schema Registry clusters

schema-registry-start etc/schema-registry/schema-registry0.properties

schema-registry-start etc/schema-registry/schema-registry1.properties

Start Control Center

control-center-start etc/confluent-control-center/control-center-multi-sr.properties

Manage Schemas for Both Clusters on Control Center (Legacy)

When the example clusters are running and Control Center (Legacy) finishes initialization, open Control Center (Legacy) in your web browser. (Control Center (Legacy) runs at http://localhost:9021/ by default, as described in Configure and access Control Center (Legacy).)

Select a cluster from the navigation bar, click the Topics menu, and explore the schema management options for one or both clusters.

Security

Any other configurations used to set up a Schema Registry client with Control Center (Legacy) can be configured for an additional Schema Registry cluster by simply appending the Schema Registry cluster’s name to the confluent.controlcenter.schema.registry prefix.

For example, for HTTP Basic authentication with multi-cluster Schema Registry, specify the following in the Confluent Control Center (Legacy) configuration file:

Use confluent.controlcenter.schema.registry.basic.auth.credentials.source and confluent.controlcenter.schema.registry.basic.auth.user.info to define authentication for the confluent.controlcenter.schema.registry.url cluster.

Use confluent.controlcenter.schema.registry.{name}.basic.auth.credentials.source and confluent.controlcenter.schema.registry.{name}.basic.auth.user.info for additional Schema Registry clusters (associated with the URL fields by {name}).

Some Schema Registry client configurations also include a schema.registry prefix. For TLS/SSL security settings, specify the following in the Confluent Control Center (Legacy) configuration file:

Use confluent.controlcenter.schema.registry.schema.registry.ssl.truststore.location and confluent.controlcenter.schema.registry.schema.registry.ssl.truststore.password for the confluent.controlcenter.schema.registry.url cluster.

Use confluent.controlcenter.schema.registry.{name}.schema.registry.ssl.truststore.location and confluent.controlcenter.schema.registry.{name}.schema.registry.ssl.truststore.password for additional Schema Registry clusters (associated with the URL fields by {name}).
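The naming pattern above is a plain prefix rule: a client setting is appended to confluent.controlcenter.schema.registry for the default cluster, or to confluent.controlcenter.schema.registry.{name} for a named one. TLS settings carry their own schema.registry prefix, which is why that segment appears twice. A hypothetical Python helper that spells the pattern out, with sr-1 as an example cluster name:

```python
from typing import Optional

BASE_PREFIX = "confluent.controlcenter.schema.registry"


def registry_client_property(setting: str, cluster_name: Optional[str] = None) -> str:
    """Build the Control Center (Legacy) property name for a Schema Registry client setting.

    With no cluster_name, the property applies to the registry in
    confluent.controlcenter.schema.registry.url; with a name, it applies to the
    registry in confluent.controlcenter.schema.registry.{name}.url.
    """
    prefix = BASE_PREFIX if cluster_name is None else f"{BASE_PREFIX}.{cluster_name}"
    return f"{prefix}.{setting}"


for setting in ("basic.auth.credentials.source", "basic.auth.user.info",
                "schema.registry.ssl.truststore.location"):
    print(registry_client_property(setting, "sr-1"))
```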

To learn more, see Schema Registry authentication properties in the Control Center (Legacy) Configuration Reference under Security for Confluent Platform components settings, the section on Schema Registry in HTTP Basic authentication, and How to configure clients to Schema Registry in the Schema Registry Security Overview.

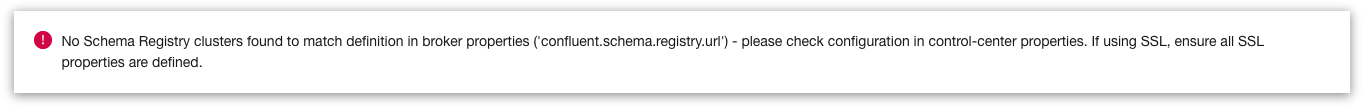

Errors and Troubleshooting

If the brokers for the cluster have matching Schema Registry URLs, but these URLs were not defined in the Control Center (Legacy) properties file, an error message is displayed on the cluster overview page.

Version Compatibility

The confluent.controlcenter.schema.registry.url configuration in the Control Center (Legacy) properties file acts as a default if a cluster’s broker configurations do not contain confluent.schema.registry.url fields. Multiple Schema Registry clusters may be specified with confluent.controlcenter.schema.registry.{name}.url fields.

Multi-cluster Schema Registry cannot be used with Kafka versions prior to Kafka 2.4.x, the version current with Confluent Platform 5.4.0. However, using a single cluster Schema Registry setup will work with earlier Kafka versions. To learn more, see Confluent Platform and Apache Kafka compatibility.

Suggested Reading

See Tutorial: Use Schema Registry on Confluent Platform to Implement Schemas for a Client Application for hands-on examples of developing schemas, mapping to topics, and sending messages.

See Schema Registry for an overview of Schema Registry and schema management.

Control Center (Legacy) Configuration Reference for Confluent Platform

Control Center (Legacy) Configuration Examples for Confluent Platform

A schema for message values has not been set for this topic in Troubleshoot Control Center (Legacy) for Confluent Platform