Schema Registry Deployment Architectures for Confluent Platform

Schema Registry on Confluent Platform is deployed using a single primary architecture with Kafka leader election (ZooKeeper leader election was removed in Confluent Platform 7.0.0). You can also set up multiple Schema Registry servers for high availability deployments, where you switch to a secondary Schema Registry cluster if the primary goes down, and for data migration, either one time or as a continuous feed.

This section describes these architectures.

Single Primary Architecture

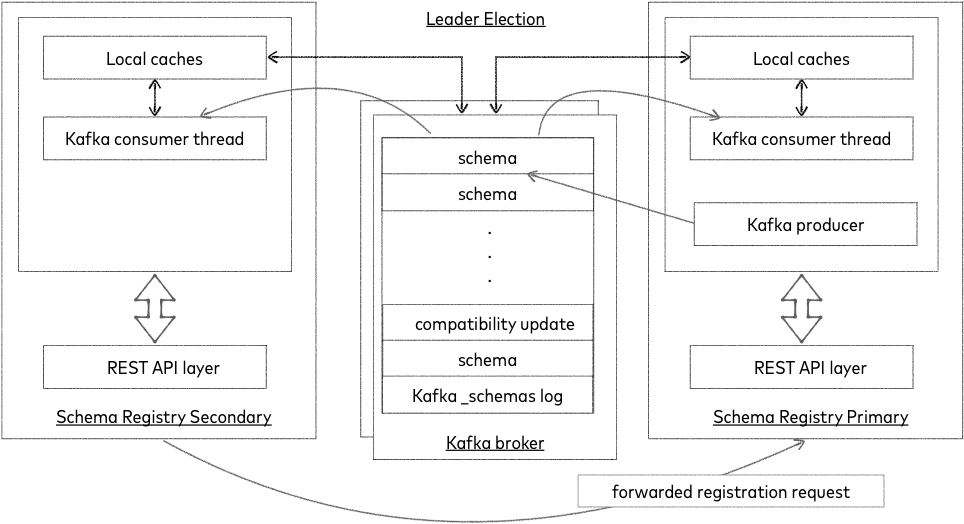

Schema Registry is designed to work as a distributed service using a single primary architecture. In this configuration, at most one Schema Registry instance is the primary at any given moment (ignoring pathological ‘zombie primaries’). Only the primary is capable of publishing writes to the underlying Kafka log, but all nodes are capable of directly serving read requests. Secondary nodes serve registration requests indirectly by forwarding them to the current primary and returning the response supplied by the primary. Primary election is accomplished with the Kafka group protocol. (ZooKeeper based primary election was removed in Confluent Platform 7.0.0.)
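The read and write paths described above can be illustrated with a toy model. This is only a sketch under simplifying assumptions, not Schema Registry's implementation: the node names (sr-1, sr-2) are hypothetical, all nodes share one in-memory list standing in for the Kafka log, and schema IDs are simply log positions.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for the shared Kafka log plus the current primary's name."""
    log: list = field(default_factory=list)  # ordered (subject, schema) writes
    primary: str = ""


@dataclass
class Node:
    name: str
    cluster: Cluster
    nodes: dict  # name -> Node, used only to reach the primary

    def read(self, schema_id: int):
        # Every node serves reads directly.
        return self.cluster.log[schema_id - 1]

    def register(self, subject: str, schema: str):
        if self.name != self.cluster.primary:
            # Secondary: forward to the primary and relay its response.
            return self.nodes[self.cluster.primary].register(subject, schema)
        # Primary: the only node that appends to the log.
        self.cluster.log.append((subject, schema))
        return {"id": len(self.cluster.log), "written_by": self.name}


cluster = Cluster(primary="sr-1")
nodes = {}
for name in ("sr-1", "sr-2"):
    nodes[name] = Node(name, cluster, nodes)

# A registration sent to the secondary is written by the primary.
response = nodes["sr-2"].register("orders-value", '{"type": "string"}')
print(response)  # {'id': 1, 'written_by': 'sr-1'}
print(nodes["sr-2"].read(response["id"]))
```

The caller talks only to sr-2, yet the response shows that sr-1 performed the write, which is the forwarding behavior the paragraph describes.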

Note

Do not mix election modes among the nodes in the same cluster. Doing so leads to multiple primaries and operational issues.

Kafka Coordinator Primary Election

Kafka based Schema Registry

Kafka based primary election is used when kafkastore.connection.url is not configured and the Kafka bootstrap brokers are specified in kafkastore.bootstrap.servers. The Kafka group protocol chooses one of the primary-eligible nodes (those with leader.eligibility=true) as the primary. Kafka based primary election should be used in all cases. (ZooKeeper based leader election was removed in Confluent Platform 7.0.0. See Migration from ZooKeeper primary election to Kafka primary election.)
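As a rough illustration of the rule above, the following sketch classifies each node's settings and flags a cluster whose nodes disagree. The helper names (election_mode, check_cluster) and the hostnames are hypothetical, and treating a configured kafkastore.connection.url as the legacy ZooKeeper mode is an inference from the text, not a documented API.

```python
def election_mode(props: dict) -> str:
    """Classify one node's settings using the rule stated in the text."""
    if props.get("kafkastore.connection.url"):
        return "zookeeper"  # legacy mode, removed in Confluent Platform 7.0.0
    if props.get("kafkastore.bootstrap.servers"):
        return "kafka"
    return "unconfigured"


def check_cluster(node_props: dict) -> list:
    """Return human-readable problems found across all nodes' settings."""
    modes = {name: election_mode(p) for name, p in node_props.items()}
    problems = []
    if len(set(modes.values())) > 1:
        problems.append(f"mixed election modes: {modes}")
    for name, mode in modes.items():
        if mode != "kafka":
            problems.append(f"{name}: expected kafka election, found {mode}")
    return problems


# Hypothetical two-node cluster in which one node still carries old settings.
example = {
    "sr-1": {"kafkastore.bootstrap.servers": "PLAINTEXT://kafka-a1:9092"},
    "sr-2": {"kafkastore.connection.url": "zk-a1:2181"},
}
for problem in check_cluster(example):
    print(problem)
```

A check like this could run before a rolling upgrade to catch a node that was left on the old election mode.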

Schema Registry is also designed for multi-colocated configuration. See Multi-Datacenter Setup for more details.

ZooKeeper Primary Election

Important

ZooKeeper leader election was removed in Confluent Platform 7.0.0. Kafka leader election should be used instead. See Migration from ZooKeeper primary election to Kafka primary election for full details.

High Availability for Single Primary Setup

Many services in Confluent Platform are effectively stateless (they store state in Kafka and load it on demand at start-up) and can redirect requests automatically. You can treat these services as you would deploying any other stateless application and get high availability features effectively for free by deploying multiple instances. Each instance loads all of the Schema Registry state so any node can serve a READ, and all nodes know how to forward requests to the primary for WRITEs.

A recommended approach for highly available clients is to list the URLs of multiple Schema Registry instances in the client's schema.registry.url property. Clients can then use the entire cluster of Schema Registry instances, which provides a method for failover.

This also makes it easy to handle changes to the set of servers without having to reconfigure and restart all of your applications. The same strategy applies to REST proxy or Kafka Connect.

With just a few nodes, Schema Registry can fail over easily using a simple multi-node deployment and the single primary election protocol.

Alternatively, you can use a single URL for schema.registry.url and still use the entire cluster of Schema Registry instances. However, this configuration does not support failover to different Schema Registry instances in a dynamic DNS or virtual IP setup because only one schema.registry.url is surfaced to schema registry clients.
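To make the multi-URL failover idea concrete, here is a minimal sketch of a reader that walks a comma-separated URL list and returns the first successful response. It only illustrates the idea and is not how the Confluent clients are implemented. The function names and the hostnames sr-1 and sr-2 are assumptions, and the demonstration uses a stubbed fetch function so that it runs without a network.

```python
from typing import Callable
from urllib.request import urlopen


def http_get(url: str, timeout: float = 5.0) -> bytes:
    """Plain HTTP GET using only the standard library."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read()


def get_with_failover(registry_urls: str, path: str,
                      fetch: Callable[[str], bytes] = http_get) -> bytes:
    """Try each comma-separated base URL in order; return the first success."""
    last_error = None
    for base in (u.strip() for u in registry_urls.split(",") if u.strip()):
        try:
            return fetch(base.rstrip("/") + path)
        except OSError as err:  # URLError and timeouts are OSError subclasses
            last_error = err
    raise ConnectionError(f"no Schema Registry instance reachable: {last_error}")


def fake_fetch(url: str) -> bytes:
    """Stub for the demo: the hypothetical host sr-1 is down, sr-2 answers."""
    if "sr-1" in url:
        raise ConnectionRefusedError("sr-1 is down")
    return b'["orders-value"]'


urls = "http://sr-1:8081, http://sr-2:8081"
result = get_with_failover(urls, "/subjects", fetch=fake_fetch)
print(result)
```

Because any node can serve a read, the request succeeds as long as at least one listed instance is reachable.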

Examples and Runbooks

The following sections describe single and multi datacenter deployments using Kafka leader election.

Note that ZooKeeper leader election was removed in Confluent Platform 7.0.0. Use Kafka leader election instead. To upgrade to Kafka leader election, see Migration from ZooKeeper primary election to Kafka primary election.

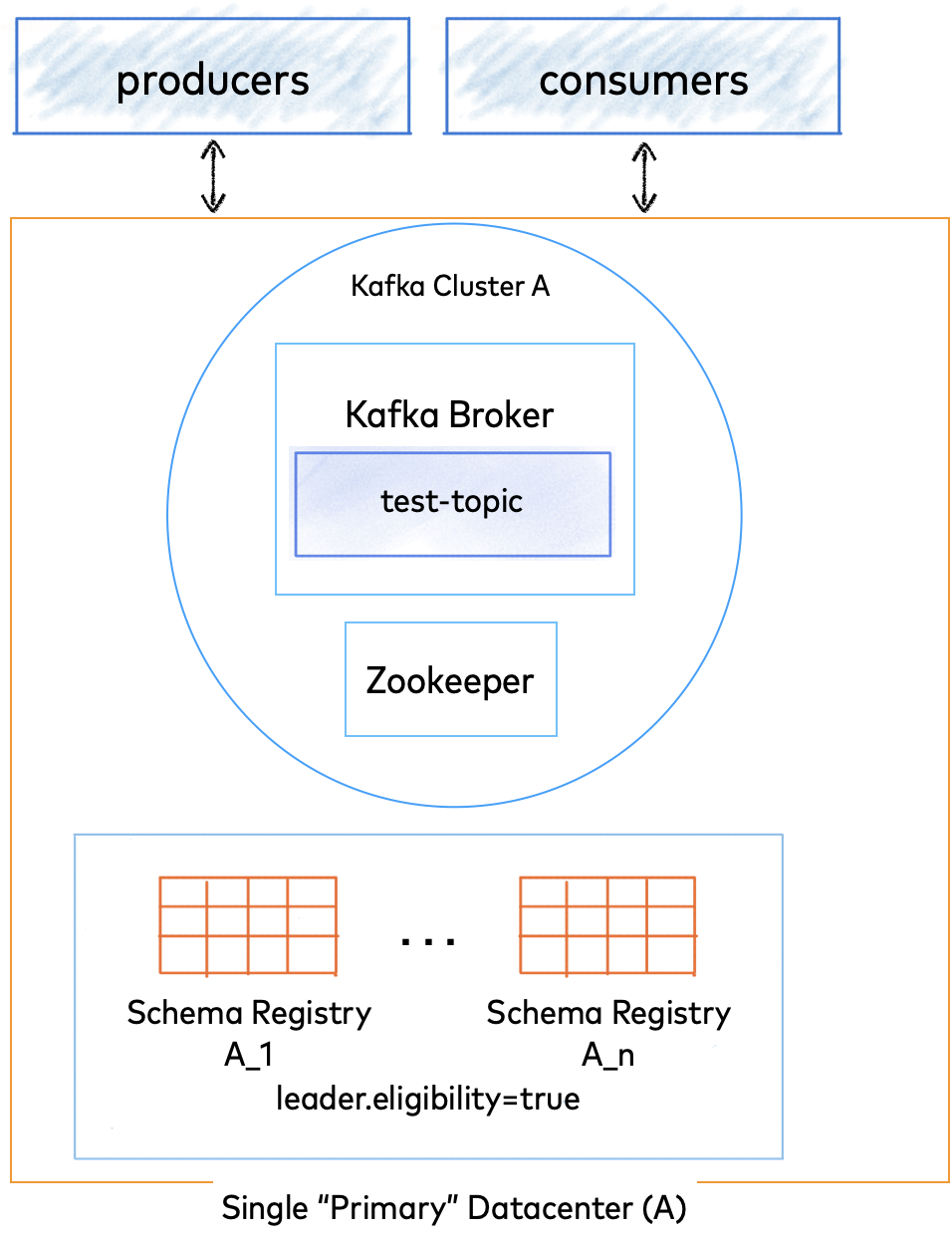

Single Datacenter Setup

Within a single datacenter or location, a multi-node, multi-broker cluster provides Kafka data replication across the nodes.

Producers write and consumers read data to/from topic partition leaders. Leaders replicate data to followers so that messages are copied to more than one broker.

You can configure parameters on producers and consumers to optimize your single cluster deployment for various goals, including message durability and high availability.

Kafka producers can set the acks configuration parameter to control when a write is considered successful. For example, setting acks=all on producers requires that all in-sync replicas acknowledge receiving the data before the leader broker responds to the producer.
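The effect of the acks setting can be summarized as a small decision rule. The function below is a toy model for illustration only: its name and parameters are hypothetical, and isr_size is assumed to count the leader together with its in-sync followers.

```python
def write_acknowledged(acks: str, leader_wrote: bool,
                       isr_acked: int, isr_size: int) -> bool:
    """Toy rule for when a producer treats a write as successful."""
    if acks == "0":
        return True  # the producer does not wait for any broker
    if acks == "1":
        return leader_wrote  # the leader's own write is enough
    if acks in ("all", "-1"):
        # Every in-sync replica (leader included) must have the record.
        return leader_wrote and isr_acked == isr_size
    raise ValueError(f"unsupported acks value: {acks!r}")


# Leader has the record, but only 2 of 3 in-sync replicas have it so far.
print(write_acknowledged("1", True, 2, 3))    # True
print(write_acknowledged("all", True, 2, 3))  # False
```

With acks=all the producer trades some latency for the guarantee that an acknowledged record survives the loss of the leader.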

If a leader broker fails, the Kafka cluster recovers when a follower broker is elected leader and client applications can continue to write and read messages through the new leader.

Kafka Election

Recommended Deployment

Single datacenter with Kafka intra-cluster replication

The image above shows a single data center - DC A. For this example, Kafka is used for leader election, which is recommended.

Note

Earlier releases also supported a single cluster with ZooKeeper leader election, but that mode was removed in Confluent Platform 7.0.0 in favor of Kafka leader election.

Important Settings

kafkastore.bootstrap.servers - This should point to the primary Kafka cluster (DC A in this example).

schema.registry.group.id - The schema.registry.group.id is used as the consumer group.id. For single datacenter setup, make this setting the same for all nodes in the cluster. When set, schema.registry.group.id overrides group.id for the Kafka group when Kafka is used for leader election. (Without this configuration, group.id will be “schema-registry”.)

leader.eligibility - In a single datacenter setup, all Schema Registry instances will be local to the Kafka cluster and should have leader.eligibility set to true.
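As a sketch of how these three settings fit together, the following helper renders them as a properties fragment that would be identical on every node in the datacenter. The helper name and the broker hostnames are hypothetical, and a real deployment needs further settings that are not shown here.

```python
def single_dc_properties(bootstrap_servers: str,
                         group_id: str = "schema-registry") -> str:
    """Render the settings discussed above as a Java-properties fragment."""
    settings = {
        "kafkastore.bootstrap.servers": bootstrap_servers,
        "schema.registry.group.id": group_id,  # identical on every node
        "leader.eligibility": "true",          # all nodes are local to Kafka
    }
    return "\n".join(f"{key}={value}" for key, value in settings.items())


# Hypothetical brokers in DC A.
fragment = single_dc_properties(
    "PLAINTEXT://kafka-a1:9092,PLAINTEXT://kafka-a2:9092"
)
print(fragment)
```

Generating the fragment from one function is a simple way to keep schema.registry.group.id consistent across nodes.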

Run Book

If you have Schema Registry running in a single datacenter and the primary node goes down, what do you do? First, note that the remaining Schema Registry instances can continue to serve requests.

If one Schema Registry node goes down, another node is elected leader and the cluster auto-recovers.

Restart the node, and it will come back as a follower (since a new leader was elected in the meantime).

Multi-Datacenter Setup

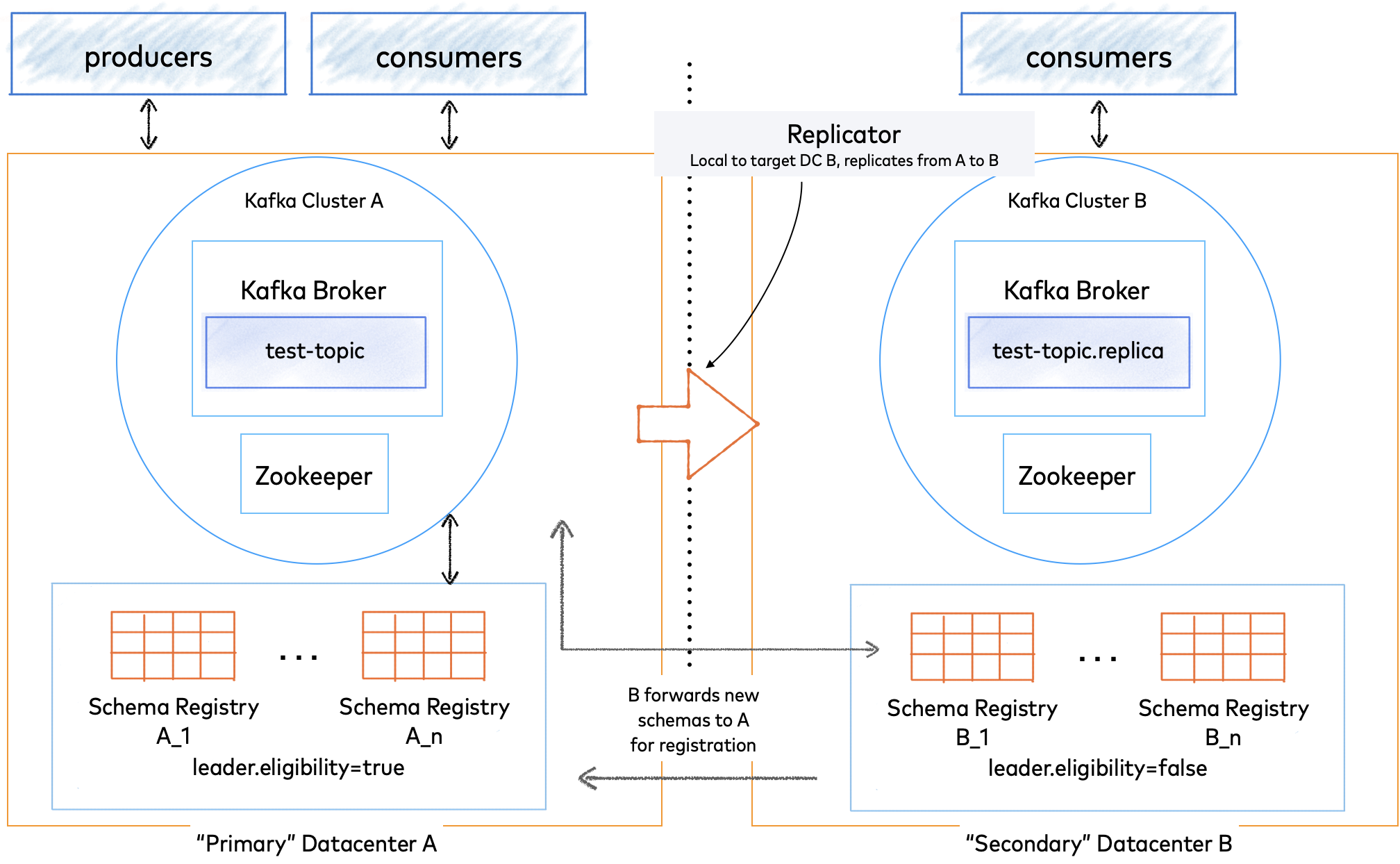

Spanning multiple datacenters (DCs) with your Confluent Schema Registry synchronizes data across sites, further protects against data loss, and reduces latency. The recommended multi-datacenter deployment designates one datacenter as “primary” and all others as “secondary”. If the “primary” datacenter fails and is unrecoverable, you must manually designate what was previously a “secondary” datacenter as the new “primary” per the steps in the Run Books below.

Kafka Election

Recommended Deployment

Multi datacenter with Kafka based primary election

The image above shows two datacenters - DC A, and DC B. Either could be on-premises, in Confluent Cloud, or part of a bridge to cloud solution. Each of the two datacenters has its own Apache Kafka® cluster, ZooKeeper cluster, and Schema Registry.

The Schema Registry nodes in both datacenters link to the primary Kafka cluster in DC A, and the secondary datacenter (DC B) forwards Schema Registry writes to the primary (DC A). Note that Schema Registry nodes and hostnames must be addressable and routable across the two sites to support this configuration.

Schema Registry instances in DC B have leader.eligibility set to false, meaning that none can be elected leader during steady state operation with both datacenters online.
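The effect of leader.eligibility on failover can be sketched with a toy election. In reality the choice is made by the Kafka group protocol; picking the alphabetically first candidate below is only a placeholder for that step, and the node names and dictionary fields are hypothetical.

```python
from typing import Optional


def elect_primary(nodes: dict) -> Optional[str]:
    """Pick a primary among live nodes that are eligible to lead.

    The alphabetical choice is a stand-in for the Kafka group protocol.
    """
    candidates = sorted(
        name for name, n in nodes.items()
        if n["alive"] and n["leader_eligible"]
    )
    return candidates[0] if candidates else None


nodes = {
    "sr-a1": {"dc": "A", "alive": True, "leader_eligible": True},
    "sr-a2": {"dc": "A", "alive": True, "leader_eligible": True},
    "sr-b1": {"dc": "B", "alive": True, "leader_eligible": False},
}
steady_state = elect_primary(nodes)
print(steady_state)  # sr-a1

# Lose one node in DC A: another eligible DC A node takes over.
nodes["sr-a1"]["alive"] = False
after_node_loss = elect_primary(nodes)
print(after_node_loss)  # sr-a2

# Lose all of DC A: DC B stays up, but none of its nodes may lead.
nodes["sr-a2"]["alive"] = False
after_dc_loss = elect_primary(nodes)
print(after_dc_loss)  # None
```

The last case shows why losing DC A requires manual intervention: the DC B nodes keep running, but no node is allowed to become primary until their settings change.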

To protect against complete loss of DC A, Kafka cluster A (the source) is replicated to Kafka cluster B (the target). This is achieved by running the Replicator local to the target cluster (DC B).

In this active-passive setup, Replicator runs in one direction, copying Kafka data and configurations from the active DC A to the passive DC B. The Schema Registry instances in both datacenters point to the internal _schemas topic in DC A. For the purposes of disaster recovery, you must replicate the internal _schemas topic itself. If DC A goes down, the system will fail over to DC B. Therefore, DC B needs a copy of the _schemas topic for this purpose.

Tip

Keep in mind, this failover scenario does not require the same overall configuration needed to migrate schemas. So, do not set schema.registry.topic or schema.subject.translator.class, as you would for a schema migration.

Producers write data to just the active cluster. Depending on the overall design, consumers can read data from the active cluster only, leaving the passive cluster for disaster recovery, or from both clusters to optimize reads on a geo-local cache.

In the event of a partial or complete disaster in one datacenter, applications can fail over to the secondary datacenter.

ACLs and Security

In a multi-DC setup with ACLs enabled, the schemas ACL topic must be replicated.

In the case of an outage, the ACLs will be cached along with the schemas. Schema Registry will continue to run READs with ACLs if the primary Kafka cluster goes down.

For an overview of security strategies and protocols for Schema Registry, see Secure Schema Registry for Confluent Platform.

To learn how to configure ACLs on roles related to Schema Registry, see Schema Registry ACL Authorizer for Confluent Platform.

To learn how to define Kafka topic based ACLs, see Schema Registry Topic ACL Authorizer for Confluent Platform.

To learn about using role-based authorization with Schema Registry, see Configure Role-Based Access Control for Schema Registry in Confluent Platform.

To learn more about Replicator security, see Security and ACL Configurations in the Replicator documentation.

Important Settings

kafkastore.bootstrap.servers - This should point to the primary Kafka cluster (DC A in this example).

schema.registry.group.id - Use this setting to override the group.id for the Kafka group used when Kafka is used for leader election. Without this configuration, group.id will be “schema-registry”. If you want to run more than one Schema Registry cluster against a single Kafka cluster, you should make this setting unique for each cluster.

leader.eligibility - A Schema Registry server with leader.eligibility set to false is guaranteed to remain a follower during any leader election. Schema Registry instances in a “secondary” datacenter should have this set to false, and Schema Registry instances local to the shared Kafka (primary) cluster should have this set to true.

Hostnames must be reachable and resolve across datacenters to support forwarding of new schemas from DC B to DC A.
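A simple consistency check over the node settings can catch the most common mistakes in this layout. The sketch below is illustrative only: the function name, the node dictionary shape, and the hostnames are hypothetical, and it assumes that an unset leader.eligibility behaves as true.

```python
def validate_multi_dc(nodes: list, primary_dc: str) -> list:
    """Return a list of problems found in a multi-datacenter layout."""
    problems = []
    # All nodes, in both datacenters, should point at the same Kafka cluster.
    bootstraps = {
        n["props"].get("kafkastore.bootstrap.servers") for n in nodes
    }
    if len(bootstraps) != 1:
        problems.append("nodes point at different Kafka clusters")
    for n in nodes:
        # Assumption: leader.eligibility defaults to true when unset.
        eligible = n["props"].get("leader.eligibility", "true") == "true"
        should_be = n["dc"] == primary_dc
        if eligible != should_be:
            problems.append(
                f'{n["name"]}: leader.eligibility should be '
                f"{str(should_be).lower()}"
            )
    return problems


dc_a_kafka = "PLAINTEXT://kafka-a1:9092"  # hypothetical DC A broker
layout = [
    {"name": "sr-a1", "dc": "A",
     "props": {"kafkastore.bootstrap.servers": dc_a_kafka,
               "leader.eligibility": "true"}},
    {"name": "sr-b1", "dc": "B",
     "props": {"kafkastore.bootstrap.servers": dc_a_kafka}},  # flag missing
]
issues = validate_multi_dc(layout, primary_dc="A")
print(issues)
```

Here the DC B node is flagged because it omits leader.eligibility and would therefore be treated as eligible under the stated assumption.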

Setup

Assuming you have Schema Registry running, here are the recommended steps to add Schema Registry instances in a new “secondary” datacenter (call it DC B):

In DC B, make sure Kafka has unclean.leader.election.enable set to false.

In DC B, run Replicator with Kafka in the “primary” datacenter (DC A) as the source and Kafka in DC B as the target.

In Schema Registry config files in DC B, set kafkastore.bootstrap.servers to point to the Kafka cluster in DC A and set leader.eligibility to false.

Start your new Schema Registry instances with these configs.

Run Book

If you have Schema Registry running in multiple datacenters and you lose your “primary” datacenter, what do you do? First, note that the remaining Schema Registry instances running on the “secondary” can continue to serve any request that does not result in a write to Kafka. This includes GET requests on existing IDs and POST requests on schemas already in the registry. They will be unable to register new schemas.

If possible, revive the “primary” datacenter by starting Kafka and Schema Registry as before.

If you must designate a new datacenter (call it DC B) as “primary”, reconfigure kafkastore.bootstrap.servers in DC B to point to its local Kafka cluster and update Schema Registry config files to set leader.eligibility to true.

Restart your Schema Registry instances with these new configs in a rolling fashion.
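The reconfiguration step for promoting DC B can be sketched as a small transformation of each node's settings. This is an illustration under assumptions: the function name and hostnames are hypothetical, and the restart itself is left to whatever tooling manages your instances.

```python
def promote_to_primary(props: dict, local_bootstrap: str) -> dict:
    """Return a copy of a DC B node's settings rewritten for the primary role."""
    promoted = dict(props)  # leave the original settings untouched
    promoted["kafkastore.bootstrap.servers"] = local_bootstrap
    promoted["leader.eligibility"] = "true"
    return promoted


# Hypothetical DC B node as configured during steady state.
before = {
    "kafkastore.bootstrap.servers": "PLAINTEXT://kafka-a1:9092",
    "leader.eligibility": "false",
    "schema.registry.group.id": "schema-registry",
}
after = promote_to_primary(before, "PLAINTEXT://kafka-b1:9092")

# Apply to one node at a time so the others keep serving reads.
for node in ("sr-b1", "sr-b2"):
    print(f"{node}: write new settings, restart, wait until healthy")
print(after)
```

Only the two settings named in the run book change; everything else, such as schema.registry.group.id, is carried over as is.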

Multi-Cluster Schema Registry

In the previous disaster recovery scenarios, a single Schema Registry typically serves multiple environments, with each environment potentially containing multiple Kafka clusters.

Starting with version 5.4.1, Confluent Platform supports the ability to run multiple schema registries and associate a unique Schema Registry to each Kafka cluster in multi-cluster environments. Rather than disaster recovery, the primary goal of these types of deployments is the ability to scale by adding special purpose registries to support governance across diverse and massive datasets in large organizations.

To learn more about this configuration, see Enabling Multi-Cluster Schema Registry.