Datagen Source Connector for Confluent Cloud

The fully-managed Datagen Source connector for Confluent Cloud generates mock data for development and testing. The connector supports Avro, JSON Schema, Protobuf, and JSON (schemaless) output formats. The mock source data is provided through GitHub from datagen resources. This connector is not suitable for production use.

For more information and examples to use with the Confluent Cloud API for Connect, see the Confluent Cloud API for Connect Usage Examples section.

Note

This Quick Start is for the fully-managed Confluent Cloud connector. If you are installing the connector locally for Confluent Platform, see Datagen Source Connector for Confluent Platform.

If you require private networking for fully-managed connectors, make sure to set up the proper networking beforehand. For more information, see Manage Networking for Confluent Cloud Connectors.

Limitations

Be sure to review the following information.

If you plan to use one or more Single Message Transforms (SMTs), see SMT Limitations.

If you plan to use Confluent Cloud Schema Registry, see Schema Registry Enabled Environments.

Quick start options

There are several ways to start using the Datagen Source connector in Confluent Cloud. You can get started quickly using a tutorial or you can manually configure the connector. Note that the tutorials are available only if your user account has OrganizationAdmin RBAC role privileges. The following descriptions provide additional information about how to start using the Datagen Source connector.

Start the Produce sample data quick start tutorial from the Confluent Cloud home page after you first launch Confluent Cloud. Using this tutorial, you can create a Kafka topic and configure the Datagen Source connector to produce sample records in the topic. This tutorial is available after you log in to your new Confluent Cloud cluster and may be launched later from its UI tile.

Start the Launch Sample Data quick start tutorial from the Datagen Source connector tile. This quick start automatically creates a sample_data Kafka topic and launches the Datagen Source connector using the quick start template you select. Note that this connector quick start is unavailable if you have already started the Produce sample data tutorial.

Manually configure and launch the Datagen Source connector using the quick start instructions provided in this document.

Quick Start

Use this quick start to get up and running with the Confluent Cloud Datagen Source connector. The quick start covers the basics of selecting the connector and configuring it for testing and development. This connector is not suitable for production use.

Prerequisites

Authorized access to a Confluent Cloud cluster on Amazon Web Services (AWS), Microsoft Azure (Azure), or Google Cloud.

The Confluent CLI installed and configured for the cluster. See Install the Confluent CLI.

Schema Registry must be enabled to use a Schema Registry-based format (for example, Avro, JSON Schema, or Protobuf). See Schema Registry Enabled Environments for additional information.

Kafka cluster credentials. The following lists the different ways you can provide credentials.

Enter an existing service account resource ID.

Create a Confluent Cloud service account for the connector. Make sure to review the ACL entries required in the service account documentation. Some connectors have specific ACL requirements.

Create a Confluent Cloud API key and secret. To create a key and secret, you can use confluent api-key create or you can autogenerate the API key and secret directly in the Cloud Console when setting up the connector.

Using the Confluent Cloud Console

Step 1: Launch your Confluent Cloud cluster

To create and launch a Kafka cluster in Confluent Cloud, see Create a Kafka cluster in Confluent Cloud.

Step 2: Add a connector

In the left navigation menu, click Connectors. If you already have connectors in your cluster, click + Add connector.

Step 3: Select your connector

Click the Datagen Source connector card. Note that you may see a Launch Sample Data tutorial when you click this tile. For more information, see Quick start options.

Step 4: Enter the connector details

Note

Ensure you have all your prerequisites completed.

An asterisk ( * ) designates a required entry.

At the Add Datagen Source Connector screen, complete the following:

Select the topic you want to send data to from the Topics list. To create a new topic, click +Add new topic.

Select the way you want to provide Kafka Cluster credentials. You can choose one of the following options:

My account: This setting allows your connector to globally access everything that you have access to. With a user account, the connector uses an API key and secret to access the Kafka cluster. This option is not recommended for production.

Service account: This setting limits the access for your connector by using a service account. This option is recommended for production.

Use an existing API key: This setting allows you to specify an API key and a secret pair. You can use an existing pair or create a new one. This method is not recommended for production environments.

Note

Freight clusters support only service accounts for Kafka authentication.

Click Continue.

Select a template: Select from built-in quickstart schema specifications. This option cannot be used with the Schema String option (schema.string). Refer to kafka-connect-datagen on GitHub for additional information.

Schema String: Provide a custom JSON-encoded Avro schema. The length of the schema string cannot exceed 10000 characters. This option cannot be used with the Sample data template option. For schema string examples, see datagen resources. For supported annotations, see annotation types. Note the following current annotation type limitations:

The options annotation type object file variant is not supported. The JSON array variant is supported.

The regex annotation type is not supported.
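As a rough illustration of the Schema String option, the following Python sketch builds a small Avro record schema, encodes it as a JSON string, and checks it against the 10000-character limit and the annotation limitations listed above. The record name, field names, and option values are hypothetical. The `arg.properties` annotation layout is assumed to follow the annotation types referenced above, and this local check only approximates whatever validation the connector itself performs.

```python
import json

MAX_SCHEMA_STRING_LENGTH = 10000  # limit stated in this document

# Hypothetical Avro record schema; names and values are illustrative only.
schema = {
    "type": "record",
    "name": "sensor_reading",
    "namespace": "example.datagen",
    "fields": [
        {
            "name": "sensor_id",
            "type": {
                "type": "string",
                # JSON array variant of the options annotation (supported).
                "arg.properties": {"options": ["s-1", "s-2", "s-3"]},
            },
        },
        {
            "name": "temperature",
            "type": {
                "type": "double",
                "arg.properties": {"range": {"min": -10.0, "max": 40.0}},
            },
        },
    ],
}


def find_unsupported_annotations(node, path="$"):
    """Return paths of annotations this document lists as unsupported."""
    problems = []
    if isinstance(node, dict):
        props = node.get("arg.properties")
        if isinstance(props, dict):
            if "regex" in props:
                problems.append(f"{path}: regex annotation")
            # The object (file) variant of options is unsupported; arrays are fine.
            if isinstance(props.get("options"), dict):
                problems.append(f"{path}: options object (file) variant")
        for key, value in node.items():
            problems.extend(find_unsupported_annotations(value, f"{path}.{key}"))
    elif isinstance(node, list):
        for index, item in enumerate(node):
            problems.extend(find_unsupported_annotations(item, f"{path}[{index}]"))
    return problems


def to_schema_string(avro_schema):
    """Encode the schema compactly and enforce the documented length limit."""
    problems = find_unsupported_annotations(avro_schema)
    if problems:
        raise ValueError("unsupported annotations: " + "; ".join(problems))
    encoded = json.dumps(avro_schema, separators=(",", ":"))
    if len(encoded) > MAX_SCHEMA_STRING_LENGTH:
        raise ValueError(f"schema string is {len(encoded)} characters")
    return encoded


schema_string = to_schema_string(schema)
print(len(schema_string), "characters")
```

The compact separators keep the encoded string short, which matters only for schemas that approach the length limit.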

Output messages

Select output record value format: Under Output Kafka record value format, select an output message format (data coming from the connector): AVRO, JSON_SR (JSON Schema), PROTOBUF, or JSON. Schema Registry must be enabled to use a Schema Registry-based format (for example, AVRO, JSON_SR, or PROTOBUF). See Schema Registry Enabled Environments for more information.

Show advanced configurations

Schema context: Select a schema context to use for this connector, if using a schema-based data format. This property defaults to the Default context, which configures the connector to use the default schema set up for Schema Registry in your Confluent Cloud environment. A schema context allows you to use separate schemas (like schema sub-registries) tied to topics in different Kafka clusters that share the same Schema Registry environment. For example, if you select a non-default context, a Source connector uses only that schema context to register a schema and a Sink connector uses only that schema context to read from. For more information about setting up a schema context, see What are schema contexts and when should you use them?.

Schema Keyfield: Name of a field to use as the message key.

Max interval between messages (ms): Sets the maximum interval (in milliseconds) between messages. The default value is 1000.

Additional Configs

Value Converter Decimal Format: Specify the JSON/JSON_SR serialization format for Connect DECIMAL logical type values with two allowed literals: BASE64 to serialize DECIMAL logical types as base64 encoded binary data and NUMERIC to serialize Connect DECIMAL logical type values in JSON/JSON_SR as a number representing the decimal value.

value.converter.replace.null.with.default: Whether to replace null fields that have a default value with that default value. When set to true, the default value is used; otherwise, null is used. Applicable for JSON Converter.

Key Converter Schema ID Serializer: The class name of the schema ID serializer for keys. This is used to serialize schema IDs in the message headers.

Value Converter Reference Subject Name Strategy: Set the subject reference name strategy for value. Valid entries are DefaultReferenceSubjectNameStrategy or QualifiedReferenceSubjectNameStrategy. Note that the subject reference name strategy can be selected only for PROTOBUF format with the default strategy being DefaultReferenceSubjectNameStrategy.

value.converter.schemas.enable: Include schemas within each of the serialized values. Input messages must contain schema and payload fields and may not contain additional fields. For plain JSON data, set this to false. Applicable for JSON Converter.

errors.tolerance: Use this property to configure the connector’s error handling behavior. WARNING: This property should be used with CAUTION for SOURCE CONNECTORS as it may lead to data loss. If you set this property to ‘all’, the connector will not fail on errant records, but will instead log them (and send to DLQ for Sink Connectors) and continue processing. If you set this property to ‘none’, the connector task will fail on errant records.

Value Converter Connect Meta Data: Allow the Connect converter to add its metadata to the output schema. Applicable for Avro Converters.

Value Converter Value Subject Name Strategy: Determines how to construct the subject name under which the value schema is registered with Schema Registry.

Key Converter Key Subject Name Strategy: How to construct the subject name for key schema registration.

value.converter.ignore.default.for.nullables: When set to true, this property ensures that the corresponding record in Kafka is NULL, instead of showing the default column value. Applicable for AVRO, PROTOBUF, and JSON_SR Converters.

Value Converter Schema ID Serializer: The class name of the schema ID serializer for values. This is used to serialize schema IDs in the message headers.

Auto-restart policy

Enable Connector Auto-restart: Control the auto-restart behavior of the connector and its task in the event of user-actionable errors. Defaults to true, enabling the connector to automatically restart in case of user-actionable errors. Set this property to false to disable auto-restart for failed connectors. In such cases, you would need to manually restart the connector.

Transforms

Single Message Transforms: To add a new SMT, see Add transforms. For more information about unsupported SMTs, see Unsupported transformations.

See Configuration Properties for all property values and definitions.

Click Continue.

Based on the number of topic partitions you select, you will be provided with a recommended number of tasks.

To change the number of tasks, use the Range Slider to select the desired number of tasks.

Click Continue.

Verify the connection details by previewing the running configuration.

Once you’ve validated that the properties are configured to your satisfaction, click Launch.

Tip

For information about previewing your connector output, see Data Previews for Confluent Cloud Connectors.

The status for the connector should go from Provisioning to Running.

Step 5: Check the Kafka topic

After the connector is running, verify that messages are populating your Kafka topic.

For more information and examples to use with the Confluent Cloud API for Connect, see the Confluent Cloud API for Connect Usage Examples section.

Using the Confluent CLI

Complete the following steps to set up and run the connector using the Confluent CLI.

Note

Make sure you have all your prerequisites completed.

Step 1: List the available connectors

Enter the following command to list available connectors:

confluent connect plugin list

Step 2: List the connector configuration properties

Enter the following command to show the connector configuration properties:

confluent connect plugin describe <connector-plugin-name>

The command output shows the required and optional configuration properties.

Step 3: Create the connector configuration file

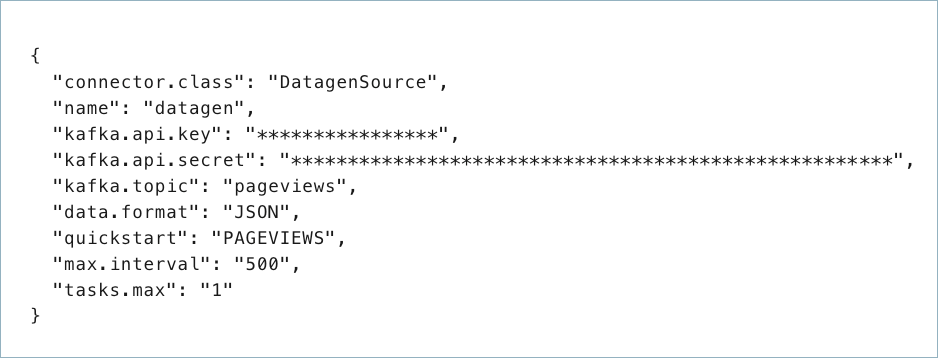

Create a JSON file that contains the connector configuration properties. The following example shows the required connector properties.

{
  "name": "<datagen-connector-name>",
  "connector.class": "DatagenSource",
  "kafka.auth.mode": "KAFKA_API_KEY",
  "kafka.api.key": "<my-kafka-api-key>",
  "kafka.api.secret": "<my-kafka-api-secret>",
  "kafka.topic": "topic1, topic2",
  "output.data.format": "JSON",
  "quickstart": "PAGEVIEWS",
  "tasks.max": "1"
}

Note the following property definitions:

"name": Sets a name for your new connector.

"connector.class": Identifies the connector plugin class.

"kafka.auth.mode": Identifies the connector authentication mode you want to use. There are two options: SERVICE_ACCOUNT or KAFKA_API_KEY (the default). To use an API key and secret, specify the configuration properties kafka.api.key and kafka.api.secret, as shown in the example configuration (above). To use a service account, specify the Resource ID in the property kafka.service.account.id=<service-account-resource-ID>. To list the available service account resource IDs, use the following command:

confluent iam service-account list

For example:

confluent iam service-account list

   Id     | Resource ID |       Name        |    Description
+---------+-------------+-------------------+-------------------
   123456 | sa-l1r23m   | sa-1              | Service account 1
   789101 | sa-l4d56p   | sa-2              | Service account 2

"kafka.topic": Enter one topic or multiple comma-separated topics.

"output.data.format": Sets the output Kafka record value format (data coming from the connector). Valid entries are AVRO, JSON_SR, PROTOBUF, or JSON (schemaless). You must have Confluent Cloud Schema Registry configured if using a schema-based format (for example, Avro).

The following two configuration properties are available to create sample source data:

"schema.string": Provide a custom JSON-encoded Avro schema. The length of the schema string cannot exceed 10000 characters. This option cannot be used with the quickstart property. For schema string examples, see datagen resources. For supported annotations, see annotation types. Note the following current annotation type limitations:

The options annotation type object file variant is not supported. The JSON array variant is supported.

The regex annotation type is not supported.

"quickstart": Enter one of the listed Quick Start schema names. This property cannot be used with the schema.string property. To view the sample data and schema specifications, see datagen resources. The quickstart schema names are:

CLICKSTREAM_CODES

CLICKSTREAM

CLICKSTREAM_USERS

ORDERS

RATINGS

USERS

USERS_ARRAY

PAGEVIEWS

STOCK_TRADES

INVENTORY

PRODUCT

PURCHASES

TRANSACTIONS

STORES

CREDIT_CARDS

CAMPAIGN_FINANCE

FLEET_MGMT_DESCRIPTION

FLEET_MGMT_LOCATION

FLEET_MGMT_SENSORS

PIZZA_ORDERS

PIZZA_ORDERS_COMPLETED

PIZZA_ORDERS_CANCELLED

INSURANCE_OFFERS

INSURANCE_CUSTOMERS

INSURANCE_CUSTOMER_ACTIVITY

GAMING_GAMES

GAMING_PLAYERS

GAMING_PLAYER_ACTIVITY

PAYROLL_EMPLOYEE

PAYROLL_EMPLOYEE_LOCATION

PAYROLL_BONUS

SYSLOG_LOGS

DEVICE_INFORMATION

SIEM_LOGS

SHOES

SHOE_CUSTOMERS

SHOE_ORDERS

SHOE_CLICKSTREAM

Single Message Transforms: See the Single Message Transforms (SMT) documentation for details about adding SMTs using the CLI.

See Configuration Properties for all property values and definitions.
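Before loading the file, it can help to check the configuration against the rules described in this step. The following Python sketch is one possible pre-flight check, not the connector's own validation. The function name, the connector name, and the topic name are hypothetical, and the `schema_registry_enabled` flag is something you set yourself based on your environment.

```python
import json

SCHEMA_BASED_FORMATS = {"AVRO", "JSON_SR", "PROTOBUF"}
VALID_FORMATS = SCHEMA_BASED_FORMATS | {"JSON"}


def check_datagen_config(config, schema_registry_enabled=False):
    """Return a list of problems, using only rules stated in this document."""
    problems = []

    # Authentication: API key and secret, or a service account resource ID.
    auth_mode = config.get("kafka.auth.mode", "KAFKA_API_KEY")
    if auth_mode == "KAFKA_API_KEY":
        for key in ("kafka.api.key", "kafka.api.secret"):
            if not config.get(key):
                problems.append(f"{key} is required for KAFKA_API_KEY")
    elif auth_mode == "SERVICE_ACCOUNT":
        if not config.get("kafka.service.account.id"):
            problems.append("kafka.service.account.id is required for SERVICE_ACCOUNT")
    else:
        problems.append(f"unknown kafka.auth.mode: {auth_mode}")

    # quickstart and schema.string are mutually exclusive.
    if config.get("quickstart") and config.get("schema.string"):
        problems.append("quickstart and schema.string cannot be used together")
    if len(config.get("schema.string", "")) > 10000:
        problems.append("schema.string exceeds 10000 characters")

    # Schema-based formats need Schema Registry.
    output_format = config.get("output.data.format", "JSON")
    if output_format not in VALID_FORMATS:
        problems.append(f"unknown output.data.format: {output_format}")
    elif output_format in SCHEMA_BASED_FORMATS and not schema_registry_enabled:
        problems.append(f"{output_format} requires Schema Registry")
    return problems


# Hypothetical service-account variant of the example configuration.
config = {
    "name": "datagen-pageviews",
    "connector.class": "DatagenSource",
    "kafka.auth.mode": "SERVICE_ACCOUNT",
    "kafka.service.account.id": "sa-l1r23m",
    "kafka.topic": "pageviews",
    "output.data.format": "JSON",
    "quickstart": "PAGEVIEWS",
    "tasks.max": "1",
}

problems = check_datagen_config(config)
if problems:
    raise SystemExit("\n".join(problems))
print(json.dumps(config, indent=2))
```

Redirecting the printed JSON to a file gives a configuration file you can pass to the create command in the next step.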

Step 4: Load the configuration file and create the connector

Enter the following command to load the configuration and start the connector:

confluent connect cluster create --config-file <file-name>.json

For example:

confluent connect cluster create --config-file datagen-source-config.json

Example output:

Created connector confluent-datagen-source lcc-ix4dl

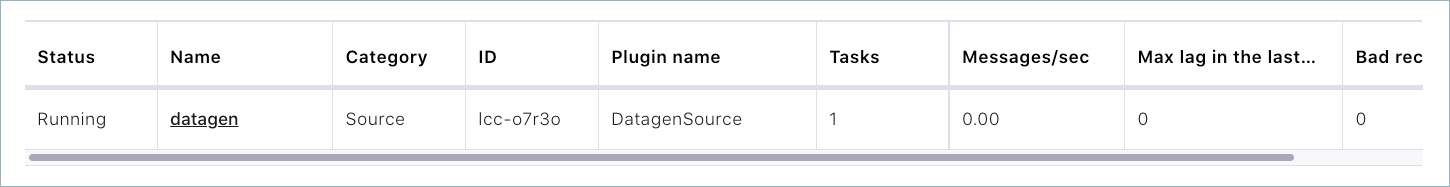

Step 5: Check the connector status

Enter the following command to check the connector status:

confluent connect cluster list

Example output:

     ID     |           Name           | Status  |  Type
+-----------+--------------------------+---------+--------+
  lcc-ix4dl | confluent-datagen-source | RUNNING | source
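If you want to check the status from a script, the table can be parsed as plain text. The following Python sketch assumes the pipe-delimited layout shown in the example output above; the actual layout may differ between CLI versions, so treat the parser as illustrative and the function name as hypothetical.

```python
def parse_connector_table(text):
    """Parse pipe-delimited rows into dictionaries keyed by the header names."""
    rows = []
    header = None
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the +-----+ separator row.
        if not line or set(line) <= set("+-|"):
            continue
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if header is None:
            header = cells
        else:
            rows.append(dict(zip(header, cells)))
    return rows


# Sample text copied from the example output in this step.
sample_output = """
ID | Name | Status | Type
+-----------+--------------------------+---------+-------+
lcc-ix4dl | confluent-datagen-source | RUNNING | source
"""

connectors = parse_connector_table(sample_output)
not_running = [c["Name"] for c in connectors if c["Status"] != "RUNNING"]
print(connectors)
print("not running:", not_running)
```

An empty `not_running` list means every listed connector reports RUNNING.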

Step 6: Check the Kafka topic

After the connector is running, verify that messages are populating your Kafka topic.

For more information and examples to use with the Confluent Cloud API for Connect, see the Confluent Cloud API for Connect Usage Examples section.

Example

Follow the steps in the Quick Start for Confluent Cloud to stream sample data to Kafka using the Datagen Source connector for Confluent Cloud.

Configuration Properties

Use the following configuration properties with the fully-managed connector. For self-managed connector property definitions and other details, see the connector docs in Self-managed connectors for Confluent Platform.

How should we connect to your data?

name

Sets a name for your connector.

Type: string

Valid Values: A string at most 64 characters long

Importance: high

Kafka Cluster credentials

kafka.auth.mode

Kafka Authentication mode. It can be one of KAFKA_API_KEY or SERVICE_ACCOUNT. It defaults to KAFKA_API_KEY mode, whenever possible.

Type: string

Valid Values: SERVICE_ACCOUNT, KAFKA_API_KEY

Importance: high

kafka.api.key

Kafka API Key. Required when kafka.auth.mode==KAFKA_API_KEY.

Type: password

Importance: high

kafka.service.account.id

The Service Account that will be used to generate the API keys to communicate with Kafka Cluster.

Type: string

Importance: high

kafka.api.secret

Secret associated with Kafka API key. Required when kafka.auth.mode==KAFKA_API_KEY.

Type: password

Importance: high

Which topic do you want to send data to?

kafka.topic

Identifies the topic name to write the data to.

Type: string

Importance: high

Schema Config

schema.context.name

Add a schema context name. A schema context represents an independent scope in Schema Registry. It is a separate sub-schema tied to topics in different Kafka clusters that share the same Schema Registry instance. If not used, the connector uses the default schema configured for Schema Registry in your Confluent Cloud environment.

Type: string

Default: default

Importance: medium

Output messages

output.data.format

Sets the output Kafka record value format. Valid entries are AVRO, JSON_SR, PROTOBUF, or JSON. Note that you need to have Confluent Cloud Schema Registry configured if using a schema-based message format like AVRO, JSON_SR, or PROTOBUF.

Type: string

Default: JSON

Importance: high

Datagen Details

quickstart

Select from built-in quickstart schema specifications. Cannot be used with schema.string. Refer to kafka-connect-datagen on GitHub for additional information.

Type: string

Default: “”

Importance: high

schema.string

The literal JSON-encoded Avro schema to use. Cannot be set with quickstart.

Type: string

Default: “”

Valid Values: A string at most 10000 characters long

Importance: medium

schema.keyfield

Name of the field to use as message key. It’s optional when using quickstart.

Type: string

Default: “”

Importance: medium

max.interval

Set the maximum interval (in milliseconds) between each message.

Type: int

Default: 1000

Valid Values: [10,…] for non-dedicated clusters and [5,…] for dedicated clusters

Importance: high
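The valid range for max.interval can be turned into a small pre-flight check. The Python sketch below also derives a rough lower bound on message rate, assuming every gap between messages is at most max.interval milliseconds and ignoring any other overhead. That reading of "maximum interval" is an assumption rather than a documented throughput guarantee, and the function names are hypothetical.

```python
def min_allowed_interval_ms(dedicated_cluster):
    """Smallest max.interval accepted, per the valid values listed above."""
    return 5 if dedicated_cluster else 10


def check_max_interval(max_interval_ms, dedicated_cluster=False):
    """Raise ValueError if the interval is below the documented minimum."""
    minimum = min_allowed_interval_ms(dedicated_cluster)
    if max_interval_ms < minimum:
        raise ValueError(f"max.interval must be at least {minimum} ms")
    return max_interval_ms


def rate_lower_bound_per_task(max_interval_ms):
    """Messages per second if every gap were exactly max.interval long."""
    return 1000.0 / max_interval_ms


interval = check_max_interval(1000)  # the documented default
print(rate_lower_bound_per_task(interval))  # 1.0
fastest = check_max_interval(5, dedicated_cluster=True)
print(rate_lower_bound_per_task(fastest))  # 200.0
```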

Number of tasks for this connector

tasks.max

Maximum number of tasks for the connector.

Type: int

Valid Values: [1,…]

Importance: high

Additional Configs

header.converter

The converter class for the headers. This is used to serialize and deserialize the headers of the messages.

Type: string

Importance: low

producer.override.compression.type

The compression type for all data generated by the producer. Valid values are none, gzip, snappy, lz4, and zstd.

Type: string

Importance: low

producer.override.linger.ms

The producer groups together any records that arrive in between request transmissions into a single batched request. More details can be found in the documentation: https://docs.confluent.io/platform/current/installation/configuration/producer-configs.html#linger-ms.

Type: long

Valid Values: [100,…,1000]

Importance: low

value.converter.allow.optional.map.keys

Allow optional string map key when converting from Connect Schema to Avro Schema. Applicable for Avro Converters.

Type: boolean

Importance: low

value.converter.auto.register.schemas

Specify if the Serializer should attempt to register the Schema.

Type: boolean

Importance: low

value.converter.connect.meta.data

Allow the Connect converter to add its metadata to the output schema. Applicable for Avro Converters.

Type: boolean

Importance: low

value.converter.enhanced.avro.schema.support

Enable enhanced schema support to preserve package information and Enums. Applicable for Avro Converters.

Type: boolean

Importance: low

value.converter.enhanced.protobuf.schema.support

Enable enhanced schema support to preserve package information. Applicable for Protobuf Converters.

Type: boolean

Importance: low

value.converter.flatten.unions

Whether to flatten unions (oneofs). Applicable for Protobuf Converters.

Type: boolean

Importance: low

value.converter.generate.index.for.unions

Whether to generate an index suffix for unions. Applicable for Protobuf Converters.

Type: boolean

Importance: low

value.converter.generate.struct.for.nulls

Whether to generate a struct variable for null values. Applicable for Protobuf Converters.

Type: boolean

Importance: low

value.converter.int.for.enums

Whether to represent enums as integers. Applicable for Protobuf Converters.

Type: boolean

Importance: low

value.converter.latest.compatibility.strict

Verify latest subject version is backward compatible when use.latest.version is true.

Type: boolean

Importance: low

value.converter.object.additional.properties

Whether to allow additional properties for object schemas. Applicable for JSON_SR Converters.

Type: boolean

Importance: low

value.converter.optional.for.nullables

Whether nullable fields should be specified with an optional label. Applicable for Protobuf Converters.

Type: boolean

Importance: low

value.converter.optional.for.proto2

Whether proto2 optionals are supported. Applicable for Protobuf Converters.

Type: boolean

Importance: low

value.converter.scrub.invalid.names

Whether to scrub invalid names by replacing invalid characters with valid characters. Applicable for Avro and Protobuf Converters.

Type: boolean

Importance: low

value.converter.use.latest.version

Use latest version of schema in subject for serialization when auto.register.schemas is false.

Type: boolean

Importance: low

value.converter.use.optional.for.nonrequired

Whether to set non-required properties to be optional. Applicable for JSON_SR Converters.

Type: boolean

Importance: low

value.converter.wrapper.for.nullables

Whether nullable fields should use primitive wrapper messages. Applicable for Protobuf Converters.

Type: boolean

Importance: low

value.converter.wrapper.for.raw.primitives

Whether a wrapper message should be interpreted as a raw primitive at root level. Applicable for Protobuf Converters.

Type: boolean

Importance: low

errors.tolerance

Use this property to configure the connector’s error handling behavior. WARNING: This property should be used with CAUTION for SOURCE CONNECTORS as it may lead to data loss. If you set this property to ‘all’, the connector will not fail on errant records, but will instead log them (and send to DLQ for Sink Connectors) and continue processing. If you set this property to ‘none’, the connector task will fail on errant records.

Type: string

Default: none

Importance: low

key.converter.key.schema.id.serializer

The class name of the schema ID serializer for keys. This is used to serialize schema IDs in the message headers.

Type: string

Default: io.confluent.kafka.serializers.schema.id.PrefixSchemaIdSerializer

Importance: low

key.converter.key.subject.name.strategy

How to construct the subject name for key schema registration.

Type: string

Default: TopicNameStrategy

Importance: low

value.converter.decimal.format

Specify the JSON/JSON_SR serialization format for Connect DECIMAL logical type values with two allowed literals:

BASE64 to serialize DECIMAL logical types as base64 encoded binary data and

NUMERIC to serialize Connect DECIMAL logical type values in JSON/JSON_SR as a number representing the decimal value.

Type: string

Default: BASE64

Importance: low

value.converter.flatten.singleton.unions

Whether to flatten singleton unions. Applicable for Avro and JSON_SR Converters.

Type: boolean

Default: false

Importance: low

value.converter.ignore.default.for.nullables

When set to true, this property ensures that the corresponding record in Kafka is NULL, instead of showing the default column value. Applicable for AVRO, PROTOBUF, and JSON_SR Converters.

Type: boolean

Default: false

Importance: low

value.converter.reference.subject.name.strategy

Set the subject reference name strategy for value. Valid entries are DefaultReferenceSubjectNameStrategy or QualifiedReferenceSubjectNameStrategy. Note that the subject reference name strategy can be selected only for PROTOBUF format with the default strategy being DefaultReferenceSubjectNameStrategy.

Type: string

Default: DefaultReferenceSubjectNameStrategy

Importance: low

value.converter.replace.null.with.default

Whether to replace null fields that have a default value with that default value. When set to true, the default value is used; otherwise, null is used. Applicable for JSON Converter.

Type: boolean

Default: true

Importance: low

value.converter.schemas.enable

Include schemas within each of the serialized values. Input messages must contain schema and payload fields and may not contain additional fields. For plain JSON data, set this to false. Applicable for JSON Converter.

Type: boolean

Default: false

Importance: low

value.converter.value.schema.id.serializer

The class name of the schema ID serializer for values. This is used to serialize schema IDs in the message headers.

Type: string

Default: io.confluent.kafka.serializers.schema.id.PrefixSchemaIdSerializer

Importance: low

value.converter.value.subject.name.strategy

Determines how to construct the subject name under which the value schema is registered with Schema Registry.

Type: string

Default: TopicNameStrategy

Importance: low
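To make the two value.converter.decimal.format literals in this group concrete, the following Python sketch encodes one decimal value both ways. It assumes the usual Kafka Connect representation of a DECIMAL logical type: the unscaled integer as big-endian two's-complement bytes, with the scale carried in the schema. The helper names and the price field are hypothetical, and the output is illustrative rather than captured from the connector.

```python
import base64
import json
from decimal import Decimal


def unscaled_bytes(value, scale):
    """Big-endian two's-complement bytes of value * 10**scale."""
    unscaled = int(value.scaleb(scale))
    length = (unscaled.bit_length() + 8) // 8  # leaves room for the sign bit
    return unscaled.to_bytes(length, byteorder="big", signed=True)


def encode_decimal(value, scale, decimal_format):
    """Return the JSON-ready form of a decimal for the given format literal."""
    if decimal_format == "BASE64":
        return base64.b64encode(unscaled_bytes(value, scale)).decode("ascii")
    if decimal_format == "NUMERIC":
        return float(value)  # json.dumps cannot serialize Decimal directly
    raise ValueError(f"unknown decimal format: {decimal_format}")


price = Decimal("12.34")
print(json.dumps({"price": encode_decimal(price, 2, "BASE64")}))   # {"price": "BNI="}
print(json.dumps({"price": encode_decimal(price, 2, "NUMERIC")}))  # {"price": 12.34}
```

With BASE64, a consumer needs the scale from the schema to recover 12.34 from the bytes; with NUMERIC, the value is readable directly in the JSON payload.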

Auto-restart policy

auto.restart.on.user.error

Enable connector to automatically restart on user-actionable errors.

Type: boolean

Default: true

Importance: medium

Next Steps

For an example that shows fully-managed Confluent Cloud connectors in action with Confluent Cloud for Apache Flink, see the Cloud ETL Demo. This example also shows how to use Confluent CLI to manage your resources in Confluent Cloud.

Suggested Reading

Blog post: Creating a Serverless Environment for Testing Your Apache Kafka Applications