Monitor Dedicated Clusters in Confluent Cloud

Use this section to understand and implement Confluent Cloud monitoring capabilities exclusively on Dedicated clusters. For monitoring capabilities of Basic, Standard, Enterprise, and Freight clusters, see Confluent Cloud Metrics and Monitor Kafka Consumer Lag in Confluent Cloud.

- Cluster Load Metric

Use the cluster load metric to evaluate cluster load on a Dedicated cluster. For more information, see Monitor cluster load.

- Manage Performance and Expansion

Learn how to combine and analyze a number of factors to manage Dedicated cluster performance. For more information, see Dedicated Cluster Performance and Expansion in Confluent Cloud.

- Track Usage by Team

Learn how to track usage by application to gather consumption information for multiple tenants. For more information, see Track Usage by Team on Dedicated Clusters in Confluent Cloud.

Monitor cluster load

The cluster load metric for Dedicated clusters provides visibility into the current load on a cluster.

Access the cluster load metric

You can access the cluster load metric as both a current value and a time series graph that represents historical load values. Use the Metrics API or view the metric in the Confluent Cloud Console.
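As a rough illustration of the Metrics API path, the sketch below only builds a JSON query body for the cluster load time series; it does not send the request. The endpoint URL, the metric name `io.confluent.kafka.server/cluster_load_percent`, the `resource.kafka.id` filter field, and the cluster ID `lkc-example` are assumptions that are not stated in this section, so confirm them against the Metrics API reference before use.

```python
import json
from datetime import datetime, timedelta, timezone

# Assumed endpoint and metric name; verify both in the Metrics API reference.
METRICS_QUERY_URL = "https://api.telemetry.confluent.cloud/v2/metrics/cloud/query"
CLUSTER_LOAD_METRIC = "io.confluent.kafka.server/cluster_load_percent"

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def build_cluster_load_query(cluster_id, end, hours=1, granularity="PT1M"):
    """Build a query body covering the `hours` before `end` (a UTC datetime)."""
    start = end - timedelta(hours=hours)
    interval = f"{start.strftime(TIME_FORMAT)}/{end.strftime(TIME_FORMAT)}"
    return {
        "aggregations": [{"metric": CLUSTER_LOAD_METRIC}],
        # Assumed filter field for selecting a single Kafka cluster.
        "filter": {"field": "resource.kafka.id", "op": "EQ", "value": cluster_id},
        "granularity": granularity,
        "intervals": [interval],
        "limit": 1000,
    }


if __name__ == "__main__":
    # "lkc-example" is a placeholder cluster ID.
    body = build_cluster_load_query("lkc-example", datetime.now(timezone.utc))
    print(f"POST {METRICS_QUERY_URL}")
    print(json.dumps(body, indent=2))
```

The printed body could then be sent as an authenticated POST request with any HTTP client; the authentication details are outside the scope of this sketch.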

To view the cluster load metric in the Cloud Console:

Details about cluster load

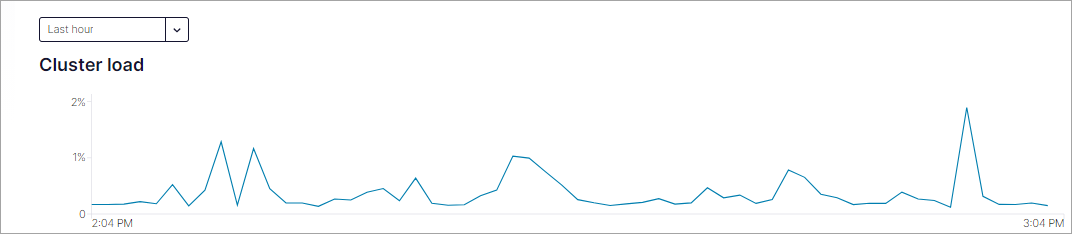

Cluster load is a metric that measures how utilized a Confluent Cloud Dedicated cluster is with its current workload. In the Cloud Console, the cluster load graph shows percentage values between 0 and 100 over the selected time period, with 0% indicating no load and 100% representing a fully utilized cluster. When the cluster load increases, you are also likely to see an increase in observed production and consumption latency.

There are a number of considerations when calculating the cluster load for a Dedicated cluster.

A CKU is the unit that specifies the maximum capability of the following dimensions of a Dedicated cluster:

ingress

egress

connections

requests

The ability to utilize any one of these dimensions depends on workload behavior, and a wide range of workload behaviors can overload a Confluent Cloud cluster without fully utilizing any individual dimension. For example, application patterns such as many partitions or a lot of small requests drive cluster load increases despite using relatively low amounts of throughput. In contrast, applications with long-lived connections and efficient request batching can generally drive more throughput without excessively increasing cluster load.

As a general rule, a higher cluster load results in higher latencies. Latency increases from high cluster load are most visible at the 99th percentile (p99). At p99, very high latencies that result in request or connection timeouts may cause the cluster to appear unavailable.

Clusters in Confluent Cloud protect themselves from reaching an over-utilized state by applying request backpressure (throttling). A cluster load of 80% is the threshold at which applications may start seeing high latencies or timeouts on the cluster, and at which they are more likely to experience throttling as the cluster backpressures requests or connection attempts. To understand if your applications are being throttled, see Throttling.
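The 80% threshold can be applied to a series of cluster load samples, for example values already retrieved from the Metrics API. The following is a minimal sketch; the function names and the sample values are hypothetical and used only for illustration.

```python
# Threshold taken from the text above: at 80% cluster load, latency,
# timeouts, and throttling become more likely.
THROTTLE_RISK_THRESHOLD = 80.0


def is_at_risk(load_percent):
    """Return True when a single cluster load sample is at or above the threshold."""
    if not 0.0 <= load_percent <= 100.0:
        raise ValueError(f"cluster load must be between 0 and 100, got {load_percent}")
    return load_percent >= THROTTLE_RISK_THRESHOLD


def fraction_at_risk(samples):
    """Return the share of samples at or above the threshold (0.0 for no samples)."""
    if not samples:
        return 0.0
    return sum(1 for s in samples if is_at_risk(s)) / len(samples)


if __name__ == "__main__":
    # Hypothetical one-minute samples of cluster load, in percent.
    samples = [50.0, 85.0, 90.0, 70.0]
    print(f"{fraction_at_risk(samples):.0%} of samples at or above the threshold")
```

Looking at the share of samples over the threshold, rather than a single reading, helps separate a brief spike from sustained high load.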

Evaluate cluster expansion with cluster load

Cluster expansion is a reasonable first step in troubleshooting performance issues in your Apache Kafka® applications. Use the cluster load metric to decide whether to expand your clusters. If expansion does not resolve the performance issues, you can shrink the clusters back to their original size.

Generally, expanding clusters provides more capacity for your workloads, and in many cases, will help improve the performance of your Kafka applications. However, there are scenarios in which cluster expansion does not adequately resolve application performance concerns. For more complicated scenarios, see Dedicated Cluster Performance and Expansion in Confluent Cloud.

Confluent provides multiple aggregations of cluster load you can leverage based on your application’s sensitivity. For most scenarios, Confluent recommends using the average aggregation of cluster load to determine when to scale your cluster.

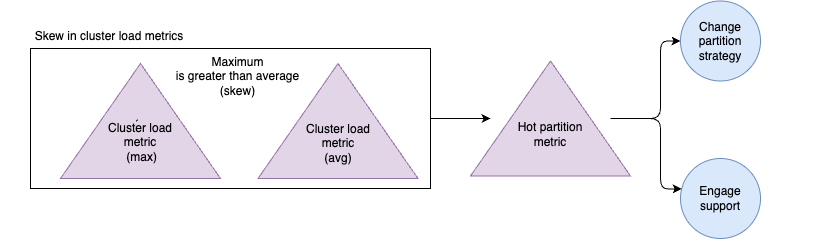

Use skew to determine cluster health

Data skew refers to an uneven distribution of workload across different partitions in a cluster. Skew manifests as a significant difference between the average and maximum values of cluster load across partitions. Use the Metrics API to view metrics.

Cluster load metrics:

cluster_load_percent_max

cluster_load_percent_avg

To view maximum cluster load (cluster_load_percent_max) in Cloud Console:

Navigate to the clusters page for your environment and choose a cluster.

View the Cluster load metric on the cluster Overview page.

Use the drop-down to choose the time period to cover for the maximum cluster load.

Skew can lead to performance bottlenecks and inefficiencies, as the overall processing time or throughput is often determined by the slowest partition. You can address skew by redistributing workloads more evenly among clients or adding partitions.

Considerations:

If cluster load is evenly distributed, the average and maximum values should be relatively close.

If there is skew, you may see a low average cluster load but a much higher maximum cluster load.

Skew indicates an imbalance, where some nodes are overloaded while others are underutilized.

Skew can be caused by factors like too few partitions or partitions receiving heavy traffic.

Monitoring for skew by comparing the average and maximum cluster load values can help detect these imbalances and potential bottlenecks.
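The comparison described in these considerations can be sketched as a small check on the gap between the maximum and average cluster load. The 20-point gap used as the default below is a hypothetical value chosen for illustration, not a Confluent recommendation; pick one that suits your workload.

```python
def load_gap(avg_percent, max_percent):
    """Return the gap, in percentage points, between maximum and average load."""
    if max_percent < avg_percent:
        raise ValueError("maximum cluster load cannot be lower than the average")
    return max_percent - avg_percent


def has_skew(avg_percent, max_percent, gap_threshold=20.0):
    """Flag skew when the max exceeds the average by at least `gap_threshold`.

    `gap_threshold` is an illustrative default, not a documented value.
    """
    return load_gap(avg_percent, max_percent) >= gap_threshold


if __name__ == "__main__":
    # Evenly distributed load: average and maximum are close.
    print(has_skew(avg_percent=60.0, max_percent=65.0))
    # Skewed load: low average but a much higher maximum.
    print(has_skew(avg_percent=35.0, max_percent=90.0))
```

The inputs correspond to the cluster_load_percent_avg and cluster_load_percent_max values for the same time window.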

Response:

Regularly monitor partition metrics and identify hot partitions.

Redistribute traffic or add partitions to alleviate load on hot partitions.

Redistribute traffic among partitions in the same topic by using a different partitioner or unkeyed messages.

If you find partitions that are persistently overloaded, consider scaling up the cluster by adding CKUs to increase capacity.

Address any network-related issues that might be causing uneven data distribution.
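To illustrate why a different partitioner or unkeyed messages can relieve a hot partition, the sketch below simulates how messages land on partitions. It is a simplified model, not Kafka client code: `zlib.crc32` stands in for the hash used by the client's default partitioner, strict round robin is an idealization of how unkeyed messages are spread, and the tenant keys are hypothetical.

```python
import zlib
from collections import Counter


def keyed_partition(key, num_partitions):
    """Map a key to a partition by hashing (crc32 is a stand-in hash)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def distribute_keyed(keys, num_partitions):
    """Count messages per partition when every message carries a key."""
    return Counter(keyed_partition(k, num_partitions) for k in keys)


def distribute_round_robin(message_count, num_partitions):
    """Count messages per partition when unkeyed messages are spread evenly."""
    return Counter(i % num_partitions for i in range(message_count))


def max_to_avg_ratio(counts, num_partitions):
    """Return busiest-partition count divided by the per-partition average."""
    average = sum(counts.values()) / num_partitions
    return max(counts.values()) / average


if __name__ == "__main__":
    partitions = 6
    # One dominant key produces 90 of 100 messages, which creates a hot partition.
    keys = ["tenant-a"] * 90 + [f"tenant-{i}" for i in range(10)]
    keyed = distribute_keyed(keys, partitions)
    unkeyed = distribute_round_robin(len(keys), partitions)
    print("keyed max/avg:  ", round(max_to_avg_ratio(keyed, partitions), 2))
    print("unkeyed max/avg:", round(max_to_avg_ratio(unkeyed, partitions), 2))
```

A ratio close to 1 means messages are spread evenly; a much larger ratio points to a hot partition. Keep in mind that dropping keys also gives up per-key ordering, so this trade-off only applies where ordering by key is not required.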