Tutorial: Access Management on Confluent Cloud

This tutorial provides an end-to-end workflow for Confluent Cloud user and service account management. The steps are:

Step 1: Invite User

Refer to Create a local user account.

Step 2: Configure the CLI, Cluster, and Access to Kafka

Accept the invitation in the email you received, and log in to Confluent Cloud using a web browser.

Log in to the Confluent CLI using the confluent login command.

confluent login

Specify your credentials.

Enter your Confluent Cloud credentials:
Email: jane.smith@big-data.com
Password: ************

The output should resemble:

Logged in as "jane.smith@big-data.com".
Using environment "a-42619" ("default").

You are logged in to the default environment for your organization. If you work in a different environment than the default, set the environment using its ID (<env-id>):

confluent environment use <env-id>

Your output should resemble:

Now using a-4985 as the default (active) environment.

List the available clusters using the confluent kafka cluster list command.

confluent kafka cluster list

The output should resemble:

  Id         | Name          | Type     | Cloud | Region      | Availability | Status
+------------+---------------+----------+-------+-------------+--------------+---------+
  lkc-j5zrrm | dev-test-01   | STANDARD | gcp   | us-central1 | single-zone  | UP
  lkc-382g7m | dev-test-02   | BASIC    | aws   | us-west-2   | single-zone  | UP
  lkc-43npm  | prod-test-01  | BASIC    | aws   | us-west-2   | single-zone  | UP
  lkc-lq8dd  | stage-test-01 | BASIC    | aws   | us-west-2   | single-zone  | DELETED

Connect to cluster dev-test-01 (lkc-j5zrrm) using the confluent kafka cluster use command. The commands in the rest of this tutorial run against this cluster. Be sure to replace the cluster ID shown in the example with your own.

confluent kafka cluster use lkc-j5zrrm

The output should look like:

Set Kafka cluster "lkc-j5zrrm" as the active cluster for environment "a-42619".
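If you switch between clusters often (for example, between dev-test-01 and prod-test-01), the two selection commands can be wrapped in a small shell function. This is a minimal sketch for bash or another sh-compatible shell; use_cluster is a hypothetical helper name, not part of the Confluent CLI, and it only chains the confluent environment use and confluent kafka cluster use commands shown above.

```shell
# Hypothetical helper (not part of the Confluent CLI): select an environment,
# then make one of its clusters the active cluster.
# Usage: use_cluster <env-id> <cluster-id>
use_cluster() {
  if [ "$#" -ne 2 ]; then
    echo "usage: use_cluster <env-id> <cluster-id>" >&2
    return 1
  fi
  # The active cluster is tracked per environment (see the output above),
  # so select the environment first and only then the cluster.
  confluent environment use "$1" && confluent kafka cluster use "$2"
}
```

For example, use_cluster a-42619 lkc-j5zrrm runs the same two commands as this step.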

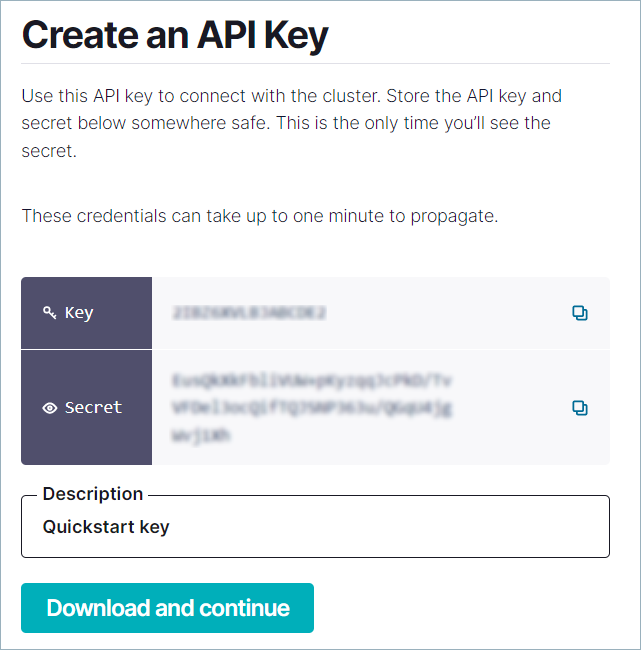

Create an API key and secret, and save them. You must complete this step to produce to or consume from your topics. In this step you create an API key for your user account, which has full permissions. See steps 5 and 6 for guidance on how to create API keys for service accounts and grant them permissions with ACLs.

You can generate the API key using either the Confluent Cloud Console or the Confluent CLI. Be sure to save the API key and secret.

If using the web UI, click the API access tab and click + Add key. Save the key and secret, then click the checkbox next to I have saved my API key and secret and am ready to continue.

If using the Confluent CLI, type the following command (be sure to replace the cluster ID shown in the example with your own):

confluent api-key create --resource lkc-j5zrrm

The output should look like:

It may take a couple of minutes for the API key to be ready.
Save the API key and secret. The secret is not retrievable later.
+-------------+------------------------------------------------------------------+
| API Key     | ABCD1EFGHIJK2LMN                                                 |
| API Secret  | abC1dEf23G4H567IJKLmn8O1PqrST1UvW0XyZAbcdefGHIjK23LMNOpQRSTUv4WX |
+-------------+------------------------------------------------------------------+

Optional: Add the API secret using the confluent api-key store command. When you create an API key with the CLI, it is automatically stored locally. However, when you create an API key using the Confluent Cloud Console or with the CLI on another machine, the secret is not available for use with the Confluent CLI until you store it. API secrets cannot be retrieved after creation.

confluent api-key store <api-key> <api-secret> --resource <resource-id>

Set the API key to use for Confluent CLI commands:

confluent api-key use <api-key> --resource <resource-id>
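The optional store step and the use step can be combined in one helper. The sketch below is a minimal example for bash or another sh-compatible shell, built only on the confluent api-key store and confluent api-key use commands shown above. select_api_key is a hypothetical name, and reading the secret from an API_SECRET variable is a convention of this sketch, not something the Confluent CLI does on its own.

```shell
# Hypothetical helper (not part of the Confluent CLI): make an API key the
# active key for a resource, storing its secret first when one is provided.
# The secret is read from $API_SECRET, a convention of this sketch only.
# Usage: select_api_key <api-key> <resource-id>
select_api_key() {
  if [ "$#" -ne 2 ]; then
    echo "usage: select_api_key <api-key> <resource-id>" >&2
    return 1
  fi
  # Storing is only needed for keys created in the Cloud Console or on another
  # machine; keys created with this CLI installation are already stored locally.
  if [ -n "${API_SECRET:-}" ]; then
    confluent api-key store "$1" "$API_SECRET" --resource "$2" || return 1
  fi
  confluent api-key use "$1" --resource "$2"
}
```

In bash, read -rs API_SECRET sets the variable without echoing the secret to the terminal or recording it in your shell history; then run select_api_key <api-key> <resource-id>.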

Step 3: Create and Manage Topics

Create a topic with all the default values using the confluent kafka topic create command.

confluent kafka topic create myTopic1

The output should look like:

Created topic "myTopic1".

Add another topic:

confluent kafka topic create myTopic2

Create a topic with six partitions.

confluent kafka topic create myTopic3 --partitions 6

The output should look like:

Created topic "myTopic3".
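To create several topics at once, you can loop over confluent kafka topic create. This is a minimal sketch for bash or another sh-compatible shell; create_topics and its <topic>:<partitions> argument format are hypothetical conventions of this example, and the only CLI flag it uses is --partitions, shown above.

```shell
# Hypothetical helper (not part of the Confluent CLI): create several topics,
# each with its own partition count, from <topic>:<partitions> arguments.
# Usage: create_topics myTopic4:6 myTopic5:3
create_topics() {
  local spec topic partitions
  for spec in "$@"; do
    case "$spec" in
      ?*:?*) ;;  # at least one character on each side of the colon
      *)
        echo "expected <topic>:<partitions>, got '$spec'" >&2
        return 1
        ;;
    esac
    topic=${spec%%:*}      # everything before the first colon
    partitions=${spec#*:}  # everything after the first colon
    # Stop at the first create command that fails.
    confluent kafka topic create "$topic" --partitions "$partitions" || return 1
  done
}
```

For example, create_topics myTopic4:6 myTopic5:3 creates two topics in order and stops at the first command that returns an error.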

List topics using the confluent kafka topic list command.

confluent kafka topic list

The output should resemble:

     Name
+----------+
  myTopic1
  myTopic2
  myTopic3

Delete a topic named myTopic1 using the confluent kafka topic delete command.

confluent kafka topic delete myTopic1

The output should look like:

Deleted topic "myTopic1".

Describe a topic using the confluent kafka topic configuration list command.

confluent kafka topic configuration list myTopic2

The output should resemble:

Name                                     | Value
-----------------------------------------+----------------------
cleanup.policy                           | delete
compression.type                         | producer
delete.retention.ms                      | 86400000
file.delete.delay.ms                     | 60000
flush.messages                           | 9223372036854775807
flush.ms                                 | 9223372036854775807
follower.replication.throttled.replicas  |
index.interval.bytes                     | 4096
leader.replication.throttled.replicas    |
max.compaction.lag.ms                    | 9223372036854775807
max.message.bytes                        | 2097164
message.downconversion.enable            | true
message.format.version                   | 3.0-IV1
message.timestamp.difference.max.ms      | 9223372036854775807
message.timestamp.type                   | CreateTime
min.cleanable.dirty.ratio                | 0.5
min.compaction.lag.ms                    | 0
min.insync.replicas                      | 2
num.partitions                           | 6
preallocate                              | false
retention.bytes                          | -1
retention.ms                             | 604800000
segment.bytes                            | 104857600
segment.index.bytes                      | 10485760
segment.jitter.ms                        | 0
segment.ms                               | 604800000
unclean.leader.election.enable           | false

Modify the myTopic2 configuration to set num.partitions using the confluent kafka topic update command. Kafka lets you increase a topic's partition count but not decrease it.

confluent kafka topic update myTopic2 --config num.partitions=8

The output should resemble:

Updated the following configuration values for topic "myTopic2":
       Name      | Value
-----------------+--------
  num.partitions | 8

Step 4: Produce and consume

Produce messages to a topic using the confluent kafka topic produce command.

confluent kafka topic produce myTopic3

Type your messages at the prompt, and press Return after each one.

Your command window should resemble the following:

confluent kafka topic produce myTopic3
Starting Kafka Producer. ^C or ^D to exit
hello
cool topic
did you get this message?
first
second
third
yes! I love this cool topic

If you want to stay on a single screen, type ^C to exit the producer, and then run the consumer with -b (or --from-beginning) as shown in the next step.

Consume messages from a topic using the confluent kafka topic consume command.

confluent kafka topic consume myTopic3 --from-beginning

Your output should resemble the following:

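confluent kafka topic consume myTopic3 --from-beginning
Starting Kafka Consumer. ^C or ^D to exit
second
did you get this message?
first
third
cool topic
hello
yes! I love this cool topic

The messages can arrive in a different order from the one in which they were produced: myTopic3 has six partitions, and Kafka guarantees ordering only within a partition, not across partitions.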

Step 5: Create service accounts and API keys

In Step 2 you added an API key for your user account, which has super.user privileges. This step describes how to create a service account so that you can grant application access with limited permissions.

Create a service account named dev-apps using the confluent iam service-account create command.

confluent iam service-account create "dev-apps" \
  --description "Service account for dev apps"

The output should resemble:

+-------------+------------------------------+
| ID          | sa-1a2b3c                    |
| Name        | dev-apps                     |
| Description | Service account for dev apps |
+-------------+------------------------------+

Note the ID associated with this service account, in this case sa-1a2b3c.

Create an API key and API secret for this service account using the confluent api-key create command. For the resource value, use the cluster ID, which is available from the output of confluent kafka cluster list.

confluent api-key create --service-account sa-1a2b3c --resource lkc-j5zrrm

Take note of the API key and API secret. This is the only time you will be able to see the API secret.

Client applications that will connect to this cluster will need to configure at least these three identifying parameters:

API key: available when you initially create the API key pair

API secret: available when you initially create the API key pair

bootstrap.servers: set to the Endpoint in the output of confluent kafka cluster describe
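As an illustration, the three values above can be written to a client configuration file. This sketch assumes a librdkafka-based client (for example, the Confluent Python or Go client), which takes the API key and secret as sasl.username and sasl.password over SASL_SSL with the PLAIN mechanism; Java clients express the same credentials through sasl.jaas.config instead. write_client_config and the BOOTSTRAP_SERVERS, API_KEY, and API_SECRET variables are hypothetical names chosen for this example.

```shell
# Hypothetical helper (not part of the Confluent CLI): write a configuration
# file for a librdkafka-based client from three environment variables.
# Usage: write_client_config client.properties
write_client_config() {
  if [ "$#" -ne 1 ]; then
    echo "usage: write_client_config <file>" >&2
    return 1
  fi
  if [ -z "${BOOTSTRAP_SERVERS:-}" ] || [ -z "${API_KEY:-}" ] || [ -z "${API_SECRET:-}" ]; then
    echo "set BOOTSTRAP_SERVERS, API_KEY, and API_SECRET first" >&2
    return 1
  fi
  # umask 077 inside a subshell: a newly created file is readable only by you,
  # and the caller's umask is left unchanged.
  (
    umask 077
    cat > "$1" <<EOF
bootstrap.servers=${BOOTSTRAP_SERVERS}
security.protocol=SASL_SSL
sasl.mechanisms=PLAIN
sasl.username=${API_KEY}
sasl.password=${API_SECRET}
EOF
  )
}
```

Set BOOTSTRAP_SERVERS to the cluster endpoint, and API_KEY and API_SECRET to the service account's key pair. Because the file holds the secret, it is created readable only by your user; if the file already exists, its existing permissions are kept.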

Step 6: Manage access with ACLs

Grant the dev-apps service account the ability to produce to topics using the confluent kafka acl create command.

confluent kafka acl create --allow --service-account sa-1a2b3c --operations write --topic myTopic2

  Principal      | Permission | Operation | Resource Type | Resource Name | Pattern Type
-----------------+------------+-----------+---------------+---------------+--------------
  User:sa-1a2b3c | ALLOW      | WRITE     | TOPIC         | myTopic2      | LITERAL

If the service also needs to create topics, grant the dev-apps service account the ability to create new topics.

confluent kafka acl create --allow --service-account sa-1a2b3c --operations create --topic "*"

  Principal      | Permission | Operation | Resource Type | Resource Name | Pattern Type
-----------------+------------+-----------+---------------+---------------+--------------
  User:sa-1a2b3c | ALLOW      | CREATE    | TOPIC         | *             | LITERAL

Grant the dev-apps service account the ability to consume from a particular topic using the confluent kafka acl create command. Note that it requires two commands: one to specify the topic and one to specify the consumer group.

confluent kafka acl create --allow --service-account sa-1a2b3c --operations read --topic myTopic2

  Principal      | Permission | Operation | Resource Type | Resource Name | Pattern Type
-----------------+------------+-----------+---------------+---------------+--------------
  User:sa-1a2b3c | ALLOW      | READ      | TOPIC         | myTopic2      | LITERAL

confluent kafka acl create --allow --service-account sa-1a2b3c --operations read --consumer-group java_example_group_1

  Principal      | Permission | Operation | Resource Type | Resource Name        | Pattern Type
-----------------+------------+-----------+---------------+----------------------+--------------
  User:sa-1a2b3c | ALLOW      | READ      | GROUP         | java_example_group_1 | LITERAL

List all ACLs for the dev-apps service account using the confluent kafka acl list command.

confluent kafka acl list --service-account sa-1a2b3c

The output should resemble:

  Principal      | Permission | Operation | Resource Type | Resource Name        | Pattern Type
-----------------+------------+-----------+---------------+----------------------+--------------
  User:sa-1a2b3c | ALLOW      | CREATE    | TOPIC         | *                    | LITERAL
  User:sa-1a2b3c | ALLOW      | READ      | TOPIC         | myTopic2             | LITERAL
  User:sa-1a2b3c | ALLOW      | WRITE     | TOPIC         | myTopic2             | LITERAL
  User:sa-1a2b3c | ALLOW      | READ      | GROUP         | java_example_group_1 | LITERAL
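Because a consumer always needs both READ ACLs, the pair can be granted in one step. This minimal sketch for bash or another sh-compatible shell uses exactly the two confluent kafka acl create commands shown above; grant_consumer is a hypothetical helper, not a CLI command.

```shell
# Hypothetical helper (not part of the Confluent CLI): grant a service account
# the two READ ACLs it needs to consume one topic within one consumer group.
# Usage: grant_consumer <service-account-id> <topic> <consumer-group>
grant_consumer() {
  if [ "$#" -ne 3 ]; then
    echo "usage: grant_consumer <service-account-id> <topic> <consumer-group>" >&2
    return 1
  fi
  # READ on the topic lets the consumer fetch records from it.
  confluent kafka acl create --allow --service-account "$1" --operations read --topic "$2" || return 1
  # READ on the consumer group lets it join the group and commit offsets.
  confluent kafka acl create --allow --service-account "$1" --operations read --consumer-group "$3"
}
```

For example, grant_consumer sa-1a2b3c myTopic2 java_example_group_1 creates the TOPIC and GROUP READ entries shown in the list above.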

You can add ACLs on prefixed resource patterns. For example, you can add an ACL for any topic whose name starts with demo.

confluent kafka acl create --allow --service-account sa-1a2b3c --operations write --topic demo --prefix

  Principal      | Permission | Operation | Resource Type | Resource Name | Pattern Type
-----------------+------------+-----------+---------------+---------------+--------------
  User:sa-1a2b3c | ALLOW      | WRITE     | TOPIC         | demo          | PREFIXED

You can also add ACLs using a wildcard, which matches any name for that resource type. For example, you can add an ACL that allows writes to a topic of any name.

confluent kafka acl create --allow --service-account sa-1a2b3c --operations write --topic "*"

  Principal      | Permission | Operation | Resource Type | Resource Name | Pattern Type
-----------------+------------+-----------+---------------+---------------+--------------
  User:sa-1a2b3c | ALLOW      | WRITE     | TOPIC         | *             | LITERAL

Linux and macOS users can use either double or single quotes. Windows users must use double quotes around the wildcard character.
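The quotes matter because a Unix shell expands an unquoted * into the names of the files in the current directory before the command runs, so the CLI would receive file names instead of the wildcard. The sketch below demonstrates this in an sh-compatible shell; show_topic_arg and quoting_demo are hypothetical stand-ins that print the value a --topic flag would receive, not Confluent CLI commands. (In zsh, the default shell on recent macOS versions, an unquoted * that matches no files is an error instead.)

```shell
# Hypothetical stand-in for the CLI: prints the first argument it receives,
# which is where the --topic value would land.
show_topic_arg() {
  printf '%s\n' "$1"
}

# Runs in a subshell (note the parentheses) so the directory change does not leak.
quoting_demo() (
  dir=$(mktemp -d) || exit 1
  cd "$dir" || exit 1
  touch client.properties notes.txt  # any files in the current directory
  show_topic_arg *    # unquoted: the shell expands * first, so this prints client.properties
  show_topic_arg "*"  # double quotes: prints a literal *
  show_topic_arg '*'  # single quotes: also prints a literal *
  cd / && rm -rf "$dir"
)

quoting_demo
```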

Remove an ACL from the dev-apps service account using the confluent kafka acl delete command.

confluent kafka acl delete --allow --service-account sa-1a2b3c --operations write --topic myTopic2

Deleted 1 ACLs.

Step 7: Log out

Log out using the confluent logout command.

confluent logout

You are now logged out.