Access and Consume Audit Logs on Confluent Cloud

Confluent Cloud audit logs are enabled by default in Standard, Enterprise, Dedicated, and Freight clusters. Basic clusters do not include audit logs.

Live audit log records are deleted after seven days, but you can retain audit logs by replicating them to another Kafka cluster or external system. For details, see Retain Audit Log Records on Confluent Cloud.

Tip

You can also use Cluster Linking to sync your audit logs to your own Dedicated Confluent Cloud clusters. This lets you consume audit logs with your existing consumer configurations, or use audit logs with fully managed Connect and ksqlDB. For a step-by-step guide, see Use Cluster Linking to Manage Audit Logs.

Prerequisites

- Kafka client

You can use any Kafka client (for example, Confluent CLI, C/C++, or Java) to consume data from the Confluent Cloud audit log topic as long as the client supports SASL authentication. Thus, any prerequisites are specific to the Kafka client you use.

- Consume configuration

The Confluent Cloud Console provides a configuration you can copy and paste into your Kafka client of choice. This configuration allows you to connect to the audit log cluster and consume from the audit log topic.

- Cluster type

Standard, Enterprise, Dedicated, and Freight Kafka clusters support audit logs, which are enabled by default. Basic clusters do not include audit logs.

Access the audit log user interface

To access audit log information in the Confluent Cloud Console, click the Administration menu. If your role grants you permission to manage your organization, and your organization has at least one Standard, Enterprise, or Dedicated Kafka cluster, click Audit log to access your audit log cluster information. Audit logs are enabled automatically, typically within five minutes of successfully provisioning your first Standard, Enterprise, Dedicated, or Freight cluster.

Consume with Confluent CLI

An OrganizationAdmin must create the API key and secret for the audit log cluster. Once created, the API key can be used by any user to consume audit logs. The API key is bound only to the audit log cluster.

Open a terminal and log in to your Confluent Cloud organization.

confluent login

Run the confluent audit-log describe command to identify which resources to use. The following example shows the audit log information provided for a sample cluster.

confluent audit-log describe
+-----------------+----------------------------+
| Cluster         | lkc-yokxv6                 |
| Environment     | env-abc123                 |
| Service Account | sa-ymnkzp                  |
| Topic Name      | confluent-audit-log-events |
+-----------------+----------------------------+

Note

The topic confluent-audit-log-events cannot be viewed in the Confluent Cloud Console, but you can consume it using the Confluent CLI or any Kafka client.

The Service Account in the output is only for information. The Service Account does not need to be used when creating API keys for the audit log cluster.

Specify the environment and cluster to use by running the confluent environment use and confluent kafka cluster use commands. The environment and cluster names are available from the data retrieved in the previous step.

confluent environment use env-abc123
confluent kafka cluster use lkc-yokxv6

If you have an existing API key and secret for your audit log cluster, you can store it locally using the confluent api-key store command.

confluent api-key store <API-key> --resource lkc-yokxv6

To view the existing API keys for your audit log cluster, run the confluent api-key list command using the --resource option.

confluent api-key list --resource lkc-yokxv6

Note

To consume data from the audit log topic, you must have an API key and secret.

If you need to create a new API key and secret for your audit log cluster, run the confluent api-key create command with the --resource flag.

confluent api-key create --resource lkc-yokxv6

Note

Be sure to save the API key and secret. The secret is not retrievable later.

Important

To get the identifier for the audit log cluster, run the Confluent CLI confluent audit-log describe command.

There is a limit of two API keys per audit log cluster. If you need to delete an existing API key for your audit log cluster, run the following command:

confluent api-key delete <API-key>

After creating your API key and secret, copy the API key and paste it into the following command:

confluent api-key use <API-key> --resource lkc-yokxv6

Consume audit log event messages from the audit log topic.

You can use the Confluent CLI to consume audit log events from the audit log cluster by running the following confluent kafka topic consume command:

confluent kafka topic consume -b confluent-audit-log-events

For details about the options you can use with this command, see confluent kafka topic consume.
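The CLI steps above can be chained into a single helper. The following is a minimal sketch, not an official script: the function name consume_audit_log is hypothetical, it assumes the API key and secret are already stored locally (for example, by confluent api-key create or confluent api-key store), and it uses only the commands shown in this section.

```shell
# consume_audit_log ENV_ID CLUSTER_ID API_KEY
# Hypothetical helper: selects the audit log environment and cluster,
# activates an already-stored API key, then consumes the audit log
# topic from the beginning. The chain stops at the first failing command.
consume_audit_log() {
  if [ "$#" -ne 3 ]; then
    echo "usage: consume_audit_log ENV_ID CLUSTER_ID API_KEY" >&2
    return 2
  fi
  confluent environment use "$1" &&
    confluent kafka cluster use "$2" &&
    confluent api-key use "$3" --resource "$2" &&
    confluent kafka topic consume -b confluent-audit-log-events
}
```

With the sample values from the confluent audit-log describe output, the call would be consume_audit_log env-abc123 lkc-yokxv6 <API-key>.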

New connections using a deleted API key are not allowed. You can rotate keys by creating a new key, configuring your clients to use the new key, and then deleting the old one.

To watch authentication events live as they occur, and to see which Kafka cluster API keys are being used, run the following commands:

confluent kafka topic consume confluent-audit-log-events > audit-events.json &

tail -f audit-events.json \
| grep 'kafka.Authentication' \
| jq .data.authenticationInfo.metadata.identifier \
> recently-used-api-keys.txt &

tail -f recently-used-api-keys.txt

Note

The jq command-line JSON processor is third-party software that is not included or installed in Confluent Cloud. If you wish to use it, you must download and install it yourself.
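Once recently-used-api-keys.txt has collected some entries, it can be summarized to see how often each API key appears, which may help when deciding which key is safe to retire. The following is a minimal sketch using only standard shell utilities. The function name summarize_api_key_usage is hypothetical, and the sketch assumes the file holds one identifier per line as written by the jq filter above (quoted JSON strings, or null when an event has no identifier).

```shell
# summarize_api_key_usage FILE
# Hypothetical helper: counts how many times each identifier occurs in
# FILE and prints "COUNT IDENTIFIER" lines, most frequent first.
summarize_api_key_usage() {
  if [ "$#" -ne 1 ]; then
    echo "usage: summarize_api_key_usage FILE" >&2
    return 2
  fi
  # jq prints JSON strings with surrounding quotes and prints null for a
  # missing field, so strip the quotes and drop the null lines first.
  tr -d '"' < "$1" \
    | grep -v '^null$' \
    | sort \
    | uniq -c \
    | sort -rn
}
```

For example, summarize_api_key_usage recently-used-api-keys.txt prints one line per distinct identifier, preceded by its count.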

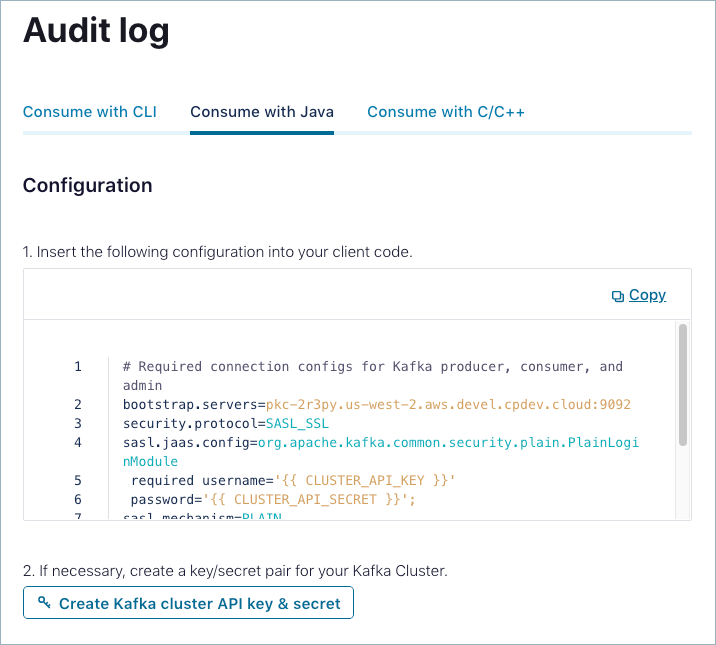

Consume with Java

A user with the OrganizationAdmin role must create the API key and secret for the audit log cluster. Once created, the API key can be used by any user to consume audit logs. The API key is bound only to the audit log cluster.

Sign in to Confluent Cloud Console at https://confluent.cloud.

Go to ADMINISTRATION -> Audit log, which you can find in the top-right Administration menu in the Confluent Cloud Console.

On the Audit log page, click the Consume with Java tab.

Copy and paste the provided configuration into your client.

If necessary, click Create Kafka cluster API key & secret to create a key/secret pair for your Kafka cluster.

Start and connect to the Java client.
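The configuration shown in the Console is the authoritative one. As a rough illustration of the general shape such a configuration tends to take for a Java client, the following sketch writes a properties file using standard Apache Kafka client settings for SASL/PLAIN over TLS. The function name, the default file name, the group.id value, and the bootstrap endpoint you pass in are all assumptions for illustration, not values taken from the Console.

```shell
# write_audit_log_client_config BOOTSTRAP API_KEY API_SECRET [OUTFILE]
# Hypothetical helper: writes a Java consumer properties file built from
# standard Kafka client settings. Copy the real values from the Console.
write_audit_log_client_config() {
  if [ "$#" -lt 3 ]; then
    echo "usage: write_audit_log_client_config BOOTSTRAP API_KEY API_SECRET [OUTFILE]" >&2
    return 2
  fi
  _out="${4:-audit-log-client.properties}"
  cat > "$_out" <<EOF
bootstrap.servers=$1
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$2" password="$3";
key.deserializer=org.apache.kafka.common.serialization.StringDeserializer
value.deserializer=org.apache.kafka.common.serialization.StringDeserializer
group.id=audit-log-consumer
auto.offset.reset=earliest
EOF
  # The file contains the API secret, so limit it to the current user.
  chmod 600 "$_out"
}
```

A Java consumer could then load this file into a Properties object and subscribe to the confluent-audit-log-events topic.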

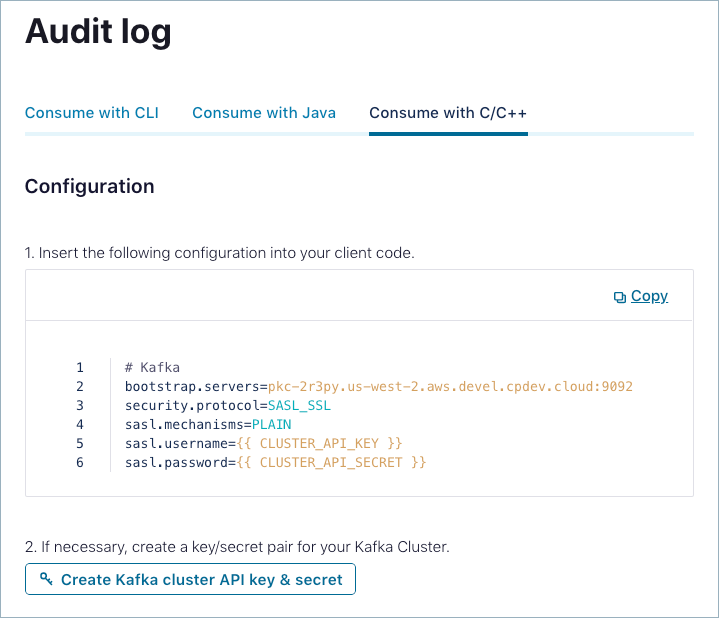

Consume with C/C++

Sign in to Confluent Cloud Console at https://confluent.cloud.

Go to ADMINISTRATION -> Audit log.

On the Audit log page, click the Consume with C/C++ tab.

Copy and paste the provided configuration into your client.

If necessary, click Create Kafka cluster API key & secret to create an API key and secret for your Kafka cluster.

Start and connect to the C/C++ client.

Securing Confluent Cloud audit logs

The Confluent Cloud audit log destination cluster is located on a public cluster in AWS us-west-2 with encrypted storage and a default KMS-managed key rotation schedule of every three years. API keys specific to the audit log cluster are the only means of gaining read-only access.

Secure your organization’s audit logs by protecting the API keys used to read them.

As mentioned previously, Confluent Cloud supports up to two active audit log cluster API keys, and allows live key rotation. For API key best practices, see Best Practices for Using API Keys on Confluent Cloud.
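The rotation sequence described earlier (create a new key, reconfigure clients, delete the old key) can be sketched as a small helper. This is an illustration under stated assumptions rather than an official procedure: the function name rotate_audit_log_key is hypothetical, it uses only the confluent api-key create and confluent api-key delete commands shown on this page, and it assumes at most one key currently exists, because the create step is expected to fail when the two-key limit has already been reached.

```shell
# rotate_audit_log_key CLUSTER_ID OLD_KEY
# Hypothetical helper: creates a replacement API key for the audit log
# cluster, waits for the operator to switch clients over, then deletes
# the old key. Nothing is deleted if key creation fails or input ends.
rotate_audit_log_key() {
  if [ "$#" -ne 2 ]; then
    echo "usage: rotate_audit_log_key CLUSTER_ID OLD_KEY" >&2
    return 2
  fi
  # Step 1: create the new key. Save the printed secret right away,
  # because it is not retrievable later.
  confluent api-key create --resource "$1" || return 1
  # Step 2: pause until clients have been reconfigured to use the new key.
  printf 'Switch your clients to the new key, then press Enter to delete %s: ' "$2"
  read -r _reply || return 1
  # Step 3: delete the old key. New connections that use it are then refused.
  confluent api-key delete "$2"
}
```

For example, rotate_audit_log_key lkc-yokxv6 <API-key> would rotate the key for the sample cluster used on this page.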

Accessing audit log messages from behind a firewall

Like other Confluent Cloud public clusters, audit log clusters do not have a static IP address. For information about configuring firewall access to public clusters, see Are Confluent Cloud IP addresses and hostnames static?.