View Connector Dead Letter Queue Errors in Confluent Cloud

A record can be invalid for a number of reasons. For Connect, the most common errors are serialization and deserialization (serde) errors. For example, an error occurs when a record arrives at the sink connector in JSON format, but the sink connector configuration expects another format, such as Avro. In Confluent Cloud, the connector does not stop when serde errors occur. Instead, the connector continues processing records and sends each failed record to a Dead Letter Queue (DLQ) topic. You can use the record headers in a DLQ topic record to identify and troubleshoot the error. Typically, these are configuration errors that can be easily corrected.

Note

The DLQ topic is created automatically, based on the resource associated with the connector API key.

You cannot add a sink connector’s DLQ topic to the list of topics consumed by the same sink connector (to prevent an infinite loop).

For details about how DLQ topics are created for sink connectors in Confluent Platform, see Dead Letter Queue.

View the DLQ

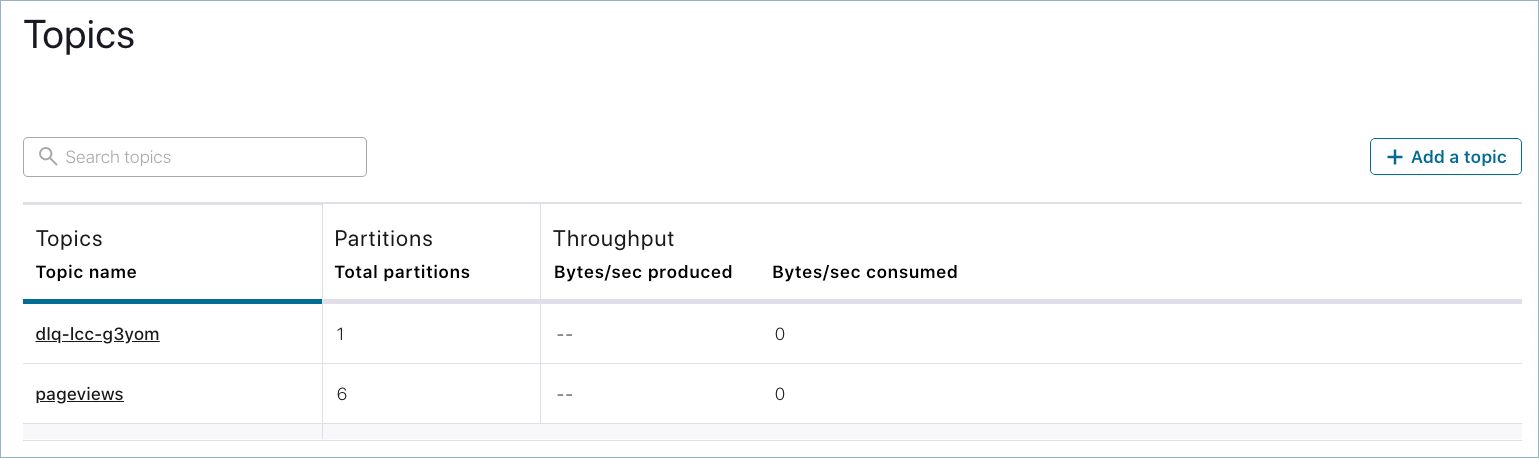

When you launch a sink connector in Confluent Cloud, the DLQ topic is automatically created. The topic is named dlq-<connector-ID> as shown below.

Click the DLQ topic to open it.

DLQ topic

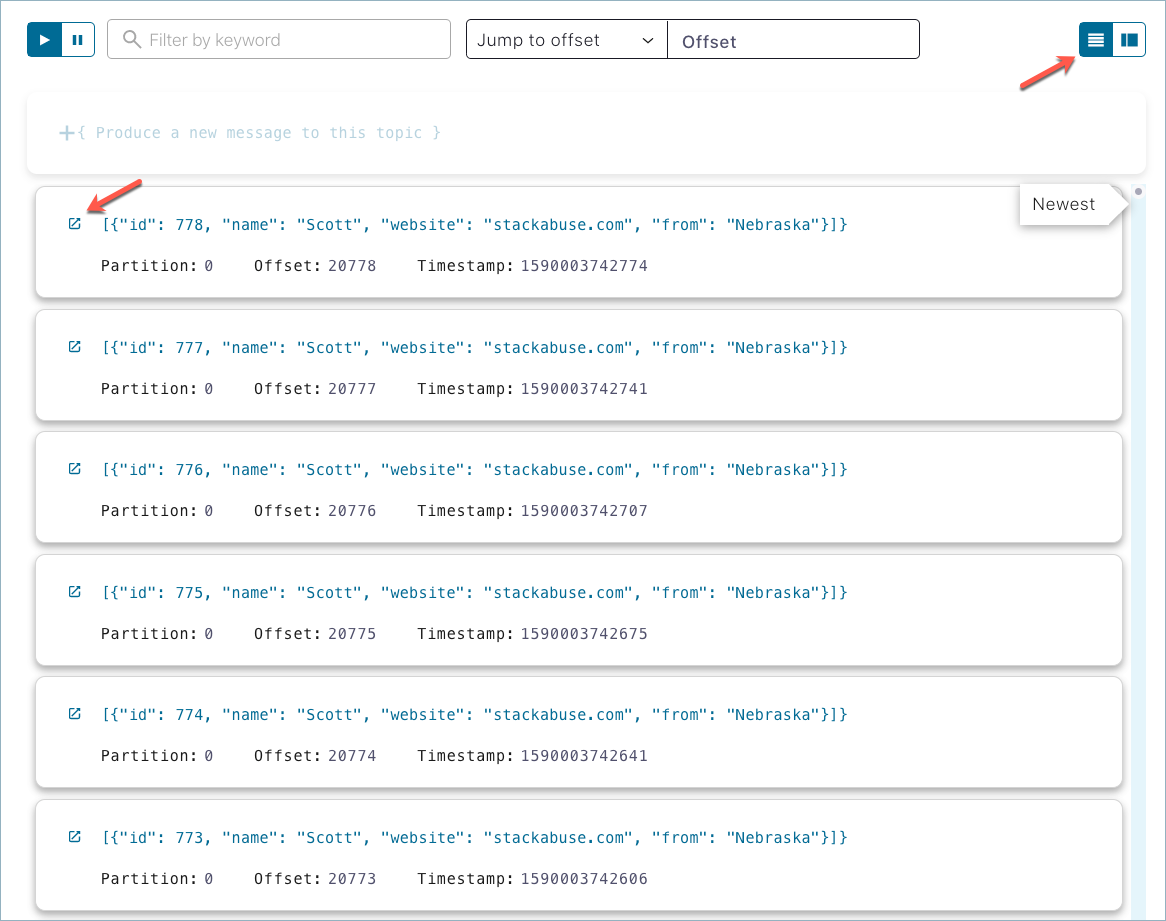

Change the default view to show the record list, and then click the record open icon (next to the record ID in the example below).

DLQ topic records

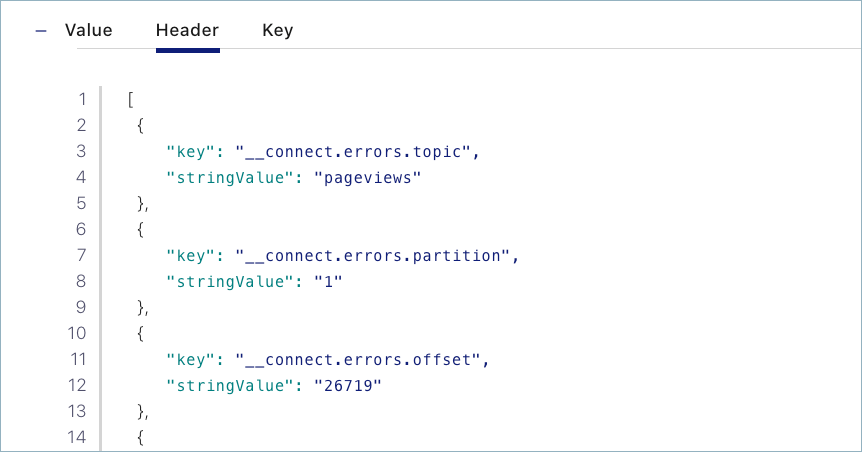

After you’ve opened the record, select Header.

DLQ record header

Each DLQ record header contains the name of the topic with the error, the error exception, and a stack trace (along with other information). Review the DLQ record header to identify any configuration changes you need to make to correct errors.
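If you read DLQ records with a Kafka client instead of the Confluent Cloud UI, the same information arrives as record headers. The following is a minimal sketch, assuming the client returns headers as a list of (key, bytes) pairs and that the error headers use the `__connect.errors.` prefix seen in the example output later in this section. The function name `decode_error_headers` and the sample data are hypothetical and used only for illustration.

```python
from typing import Dict, Iterable, Optional, Tuple

# Prefix used by the error headers in the example output in this section.
ERROR_PREFIX = "__connect.errors."


def decode_error_headers(
    headers: Iterable[Tuple[str, Optional[bytes]]]
) -> Dict[str, str]:
    """Return the error headers as a {short_name: text} dictionary.

    Assumes headers are (key, bytes) pairs; other clients may differ.
    """
    decoded: Dict[str, str] = {}
    for key, value in headers:
        if not key.startswith(ERROR_PREFIX):
            continue  # skip headers that do not carry error context
        text = "" if value is None else value.decode("utf-8", errors="replace")
        decoded[key[len(ERROR_PREFIX):]] = text
    return decoded


# Hypothetical sample, shaped like the headers of one DLQ record.
sample_headers = [
    ("__connect.errors.topic", b"pageviews"),
    ("__connect.errors.stage", b"VALUE_CONVERTER"),
    ("trace-id", b"abc123"),  # unrelated header, ignored
]

errors = decode_error_headers(sample_headers)
print(errors["topic"], errors["stage"])
```

Stripping the prefix keeps the resulting keys short (`topic`, `stage`, `exception.message`), which makes them easier to log or filter on.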

You can view the record header in Confluent Cloud (as shown above) or download it as a JSON file. The following header output shows the information provided. In the example, the error is caused by a failure to deserialize a record from the topic named pageviews. The connector expected Avro-formatted data but received data in a different format, such as JSON. To correct this, check the connector configuration and change the expected input data format to match the data in the topic (in this example, from Avro to JSON).

[
  {
    "key": "__connect.errors.topic",
    "stringValue": "pageviews"
  },
  {
    "key": "__connect.errors.partition",
    "stringValue": "1"
  },
  {
    "key": "__connect.errors.offset",
    "stringValue": "8583"
  },
  {
    "key": "__connect.errors.connector.name",
    "stringValue": "lcc-g3yom"
  },
  {
    "key": "__connect.errors.task.id",
    "stringValue": "0"
  },
  {
    "key": "__connect.errors.stage",
    "stringValue": "VALUE_CONVERTER"
  },
  {
    "key": "__connect.errors.class.name",
    "stringValue": "io.confluent.connect.avro.AvroConverter"
  },
  {
    "key": "__connect.errors.exception.class.name",
    "stringValue": "org.apache.kafka.connect.errors.DataException"
  },
  {
    "key": "__connect.errors.exception.message",
    "stringValue": "Failed to deserialize data for topic pageviews to Avro: "
  },
  {
    "key": "__connect.errors.exception.stacktrace",
    "stringValue": "org.apache.kafka.connect.errors.DataException: Failed to deserialize data for topic pageviews to Avro: \n\tat io.confluent.connect.avro.AvroConverter.toConnectData(AvroConverter.java:110) ...omitted"
  }
]
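When you have several downloaded header files to review, a short script can reduce each one to a single summary line. The following is a minimal sketch, assuming the downloaded file has the same shape as the output above: a JSON array of objects with `key` and `stringValue` fields. The function name `summarize_dlq_header` and the summary format are hypothetical choices made for illustration, and the inline sample stands in for the contents of a downloaded file.

```python
import json

PREFIX = "__connect.errors."


def summarize_dlq_header(header_json: str) -> str:
    """Build a one-line summary from DLQ header JSON text."""
    entries = json.loads(header_json)
    # Collapse the array of {key, stringValue} objects into one dictionary,
    # dropping the common prefix from each key.
    fields = {
        entry["key"][len(PREFIX):]: entry["stringValue"]
        for entry in entries
        if entry["key"].startswith(PREFIX)
    }
    # Keep only the simple class name of the exception for readability.
    exception = fields.get("exception.class.name", "unknown").rsplit(".", 1)[-1]
    return (
        f"{fields.get('topic', '?')}[{fields.get('partition', '?')}]"
        f"@{fields.get('offset', '?')} "
        f"failed at {fields.get('stage', '?')}: {exception}"
    )


# A subset of the header output shown above.
sample = json.dumps([
    {"key": "__connect.errors.topic", "stringValue": "pageviews"},
    {"key": "__connect.errors.partition", "stringValue": "1"},
    {"key": "__connect.errors.offset", "stringValue": "8583"},
    {"key": "__connect.errors.stage", "stringValue": "VALUE_CONVERTER"},
    {"key": "__connect.errors.exception.class.name",
     "stringValue": "org.apache.kafka.connect.errors.DataException"},
])

print(summarize_dlq_header(sample))
# prints: pageviews[1]@8583 failed at VALUE_CONVERTER: DataException
```

The topic, partition, and offset identify the source record that failed, and the stage (here `VALUE_CONVERTER`) points to the part of the connector configuration to check first.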

Next Steps

Listen to the podcast Handling Message Errors and Dead Letter Queues in Apache Kafka featuring Jason Bell.