Dedicated Cluster Performance and Expansion in Confluent Cloud

A number of factors can affect the overall performance of applications running on Dedicated Kafka clusters. You should monitor your clusters for clues to how you can improve performance in your applications, and to determine whether cluster expansion is the right solution.

Key cluster performance metrics:

Cluster load metrics

CKU count metric

Hot partition metrics

Consumer lag

Unsupported client versions

Client throttling

Producer latency

For more about monitoring your applications, see Monitoring Your Event Streams: Tutorial for Observability Into Apache Kafka Clients (blog).

Interpret a high cluster load value

Cluster load is a percentage from 0 to 100, where 0% indicates no load on the cluster and 100% represents a fully saturated cluster. Higher load values commonly result in higher latencies, client throttling, or both for your application. You should expect higher latency and some degree of throttling if the cluster load is greater than 80%.

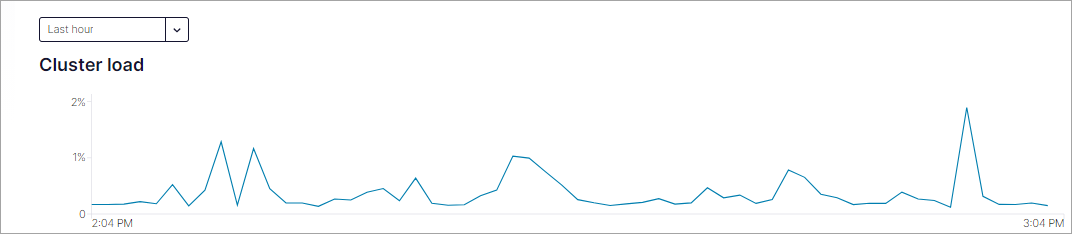

You could expand your cluster in an attempt to lower the load, but before you do so you should look at the time-series graph for cluster load to obtain a historical perspective of the cluster load variation. For details on how to access cluster load, see Cluster load metric.

Cluster load example

When viewing this graph, consider that a cluster load of 70% may be acceptable if it is an occasional spike or a normal high point for the workload. However, 70% may be too high if the cluster needs headroom for load spikes caused by variations in application workload patterns, or if new workloads will be added to the cluster. In that case, expanding the cluster is probably the right solution.

Generally, expanding your dedicated cluster provides more capacity for your workloads, and in many cases, will help improve the performance of your Kafka applications. In addition, a lower cluster load can help improve latency for your applications.

If you expand a dedicated cluster, and the expansion does not resolve the performance issues, you can shrink the cluster back to its original size.

To interpret a high cluster load value, consider the following guidelines:

Values of 70-80%: unless these are occasional peaks, consider adding CKUs

Values of 80% or more: expect throttling and degraded performance if the workload pattern changes and introduces additional load on the overall system
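The guidelines above can be sketched as a small Python function over a series of cluster load samples. This is a minimal sketch, not a Confluent API: the function name and the `peak_fraction` cutoff that separates "occasional peaks" from sustained load are assumptions for illustration; only the 70% and 80% thresholds come from the guidelines.

```python
from typing import Sequence

# Thresholds from the guidelines above (cluster load, in percent).
CONSIDER_EXPANSION = 70.0
EXPECT_THROTTLING = 80.0


def classify_cluster_load(samples: Sequence[float], peak_fraction: float = 0.05) -> str:
    """Classify a cluster load time series (0-100%) using the 70%/80% guidelines.

    peak_fraction is an assumed cutoff: if no more than this share of samples
    reaches a threshold, those samples are treated as occasional peaks.
    """
    if not samples:
        raise ValueError("need at least one cluster load sample")
    n = len(samples)
    share_high = sum(s >= EXPECT_THROTTLING for s in samples) / n
    share_elevated = sum(s >= CONSIDER_EXPANSION for s in samples) / n
    if share_high > peak_fraction:
        return "expect_throttling"      # load is often 80% or more
    if share_elevated > peak_fraction:
        return "consider_adding_ckus"   # load is often 70% or more
    if share_elevated > 0:
        return "occasional_peaks"       # brief spikes only; keep monitoring
    return "healthy"


print(classify_cluster_load([55.0] * 98 + [85.0, 85.0]))  # occasional_peaks
print(classify_cluster_load([75.0] * 50 + [50.0] * 50))   # consider_adding_ckus
```

Looking at the share of samples above each threshold, rather than at a single reading, mirrors the advice to review the time-series graph before deciding to expand.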

Determine CKU count

CKUs (Confluent Units for Kafka) determine the capacity of your cluster. Use the CKU count metric to see how many CKUs your Dedicated cluster currently has. While some performance dimensions for Dedicated clusters have fixed limits, others have recommended guidelines that let you make greater use of one dimension at the expense of another. For more information, see Fixed limits and recommended guidelines, Dimensions with fixed limits, and Dimensions with recommended guidelines.

Identify hot partitions

Use the hot partition metric to identify partitions that self-balancing clusters (SBC) may not be able to balance. A hot partition is a partition where maximum cluster load is much higher than average cluster load. To avoid throttling or availability issues, you must manually address hot partitions.
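Confluent Cloud computes the hot partition metrics for you. If you also collect per-partition throughput yourself, a rough version of the same idea (how far the busiest partition sits above the average) can be sketched as follows. The function names and the default `ratio` of 3.0 are hypothetical and are not the thresholds Confluent Cloud uses.

```python
from typing import List, Mapping


def partition_skew(load_by_partition: Mapping[int, float]) -> float:
    """Ratio of the busiest partition's load to the mean load (1.0 = perfectly even)."""
    if not load_by_partition:
        raise ValueError("need at least one partition")
    loads = list(load_by_partition.values())
    mean = sum(loads) / len(loads)
    return max(loads) / mean if mean > 0 else 1.0


def suspect_hot_partitions(load_by_partition: Mapping[int, float], ratio: float = 3.0) -> List[int]:
    """Partitions whose load is more than `ratio` times the mean load."""
    if not load_by_partition:
        return []
    mean = sum(load_by_partition.values()) / len(load_by_partition)
    return sorted(p for p, load in load_by_partition.items() if mean > 0 and load > ratio * mean)


# Example: ingress in MB/s for four partitions (made-up numbers).
ingress = {0: 10.0, 1: 10.0, 2: 10.0, 3: 90.0}
print(partition_skew(ingress))                     # 3.0
print(suspect_hot_partitions(ingress, ratio=2.0))  # [3]
```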

Considerations:

If hot_partition_ingress or hot_partition_egress has a value of 1, then the cluster load for that partition is too high for SBC to balance the partition. To recover a hot partition, consider adding partitions and redistributing client traffic. The goal is to have enough partitions, with traffic distributed evenly across them.

Ensure unsupported clients are not accessing the hot partition. Unsupported clients can cause high cluster load and issues with balancing clusters. For more information, see What client and protocol versions are supported? and Confluent Platform and Apache Kafka compatibility.

To use the hot partition metrics with an application performance monitoring (APM) application, configure your APM to fill empty time intervals with a default value of zero, or with an interpolated value if your APM uses interpolation.
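The zero-fill rule above matters because averaging only the intervals that have data overstates how often a partition is hot. A minimal sketch, assuming you export the metric as a mapping from interval start time (in seconds) to value and that a missing interval means the metric was not reported for that interval; the function names are hypothetical.

```python
from typing import Dict, List


def zero_fill(series: Dict[int, float], start: int, end: int, step: int) -> List[float]:
    """One value per interval in [start, end); missing intervals become 0.0."""
    return [series.get(t, 0.0) for t in range(start, end, step)]


def hot_intervals(series: Dict[int, float], start: int, end: int, step: int) -> List[int]:
    """Start times of intervals where the hot-partition metric equals 1."""
    filled = zero_fill(series, start, end, step)
    return [start + i * step for i, value in enumerate(filled) if value >= 1]


# hot_partition_ingress for one partition, reported only at t=60 and t=180.
samples = {60: 1.0, 180: 1.0}
filled = zero_fill(samples, 0, 240, 60)
print(filled)                              # [0.0, 1.0, 0.0, 1.0]
print(sum(filled) / len(filled))           # 0.5 (averaging only non-empty intervals would give 1.0)
print(hot_intervals(samples, 0, 240, 60))  # [60, 180]
```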

Review high consumer lag

The consumer lag metric indicates, for any partition, how many records the consumer is behind the end of the log. If data is produced faster than it is consumed, consumer lag grows. An increase in consumer lag can indicate a client-side issue, a Kafka server-side issue, or both.
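Per-partition lag is the difference between the log end offset and the consumer group's committed offset. The sketch below computes it from two plain dictionaries; in a real application you might obtain these values from your client library (for example, `get_watermark_offsets()` and `committed()` on a confluent-kafka Python `Consumer`), which is not shown here. Treating a partition with no committed offset as committed at offset 0 is a simplifying assumption.

```python
from typing import Dict


def consumer_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> Dict[int, int]:
    """Records each partition is behind: log end offset minus committed offset.

    Partitions without a committed offset are treated as committed at 0
    (a simplification; records removed by retention would make this overstate lag).
    """
    return {p: max(end - committed.get(p, 0), 0) for p, end in end_offsets.items()}


end_offsets = {0: 1_000, 1: 750, 2: 400}
committed = {0: 990, 1: 750}
lag = consumer_lag(end_offsets, committed)
print(lag)                # {0: 10, 1: 0, 2: 400}
print(sum(lag.values()))  # 410
```

Tracking the total over time, rather than a single reading, shows whether lag is increasing.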

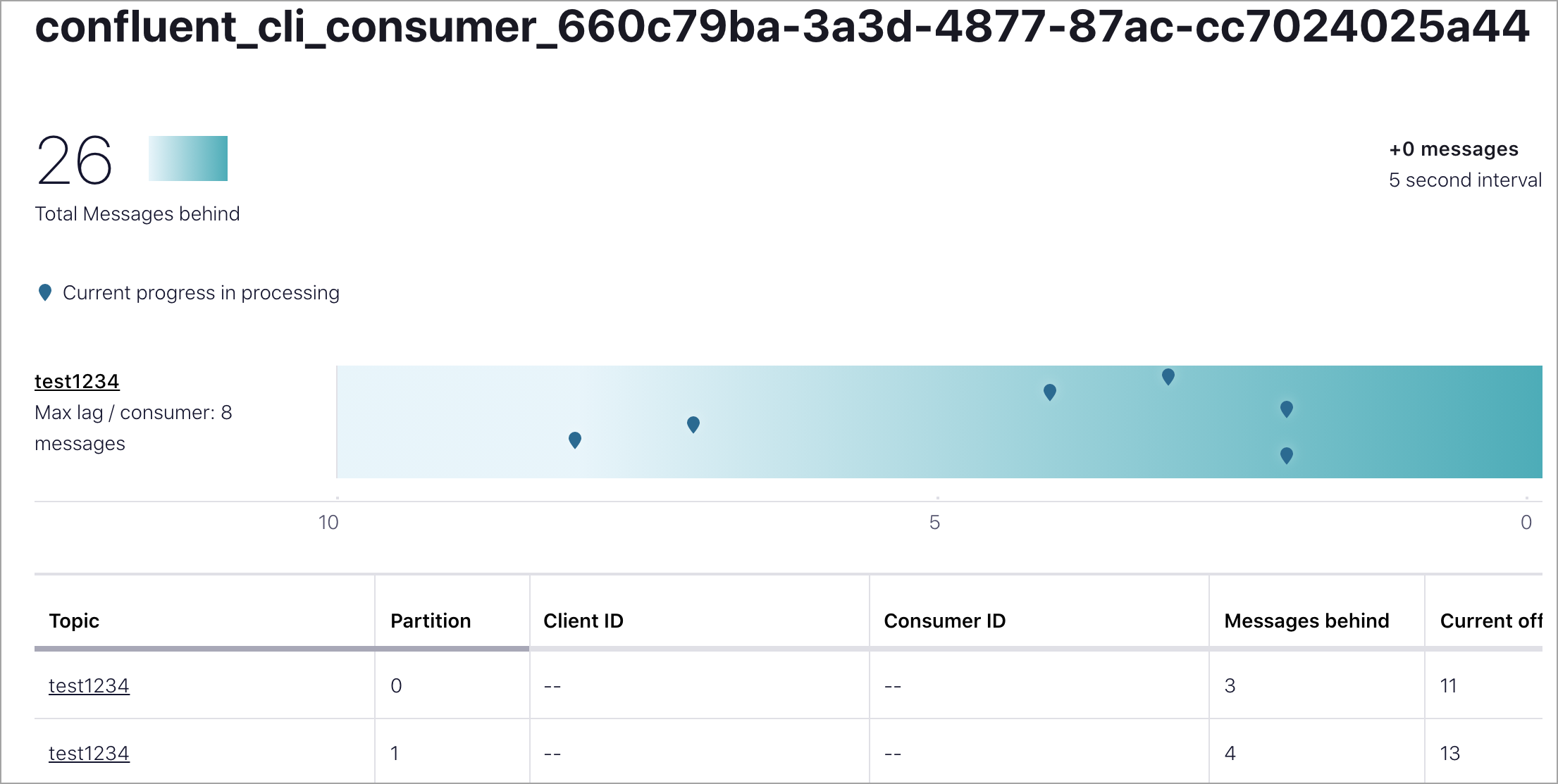

Consumer lag example

To identify whether consumer lag is increasing because of server-side issues, first use the cluster load metric to confirm that your cluster isn’t overloaded. If the cluster load metric indicates your cluster is heavily loaded (> 70%), you can reasonably assume that expanding your cluster will help with consumer lag.

If the cluster is not heavily loaded, next look at the number of partitions on the cluster. Partitions parallelize the workload across your cluster. As a rule of thumb, have at least 6-10 partitions per CKU to get the benefits of parallelization, although your specific workload may warrant more or fewer partitions. For more information on improving parallelization, see Optimize and Tune Confluent Cloud Clients.

If your cluster is not heavily loaded and you have the recommended number of partitions or more on your cluster, then consumer lag may be increasing because there is not sufficient parallelism in your consumer application. Adding consumers may help resolve this issue.
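The checks in this section can be written as an ordered decision function. This is a hypothetical helper, not part of any Confluent tool: the 70% load threshold and the lower bound of 6 partitions per CKU come from the text above, while the consumer check relies on the standard Kafka rule that, within one consumer group, each partition is read by at most one consumer.

```python
def diagnose_consumer_lag(
    cluster_load_pct: float,
    cluster_partitions: int,
    ckus: int,
    topic_partitions: int,
    consumers: int,
    min_partitions_per_cku: int = 6,
) -> str:
    """Return a suggested next step for rising consumer lag."""
    # 1. Server side: a heavily loaded cluster benefits from expansion.
    if cluster_load_pct > 70:
        return "expand_cluster"
    # 2. Too few partitions limits parallelism across the cluster.
    if cluster_partitions < min_partitions_per_cku * ckus:
        return "add_partitions"
    # 3. Consumers beyond the topic's partition count sit idle in a group,
    #    so adding consumers only helps while consumers < partitions.
    if consumers < topic_partitions:
        return "add_consumers"
    return "consumer_parallelism_maxed"


print(diagnose_consumer_lag(85, 120, 2, 12, 4))  # expand_cluster
print(diagnose_consumer_lag(40, 8, 2, 8, 4))     # add_partitions
print(diagnose_consumer_lag(40, 120, 2, 12, 4))  # add_consumers
```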

Monitor for unsupported client versions

Use only supported clients with Confluent Cloud. Update client versions regularly to avoid undesired behavior in your clusters. Some issues that can arise from running unsupported client versions:

Compatibility issues: Confluent Cloud is designed to work with specific versions of client libraries (for example, Java, .NET, and Python). An unsupported client may not have access to necessary libraries, which can lead to unexpected behavior, errors, or failures.

Security vulnerabilities: Confluent Cloud is regularly updated with security patches and bug fixes. Unsupported clients may be missing critical security updates, leaving your applications and data vulnerable to potential security threats or exploits.

Lack of support: Confluent provides support and maintenance only for supported client versions. If you encounter issues or bugs while using an unsupported client version, Confluent may not be able to provide assistance or troubleshooting help, leaving you to resolve the issues on your own.

Missing features and improvements: Newer versions of client libraries often introduce new features, performance improvements, and bug fixes. By using an unsupported client version, you may miss out on these enhancements, which could impact the functionality, performance, and reliability of your applications.

Potential service disruptions: Confluent Cloud may introduce changes or updates that are designed to work only with supported client versions. Using an unsupported client version could lead to service disruptions or unexpected behavior when such changes are made.

To ensure a stable, secure, and well-supported experience with Confluent Cloud, use only supported client versions for your specific programming language or framework. Confluent provides documentation and guidance on the supported client versions and their compatibility with Confluent Cloud.

For more information, see Supported Versions and Interoperability for Confluent Platform.
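If you keep an inventory of the client libraries and versions your applications use, a periodic check against minimum supported versions is straightforward. A minimal sketch; the `MIN_SUPPORTED` values below are placeholders, not Confluent's actual support policy, so take the real minimums from the supported-versions documentation referenced above.

```python
from typing import Dict, Tuple

# Placeholder minimum versions for illustration only.
MIN_SUPPORTED: Dict[str, Tuple[int, int, int]] = {
    "java": (3, 0, 0),
    "librdkafka": (2, 0, 0),
}


def parse_version(text: str) -> Tuple[int, int, int]:
    """Parse '2.3', '2.3.0', or '2.3.0-rc1' into a (major, minor, patch) tuple."""
    core = text.split("-", 1)[0]         # drop a suffix such as '-rc1'
    parts = [int(p) for p in core.split(".")[:3]]
    parts += [0] * (3 - len(parts))      # pad '2.3' to (2, 3, 0)
    return (parts[0], parts[1], parts[2])


def is_supported(client: str, version: str) -> bool:
    minimum = MIN_SUPPORTED.get(client.lower())
    if minimum is None:
        return False  # unknown client: treat as unsupported until verified
    return parse_version(version) >= minimum


inventory = [("java", "3.6.1"), ("librdkafka", "1.9.2"), ("java", "3.0")]
for client, version in inventory:
    print(client, version, "ok" if is_supported(client, version) else "UPGRADE")
```

Padding to three components avoids a subtle tuple-comparison bug: in Python, `(3, 0) < (3, 0, 0)` is true, so an unpadded "3.0" would wrongly fail the check.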

Review client application throttles

Throttles are a normal part of working with cloud services. Confluent Cloud clusters throttle client applications that exceed the rate the cluster can handle based on its allocated capacity. Throttling prevents additional usage that could cause a cluster outage, which might be catastrophic. Throttles are coordinated between the Kafka server and Kafka consumers and producers, so that clients wait long enough for the server to handle the request without compromising uptime.

For more information about client-side producer and consumer metrics, which show whether your producers or consumers are being throttled, see Client Monitoring and the discussion of produce-throttle-time-avg and produce-throttle-time-max in the Producer Metrics section of the Confluent Platform documentation.
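Once you collect client metrics (for example over JMX, which is not shown here), flagging throttling is a matter of checking the throttle-time metrics. In this sketch the producer names are the ones mentioned above; `fetch-throttle-time-avg` and `fetch-throttle-time-max` are their consumer counterparts in the Java client. The input dictionary and the function name are assumptions for illustration.

```python
import math
from typing import Dict, List

THROTTLE_METRICS = (
    "produce-throttle-time-avg",
    "produce-throttle-time-max",
    "fetch-throttle-time-avg",
    "fetch-throttle-time-max",
)


def throttled_metrics(metrics: Dict[str, float], threshold_ms: float = 0.0) -> List[str]:
    """Names of throttle-time metrics (in ms) above threshold_ms.

    Missing metrics and NaN values (which some reporters emit when there are
    no samples) count as not throttled.
    """
    flagged = []
    for name in THROTTLE_METRICS:
        value = metrics.get(name, 0.0)
        if not math.isnan(value) and value > threshold_ms:
            flagged.append(name)
    return flagged


snapshot = {
    "produce-throttle-time-avg": 12.5,
    "produce-throttle-time-max": 40.0,
    "fetch-throttle-time-avg": float("nan"),
}
print(throttled_metrics(snapshot))  # ['produce-throttle-time-avg', 'produce-throttle-time-max']
```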

Determine if throttling is caused by server or client issues

To determine whether client throttling is a client-side or a server-side issue, again refer to Monitor cluster load. If the cluster load metric indicates that your cluster is under high or increasing load, you can reasonably assume that cluster expansion will mitigate throttles.

Other server-side characteristics that are important to evaluate as specific causes of throttling are ingress, egress, and request rate.

Remember that the throughput and request rate for a dedicated cluster are limited by the number of CKUs allocated to the cluster. If your applications are consuming more throughput and making more requests than your cluster can currently handle, expanding your cluster will likely resolve the issue.
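Because capacity grows with the number of CKUs, you can compare observed usage with the capacity implied by the current CKU count. A minimal sketch, assuming capacity scales linearly with CKUs; the `PER_CKU_CAPACITY` numbers are placeholders, not Confluent's published figures, so substitute the values from the Dimensions with recommended guidelines table. The 70% target echoes the cluster load guidance above.

```python
import math
from typing import Dict

# Placeholder per-CKU values for illustration only (assumed linear scaling).
PER_CKU_CAPACITY: Dict[str, float] = {
    "ingress_mbps": 10.0,
    "egress_mbps": 30.0,
    "requests_per_sec": 1000.0,
}


def utilization(observed: Dict[str, float], ckus: int) -> Dict[str, float]:
    """Observed usage as a fraction of the capacity implied by the CKU count."""
    if ckus < 1:
        raise ValueError("a Dedicated cluster has at least one CKU")
    return {dim: observed.get(dim, 0.0) / (per_cku * ckus) for dim, per_cku in PER_CKU_CAPACITY.items()}


def ckus_needed(observed: Dict[str, float], target: float = 0.7) -> int:
    """Smallest CKU count that keeps every dimension at or below `target` utilization."""
    needed = 1
    for dim, per_cku in PER_CKU_CAPACITY.items():
        needed = max(needed, math.ceil(observed.get(dim, 0.0) / (per_cku * target)))
    return needed


observed = {"ingress_mbps": 15.0, "egress_mbps": 30.0, "requests_per_sec": 500.0}
print(utilization(observed, ckus=2))  # {'ingress_mbps': 0.75, 'egress_mbps': 0.5, 'requests_per_sec': 0.25}
print(ckus_needed(observed))          # 3
```

The busiest dimension drives the result: here ingress is already at 75% of the placeholder capacity, so it alone determines how many CKUs the sketch suggests.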

However, if your cluster is being throttled and you cannot identify a server-side reason, client-side implementations could be causing the throttling. For example, your workload may be unbalanced, meaning that one or a few partitions sustain the vast majority of traffic, leaving the rest of the cluster underutilized. Confluent Cloud constantly monitors the balance of your cluster to automatically optimize the distribution of your workload. In some cases, Confluent Cloud might not be able to find an optimal balance because of client-side access patterns, such as unbalanced partition assignment strategies.
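One common client-side cause is keyed production in which a single key dominates: every record with the same key is hashed to the same partition, so that partition carries most of the traffic regardless of how well the rest of the cluster is balanced. The sketch below shows the effect; it substitutes `zlib.crc32` for the Java client's default murmur2 key hashing, so the specific partition numbers will differ from what a real producer chooses, and the key names are made up.

```python
import zlib
from collections import Counter
from typing import Iterable


def partition_for_key(key: bytes, num_partitions: int) -> int:
    # Stand-in hash (CRC32, not murmur2): any deterministic hash shows the
    # same effect, because one key always maps to one partition.
    return zlib.crc32(key) % num_partitions


def records_per_partition(keys: Iterable[bytes], num_partitions: int) -> Counter:
    return Counter(partition_for_key(k, num_partitions) for k in keys)


# 90% of records share one key, so they all land on the same partition.
keys = [b"tenant-a"] * 900 + [f"tenant-{i}".encode() for i in range(100)]
counts = records_per_partition(keys, num_partitions=12)
hot, hot_count = counts.most_common(1)[0]
print(f"partition {hot} receives {hot_count} of {len(keys)} records")
```

Adding partitions does not help a single dominant key, because that key still maps to exactly one partition; load spreads out only when traffic is spread across many keys.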

For the best possible experience with Kafka, architect your client-side applications to maximize throughput potential, for example by avoiding access patterns that concentrate traffic on a few partitions.

Monitor latency in producer applications

Certain producer metrics can also indicate latency. Specifically, a buffer-available-bytes value of 0, increases in bufferpool-wait-time, or both can be a sign that producers are experiencing latency.
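Given two successive snapshots of these producer metrics, the check described above can be sketched as follows. The dataclass and function names are hypothetical, and the sketch assumes `bufferpool-wait-time` is sampled as a cumulative counter, so any increase between snapshots means producers spent time waiting for buffer space.

```python
from dataclasses import dataclass


@dataclass
class ProducerSample:
    """One snapshot of producer buffer metrics (field names are illustrative)."""
    buffer_available_bytes: float
    bufferpool_wait_time: float  # assumed cumulative, so it never decreases


def buffer_pressure(previous: ProducerSample, current: ProducerSample) -> bool:
    """True if the buffer is exhausted or producers waited longer for buffer space."""
    exhausted = current.buffer_available_bytes == 0
    waiting_more = current.bufferpool_wait_time > previous.bufferpool_wait_time
    return exhausted or waiting_more


before = ProducerSample(buffer_available_bytes=1024.0, bufferpool_wait_time=5.0)
after = ProducerSample(buffer_available_bytes=0.0, bufferpool_wait_time=5.0)
print(buffer_pressure(before, after))  # True
```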

Monitor active_connection_count. Benchmarking shows that exceeding the recommended number of total client connections per CKU often leads to an exponential increase in produce latency. For more information, see the total client connections dimension in the Dimensions with recommended guidelines table.

If the cluster load metric is high and producer buffer usage is high, expanding the cluster by adding CKUs will likely improve producer latency. If latency does not improve after cluster expansion, see the previous section, Review client application throttles.