Google BigQuery Sink V2 Connector for Confluent Cloud

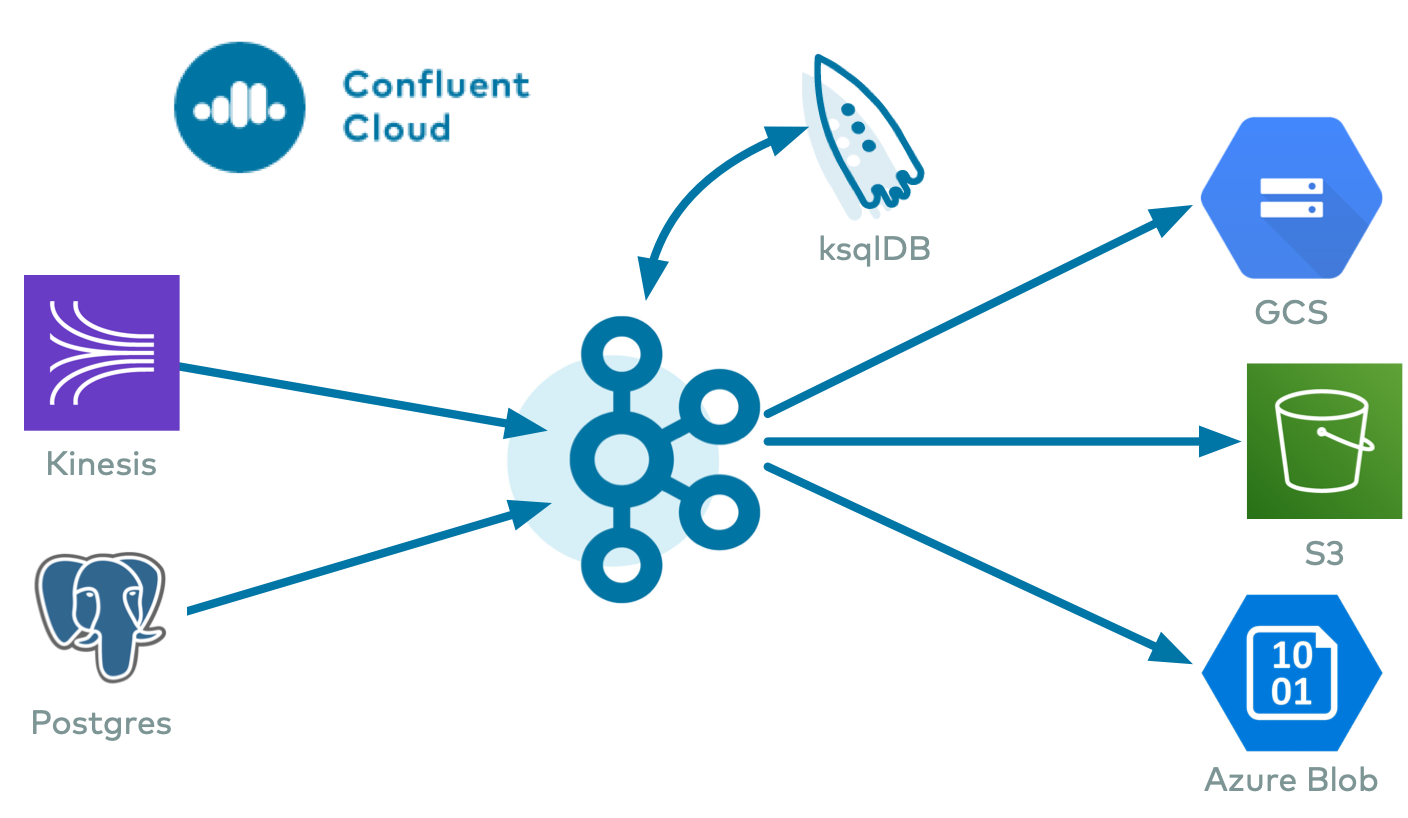

You can use the Kafka Connect Google BigQuery Sink V2 connector for Confluent Cloud to export Avro, JSON Schema, Protobuf, or JSON (schemaless) data from Apache Kafka® topics to BigQuery. The BigQuery table schema is based upon information in the Apache Kafka® schema for the topic.

Confluent Cloud is available through Google Cloud Marketplace or directly from Confluent.

With version 2 of the Google BigQuery Sink connector, you can perform upsert and delete actions on ingested data. Additionally, this version allows you to use the Storage Write API to ingest data directly into BigQuery tables. For more details, see the following section.

Note

The Google BigQuery Sink V2 connector for Confluent Cloud connects to resources in the same region and cloud provider as your Confluent Cloud cluster. If your Google BigQuery instance is in a different region or cloud provider than your Confluent Cloud cluster, contact Confluent Support to enable cross-region or cross-cloud connectivity before you configure the connector.

Features

The connector supports the following functionalities for data ingestion:

Upsert functionality: With the upsert functionality, you can insert new data or update existing matching key data. For more details, see Stream table updates with change data capture.

Upsert and delete functionality: With the upsert and delete functionality, you can insert new data, update existing matching key data, or delete matching key data for tombstone records. For more details, see Stream table updates with change data capture.

Google Cloud BigQuery Storage Write API: The BigQuery Storage Write API combines streaming ingestion and batch loading into a single high-performance API. For more information, see Batch load and stream data with BigQuery Storage Write API. Using the Storage Write API may reduce costs for your BigQuery project. Note that BigQuery API quotas apply.

Review the following table for details about the differences between BATCH LOADING and STREAMING mode with the BigQuery API. For more information, see Introduction to loading data.

| BATCH LOADING | STREAMING |
|---|---|
| Records are available after the commit interval has expired and may not be provided in real time | Records are available in near real time, immediately after an append call (minimal latency) |
| Requires creating specific application streams to handle the records | Uses the default stream |
| May cost less | May cost more |
| Multiple API quota limits with more restrictions (for example, maximum number of streams and buffering) | Fewer quota limits and restrictions |

Provider integration support: The connector supports Google Cloud’s native identity authorization using Confluent Provider Integration. For more information about provider integration setup, see the connector authentication.

Client-side encryption (CSFLE and CSPE) support: The connector supports CSFLE and CSPE for sensitive data. For more information about CSFLE or CSPE setup, see the connector configuration.

The connector supports OAuth 2.0 for connecting to BigQuery. Note that OAuth is only available when creating a connector using the Cloud Console. OAuth 2.0 support is available in two variations:

Shared app: Use the application provided by Confluent, shared with all users, for an easy and quick start without the hassle of application management.

Bring your own app: Register and manage your own connected application on the OAuth platform, maintaining control over your authentication setup.

The connector supports streaming from a list of topics into corresponding tables in BigQuery.

Although the connector streams records by default (as opposed to running in batch mode), it is scalable because it uses an internal thread pool to stream records in parallel. The internal thread pool defaults to 10 threads. Note that this applies only to BATCH LOADING and STREAMING modes, not to UPSERT and UPSERT_DELETE modes.

The connector supports several time-based table partitioning strategies.

The connector supports routing invalid records to the DLQ. This includes any records that have gRPC status code INVALID_ARGUMENT from the BigQuery Storage Write API.

Note

DLQ routing does not work if Auto update schemas (auto.update.schemas) is enabled and the connector detects that the failure is due to a schema mismatch.

The connector supports default_missing_value_interpretation. For more information about how default values are interpreted for missing values, see Google BigQuery default value settings. Contact Confluent Support to enable default value settings in your connector.

The connector supports Avro, JSON Schema, Protobuf, or JSON (schemaless) input data formats. Schema Registry must be enabled to use a Schema Registry-based format (for example, Avro, JSON_SR (JSON Schema), or Protobuf). See Schema Registry Enabled Environments for additional information.

For Avro, JSON_SR, and Protobuf, the connector provides the following configuration properties that support automated table creation and schema updates. You can select these properties in the UI or add them to the connector configuration, if using the Confluent CLI.

auto.create.tables: Automatically create BigQuery tables if they don’t already exist. The connector expects the BigQuery table name to be the same as the topic name. If you create the BigQuery tables manually, make sure the table name matches the topic name. If ingestion.mode is set to UPSERT or UPSERT_DELETE, this property adds or updates Kafka record keys as primary keys. Note that you must adhere to BigQuery’s primary key constraints.

auto.update.schemas: Automatically update BigQuery schemas. Note that new fields are added as NULLABLE in the BigQuery schema. If ingestion.mode is set to UPSERT or UPSERT_DELETE, this property adds or updates Kafka record keys as primary keys. Note that you must adhere to BigQuery’s primary key constraints.

sanitize.topics: Automatically sanitize topic names before using them as BigQuery table names. If not enabled, topic names are used as table names. If enabled, the table names created may be different from the topic names.

sanitize.field.names: Automatically sanitize field names before using them as column names in BigQuery.

sanitize.field.names.in.array: Automatically sanitize field names inside array-type objects before using them as column names in BigQuery.
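To illustrate why table names can differ from topic names when sanitize.topics is enabled, here is a minimal Python sketch. The sanitization rule is an assumption and is not documented here: characters outside letters, digits, and underscores become underscores, and an underscore is prefixed if the result does not start with a letter or underscore. The connector's real rules may differ. The functions sanitize_name and table_name_for are hypothetical.

```python
import re

_INVALID_CHARS = re.compile(r"[^A-Za-z0-9_]")


def sanitize_name(name: str) -> str:
    """Hypothetical sanitizer; the connector's exact rules are not documented here."""
    cleaned = _INVALID_CHARS.sub("_", name)  # assumed: invalid characters become "_"
    if not re.match(r"[A-Za-z_]", cleaned):  # assumed: names must not start with a digit
        cleaned = "_" + cleaned
    return cleaned


def table_name_for(topic: str, sanitize_topics: bool) -> str:
    """With sanitize.topics disabled, the topic name is used unchanged as the table name."""
    return sanitize_name(topic) if sanitize_topics else topic


if __name__ == "__main__":
    print(table_name_for("web.page-views", sanitize_topics=False))  # web.page-views
    print(table_name_for("web.page-views", sanitize_topics=True))   # web_page_views
    print(sanitize_name("2024-orders"))                            # _2024_orders
```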

Note

New tables and schema updates may take a few minutes to be detected by the Google Client Library. For more information, see the Google Cloud BigQuery API guide.

Clustering Support: Clustering organizes data within a table based on one or more specified columns. The order of the clustered columns determines the sort order of the data, which can improve query performance. Clustering is beneficial for queries that include filter clauses or aggregate large datasets, because it reduces the amount of data scanned.

The topic2ClusteringFieldsMap configuration allows you to define clustering columns for each topic. Clustering applies only when auto.create.tables is enabled, and only to tables created by the connector. For more information, see the following resources:

For limits on clustering, see BigQuery Clustering Limitations.

For supported data types, see BigQuery Clustering Data Types
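The topic-to-clustering-fields format (topic1:[col1|col2], topic2:[col1|col2|..], ...) can be checked before you submit a configuration. The following is a minimal Python sketch of a parser for that format. The function parse_clustering_map is hypothetical and is not part of the connector. The four-column cap reflects BigQuery's documented clustering limit.

```python
import re

MAX_CLUSTERING_COLUMNS = 4  # BigQuery allows at most four clustering columns per table

# One "topic:[field|field|...]" entry, with optional surrounding whitespace.
_ENTRY = re.compile(r"^\s*([^:\s]+)\s*:\s*\[([^\]]*)\]\s*$")


def parse_clustering_map(value: str) -> dict[str, list[str]]:
    """Parse 'topic1:[col1|col2], topic2:[col3]' into {topic: [columns in order]}."""
    result: dict[str, list[str]] = {}
    if not value.strip():
        return result
    for entry in value.split(","):  # fields are '|'-separated, so ',' only splits topics
        match = _ENTRY.match(entry)
        if match is None:
            raise ValueError(f"expected topic:[field|...], got {entry!r}")
        topic, fields = match.groups()
        columns = [f.strip() for f in fields.split("|") if f.strip()]
        if not 1 <= len(columns) <= MAX_CLUSTERING_COLUMNS:
            raise ValueError(f"{topic!r} needs 1 to {MAX_CLUSTERING_COLUMNS} clustering fields")
        result[topic] = columns  # list order is the clustering precedence
    return result


if __name__ == "__main__":
    print(parse_clustering_map("orders:[region|customer_id], clicks:[page]"))
```

The list order is preserved because, as described above, the order of the fields determines clustering precedence.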

_CHANGE_SEQUENCE_NUMBER in CDC mode: The connector supports the _CHANGE_SEQUENCE_NUMBER pseudo-column to maintain correct record ordering in CDC upsert mode. For identical primary keys, the record with the higher sequence number takes precedence. If both the primary key and the sequence number are identical, the record ingested later is retained. Note that _CHANGE_SEQUENCE_NUMBER is a pseudo-column and is not included in the BigQuery table schema. When enabled, this is an optional column whose value must be supplied in the record data.

Note

Disabling the _CHANGE_SEQUENCE_NUMBER feature does not remove existing values from records. To remove these values, contact GCP Support.
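The precedence rules above can be modeled in a few lines of Python. This sketch uses a hypothetical Row type and a hypothetical apply_upserts function, and it does not model deletes. It also assumes that BigQuery compares sequence numbers as hexadecimal integers, section by section from left to right. That assumption follows from the hexadecimal requirement, but BigQuery's CDC documentation is the authoritative source for comparison rules, including how values with different numbers of sections compare.

```python
from dataclasses import dataclass


@dataclass
class Row:
    key: str    # primary key
    value: dict
    seq: str    # _CHANGE_SEQUENCE_NUMBER as a hex string, for example "1A" or "1/FF"


def seq_key(seq: str) -> tuple[int, ...]:
    """Assumed ordering: compare '/'-separated sections as hex integers, left to right."""
    return tuple(int(part, 16) for part in seq.split("/"))


def apply_upserts(rows_in_ingestion_order: list[Row]) -> dict[str, Row]:
    """Keep one row per primary key, following the precedence rules described above."""
    table: dict[str, Row] = {}
    for row in rows_in_ingestion_order:
        current = table.get(row.key)
        # A higher sequence number wins; on a tie, the later-ingested row wins,
        # which is why ">=" (not ">") is used here.
        if current is None or seq_key(row.seq) >= seq_key(current.seq):
            table[row.key] = row
    return table


if __name__ == "__main__":
    result = apply_upserts([Row("k1", {"v": 1}, "0A"), Row("k1", {"v": 2}, "05")])
    print(result["k1"].value)  # {'v': 1}: 0x0A outranks 0x05 even though "05" arrived later
```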

Hexadecimal converter: BigQuery requires _CHANGE_SEQUENCE_NUMBER values to be in hexadecimal format. Either make sure your source data provides values in this format, or use this converter to transform decimal string values to hexadecimal. Note the following:

The converter expects decimal numeric values as strings.

The converter applies only when _CHANGE_SEQUENCE_NUMBER is enabled and the connector is in UPSERT or UPSERT_DELETE mode.

The converter supports multi-part sequence numbers separated by forward slashes, where each part is converted independently. For example, "100/200/300" becomes "64/C8/12C".

The converter allows a maximum of four parts with up to 16 hexadecimal characters per part, per BigQuery CDC specifications.

If the converter is enabled and the connector receives values that are already in hexadecimal format (for example, "FF"), the connector treats them as non-numeric and sends them to the Dead Letter Queue (DLQ), if configured. Do not enable the converter if your source data already produces hexadecimal values.

Caution

Once enabled, do not disable the hexadecimal converter for tables that already contain converted values. Disabling the converter after processing records causes incorrect ordering comparisons. For example:

With the converter enabled, the decimal value "18" is converted to the hexadecimal value "12".

If the converter is later disabled, a new decimal value "13" is compared as-is.

BigQuery comparison: "13" is greater than "12", even though the logical sequence number 18 is greater than 13.

This inconsistency causes records with lower sequence numbers to incorrectly take precedence over records with higher sequence numbers.
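The converter rules above can be expressed as a short Python sketch that also reproduces the Caution scenario. The function decimal_seq_to_hex and the SequenceConversionError type are hypothetical. The connector's handling of edge cases such as leading zeros or whitespace is not documented here. The Caution check assumes that BigQuery reads each value as a hexadecimal number.

```python
MAX_PARTS = 4         # documented maximum number of '/'-separated parts
MAX_HEX_DIGITS = 16   # documented maximum hexadecimal characters per part


class SequenceConversionError(ValueError):
    """Hypothetical error type; the connector routes such records to the DLQ."""


def decimal_seq_to_hex(value: str) -> str:
    """Convert '100/200/300'-style decimal strings to uppercase hex, part by part."""
    parts = value.split("/")
    if len(parts) > MAX_PARTS:
        raise SequenceConversionError(f"more than {MAX_PARTS} parts: {value!r}")
    converted = []
    for part in parts:
        # Only plain ASCII digits count as decimal; "FF" is rejected as non-numeric.
        if not (part.isascii() and part.isdigit()):
            raise SequenceConversionError(f"non-numeric part {part!r} in {value!r}")
        hex_part = format(int(part), "X")
        if len(hex_part) > MAX_HEX_DIGITS:
            raise SequenceConversionError(f"part {part!r} needs more than {MAX_HEX_DIGITS} hex digits")
        converted.append(hex_part)
    return "/".join(converted)


if __name__ == "__main__":
    print(decimal_seq_to_hex("255"))          # FF
    print(decimal_seq_to_hex("100/200/300"))  # 64/C8/12C
    # The Caution scenario: "18" was converted to "12", then "13" arrives unconverted.
    # If BigQuery reads both as hexadecimal, 0x13 (19) outranks 0x12 (18).
    print(int("13", 16) > int(decimal_seq_to_hex("18"), 16))  # True
```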

For more information and examples to use with the Confluent Cloud API for Connect, see the Confluent Cloud API for Connect Usage Examples section.

Limitations

Be sure to review the following information.

For connector limitations, see Google BigQuery Sink V2 Connector limitations.

If you plan to use OAuth 2.0 for connecting to BigQuery, see OAuth Limitations.

If you plan to use one or more Single Message Transforms (SMTs), see SMT Limitations.

If you plan to use Confluent Cloud Schema Registry, see Schema Registry Enabled Environments.

If you plan to consume schemaless JSON, contact Confluent Support.

Supported data types

The following sections describe supported BigQuery data types and the associated connector mapping.

General mapping

The following is a general mapping of supported data types for the Google BigQuery Sink V2 connector. Note that this mapping applies even if a table is created or updated outside of the connector lifecycle. For automatic table creation and schema update mapping, see the following section.

| BigQuery Data Type | Connector Mapping | Conditions |
|---|---|---|
| JSON | STRING | |
| GEOGRAPHY | STRING | |
| INTEGER | STRING | |
| INTEGER | INT32 | |
| INTEGER | INT64 | |
| FLOAT | INT8 | |
| FLOAT | INT16 | |
| FLOAT | INT32 | |
| FLOAT | INT64 | |
| FLOAT | STRING | |
| FLOAT | FLOAT32 | |
| FLOAT | FLOAT64 | |
| BOOL | BOOLEAN | |
| BOOL | STRING | “true” or “false” |
| BYTES | BYTES | |
| STRING | STRING | |
| BIGNUMERIC | INT16 | |
| BIGNUMERIC | INT32 | |
| BIGNUMERIC | INT64 | |
| BIGNUMERIC | STRING | |
| BIGNUMERIC | FLOAT32 | |
| BIGNUMERIC | FLOAT64 | |
| NUMERIC | INT16 | |
| NUMERIC | INT32 | |
| NUMERIC | INT64 | |
| NUMERIC | STRING | |
| NUMERIC | FLOAT32 | |
| NUMERIC | FLOAT64 | |
| DATE | STRING | YYYY-MM-DD |
| DATE | INT32 | Number of days since epoch. The valid range is -719162 (0001-01-01) to 2932896 (9999-12-31). |
| DATE | INT64 | Number of days since epoch. The valid range is -719162 (0001-01-01) to 2932896 (9999-12-31). |
| DATETIME | STRING | YYYY-MM-DD[t\|T]HH:mm:ss[.F] |
| TIMESTAMP | STRING | YYYY-MM-DD HH:mm:SS[.F] |
| TIMESTAMP | INT64 | microseconds since epoch |
| TIME | STRING | HH:mm:SS[.F] |
| TIMESTAMP | Logical TIMESTAMP | |
| TIME | Logical TIME | |
| DATE | Logical DATE | |
| DATE | Debezium Date | |
| TIME | Debezium MicroTime | |
| TIME | Debezium Time | |
| TIMESTAMP | Debezium MicroTimestamp | |
| TIMESTAMP | Debezium TIMESTAMP | |
| TIMESTAMP | Debezium ZonedTimestamp | |
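The epoch-based conditions in the table can be checked with a short Python sketch. It converts an INT32/INT64 day count to a DATE literal, enforcing the documented valid range. It also converts an INT64 count of microseconds since epoch to a UTC timestamp string that fits the TIMESTAMP STRING pattern. The function names and the exact output formatting are illustrative choices, not connector internals.

```python
from datetime import date, datetime, timedelta, timezone

EPOCH_DATE = date(1970, 1, 1)
MIN_DAYS, MAX_DAYS = -719162, 2932896  # documented valid range for DATE from INT32/INT64


def days_to_date(days: int) -> str:
    """INT32/INT64 days since epoch -> DATE literal in YYYY-MM-DD form."""
    if not MIN_DAYS <= days <= MAX_DAYS:
        raise ValueError(f"{days} is outside the valid DATE range")
    return (EPOCH_DATE + timedelta(days=days)).isoformat()


def micros_to_timestamp(micros: int) -> str:
    """INT64 microseconds since epoch -> UTC 'YYYY-MM-DD HH:mm:SS.ffffff' string."""
    ts = datetime(1970, 1, 1, tzinfo=timezone.utc) + timedelta(microseconds=micros)
    return ts.strftime("%Y-%m-%d %H:%M:%S.%f")


if __name__ == "__main__":
    print(days_to_date(-719162))  # 0001-01-01, the lower bound in the table
    print(days_to_date(2932896))  # 9999-12-31, the upper bound in the table
    print(micros_to_timestamp(1_700_000_000_000_000))  # 2023-11-14 22:13:20.000000
```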

Table creation and schema update mapping

The following mapping applies if the connector creates a table automatically or updates schemas (that is, if either auto.create.tables or auto.update.schemas is not disabled).

| BigQuery Data Type | Connector Mapping |
|---|---|
| STRING | String |
| FLOAT | INT8 |
| FLOAT | INT16 |
| INTEGER | INT32 |
| INTEGER | INT64 |
| FLOAT | FLOAT32 |
| FLOAT | FLOAT64 |
| BOOLEAN | Boolean |
| BYTES | Bytes |
| TIMESTAMP | Logical TIMESTAMP |
| TIME | Logical TIME |
| DATE | Logical DATE |
| FLOAT | Logical Decimal |
| DATE | Debezium Date |
| TIME | Debezium MicroTime |
| TIME | Debezium Time |
| TIMESTAMP | Debezium MicroTimestamp |
| TIMESTAMP | Debezium TIMESTAMP |
| TIMESTAMP | Debezium ZonedTimestamp |

Note

Enable the use.integer.for.int8.int16 configuration property to store INT8 and INT16 values as INTEGER in BigQuery.
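The primitive-type rows of the table creation mapping, including the effect of use.integer.for.int8.int16, can be summarized in a small Python lookup. The sketch covers only the non-logical Kafka Connect types listed in the table. The function bigquery_type and its parameter name are hypothetical.

```python
# Kafka Connect primitive type -> BigQuery type used for auto table creation and
# schema updates, transcribed from the table above (logical types omitted).
_BASE_MAPPING = {
    "STRING": "STRING",
    "INT8": "FLOAT",
    "INT16": "FLOAT",
    "INT32": "INTEGER",
    "INT64": "INTEGER",
    "FLOAT32": "FLOAT",
    "FLOAT64": "FLOAT",
    "BOOLEAN": "BOOLEAN",
    "BYTES": "BYTES",
}


def bigquery_type(connect_type: str, use_integer_for_int8_int16: bool = False) -> str:
    """Return the BigQuery column type; INT8/INT16 switch to INTEGER when enabled."""
    if use_integer_for_int8_int16 and connect_type in ("INT8", "INT16"):
        return "INTEGER"
    try:
        return _BASE_MAPPING[connect_type]
    except KeyError:
        raise ValueError(f"no mapping listed for {connect_type!r}") from None


if __name__ == "__main__":
    print(bigquery_type("INT16"))                                   # FLOAT
    print(bigquery_type("INT16", use_integer_for_int8_int16=True))  # INTEGER
```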

Quick Start

Use this quick start to get up and running with the Confluent Cloud Google BigQuery Storage API Sink connector. The quick start provides the basics of selecting the connector and configuring it to stream events to a BigQuery data warehouse.

- Prerequisites

An active Google Cloud account with authorization to create resources.

A BigQuery project is required. The project can be created using the Google Cloud Console. A BigQuery dataset is required in the project.

To use the Storage Write API, the user account that the connector uses to authenticate with BigQuery must have the bigquery.tables.updateData permission. The minimum permissions are:

bigquery.datasets.get
bigquery.tables.create
bigquery.tables.get
bigquery.tables.getData
bigquery.tables.list
bigquery.tables.update
bigquery.tables.updateData

If you leave Auto create tables (auto.create.tables) disabled (the default), you must create a BigQuery table before using the connector.

You may need to create a schema in BigQuery, depending on how you set the Auto update schemas property (auto.update.schemas).

Kafka cluster credentials. The following lists the different ways you can provide credentials.

Enter an existing service account resource ID.

Create a Confluent Cloud service account for the connector. Make sure to review the ACL entries required in the service account documentation. Some connectors have specific ACL requirements.

Create a Confluent Cloud API key and secret. To create a key and secret, you can use confluent api-key create or you can autogenerate the API key and secret directly in the Cloud Console when setting up the connector.

The Confluent CLI installed and configured for the cluster.

Schema Registry must be enabled to use a Schema Registry-based format (for example, Avro, JSON_SR (JSON Schema), or Protobuf). For more information, see Schema Registry Enabled Environments.
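Before you launch the connector, you can compare the permissions granted to the account against the minimum list in the prerequisites above. The following Python sketch assumes that you already have the granted permissions as a set of strings, for example from an IAM permissions check you run separately. The function missing_permissions is hypothetical.

```python
REQUIRED_PERMISSIONS = frozenset({
    "bigquery.datasets.get",
    "bigquery.tables.create",
    "bigquery.tables.get",
    "bigquery.tables.getData",
    "bigquery.tables.list",
    "bigquery.tables.update",
    "bigquery.tables.updateData",  # needed for the Storage Write API
})


def missing_permissions(granted: set[str]) -> list[str]:
    """Return the minimum permissions that are absent from `granted`, sorted."""
    return sorted(REQUIRED_PERMISSIONS - set(granted))


if __name__ == "__main__":
    print(missing_permissions({"bigquery.datasets.get", "bigquery.tables.get"}))
```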

Using the Confluent Cloud Console

Step 1: Launch your Confluent Cloud cluster

To create and launch a Kafka cluster in Confluent Cloud, see Create a Kafka Cluster in Confluent Cloud.

Step 2: Add a connector

In the left navigation menu, click Connectors. If you already have connectors in your cluster, click + Add connector.

Step 3: Select your connector

Click the Google BigQuery Sink V2 connector card.

Step 4: Enter the connector details

Note

Be sure you have all the prerequisites completed.

An asterisk ( * ) in the Cloud Console designates a required entry.

At the Add Google BigQuery Sink V2 screen, complete the following:

If you’ve already added Kafka topics, select the topics you want to connect from the Topics list.

To create a new topic, click +Add new topic.

Select the way you want to provide Kafka Cluster credentials. You can choose one of the following options:

My account: This setting allows your connector to globally access everything that you have access to. With a user account, the connector uses an API key and secret to access the Kafka cluster. This option is not recommended for production.

Service account: This setting limits the access for your connector by using a service account. This option is recommended for production.

Use an existing API key: This setting allows you to specify an API key and a secret pair. You can use an existing pair or create a new one. This method is not recommended for production environments.

Note

Freight clusters support only service accounts for Kafka authentication.

Click Continue.

Configure the authentication properties:

Authentication method: Under GCP credentials, select one of the following authentication methods with GCP:

OAuth 2.0 (Shared app): (Default) Select this option to use shared OAuth 2.0 credentials.

OAuth 2.0 (Bring your own app): Select this option to use client credentials you configure for OAuth 2.0. To configure Google OAuth 2.0 to bring your own app, see Set up Google OAuth (Bring your own app).

Google Cloud service account: Select this option if you want to authenticate using the GCP credentials file.

Google service account impersonation: Select this option to use Provider integration for authentication.

GCP credentials file: If you select Google Cloud service account, upload your Google Cloud credentials JSON file with write permissions for BigQuery. For additional details, see Create a Service Account.

GCP credentials

Provider Integration: If you select Google service account impersonation, choose an existing integration that has access to your resource from the Provider integration name dropdown, or create a new provider integration. For more information, see Manage a Google Cloud Provider Integration.

Setting up client credentials

Client ID: Enter the OAuth client ID returned when setting up OAuth 2.0. Applicable when the authentication method is set to OAuth 2.0 (Bring your own app).

Client secret: Enter the client secret for the client ID. Applicable when the authentication method is set to OAuth 2.0 (Bring your own app).

Add project details

Project ID: In the Project ID field, enter the ID for the Google Cloud project where BigQuery is located.

Dataset: In the Dataset field, enter the name of the BigQuery dataset the connector writes to.

Click Continue.

Ingestion Mode: Select the ingestion mode: STREAMING, BATCH LOADING, UPSERT, or UPSERT_DELETE. Defaults to STREAMING. Review the following table for details about the differences between BATCH LOADING and STREAMING mode with the BigQuery API. For more information, see Introduction to loading data.

| BATCH LOADING | STREAMING |
|---|---|
| Records are available after a stream commit and may not be real time | Records are available for reading immediately after an append call (minimal latency) |
| Requires creation of application streams | Uses the default stream |
| May cost less | May cost more |
| More API quota limits (maximum streams, buffering, and so on) | Fewer quota limits |

Note that BigQuery API quotas apply.

Input Kafka record value format: Select the input Kafka record value format (data coming from the Kafka topic). Valid entries are AVRO, JSON_SR (JSON Schema), or PROTOBUF. A valid schema must be available in Schema Registry to use a schema-based message format (for example, Avro, JSON_SR (JSON Schema), or Protobuf). See Schema Registry Enabled Environments for additional information.

Data decryption

Enable Client-Side Field Level Encryption for data decryption. Specify a service account to access Schema Registry and the encryption rules or keys associated with that schema. Select the connector behavior (ERROR or NONE) on data decryption failure. If set to ERROR, the connector fails and writes the encrypted data to the DLQ. If set to NONE, the connector writes the encrypted data to the target system without decryption. For more information on CSFLE or CSPE setup, see Manage encryption for connectors.

Show advanced configurations

Schema context: Select a schema context to use for this connector, if using a schema-based data format. This property defaults to the Default context, which configures the connector to use the default schema set up for Schema Registry in your Confluent Cloud environment. A schema context allows you to use separate schemas (like schema sub-registries) tied to topics in different Kafka clusters that share the same Schema Registry environment. For example, if you select a non-default context, a Source connector uses only that schema context to register a schema and a Sink connector uses only that schema context to read from. For more information about setting up a schema context, see What are schema contexts and when should you use them?.

Input Kafka record key format: Select the input Kafka record key format to set the data format for incoming record keys. Valid entries are: Avro, Bytes, JSON, JSON Schema, Protobuf, or String. A valid schema must be available in Schema Registry to use a schema-based message format.

Note

If ingestion.mode is set to UPSERT or UPSERT_DELETE, you must set the record keys to one of the following formats: Avro, JSON, JSON Schema, or Protobuf.

Commit Interval: Used only when ingestion.mode is set to BATCH LOADING. Enter the interval, in seconds, at which the connector attempts to commit records. You can set this from a minimum of 60 seconds up to 14,400 seconds (four hours). BigQuery API quotas apply.

Kafka Topic to BigQuery Table Map: Map of topics to tables (optional). The required format is comma-separated tuples, for example, <topic-1>:<table-1>,<topic-2>:<table-2>,... If you use this option, do not modify topic names with a regex single message transform (SMT). If this property is used, sanitize.topics is ignored. Also, if the topic-to-table map doesn’t contain the topic for a record, the connector creates a table with the same name as the topic.

Sanitize topics: Specifies whether to automatically sanitize topic names before using them as table names in BigQuery. If not enabled, topic names are used as table names. If enabled, the table names created may be different from the topic names.

Auto update schemas: Defaults to DISABLED. Designates whether to automatically update BigQuery schemas. If ADD NEW FIELDS is selected, new fields are added with mode NULLABLE in the BigQuery schema. Note that this property applies only to the AVRO, JSON_SR, and PROTOBUF message formats.

Sanitize field names: Specifies whether to automatically sanitize field names before using them as field names in BigQuery.

Sanitize array fields: Specifies whether to automatically sanitize field names inside arrays. When enabled, field names inside arrays are also sanitized according to BigQuery naming rules. This setting takes effect only if sanitize.field.names is enabled.

Auto create tables: Designates whether to automatically create BigQuery tables. Defaults to DISABLED. Note that this property applies only to the AVRO, JSON_SR, and PROTOBUF message formats. The other available options are:

NON-PARTITIONED: The connector creates non-partitioned tables.

PARTITION by INGESTION TIME: The connector creates tables partitioned by ingestion time. Uses Partitioning type to set partitioning.

PARTITION by FIELD: The connector creates tables partitioned using a field in a Kafka record value. The field name is set using the Timestamp partition field name property (timestamp.partition.field.name). Uses Partitioning type to set partitioning.

New tables and schema updates may take a few minutes to be detected by the Google Client Library. For more information, see the Google Cloud BigQuery API guide.

Partitioning type: The time partitioning type to use when creating new partitioned tables. Existing tables are not altered to use this partitioning type. Defaults to DAY.

Timestamp partition field name: The name of the field in the record value that contains the timestamp to partition by in BigQuery. Used when PARTITION by FIELD is selected.

Enable change sequence number: Enable the _CHANGE_SEQUENCE_NUMBER pseudo-column for CDC operations to ensure records are ordered correctly in upsert mode. When primary keys match, the record with the higher sequence number takes precedence. Because _CHANGE_SEQUENCE_NUMBER requires hexadecimal values, enable the hexadecimal converter if your source data produces decimal values. For more information, see _CHANGE_SEQUENCE_NUMBER in CDC mode.

Use hexadecimal converter for change sequence number: Use this converter when use.change.sequence.number is enabled to convert the _CHANGE_SEQUENCE_NUMBER string field from decimal to hexadecimal format for BigQuery. The converter expects decimal numeric values as strings (for example, "255" becomes "FF"). For multi-part sequence numbers separated by forward slashes, the converter processes each part independently (for example, "100/200/300" becomes "64/C8/12C"). It supports up to four parts with a maximum of 16 hexadecimal characters per part. If a DLQ is configured, non-numeric values are sent to it. For more information, see Hexadecimal converter.

Use Date Time Formatter: Specifies whether to use a DateTimeFormatter to support a wide range of epochs. Setting this to true uses DateTimeFormatter instead of the default SimpleDateFormat. The output might differ between the two formatters for the same input.

Kafka Topic to Clustering Fields Map: Maps topics to their corresponding table clustering fields (optional). It accepts a list of comma-separated topic-to-field mappings, for example, topic1:[col1|col2], topic2:[col1|col2|..], .... The specified fields are used to cluster data in BigQuery. The order of the fields determines the clustering precedence.

Use INTEGER for INT8 and INT16: Determines how the connector stores INT8 (BYTE) and INT16 (SHORT) data types in BigQuery during automatic table creation and schema updates. When set to false (the default), these values are stored as FLOAT. When set to true, INT8 (BYTE) and INT16 (SHORT) values are stored as INTEGER.
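The Kafka Topic to BigQuery Table Map rules described above can be modeled in Python as follows. The format is <topic>:<table> pairs separated by commas, and unmapped topics fall back to the topic name. The functions parse_topic_table_map and table_for are hypothetical. The sketch models only the case where the map is set, because sanitize.topics is ignored in that case.

```python
def parse_topic_table_map(value: str) -> dict[str, str]:
    """Parse '<topic-1>:<table-1>,<topic-2>:<table-2>' into {topic: table}."""
    mapping: dict[str, str] = {}
    for pair in filter(None, (p.strip() for p in value.split(","))):
        topic, sep, table = pair.partition(":")
        if not sep or not topic.strip() or not table.strip():
            raise ValueError(f"expected <topic>:<table>, got {pair!r}")
        mapping[topic.strip()] = table.strip()
    return mapping


def table_for(topic: str, topic_table_map: str) -> str:
    """Mapped table name; an unmapped topic gets a table named after the topic itself."""
    return parse_topic_table_map(topic_table_map).get(topic, topic)


if __name__ == "__main__":
    mapping = "orders:orders_v2,clicks:web_clicks"
    print(table_for("clicks", mapping))     # web_clicks
    print(table_for("pageviews", mapping))  # pageviews (not in the map)
```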

Additional Configs

Value Converter Replace Null With Default: Specifies whether to replace null fields that have a default value with that default value. When set to true, the default value is used; otherwise, null is used. Applicable for the JSON converter.

Value Converter Schema ID Deserializer: The class name of the schema ID deserializer for values. This is used to deserialize schema IDs from the message headers.

Value Converter Reference Subject Name Strategy: Set the subject reference name strategy for value. Valid entries are DefaultReferenceSubjectNameStrategy or QualifiedReferenceSubjectNameStrategy. Note that the subject reference name strategy can be selected only for PROTOBUF format with the default strategy being DefaultReferenceSubjectNameStrategy.

Schema ID For Value Converter: The schema ID to use for deserialization when using ConfigSchemaIdDeserializer. This is used to specify a fixed schema ID to be used for deserializing message values. Only applicable when value.converter.value.schema.id.deserializer is set to ConfigSchemaIdDeserializer.

Value Converter Schemas Enable: Include schemas within each of the serialized values. Input messages must contain schema and payload fields and must not contain additional fields. For plain JSON data, set this to false. Applicable for the JSON converter.

Errors Tolerance: Use this property if you would like to configure the connector’s error handling behavior. WARNING: This property should be used with CAUTION for SOURCE CONNECTORS as it may lead to data loss. If you set this property to ‘all’, the connector will not fail on errant records, but will instead log them (and send them to the DLQ for Sink Connectors) and continue processing. If you set this property to ‘none’, the connector task will fail on errant records.

Value Converter Ignore Default For Nullables: When set to true, this property ensures that the corresponding record in Kafka is NULL, instead of showing the default column value. Applicable for the AVRO, PROTOBUF, and JSON_SR converters.

Key Converter Schema ID Deserializer: The class name of the schema ID deserializer for keys. This is used to deserialize schema IDs from the message headers.

Value Converter Decimal Format: Specify the JSON/JSON_SR serialization format for Connect DECIMAL logical type values with two allowed literals: BASE64 to serialize DECIMAL logical types as base64 encoded binary data and NUMERIC to serialize Connect DECIMAL logical type values in JSON/JSON_SR as a number representing the decimal value.

Schema GUID For Key Converter: The schema GUID to use for deserialization when using ConfigSchemaIdDeserializer. This is used to specify a fixed schema GUID to be used for deserializing message keys. Only applicable when key.converter.key.schema.id.deserializer is set to ConfigSchemaIdDeserializer.

Schema GUID For Value Converter: The schema GUID to use for deserialization when using ConfigSchemaIdDeserializer. This is used to specify a fixed schema GUID to be used for deserializing message values. Only applicable when value.converter.value.schema.id.deserializer is set to ConfigSchemaIdDeserializer.

Value Converter Connect Meta Data: Allow the Connect converter to add its metadata to the output schema. Applicable for Avro Converters.

Value Converter Value Subject Name Strategy: Determines how to construct the subject name under which the value schema is registered with Schema Registry.

Key Converter Key Subject Name Strategy: How to construct the subject name for key schema registration.

Schema ID For Key Converter: The schema ID to use for deserialization when using ConfigSchemaIdDeserializer. This is used to specify a fixed schema ID to be used for deserializing message keys. Only applicable when key.converter.key.schema.id.deserializer is set to ConfigSchemaIdDeserializer.
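The difference between the two Value Converter Decimal Format literals can be seen in a short Python sketch. It uses the standard Kafka Connect DECIMAL encoding, which stores the unscaled value as big-endian two's-complement bytes (as produced by Java's BigInteger.toByteArray()). BASE64 encodes those bytes. NUMERIC writes the decimal as a plain JSON number. The functions and the decimal_format argument are hypothetical, not connector properties.

```python
import base64
import json
from decimal import Decimal


def connect_decimal_bytes(value: Decimal, scale: int) -> bytes:
    """Kafka Connect DECIMAL encoding: big-endian two's-complement unscaled value."""
    scaled = value.scaleb(scale)  # for example, 12.34 at scale 2 -> 1234
    if scaled != scaled.to_integral_value():
        raise ValueError(f"{value} has more than {scale} fractional digits")
    unscaled = int(scaled)
    # Minimal length, matching Java's BigInteger.toByteArray() (bitLength() / 8 + 1).
    bit_length = (unscaled if unscaled >= 0 else ~unscaled).bit_length()
    return unscaled.to_bytes(bit_length // 8 + 1, "big", signed=True)


def serialize_decimal(value: Decimal, scale: int, decimal_format: str) -> str:
    """Return the JSON text for one DECIMAL value in the chosen format."""
    if decimal_format == "BASE64":
        return json.dumps(base64.b64encode(connect_decimal_bytes(value, scale)).decode("ascii"))
    if decimal_format == "NUMERIC":
        return format(value, "f")  # a bare JSON number
    raise ValueError(f"unknown decimal format: {decimal_format!r}")


if __name__ == "__main__":
    print(serialize_decimal(Decimal("12.34"), 2, "BASE64"))   # "BNI="
    print(serialize_decimal(Decimal("12.34"), 2, "NUMERIC"))  # 12.34
```

The BASE64 output is compact but opaque to readers, while NUMERIC output is human-readable.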

Auto-restart policy

Enable Connector Auto-restart: Control the auto-restart behavior of the connector and its task in the event of user-actionable errors. Defaults to true, enabling the connector to automatically restart in case of user-actionable errors. Set this property to false to disable auto-restart for failed connectors. In such cases, you must restart the connector manually.

Consumer configuration

Max poll interval(ms): Set the maximum delay between subsequent consume requests to Kafka. Use this property to improve connector performance in cases when the connector cannot send records to the sink system. The default is 300,000 milliseconds (5 minutes).

Max poll records: Set the maximum number of records to consume from Kafka in a single request. Use this property to improve connector performance in cases when the connector cannot send records to the sink system. The default is 500 records.

Transforms

Single Message Transforms: To add a new SMT, see Add transforms. For more information about unsupported SMTs, see Unsupported transformations.

Processing position

Set offsets: Click Set offsets to define a specific offset for this connector to begin processing data from. For more information on managing offsets, see Manage offsets.

Click Continue.

Based on the number of topic partitions you select, you will be provided with a recommended number of tasks.

To change the number of recommended tasks, enter the number of tasks for the connector to use in the Tasks field.

Click Continue.

Verify the connection details.

Click Launch.

The status for the connector should go from Provisioning to Running.

Step 5: Check the results in BigQuery

From the Google Cloud Console, go to your BigQuery project.

Query your datasets and verify that new records are being added.

For more information and examples to use with the Confluent Cloud API for Connect, see the Confluent Cloud API for Connect Usage Examples section.

Tip

When you launch a connector, a Dead Letter Queue topic is automatically created. See View Connector Dead Letter Queue Errors in Confluent Cloud for details.

Using the Confluent CLI

Complete the following steps to set up and run the connector using the Confluent CLI.

Note

Be sure you have all the prerequisites completed.

Step 1: List the available connectors

Enter the following command to list available connectors:

confluent connect plugin list

Step 2: List the connector configuration properties

Enter the following command to show the connector configuration properties:

confluent connect plugin describe <connector-plugin-name>

The command output shows the required and optional configuration properties.

Step 3: Create the connector configuration file

Create a JSON file that contains the connector configuration properties. The following example shows typical connector properties.

{
  "name" : "confluent-bigquery-storage-sink",
  "connector.class" : "BigQueryStorageSink",
  "kafka.auth.mode": "KAFKA_API_KEY",
  "kafka.api.key" : "<my-kafka-api-key>",
  "kafka.api.secret" : "<my-kafka-api-secret>",
  "keyfile" : "....",
  "project" : "<my-BigQuery-project>",
  "datasets" : "<my-BigQuery-dataset>",
  "ingestion.mode" : "STREAMING",
  "input.data.format" : "AVRO",
  "auto.create.tables" : "DISABLED",
  "sanitize.topics" : "true",
  "sanitize.field.names" : "false",
  "sanitize.field.names.in.array" : "false",
  "tasks.max" : "1",
  "topics" : "pageviews"
}
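Hand-edited JSON easily picks up missing or trailing commas. One way to avoid that is to build the configuration as a dictionary and let a JSON serializer write it. The sketch below is a hypothetical helper (build_config is not a Confluent tool) that reproduces the example above and checks the 64-character limit on the connector name:

```python
import json


def build_config(api_key: str, api_secret: str, keyfile: str,
                 project: str, dataset: str,
                 name: str = "confluent-bigquery-storage-sink") -> dict:
    """Return the example connector configuration as a dict (hypothetical helper)."""
    if len(name) > 64:
        # The name property accepts at most 64 characters.
        raise ValueError("connector name must be at most 64 characters")
    return {
        "name": name,
        "connector.class": "BigQueryStorageSink",
        "kafka.auth.mode": "KAFKA_API_KEY",
        "kafka.api.key": api_key,
        "kafka.api.secret": api_secret,
        "keyfile": keyfile,
        "project": project,
        "datasets": dataset,
        "ingestion.mode": "STREAMING",
        "input.data.format": "AVRO",
        "auto.create.tables": "DISABLED",
        "sanitize.topics": "true",
        "sanitize.field.names": "false",
        "sanitize.field.names.in.array": "false",
        "tasks.max": "1",
        "topics": "pageviews",
    }


if __name__ == "__main__":
    cfg = build_config("<my-kafka-api-key>", "<my-kafka-api-secret>", "....",
                       "<my-BigQuery-project>", "<my-BigQuery-dataset>")
    # Redirect this output to a file and pass it to --config-file.
    print(json.dumps(cfg, indent=2))
```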

Note the following property definitions:

"name": Sets a name for the new connector.

"connector.class": Identifies the connector plugin name.

"kafka.auth.mode": Identifies the connector authentication mode you want to use. There are two options: SERVICE_ACCOUNT or KAFKA_API_KEY (the default). To use an API key and secret, specify the configuration properties kafka.api.key and kafka.api.secret, as shown in the example configuration (above). To use a service account, specify the Resource ID in the property kafka.service.account.id=<service-account-resource-ID>. To list the available service account resource IDs, use the following command:

confluent iam service-account list

For example:

confluent iam service-account list

   Id     | Resource ID |       Name        |    Description
+---------+-------------+-------------------+-------------------
   123456 | sa-l1r23m   | sa-1              | Service account 1
   789101 | sa-l4d56p   | sa-2              | Service account 2

"topics": Identifies the topic name or a comma-separated list of topic names.

"keyfile": This contains the contents of the downloaded Google Cloud credentials JSON file for a Google Cloud service account. See Format service account keyfile credentials for details about how to format and use the contents of the downloaded credentials file as the "keyfile" property value.

"input.data.format": Sets the input Kafka record value format (data coming from the Kafka topic). Valid entries are AVRO, JSON_SR, PROTOBUF, or JSON. You must have Confluent Cloud Schema Registry configured if using a schema-based message format (for example, Avro, JSON_SR (JSON Schema), or Protobuf).

"ingestion.mode": Sets the connector to use either STREAMING or BATCH LOADING. Defaults to STREAMING. Review the following table for details about the differences between using BATCH LOADING versus STREAMING mode with the BigQuery API. For more information, see Introduction to loading data.

| BATCH LOADING | STREAMING |
|---|---|
| Records are available after a stream commit and may not be real time | Records are available for reading immediately after append call (minimal latency) |
| Requires creation of application streams | Default stream is used |
| May cost less | May cost more |
| More API quota limits (max streams, buffering, etc.) | Fewer quota limits |

Note that BigQuery API quotas apply.

The following are additional properties you can use. See Configuration Properties for all property values and definitions.

"auto.create.tables": Designates whether to automatically create BigQuery tables. Defaults to DISABLED. Note that this property is applicable for AVRO, JSON_SR, and PROTOBUF message formats only. The other options available are listed below.

NON-PARTITIONED: The connector creates non-partitioned tables.

PARTITION by INGESTION TIME: The connector creates tables partitioned by ingestion time. Uses partitioning.type to set partitioning.

PARTITION by FIELD: The connector creates tables partitioned using a field in a Kafka record value. The field name is set using the property timestamp.partition.field.name. Uses partitioning.type to set partitioning.

Note

New tables and schema updates may take a few minutes to be detected by the Google Client Library. For more information, see the Google Cloud BigQuery API guide.

"auto.update.schemas": Designates whether to automatically update BigQuery schemas. Defaults to DISABLED. If ADD NEW FIELDS is selected, new fields are added with mode NULLABLE in the BigQuery schema. Note that this property is applicable for AVRO, JSON_SR, and PROTOBUF message formats only.

"partitioning.type": The time partitioning type to use when creating new partitioned tables. Existing tables are not altered to use this partitioning type. Defaults to DAY.

"timestamp.partition.field.name": The name of the field in the value that contains the timestamp to partition by in BigQuery. Used when auto.create.tables is set to PARTITION by FIELD.

"sanitize.topics": Designates whether to automatically sanitize topic names before using them as table names. If not enabled, topic names are used as table names. If enabled, the table names created may be different from the topic names. Source topic names must comply with BigQuery naming conventions even if sanitize.topics is set to true.

"sanitize.field.names": Designates whether to automatically sanitize field names before using them as column names in BigQuery. BigQuery specifies that field names can only contain letters, numbers, and underscores. The sanitizer replaces invalid symbols with underscores. If the field name starts with a digit, the sanitizer adds an underscore in front of the field name. If not enabled, field names are used as column names. Defaults to false.

Caution

Fields a.b and a_b will have the same value after sanitizing, which could cause a key duplication error.

"sanitize.field.names.in.array": Determines whether to sanitize field names that are nested inside array-type objects. By default, the connector does not sanitize fields inside array-type objects during record ingestion. To enable sanitization for these fields, set sanitize.field.names.in.array to true.

Note

This property requires sanitize.field.names=true. To sanitize all fields, including those in arrays, you must set both properties to true.

"use.date.time.formatter": Defaults to false, in which case the connector uses SimpleDateFormat. If set to true, the connector uses DateTimeFormatter, which supports a wide range of epochs. Use DateTimeFormatter for time-based fields such as Logical TIMESTAMP, Logical TIME, Logical DATE, Debezium Date, Debezium MicroTime, Debezium Time, Debezium MicroTimestamp, Debezium TIMESTAMP, or Debezium ZonedTimestamp.

The output can vary for the same input across the two formatters. For example, with the epoch value -62104147200000 (milliseconds), the connector performs the following:

With SimpleDateFormat: Writes the timestamp as 0002-01-02T00:00:00 to the destination.

With DateTimeFormatter: Writes the timestamp as 0001-12-31 00:00:00.000 to the destination.
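The two dates differ because of calendars, not rounding. java.text.SimpleDateFormat formats through java.util.GregorianCalendar, which by default switches to the Julian calendar for dates before the October 15, 1582 cutover, while java.time's DateTimeFormatter uses the proleptic Gregorian (ISO) calendar. The Python sketch below reproduces both results for the example value using only the standard library. The Julian conversion uses a standard Julian Day Number algorithm; the function names are illustrative and do not describe connector internals.

```python
from datetime import date, timedelta

MILLIS_PER_DAY = 86_400_000
EPOCH = date(1970, 1, 1)


def proleptic_gregorian(epoch_millis: int) -> date:
    """Date in the ISO (proleptic Gregorian) calendar, as java.time sees it.
    Assumes epoch_millis falls exactly on midnight UTC, as in the example."""
    return EPOCH + timedelta(days=epoch_millis // MILLIS_PER_DAY)


def to_julian_calendar(d: date) -> tuple[int, int, int]:
    """Convert a proleptic Gregorian date to (year, month, day) in the
    Julian calendar, which GregorianCalendar uses before the 1582 cutover."""
    jdn = d.toordinal() + 1_721_425   # Julian Day Number of the date
    c = jdn + 32_082
    d4 = (4 * c + 3) // 1461
    e = c - (1461 * d4) // 4
    m = (5 * e + 2) // 153
    day = e - (153 * m + 2) // 5 + 1
    month = m + 3 - 12 * (m // 10)
    year = d4 - 4800 + m // 10
    return year, month, day


if __name__ == "__main__":
    g = proleptic_gregorian(-62104147200000)
    y, mo, dd = to_julian_calendar(g)
    print(g.isoformat())                 # 0001-12-31 (DateTimeFormatter)
    print(f"{y:04d}-{mo:02d}-{dd:02d}")  # 0002-01-02 (SimpleDateFormat)
```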

Note

To enable CSFLE or CSPE for data encryption, specify the following properties:

csfle.enabled: Flag to indicate whether the connector honors CSFLE or CSPE rules.

sr.service.account.id: A service account to access the Schema Registry and the encryption rules or keys associated with that schema.

csfle.onFailure: Configures the connector behavior (ERROR or NONE) on data decryption failure. If set to ERROR, the connector fails and writes the encrypted data in the DLQ. If set to NONE, the connector writes the encrypted data in the target system without decryption.

When using CSFLE or CSPE with connectors that route failed messages to a Dead Letter Queue (DLQ), be aware that data sent to the DLQ is written in unencrypted plaintext. This poses a significant security risk as sensitive data that should be encrypted may be exposed in the DLQ.

Do not use DLQ with CSFLE or CSPE in the current version. If you need error handling for CSFLE- or CSPE-enabled data, use alternative approaches such as:

Setting the connector behavior to ERROR to throw exceptions instead of routing to DLQ

Implementing custom error handling in your applications

Using NONE to pass encrypted data through without decryption

For more information on CSFLE or CSPE setup, see Manage encryption for connectors.

Single Message Transforms: See the Single Message Transforms (SMT) documentation for details about adding SMTs using the CLI. See Unsupported transformations for a list of SMTs that are not supported with this connector.

See Configuration Properties for all property values and definitions.
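The table-creation properties described above depend on each other. The sketch below is a hypothetical pre-flight check (check_table_settings is not a Confluent tool) encoding those documented rules: auto.create.tables and auto.update.schemas only apply to schema-based formats, and PARTITION by FIELD needs timestamp.partition.field.name. The allowed partitioning.type values (HOUR, DAY, MONTH, YEAR) are taken from the migration tool's prompts later on this page and are an assumption here. Confluent Cloud still performs its own validation.

```python
VALID_AUTO_CREATE = {"DISABLED", "NON-PARTITIONED",
                     "PARTITION by INGESTION TIME", "PARTITION by FIELD"}
VALID_PARTITIONING = {"HOUR", "DAY", "MONTH", "YEAR"}   # assumption, see above
SCHEMA_FORMATS = {"AVRO", "JSON_SR", "PROTOBUF"}


def check_table_settings(config: dict) -> list[str]:
    """Return human-readable problems with the table-creation settings."""
    problems = []
    fmt = config.get("input.data.format", "JSON")         # documented default
    mode = config.get("auto.create.tables", "DISABLED")   # documented default
    if mode not in VALID_AUTO_CREATE:
        problems.append(f"unknown auto.create.tables value {mode!r}")
    if mode != "DISABLED" and fmt not in SCHEMA_FORMATS:
        problems.append("auto.create.tables requires AVRO, JSON_SR, or PROTOBUF")
    if (config.get("auto.update.schemas", "DISABLED") != "DISABLED"
            and fmt not in SCHEMA_FORMATS):
        problems.append("auto.update.schemas requires AVRO, JSON_SR, or PROTOBUF")
    if mode.startswith("PARTITION by"):
        ptype = config.get("partitioning.type", "DAY")    # documented default
        if ptype not in VALID_PARTITIONING:
            problems.append(f"unknown partitioning.type {ptype!r}")
    if mode == "PARTITION by FIELD" and not config.get("timestamp.partition.field.name"):
        problems.append("PARTITION by FIELD needs timestamp.partition.field.name")
    return problems


if __name__ == "__main__":
    print(check_table_settings({"input.data.format": "AVRO",
                                "auto.create.tables": "PARTITION by FIELD"}))
```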

Step 4: Load the configuration file and create the connector

Enter the following command to load the configuration and start the connector:

confluent connect cluster create --config-file <file-name>.json

For example:

confluent connect cluster create --config-file bigquery-storage-sink-config.json

Example output:

Created connector confluent-bigquery-storage-sink lcc-ix4dl

Step 5: Check the connector status

Enter the following command to check the connector status:

confluent connect cluster list

Example output:

     ID     |              Name               | Status  | Type
+-----------+---------------------------------+---------+------+
  lcc-ix4dl | confluent-bigquery-storage-sink | RUNNING | sink

Step 6: Check the results in BigQuery

From the Google Cloud Console, go to your BigQuery project.

Query your datasets and verify that new records are being added.

For more information and examples to use with the Confluent Cloud API for Connect, see the Confluent Cloud API for Connect Usage Examples section.

Tip

When you launch a connector, a Dead Letter Queue topic is automatically created. See View Connector Dead Letter Queue Errors in Confluent Cloud for details.

Connector authentication

You can use a Google Cloud service account or Google OAuth to authenticate the connector with BigQuery. Note that OAuth is only available when using the Confluent Cloud UI to create the connector.

Create a Service Account

For details about how to create a Google Cloud service account and get a JSON credentials file, see Create and delete service account keys. Note the following when creating the service account:

The service account must have write access to the BigQuery project containing the dataset.

To use the Storage Write API, the connector must have bigquery.tables.updateData permissions. The minimum permissions are:

bigquery.datasets.get
bigquery.tables.create
bigquery.tables.get
bigquery.tables.getData
bigquery.tables.list
bigquery.tables.update
bigquery.tables.updateData

You create and download a key when creating a service account. The key must be downloaded as a JSON file. It resembles the example below:

{
  "type": "service_account",
  "project_id": "confluent-123456",
  "private_key_id": ".....",
  "private_key": "-----BEGIN PRIVATE ...omitted... =\n-----END PRIVATE KEY-----\n",
  "client_email": "confluent2@confluent-123456.iam.gserviceaccount.com",
  "client_id": "....",
  "auth_uri": "https://accounts.google.com/o/oauth2/auth",
  "token_uri": "https://oauth2.googleapis.com/token",
  "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
  "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/confluent2%40confluent-123456.iam.gserviceaccount.com"
}

Format service account keyfile credentials

Formatting the keyfile is only required when using the CLI or Terraform to create the connector, where you must add the service account key directly into the connector configuration.

The contents of the downloaded service account credentials JSON file must be converted to string format before it can be used in the connector configuration.

Convert the JSON file contents into string format.

Add the escape character \ before all \n entries in the Private Key section so that each section begins with \\n (see the private_key value in the example below). The example below has been formatted so that the \\n entries are easier to see. Most of the credentials key has been omitted.

Tip

A script is available that converts the credentials to a string and also adds the additional escape characters where needed. See Stringify Google Cloud Credentials.

{
  "name" : "confluent-bigquery-sink",
  "connector.class" : "BigQueryStorageSink",
  "kafka.api.key" : "<my-kafka-api-key>",
  "kafka.api.secret" : "<my-kafka-api-secret>",
  "topics" : "pageviews",
  "keyfile" : "{\"type\":\"service_account\",\"project_id\":\"connect-1234567\",\"private_key_id\":\"omitted\",\"private_key\":\"-----BEGIN PRIVATE KEY-----\\nMIIEvAIBADANBgkqhkiG9w0BA\\n6MhBA9TIXB4dPiYYNOYwbfy0Lki8zGn7T6wovGS5\opzsIh\\nOAQ8oRolFp\rdwc2cC5wyZ2+E+bhwn\\nPdCTW+oZoodY\\nOGB18cCKn5mJRzpiYsb5eGv2fN\/J\\n...rest of key omitted...\\n-----END PRIVATE KEY-----\\n\",\"client_email\":\"pub-sub@connect-123456789.iam.gserviceaccount.com\",\"client_id\":\"123456789\",\"auth_uri\":\"https:\/\/accounts.google.com\/o\/oauth2\/auth\",\"token_uri\":\"https:\/\/oauth2.googleapis.com\/token\",\"auth_provider_x509_cert_url\":\"https:\/\/www.googleapis.com\/oauth2\/v1\/certs\",\"client_x509_cert_url\":\"https:\/\/www.googleapis.com\/robot\/v1\/metadata\/x509\/pub-sub%40connect-123456789.iam.gserviceaccount.com\"}",
  "project": "<my-BigQuery-project>",
  "datasets": "<my-BigQuery-dataset>",
  "input.data.format": "AVRO",
  "tasks.max" : "1"
}

Add all the converted string content to the "keyfile" credentials section of your configuration file as shown in the example above.

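The double escaping comes from serializing JSON twice: once to turn the key file into a single string, and again when that string is embedded in the connector configuration. The sketch below shows the idea with Python's json module. It is not the Confluent "Stringify Google Cloud Credentials" script; the function names and the script name in the usage message are hypothetical. Python does not escape / as \/, which is equally valid JSON.

```python
import json
import sys


def stringify_keyfile(keyfile_text: str) -> str:
    """Collapse a downloaded service account key into one compact JSON string."""
    # json.loads turns each \n escape in private_key into a real newline;
    # json.dumps writes it back as the two-character sequence \n.
    return json.dumps(json.loads(keyfile_text), separators=(",", ":"))


def connector_config_json(keyfile_text: str) -> str:
    """Embed the stringified key in a connector configuration (example values)."""
    config = {
        "name": "confluent-bigquery-sink",
        "connector.class": "BigQueryStorageSink",
        "kafka.api.key": "<my-kafka-api-key>",
        "kafka.api.secret": "<my-kafka-api-secret>",
        "topics": "pageviews",
        # Serializing the config escapes the keyfile string a second time:
        # each " becomes \" and each \n becomes \\n.
        "keyfile": stringify_keyfile(keyfile_text),
        "project": "<my-BigQuery-project>",
        "datasets": "<my-BigQuery-dataset>",
        "input.data.format": "AVRO",
        "tasks.max": "1",
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    if len(sys.argv) == 2:
        with open(sys.argv[1], encoding="utf-8") as f:
            print(connector_config_json(f.read()))
    else:
        print("usage: python stringify_keyfile.py <downloaded-key.json>")
```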
Set up Google OAuth (Bring your own app)

Complete the following steps to set up Google OAuth and get the Client ID and Client secret. This is applicable for the OAuth 2.0 (Bring your own app) BigQuery authentication option. For additional information, see Setting up OAuth 2.0.

Create a Project in Google Cloud Console. If you haven’t already, create a new project in the Google Cloud Console.

Enable the BigQuery API.

Navigate to the API Library in the Google Cloud Console.

Search for the BigQuery API.

Select it and enable it for your project.

Configure the OAuth Consent Screen.

Go to the OAuth consent screen in the Credentials section of the Google Cloud Console.

Select Internal when prompted to set the User Type. This means only users within your organization can consent to the connector accessing data in BigQuery.

In the Scopes section of the Consent screen, enter the following scope:

https://www.googleapis.com/auth/bigquery

Fill in the remaining required fields and save.

Create OAuth 2.0 Credentials.

In the Google Cloud Console, go to the Credentials page.

Click Create Credentials and select OAuth client ID.

Choose the application type Web application.

Set up the authorized redirect URI. Enter the following Confluent-provided URI:

https://confluent.cloud/api/connect/oauth/callback

Click Create. You are provided with the Client ID and Client secret.
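Confluent Cloud builds and sends the Google authorization request for you when you authenticate. To show where the Client ID, scope, and redirect URI from the steps above end up, the sketch below assembles a standard Google OAuth 2.0 authorization-code URL. The exact parameters Confluent sends are an assumption, and consent_url is a hypothetical helper. Note that the client secret is not part of this request; it is only used later, when the authorization code is exchanged for tokens, which is consistent with the limitations below where a wrong secret surfaces only after consent.

```python
from urllib.parse import urlencode

AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"
REDIRECT_URI = "https://confluent.cloud/api/connect/oauth/callback"
BIGQUERY_SCOPE = "https://www.googleapis.com/auth/bigquery"


def consent_url(client_id: str, state: str) -> str:
    """Build a Google OAuth 2.0 authorization-code request (illustrative only)."""
    params = {
        "client_id": client_id,        # the Client ID created above
        "redirect_uri": REDIRECT_URI,  # must match an authorized redirect URI
        "response_type": "code",       # authorization code flow
        "scope": BIGQUERY_SCOPE,       # scope entered on the consent screen
        "access_type": "offline",      # ask Google to issue a refresh token
        "prompt": "consent",           # always show the consent screen
        "state": state,                # opaque value echoed back on redirect
    }
    return f"{AUTH_ENDPOINT}?{urlencode(params)}"


if __name__ == "__main__":
    print(consent_url("1234.apps.googleusercontent.com", "example-state"))
```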

OAuth Limitations

Note the following limitations when using OAuth for the connector.

If you enter the wrong Client ID when authenticating, the UI attempts to launch the consent screen, and then displays the error for an invalid client. Click the back button and re-enter the connector configuration. This is applicable for the OAuth 2.0 (Bring your own app) BigQuery authentication option.

If you enter the correct Client ID but enter the wrong Client secret, the UI successfully launches the consent screen and the user is able to authorize. However, the API request fails, and the user is redirected to the Connect UI provisioning screen. This is applicable for the OAuth 2.0 (Bring your own app) BigQuery authentication option.

You must manually revoke permissions for the connector from Third-party apps & services if you delete the connector or switch to a different auth mechanism.

Google Cloud allows a maximum of 100 refresh tokens for a single user using a single OAuth 2.0 client ID. For example, a single user can create a maximum of 100 connectors using the same client ID. When this user creates a 101st connector using the same client ID, the refresh token for the first connector created is revoked. If you want to run more than 100 connectors, you can use multiple client IDs or user IDs, or you can use a service account.
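The revocation behavior above works like a fixed-size queue in which the oldest token is dropped first. The sketch below models it under the stated assumptions (one refresh token per connector, oldest revoked first); the function names are hypothetical and nothing here calls Google or Confluent APIs.

```python
from collections import deque

TOKEN_LIMIT = 100  # refresh tokens per user per OAuth 2.0 client ID


def simulate_refresh_tokens(connectors: int, limit: int = TOKEN_LIMIT):
    """Return (connectors with live tokens, connectors whose tokens were revoked)."""
    live: deque = deque()
    revoked: list = []
    for i in range(1, connectors + 1):
        if len(live) == limit:
            revoked.append(live.popleft())  # the oldest connector loses access
        live.append(f"connector-{i}")
    return list(live), revoked


def client_ids_needed(connectors: int, limit: int = TOKEN_LIMIT) -> int:
    """Client IDs one user needs so that no connector's token is revoked."""
    return -(-connectors // limit)  # ceiling division


if __name__ == "__main__":
    live, revoked = simulate_refresh_tokens(101)
    print(revoked)                  # ['connector-1']
    print(client_ids_needed(250))   # 3
```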

Legacy to V2 Connector Migration

The BigQuery Sink Legacy connector uses the BigQuery legacy streaming API and will reach end of life (EOL) on March 31, 2026. Confluent recommends migrating to the BigQuery Sink V2 connector. The BigQuery Sink V2 connector uses the BigQuery Storage Write API, which offers higher throughput, improved reliability, and better schema evolution support than the legacy connector.

Due to fundamental differences between the underlying Google Cloud APIs, a direct in-place upgrade is not possible. The following sections describe a migration approach that avoids data loss, the known API changes, and mappings for key configuration properties.

You can choose one of two paths for your migration:

Automated migration (recommended): Use the BigQuery V2 Sink migration tool provided by Confluent. This is the simplest and fastest method, as it automates the transfer of configurations and offsets.

Manual migration: Set up a parallel pipeline, validate data, and manually cut over from the legacy to the V2 connector. This approach offers more granular control but requires more hands-on effort.

Prerequisites

Confluent Cloud: An active account with access to the legacy connector.

Google Cloud: A Google Cloud service account with the necessary BigQuery permissions.

Automated migration script: If you choose to use the automated script, ensure your local machine has Python 3.6 or later installed.

Using the automated migration

For a streamlined approach, use the BigQuery V2 Sink migration tool. This tool is designed to simplify the process by automating the transfer of existing offsets and topic mappings from your legacy connector configuration to the new V2 connector. The migration tool, migrate-to-bq-v2-sink.py, is available in the official Confluent GitHub repository.

Quick start

Clone or download the repository containing the script.

Run the migration tool from your local machine:

python3 migrate-to-bq-v2-sink.py --legacy_connector "<YOUR_LEGACY_CONNECTOR_NAME>" --environment "<YOUR_ENVIRONMENT_NAME>" --cluster_id "<YOUR_KAFKA_CLUSTER_ID>"

The tool walks you through the following steps:

Check V1 Sink connector status: The tool shows your connector’s current status.

If testing on dummy tables: You can keep the existing connector running.

For production tables: It’s recommended to pause the V1 connector to avoid data duplication.

Get environment details: Fetch the environment name and cluster ID from your Kafka cluster’s URL in Confluent Cloud.

Set credentials: The tool supports three methods for providing your Confluent Cloud credentials.

Environment variables: Export your email and password as environment variables. Note that the environment variables can be visible in process lists and command history.

export EMAIL="your-email@example.com"
export PASSWORD="your-password"

Credentials file (recommended): Create a JSON file with your credentials.

{
  "email": "your-email@example.com",
  "password": "your-password"
}

Secure input: Enter credentials interactively when prompted.

Follow the interactive prompts: The tool will guide you through configuring the new V2 connector, including:

Connector Name: Enter a name for the new V2 connector.

Ingestion Mode: Select a mode for ingestion. Defaults to STREAMING.

STREAMING: Lower latency, higher cost (default)

BATCH LOADING: Higher latency, lower cost

UPSERT: For upsert operations (requires key fields)

UPSERT_DELETE: For upsert and delete operations (requires key fields)

Int8/Int16 casting for Byte and Short fields: Choose FLOAT (default) or INTEGER.

Commit Interval: For BATCH LOADING mode, set an interval between 60 and 14400 seconds.

Auto Create Tables: Configure table creation (defaults to DISABLED).

DISABLED: Do not auto-create tables (default)

NON-PARTITIONED: Create tables without partitioning

PARTITION by INGESTION TIME: Create time-partitioned tables

PARTITION by FIELD: Create field-partitioned tables

Partitioning: Set partitioning type and field (if applicable).

HOUR: Partition by hour

DAY: Partition by day

MONTH: Partition by month

YEAR: Partition by year

Topic to Table Mapping: For testing purposes, you can configure topic2table.map to redirect data to different tables.

Use existing mapping: If already configured in the legacy connector.

Configure new mapping: For testing without affecting production tables. For example, my-topic:my-test-table,another-topic:another-test-table.

Skip mapping: Use default table names.

Date Time Formatter: Choose SimpleDateFormat or DateTimeFormatter.

GCP Service Account Keyfile: Provide your credentials via file path, environment variable, or direct input.

File Path: Provide the path to your JSON keyfile

Environment Variable: Set the GCP_KEYFILE_PATH environment variable

Direct Input: Paste the JSON content directly

Credentials: Choose a secure method for Confluent Cloud authentication.

Review and Confirm: The tool displays the final configuration and asks for confirmation before creating the connector.
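Two of the prompts above take free-form input that is easy to mistype: the topic2table.map string and the BATCH LOADING commit interval. The sketch below is a hypothetical pre-check you could run before answering the prompts. It follows the topic:table,topic:table format and the 60 to 14400 second range shown above; it is not part of migrate-to-bq-v2-sink.py, and the tool's own validation may differ.

```python
def parse_topic2table(mapping: str) -> dict[str, str]:
    """Parse 'topic:table,topic:table' into {topic: table}."""
    result: dict[str, str] = {}
    for pair in mapping.split(","):
        pair = pair.strip()
        if not pair:
            continue                      # tolerate a trailing comma
        topic, sep, table = pair.partition(":")
        if not sep or not topic or not table:
            raise ValueError(f"expected topic:table, got {pair!r}")
        if topic in result:
            raise ValueError(f"topic {topic!r} is mapped more than once")
        result[topic] = table
    return result


def check_commit_interval(seconds: int) -> int:
    """Range shown for the BATCH LOADING commit-interval prompt."""
    if not 60 <= seconds <= 14_400:
        raise ValueError("commit interval must be between 60 and 14400 seconds")
    return seconds


if __name__ == "__main__":
    print(parse_topic2table("my-topic:my-test-table,another-topic:another-test-table"))
    print(check_commit_interval(600))
```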

Considerations

Preserve offsets: The V2 connector starts from the latest committed offsets of your legacy connector.

Prevent data loss: The tool automatically transfers offsets to avoid data loss or duplication.

Review breaking changes: Before you migrate, review the breaking changes section.

Test first: Always test with a small dataset before migrating a production connector.

Monitor after migration: Monitor the new connector for any issues after migration.

Check connector status: The tool shows the connector status and recommends actions based on whether you’re testing or migrating production data.

Using the manual migration

Follow these steps to migrate your pipelines from the legacy connector to the V2 connector.

Understand the key changes: Review the differences in API behavior, data type handling, and configuration properties. Pay close attention to how TIMESTAMP values are handled.

Set up a parallel pipeline: Never attempt to upgrade a production connector in place. A parallel environment is essential for validation without impacting your production data flow. Create a new BigQuery Sink V2 connector in a test environment, pointing it to a new, separate BigQuery table for validation.

To directly compare fields, data types, and stored data between legacy and V2, you can continue using the same Kafka topic as your production connector.

Validate data integrity: Run the legacy and V2 connectors in parallel, writing to separate tables. Compare the data in both tables to ensure consistency.

Confirm that TIMESTAMP, DATE, and DATETIME fields are handled correctly.

Ensure all fields are mapped as NULLABLE in BigQuery, as expected.

Check for any inconsistencies with nested or complex data schemas.

Cut over to V2: After validating the V2 pipeline, perform the following:

Gracefully stop the legacy BigQuery sink connector.

Start or resume the new V2 connector from the last committed offsets of the V1 connector.

After confirming the V2 connector is running stably, decommission the legacy connector and update any monitoring systems to use the new connector.
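For the validation step, a simple comparison is to key both tables on a unique column and report missing, extra, and differing rows. The sketch below assumes you have already fetched rows from the legacy and V2 tables (for example, by querying each table) into lists of dictionaries; compare_tables and the sample rows are hypothetical. The sample shows how the TIMESTAMP change can surface as a mismatch.

```python
def compare_tables(legacy_rows, v2_rows, key):
    """Compare two row sets (lists of dicts) that share a unique key column."""
    legacy = {row[key]: row for row in legacy_rows}
    v2 = {row[key]: row for row in v2_rows}
    report = {
        "missing_in_v2": sorted(legacy.keys() - v2.keys()),
        "extra_in_v2": sorted(v2.keys() - legacy.keys()),
        "mismatched": {},
    }
    for k in sorted(legacy.keys() & v2.keys()):
        columns = legacy[k].keys() | v2[k].keys()
        diffs = {c: (legacy[k].get(c), v2[k].get(c))
                 for c in sorted(columns)
                 if legacy[k].get(c) != v2[k].get(c)}
        if diffs:
            report["mismatched"][k] = diffs
    return report


if __name__ == "__main__":
    legacy = [{"id": 1, "ts": "2023-11-14 22:13:20 UTC"},
              {"id": 2, "ts": "2023-11-15 00:00:00 UTC"}]
    v2 = [{"id": 1, "ts": "1970-01-01 00:28:20 UTC"},
          {"id": 3, "ts": "2023-11-16 00:00:00 UTC"}]
    print(compare_tables(legacy, v2, "id"))
```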

Breaking API changes

The following table lists API differences that were identified during connector development and testing. Be aware that there may be additional breaking changes that were not identified.

| BigQuery Schema Types | Legacy InsertAll API | Storage Write API (V2) |
|---|---|---|
| TIMESTAMP | Input value is considered seconds since epoch | Input value is considered microseconds since epoch |
| DATE | INT is not supported | INT in range -719162 (0001-01-01) to 2932896 (9999-12-31) is supported |
| TIMESTAMP, JSON, INTEGER, BIGNUMERIC, NUMERIC, STRING, DATE, TIME, DATETIME, BYTES | Supports most primitive data type inputs | |
| DATE, TIME, DATETIME, TIMESTAMP | Supports entire datetime canonical format | Supports a subset of the datetime canonical format |
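The TIMESTAMP row matters most in practice: the same integer lands on very different instants depending on whether it is read as seconds (legacy) or microseconds (V2). The sketch below, using hypothetical helper names, shows the effect for one value, the multiplication that keeps an epoch-seconds value equivalent, and the DATE integer bounds from the table (days since 1970-01-01). Where to apply such a conversion, for example in producer code, is left to you.

```python
from datetime import date, datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
EPOCH_DATE = date(1970, 1, 1)


def as_seconds(value: int) -> datetime:
    """Legacy InsertAll interpretation: seconds since epoch."""
    return EPOCH + timedelta(seconds=value)


def as_micros(value: int) -> datetime:
    """Storage Write API (V2) interpretation: microseconds since epoch."""
    return EPOCH + timedelta(microseconds=value)


def seconds_to_micros(value: int) -> int:
    """Scale an epoch-seconds value so V2 reads the same instant."""
    return value * 1_000_000


def date_from_days(days: int) -> date:
    """V2 DATE from an integer number of days since 1970-01-01."""
    if not -719_162 <= days <= 2_932_896:
        raise ValueError("DATE integer out of supported range")
    return EPOCH_DATE + timedelta(days=days)


if __name__ == "__main__":
    v = 1_700_000_000
    print(as_seconds(v).isoformat())   # 2023-11-14T22:13:20+00:00
    print(as_micros(v).isoformat())    # 1970-01-01T00:28:20+00:00
    print(as_micros(seconds_to_micros(v)) == as_seconds(v))   # True
    print(date_from_days(-719_162), date_from_days(2_932_896))  # 0001-01-01 9999-12-31
```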

New V2 properties

These properties provide new functionality that wasn’t available in the legacy connector.

V2 property | Default value | Description |

|---|---|---|

|

| Selects the data ingestion strategy. |

|

| Provides more granular control over table creation. Options include: |

|

| Allows switching from the default |

|

| Determines how |

Discontinued properties

The following legacy configuration properties have been removed in V2.

Discontinued legacy property | V2 behavior & equivalent |

|---|---|

| Not supported. All fields in the V2 connector are created as |

| Removed. This functionality is now part of the |

| Not user-configurable. The V2 connector uses built-in retry mechanisms with a default retry count of 10 and a wait time of 1,000 ms. |

| Removed. Datasets are specified directly in the connector configuration. |

| Removed. Its functionality for creating and updating tables is now handled by the |

Properties with changed behavior

The following configuration properties exist in both the legacy and V2 versions but have different implementations or capabilities in V2. Note the legacy to V2 mapping for the key configuration properties:

Configuration property | Legacy behavior | V2 behavior |

|---|---|---|

| Allowed automatic updates for new primitive fields only. | Supports schema updates for both primitive and complex field types (structs and arrays), making it more robust. |

| Could be set to | Removed. All fields are created as |

| Offered basic partitioning options. | These configurations offer more granular control and work in tandem with the enhanced |

| User-configurable | Not exposed in Confluent Cloud. The default behavior is |

Migration FAQs

The following frequently asked questions (FAQs) address common questions and issues encountered when migrating from the legacy connector to the BigQuery Sink V2 connector for Confluent Cloud.

Why should I migrate to the V2 connector?

The V2 connector, which uses the underlying Storage Write API, offers significant benefits:

Higher throughput: Sustains much higher data ingestion rates.

Future-proof: Aligns with Google Cloud’s official recommendation for streaming data into BigQuery.

Improved reliability: Provides better error handling and built-in retry mechanisms.

Expanded type support: Natively supports NUMERIC and BIGNUMERIC data types.

Enhanced schema evolution: More robustly handles changes to Kafka topic schemas.

Flexible write strategies: Supports multiple delivery modes, including STREAMING, BATCH LOADING, UPSERT, and UPSERT_DELETE.

What happens if I don’t migrate before the legacy connector EOL?

The legacy BigQuery streaming API is already deprecated, and in alignment with this, the legacy BigQuery connector is scheduled for EOL on March 31, 2026.

After this date:

You can no longer create new legacy BigQuery connectors.

Existing connectors may continue to function, but they will not receive any updates or fixes. To ensure long-term stability and support, migrate to the BigQuery Sink V2 connector as soon as possible.

Are these two connectors just different versions of the same software?

No. They are two completely different connectors from an architectural standpoint. The legacy connector is built on BigQuery's old InsertAll API, while the V2 connector was written from the ground up to leverage the modern BigQuery Storage Write API. This fundamental difference in the underlying technology is why a direct, in-place upgrade is not possible and requires a parallel migration strategy.

Is there a price difference between the legacy and V2 connectors?

No, Confluent prices both the legacy and V2 connectors the same.

Are there any changes to my Google Cloud costs?

While Confluent’s pricing for the connector remains the same, the underlying Google Cloud APIs have different pricing models. The legacy InsertAll API and the new Storage Write API are billed differently by Google. You should review Google Cloud’s BigQuery pricing documentation to estimate the cost impact for your specific workload.

Do I need to stop my legacy connector before starting V2 connector?

No. During the validation phase, you should run them in parallel. However, once you cut over to the V2 connector for production traffic, you must stop the legacy connector to prevent duplicate data writes.

Will my old offsets work with the V2 connector?

Yes, but you should not reuse them manually. Offsets are stored per partition and can be reused by the V2 connector to ensure continuity. The BigQuery V2 Sink migration tool leverages these offsets so the V2 connector starts from the last committed offset of each partition, avoiding skipped data or duplication. You should always use the migration tool to correctly map offsets.

Can I use the BigQuery V2 Sink migration tool mentioned in the docs?

Yes. Confluent provides a BigQuery V2 Sink migration tool to automate the migration process for users looking for a more automated approach. However, we still strongly recommend that you understand the manual steps and perform thorough validation before switching production workloads.

Does the connector support a single topic with multiple schemas to write to multiple tables?

No. The BigQuery V2 Sink connector does not support splitting data from a single Kafka topic into multiple BigQuery tables based on different schemas. Each Kafka topic maps to one dedicated BigQuery table. However, a single connector instance can consume from multiple Kafka topics, with each topic mapping to its own respective table and schema.

Is the allow.schema.unionization configuration property available in the V2 connector?

No. The allow.schema.unionization property is removed in V2. In the V1 connector, you needed this property enabled to update complex fields (structs and arrays). In the V2 connector, the auto.update.schemas property handles schema updates for both primitive and complex field types.

Why are all fields mapped as NULLABLE in V2?

The V2 connector uses this design to ensure maximum compatibility and prevent load failures that occur when a source record is missing an optional field. Unlike the legacy connector, which offered the all.bq.fields.nullable property, V2 does not currently offer a configuration to override this behavior. If you require a field to be REQUIRED, manually update the table schema in the BigQuery UI after the table is created.

Why is the convert.double.special.values configuration not available in BigQuery Sink V2?

The V2 connector supports the convert.double.special.values configuration internally, but it is not exposed in Confluent Cloud. The default value is false, which means non-finite double values (NaN, +Infinity, -Infinity) are passed through as-is and are not converted to maximum (Double.MAX_VALUE) or minimum values (Double.MIN_VALUE).

Does the BigQuery V2 connector require users to include _CHANGE_TYPE in Kafka records?

No. In UPSERT_DELETE mode, the connector automatically interprets a record containing only primary key fields (with all other fields as null) as a delete operation. The connector’s backend then internally sets _CHANGE_TYPE=DELETE before applying the change in BigQuery.
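The documented rule can be modeled in a few lines. The sketch below classifies a record value as a delete when it is a tombstone (null value) or when every non-key field is null, and as an upsert otherwise. It is a model of the behavior described above, not the connector's code; change_type and its arguments are hypothetical.

```python
from typing import Optional


def change_type(value: Optional[dict], key_fields: set) -> str:
    """Model of the documented UPSERT_DELETE rule (not the connector's code)."""
    if value is None:
        return "DELETE"   # tombstone: the record value is null
    non_key = [v for k, v in value.items() if k not in key_fields]
    if all(v is None for v in non_key):
        return "DELETE"   # only the primary key fields carry values
    return "UPSERT"


if __name__ == "__main__":
    print(change_type({"id": 7, "name": None, "email": None}, {"id"}))  # DELETE
    print(change_type({"id": 7, "name": "Ada", "email": None}, {"id"}))  # UPSERT
```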

Will there be a V2 connector for self-managed Confluent Platform?

At this time, there are no plans to release a self-managed V2 connector. We are currently evaluating customer demand. If you need this capability, submit a Feature Request through your Confluent account team so we can track and prioritize interest.

Configuration Properties

Use the following configuration properties with this connector.

Which topics do you want to get data from?

topics.regex

A regular expression that matches the names of the topics to consume from. This is useful when you want to consume from multiple topics that match a certain pattern without having to list them all individually.

Type: string

Importance: low

topics

Identifies the topic name or a comma-separated list of topic names.

Type: list

Importance: high

errors.deadletterqueue.topic.name

The name of the topic to use as the dead letter queue (DLQ) for messages that result in an error when processed by this sink connector, or by its transformations or converters. Defaults to dlq-${connector} if not set. The DLQ topic is created automatically if it does not exist. You can include ${connector} in the value as a placeholder for the logical cluster ID.

Type: string

Default: dlq-${connector}

Importance: low
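Because the ${connector} placeholder uses the same ${name} syntax as Python's string.Template, the expansion can be sketched as follows. This only illustrates the substitution, which the connector performs itself; the helper name and the ID lcc-abc123 are made up.

```python
from string import Template


def resolve_dlq_topic(template: str, logical_id: str) -> str:
    """Substitute the ${connector} placeholder (hypothetical helper)."""
    return Template(template).substitute(connector=logical_id)


# "lcc-abc123" is a made-up logical ID used only for illustration.
print(resolve_dlq_topic("dlq-${connector}", "lcc-abc123"))  # dlq-lcc-abc123
```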

Schema Config

schema.context.name

Add a schema context name. A schema context represents an independent scope in Schema Registry. It is a separate sub-schema tied to topics in different Kafka clusters that share the same Schema Registry instance. If not used, the connector uses the default schema configured for Schema Registry in your Confluent Cloud environment.

Type: string

Default: default

Importance: medium

Input messages

input.key.format

Sets the input Kafka record key format. Valid entries are AVRO, BYTES, JSON, JSON_SR, PROTOBUF, and STRING. Note that you need to have Confluent Cloud Schema Registry configured if using a schema-based message format like AVRO, JSON_SR, or PROTOBUF.

Type: string

Default: BYTES

Valid Values: AVRO, BYTES, JSON, JSON_SR, PROTOBUF, STRING

Importance: high

input.data.format

Sets the input Kafka record value format. Valid entries are AVRO, JSON_SR, PROTOBUF, and JSON. Note that you need to have Confluent Cloud Schema Registry configured if using a schema-based message format like AVRO, JSON_SR, or PROTOBUF.

Type: string

Default: JSON

Importance: high
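The Schema Registry requirement for input.key.format and input.data.format can be expressed as a small Python check. The helper name is hypothetical; its defaults mirror the documented defaults (BYTES for keys, JSON for values).

```python
SCHEMA_BASED_FORMATS = {"AVRO", "JSON_SR", "PROTOBUF"}


def requires_schema_registry(key_format: str = "BYTES", data_format: str = "JSON") -> bool:
    """True if either the key or the value format needs Schema Registry (hypothetical helper)."""
    return (
        key_format.upper() in SCHEMA_BASED_FORMATS
        or data_format.upper() in SCHEMA_BASED_FORMATS
    )


print(requires_schema_registry())                    # False
print(requires_schema_registry(data_format="AVRO"))  # True
```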

How should we connect to your data?

name

Sets a name for your connector.

Type: string

Valid Values: A string at most 64 characters long

Importance: high

Kafka Cluster credentials

kafka.auth.mode

Kafka authentication mode. It can be one of KAFKA_API_KEY or SERVICE_ACCOUNT. It defaults to KAFKA_API_KEY mode whenever possible.

Type: string

Valid Values: SERVICE_ACCOUNT, KAFKA_API_KEY

Importance: high

kafka.api.key

Kafka API key. Required when kafka.auth.mode==KAFKA_API_KEY.

Type: password

Importance: high

kafka.service.account.id

The service account that is used to generate the API keys to communicate with the Kafka cluster.

Type: string

Importance: high

kafka.api.secret

Secret associated with the Kafka API key. Required when kafka.auth.mode==KAFKA_API_KEY.

Type: password

Importance: high
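The credential requirements above can be summarized as a pre-flight check in Python. This is a hypothetical helper, not part of any Confluent API. It assumes that an absent kafka.auth.mode means KAFKA_API_KEY and that SERVICE_ACCOUNT mode needs kafka.service.account.id; the text above states the API key and secret requirements explicitly but only implies the latter.

```python
from typing import Dict, List


def validate_kafka_auth(config: Dict[str, str]) -> List[str]:
    """Hypothetical pre-flight check of the Kafka credential properties."""
    # Assumption: an absent kafka.auth.mode is treated as KAFKA_API_KEY.
    mode = config.get("kafka.auth.mode", "KAFKA_API_KEY")
    problems: List[str] = []
    if mode == "KAFKA_API_KEY":
        for prop in ("kafka.api.key", "kafka.api.secret"):
            if not config.get(prop):
                problems.append(f"{prop} is required when kafka.auth.mode=KAFKA_API_KEY")
    elif mode == "SERVICE_ACCOUNT":
        # Assumption: service-account mode needs kafka.service.account.id.
        if not config.get("kafka.service.account.id"):
            problems.append("kafka.service.account.id is required when kafka.auth.mode=SERVICE_ACCOUNT")
    else:
        problems.append(f"unsupported kafka.auth.mode: {mode}")
    return problems


print(validate_kafka_auth({"kafka.api.key": "<key>"}))
# ['kafka.api.secret is required when kafka.auth.mode=KAFKA_API_KEY']
```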

GCP credentials

provider.integration.id

Select an existing integration that has access to your resource. If you need to integrate a new Google service account, use provider integration.

Type: string

Importance: high

authentication.method

Select how you want to authenticate with BigQuery.

Type: string

Default: Google cloud service account

Importance: high

keyfile

GCP service account JSON file with write permissions for BigQuery.

Type: password

Importance: high

oauth.client.id

Client ID of your Google OAuth application.

Type: string

Importance: high

oauth.client.secret

Client secret of your Google OAuth application.

Type: password

Importance: high

oauth.refresh.token

OAuth 2.0 refresh token for BigQuery.

Type: password

Importance: high

BigQuery details

project

ID for the GCP project where BigQuery is located.

Type: string

Importance: high

datasets

Name of the BigQuery dataset where the table or tables are located.

Type: string

Importance: high

Ingestion Mode details

ingestion.mode

Select a mode to ingest data into the table. Select STREAMING for reduced latency. Select BATCH LOADING for cost savings. Select UPSERT for upserting records. Select UPSERT_DELETE for upserting and deleting records.

Type: string

Default: STREAMING

Importance: high

Insertion and DDL support

commit.interval

The interval, in seconds, at which the connector attempts to commit streamed records. Set the interval between 60 seconds (1 minute) and 14,400 seconds (4 hours). Be careful when setting the commit interval: on every commit interval, each task calls the CreateWriteStream API, which is subject to a quota (https://cloud.google.com/bigquery/quotas#write-api-limits:~:text=handle%20unexpected%20demand.-,CreateWriteStream,-requests). For example, if five tasks (which may belong to different connectors) commit to the same project every 60 seconds, that project receives five CreateWriteStream calls every minute. If the count exceeds the allowed quota, some tasks may fail.

Type: int

Default: 60

Valid Values: [60,…,14400]

Importance: high
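The quota arithmetic from the commit.interval example can be written as a small Python function. It assumes, as the description states, one CreateWriteStream call per task per commit interval; the function name is hypothetical, and the quota itself is not hard-coded because its value is defined on the linked Google Cloud page.

```python
def create_write_stream_calls_per_minute(tasks: int, commit_interval_s: int) -> float:
    """Approximate CreateWriteStream calls per minute against one project.

    Assumes each task calls CreateWriteStream once per commit interval.
    """
    if not 60 <= commit_interval_s <= 14_400:
        raise ValueError("commit.interval must be between 60 and 14400 seconds")
    return tasks * 60 / commit_interval_s


print(create_write_stream_calls_per_minute(5, 60))   # 5.0
print(create_write_stream_calls_per_minute(5, 300))  # 1.0
```

Raising the interval from 60 to 300 seconds cuts the call rate for the same five tasks by a factor of five, at the cost of records becoming visible less often.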

topic2table.map

Map of topics to tables (optional). The required format is comma-separated tuples, for example, <topic-1>:<table-1>,<topic-2>:<table-2>,… Note that a topic name must not be modified using a regex SMT while using this option. If this property is used, sanitize.topics is ignored. Also, if the topic-to-table map doesn't contain the topic for a record, the connector creates a table with the same name as the topic name.

Type: string

Default: “”

Importance: medium
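A minimal Python sketch of how a topic2table.map value could be parsed, including the fallback to a table named after the topic. The helper names are hypothetical, and rejecting malformed entries with ValueError is an assumption; the connector's own validation may differ.

```python
from typing import Dict


def parse_topic2table_map(value: str) -> Dict[str, str]:
    """Parse 'topic-1:table-1,topic-2:table-2' into a dict (hypothetical helper)."""
    mapping: Dict[str, str] = {}
    for entry in filter(None, (part.strip() for part in value.split(","))):
        topic, sep, table = entry.partition(":")
        if not sep or not topic or not table:
            raise ValueError(f"malformed topic2table.map entry: {entry!r}")
        mapping[topic] = table
    return mapping


def table_for(topic: str, mapping: Dict[str, str]) -> str:
    # Topics missing from the map fall back to a table named after the topic.
    return mapping.get(topic, topic)


m = parse_topic2table_map("orders:orders_tbl,payments:payments_tbl")
print(table_for("orders", m))   # orders_tbl
print(table_for("refunds", m))  # refunds
```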

sanitize.topics

Designates whether to automatically sanitize topic names before using them as table names in BigQuery. If not enabled, topic names are used as table names.

Type: boolean

Default: true

Importance: high

sanitize.field.names

Whether to automatically sanitize field names before using them as field names in BigQuery. BigQuery specifies that field names can only contain letters, numbers, and underscores. The sanitizer replaces invalid symbols with underscores. If the field name starts with a digit, the sanitizer adds an underscore in front of the field name. Caution: Key duplication errors can occur if different fields are named, for instance, a.b and a_b. After being sanitized, the field names a.b and a_b have the same value.

Type: boolean

Default: false

Importance: high
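The sanitizing rules described for sanitize.field.names can be approximated in Python as follows. The sketch assumes ASCII letters only and is not the connector's code; the function name is hypothetical. The last line reproduces the a.b versus a_b collision mentioned in the caution.

```python
import re


def sanitize_field_name(name: str) -> str:
    """Approximate the sanitizer described for sanitize.field.names (hypothetical helper)."""
    # Replace every character that is not an ASCII letter, digit, or underscore.
    sanitized = re.sub(r"[^A-Za-z0-9_]", "_", name)
    # Field names must not start with a digit, so prefix an underscore.
    if sanitized[:1].isdigit():
        sanitized = "_" + sanitized
    return sanitized


print(sanitize_field_name("order.total"))  # order_total
print(sanitize_field_name("1st-item"))     # _1st_item
print(sanitize_field_name("a.b") == sanitize_field_name("a_b"))  # True: the names collide
```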

auto.create.tables

Designates whether or not to automatically create BigQuery tables. Note: Supports the AVRO, JSON_SR, and PROTOBUF message formats only.

Type: string

Default: DISABLED

Importance: high

auto.update.schemas

Designates whether or not to automatically update BigQuery schemas. New fields in record schemas must be nullable. Note: Supports the AVRO, JSON_SR, and PROTOBUF message formats only.

Type: string

Default: DISABLED

Importance: high

sanitize.field.names.in.array

Whether to automatically sanitize field names inside arrays. When enabled, field names inside arrays are also sanitized according to BigQuery naming rules. This setting only takes effect if ‘sanitize.field.names’ is also enabled.

Type: boolean

Default: false

Importance: medium

partitioning.type

The time partitioning type to use when creating new partitioned tables. Existing tables are not altered to use this partitioning type.

Type: string

Default: DAY

Importance: low

timestamp.partition.field.name

The name of the field in the value that contains the timestamp to partition by in BigQuery. This also enables timestamp partitioning for each table.

Type: string

Importance: low

use.change.sequence.number

Enables the _CHANGE_SEQUENCE_NUMBER pseudo-column for CDC operations to ensure correct record ordering in upsert mode. For identical primary keys, the record with the higher sequence number takes precedence. Because _CHANGE_SEQUENCE_NUMBER requires hexadecimal values, enable the hexadecimal converter if your source data produces decimal values. For more information, see _CHANGE_SEQUENCE_NUMBER in CDC mode.

Type: boolean

Default: false

Importance: medium

use.hexadecimal.converter.for.change.sequence.number

Only applies when use.change.sequence.number is enabled. Converts the _CHANGE_SEQUENCE_NUMBER string field from decimal to hexadecimal format, as required by BigQuery. Expects decimal numeric values as strings (for example, “255” converts to “FF”). Supports multi-part sequence numbers separated by forward slashes where each part is converted independently (for example, “100/200/300” becomes “64/C8/12C”). Supports a maximum of four parts with a maximum of 16 hex characters per part. Non-numeric values are sent to the DLQ, if configured. For more information, see Hexadecimal converter.

Type: boolean

Default: false

Importance: low
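The conversion rules for use.hexadecimal.converter.for.change.sequence.number can be checked with a short Python sketch. It is not the connector's code: the function name is hypothetical, raising ValueError stands in for routing the record to the DLQ, and the output uses uppercase digits without leading zeros to match the examples above.

```python
import re

MAX_PARTS = 4        # at most four slash-separated parts
MAX_HEX_CHARS = 16   # at most 16 hex characters per part


def to_hex_sequence_number(value: str) -> str:
    """Convert a decimal _CHANGE_SEQUENCE_NUMBER string to hexadecimal.

    Each slash-separated part is converted independently. A ValueError stands
    in for the connector sending the record to the DLQ.
    """
    parts = value.split("/")
    if len(parts) > MAX_PARTS:
        raise ValueError(f"more than {MAX_PARTS} parts: {value!r}")
    hex_parts = []
    for part in parts:
        if not re.fullmatch(r"[0-9]+", part):
            raise ValueError(f"non-numeric part {part!r} in {value!r}")
        hex_part = format(int(part), "X")  # uppercase, no leading zeros
        if len(hex_part) > MAX_HEX_CHARS:
            raise ValueError(f"part {part!r} needs more than {MAX_HEX_CHARS} hex characters")
        hex_parts.append(hex_part)
    return "/".join(hex_parts)


print(to_hex_sequence_number("255"))          # FF
print(to_hex_sequence_number("100/200/300"))  # 64/C8/12C
```

With the 16-character limit, the largest value a single part can hold is 2^64 - 1 (FFFFFFFFFFFFFFFF).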

use.date.time.formatter

Specifies whether to use a DateTimeFormatter to support a wide range of epochs. Setting this to true uses DateTimeFormatter instead of the default SimpleDateFormat. The output might vary for the same input between the two formatters.

Type: boolean

Default: false

Importance: high

topic2clustering.fields.map

Maps topics to their corresponding table clustering fields (optional). Format: comma-separated tuples, e.g., topic1:[col1|col2], topic2:[col1|col2|..], … Specifies the fields used to cluster data in BigQuery. The order of the fields determines the clustering precedence.

Type: string

Default: “”

Importance: low
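A Python sketch of how a topic2clustering.fields.map value could be parsed while keeping the field order, which determines clustering precedence. The helper name and the regex are assumptions for illustration, not the connector's parser.

```python
import re
from typing import Dict, List

# One entry looks like: topic:[field1|field2|...]
_ENTRY = re.compile(r"\s*([^:\s]+)\s*:\s*\[([^\]]*)\]\s*")


def parse_topic2clustering_map(value: str) -> Dict[str, List[str]]:
    """Parse 'topic1:[col1|col2],topic2:[col3]' into {topic: [fields...]} (hypothetical helper)."""
    result: Dict[str, List[str]] = {}
    if not value.strip():
        return result
    for entry in value.split(","):
        match = _ENTRY.fullmatch(entry)
        if match is None:
            raise ValueError(f"malformed entry: {entry!r}")
        topic, fields = match.groups()
        # Keep the original order: the first field has the highest clustering precedence.
        result[topic] = [f.strip() for f in fields.split("|") if f.strip()]
    return result


print(parse_topic2clustering_map("orders:[region|customer_id],payments:[currency]"))
# {'orders': ['region', 'customer_id'], 'payments': ['currency']}
```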

use.integer.for.int8.int16

Determines how the connector stores INT8 (BYTE) and INT16 (SHORT) data types in BigQuery during auto table creation and schema update. When set to false (default), these values are stored as FLOAT. When set to true, INT8 (BYTE) and INT16 (SHORT) values are stored as INTEGER.

Type: boolean

Default: false

Importance: low

Consumer configuration

max.poll.interval.ms

The maximum delay between subsequent consume requests to Kafka. This configuration property may be used to improve the performance of the connector if the connector cannot send records to the sink system. Defaults to 300000 milliseconds (5 minutes).

Type: long

Default: 300000 (5 minutes)

Valid Values: [60000,…,1800000] for non-dedicated clusters and [60000,…] for dedicated clusters

Importance: low

max.poll.records

The maximum number of records to consume from Kafka in a single request. This configuration property may be used to improve the performance of the connector if the connector cannot send records to the sink system. Defaults to 500 records.

Type: long

Default: 500

Valid Values: [1,…,500] for non-dedicated clusters and [1,…] for dedicated clusters

Importance: low

Number of tasks for this connector

tasks.max

Maximum number of tasks for the connector.

Type: int

Valid Values: [1,…]

Importance: high

Additional Configs

consumer.override.auto.offset.reset

Defines the behavior of the consumer when there is no committed position (which occurs when the group is first initialized) or when an offset is out of range. You can choose either to reset the position to the “earliest” offset (the default) or the “latest” offset. You can also select “none” if you would rather set the initial offset yourself and you are willing to handle out of range errors manually. More details: https://docs.confluent.io/platform/current/installation/configuration/consumer-configs.html#auto-offset-reset

Type: string

Importance: low

consumer.override.isolation.level

Controls how to read messages written transactionally. If set to read_committed, consumer.poll() will only return transactional messages which have been committed. If set to read_uncommitted (the default), consumer.poll() will return all messages, even transactional messages which have been aborted. Non-transactional messages will be returned unconditionally in either mode. More details: https://docs.confluent.io/platform/current/installation/configuration/consumer-configs.html#isolation-level

Type: string

Importance: low

header.converter

The converter class for the headers. This is used to serialize and deserialize the headers of the messages.

Type: string

Importance: low

key.converter.use.schema.guid

The schema GUID to use for deserialization when using ConfigSchemaIdDeserializer. This allows you to specify a fixed schema GUID to be used for deserializing message keys. Only applicable when key.converter.key.schema.id.deserializer is set to ConfigSchemaIdDeserializer.

Type: string

Importance: low

key.converter.use.schema.id

The schema ID to use for deserialization when using ConfigSchemaIdDeserializer. This allows you to specify a fixed schema ID to be used for deserializing message keys. Only applicable when key.converter.key.schema.id.deserializer is set to ConfigSchemaIdDeserializer.

Type: int

Importance: low

value.converter.allow.optional.map.keys

Allow optional string map keys when converting from Connect Schema to Avro Schema. Applicable for Avro converters.

Type: boolean

Importance: low

value.converter.auto.register.schemas

Specifies whether the serializer should attempt to register the schema.

Type: boolean

Importance: low