Manage Service Accounts for Connectors in Confluent Cloud

All Confluent Cloud connectors require credentials to allow the connector to operate and access Kafka. You can either create and use an API key and secret or use a service account.

When you create a service account, you configure access control list (ACL) DESCRIBE, CREATE, READ, and WRITE access to topics and create the API key and secret. Once the service account is created, a user creating a connector can select the service account ID when configuring the connector.

Important

A connector configuration must include either an API key and secret or a service account ID. For additional Confluent Cloud service account information, see Service Accounts on Confluent Cloud.

Create a service account using the Confluent Cloud Console

When you create a new connector using the Cloud Console, you have the option to select an existing service account or create a new one. Complete the following steps to create a new service account while creating a new connector. For additional Confluent Cloud service account information, see Service Accounts on Confluent Cloud.

Select your new connector from the Connector Plugins screen and, if applicable, select an existing topic or create a new topic.

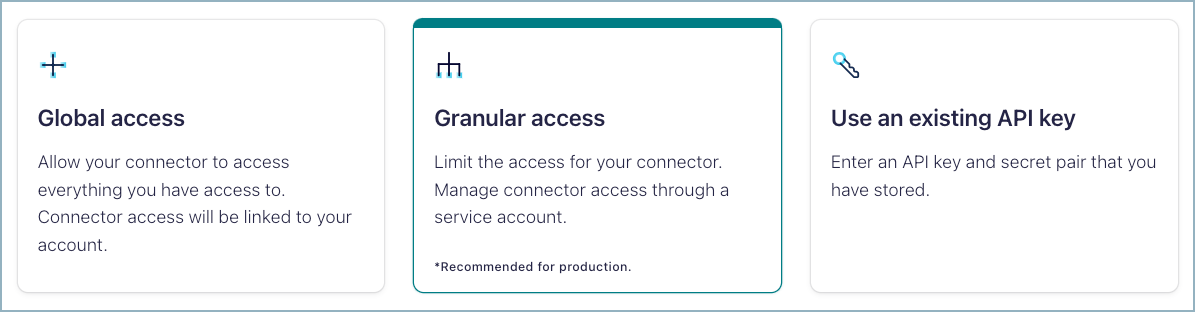

Click Service account on the Kafka credentials screen.

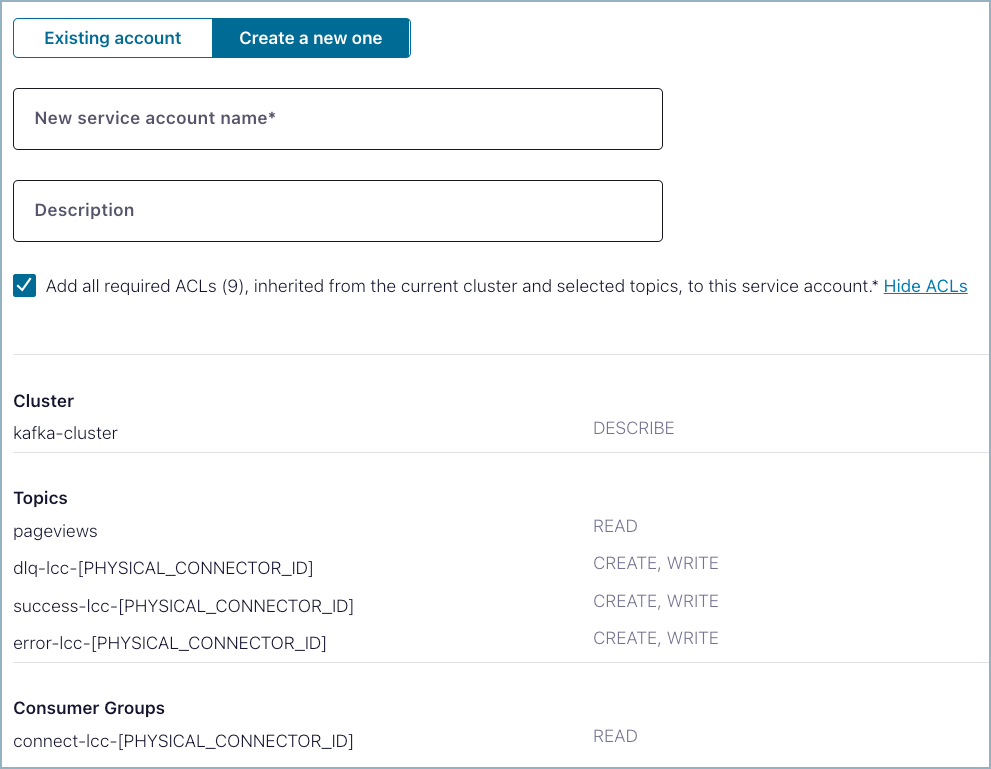

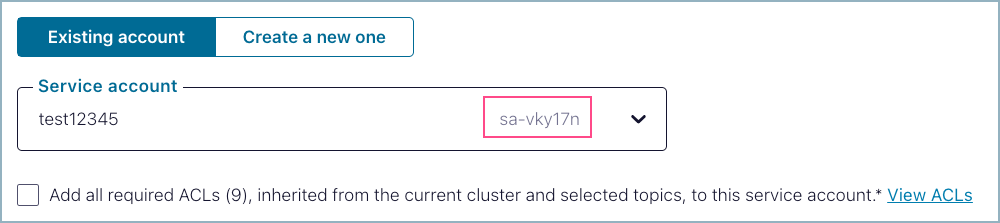

You can select an existing service account or create a new one. Click Create a new one. Create a name and description for the service account.

Click Add all required ACLs… to be sure that the connector can read, create, and write to any topics it may require for operation.

Click Continue and configure the connector. Your new service account ID is similar to the ID sa-vky17n highlighted below.

Create a service account using the Confluent CLI

The following examples show how to set up a service account using the Confluent CLI. These steps can be used for a cluster running on any cloud provider.

Sink connector service account

This example assumes the following:

You have a Kafka cluster with cluster ID lkc-abcd123.

You want the sink connector to read from a topic named pageviews.

Use the following example steps to create a service account, set ACLs, and add the API key and secret.

Note

The following steps show basic ACL entries for sink connector service accounts. Be sure to review the Sink connector SUCCESS and ERROR topics and Sink connector offset management sections for additional ACL entries that may be required for certain connectors or tasks.

Create a service account named myserviceaccount:

confluent iam service-account create myserviceaccount --description "test service account"

Find the service account ID for myserviceaccount:

confluent iam service-account list

Set a DESCRIBE ACL to the cluster.

confluent kafka acl create --allow --service-account "<service-account-id>" --operations describe --cluster-scope

Set a READ ACL to pageviews:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations read --topic pageviews

Set a CREATE ACL to the following topic prefix:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations create --prefix --topic "dlq-lcc-"

Set a WRITE ACL to the following topic prefix:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations write --prefix --topic "dlq-lcc-"

Set a READ ACL to a consumer group with the following prefix:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations read --prefix --consumer-group "connect-lcc-"

Create a Kafka API key and secret for <service-account-id>:

confluent api-key create --resource "lkc-abcd123" --service-account "<service-account-id>"

Save the API key and secret.

The connector configuration must include either an API key and secret or a service account ID. For additional service account information, see Service Accounts on Confluent Cloud.
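The sink connector steps above can be collected into one small helper. The following is a minimal dry-run sketch: it only prints the commands so they can be reviewed before anything is run. The function name sink_connector_commands and the sample service account ID sa-123456 are hypothetical; the cluster ID and topic name come from the example assumptions above.

```shell
# Dry run: prints the commands from the steps above instead of executing them.
# The function name and the sample service account ID are hypothetical.
sink_connector_commands() {
  # $1 = service account ID, $2 = Kafka cluster ID, $3 = topic the connector reads
  acl="confluent kafka acl create --allow --service-account \"$1\""
  printf '%s\n' \
    "$acl --operations describe --cluster-scope" \
    "$acl --operations read --topic \"$3\"" \
    "$acl --operations create --prefix --topic \"dlq-lcc-\"" \
    "$acl --operations write --prefix --topic \"dlq-lcc-\"" \
    "$acl --operations read --prefix --consumer-group \"connect-lcc-\"" \
    "confluent api-key create --resource \"$2\" --service-account \"$1\""
}

sink_connector_commands "sa-123456" "lkc-abcd123" "pageviews"
```

Printing rather than executing keeps the sketch safe to try; once the output looks right, each line can be pasted into a terminal where the Confluent CLI is logged in.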

Source connector service account

This example assumes the following:

You have a Kafka cluster with cluster ID lkc-abcd123.

You want the source connector to write to a topic named passengers.

Use the following example steps to create a service account, set ACLs, and add the API key and secret.

Note

The following steps show basic ACL entries for source connector service accounts. Make sure to review Debezium [Legacy] Source Connectors and JDBC-based Source Connectors and the MongoDB Atlas Source Connector for additional ACL entries that may be required for certain connectors.

Create a service account named myserviceaccount:

confluent iam service-account create myserviceaccount --description "test service account"

Find the service account ID for myserviceaccount:

confluent iam service-account list

Set a DESCRIBE ACL to the cluster.

confluent kafka acl create --allow --service-account "<service-account-id>" --operations describe --cluster-scope

Set a WRITE ACL to passengers:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations write --topic "passengers"

Create a Kafka API key and secret for <service-account-id>:

confluent api-key create --resource "lkc-abcd123" --service-account "<service-account-id>"

Save the API key and secret.

The connector configuration must include either an API key and secret or a service account ID. For additional service account information, see Service Accounts on Confluent Cloud.

Additional ACL entries

Certain connectors require additional ACL entries.

Debezium [Legacy] Source Connectors

The Source connector service account section provides basic ACL entries for source connector service accounts. Debezium [Legacy] Source connectors require additional ACL entries. Add the following ACL entries for Debezium [Legacy] Source connectors:

ACLs to create and write to table-related topics prefixed with <database.server.name>. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations create --prefix --topic "<database.server.name>"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations write --prefix --topic "<database.server.name>"

ACLs to describe configurations at the cluster scope level. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --cluster-scope --operations describe

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --cluster-scope --operations describe_configs

The Debezium MySQL CDC Source (Debezium) [Legacy] and the Debezium Microsoft SQL Source (Debezium) [Legacy] connectors require the following additional ACL entries:

ACLs to read, create, and write to database history topics prefixed with dbhistory.<database.server.name>.lcc-. For example, the server name is cdc in the configuration property "database.server.name": "cdc". Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations read --prefix --topic "dbhistory.<database.server.name>.lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations create --prefix --topic "dbhistory.<database.server.name>.lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations write --prefix --topic "dbhistory.<database.server.name>.lcc-"

ACLs to read the database history consumer group named <database.server.name>-dbhistory. For example, the server name is cdc in the configuration property "database.server.name": "cdc". Use the following command to set this ACL:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations "read" --consumer-group "<database.server.name>-dbhistory"

Debezium V2 Source Connectors

The Source connector service account section provides basic ACL entries for source connector service accounts. Debezium V2 Source connectors require additional ACL entries. Add the following ACL entries for Debezium V2 Source connectors:

ACLs to create and write to table-related topics prefixed with <topic.prefix>. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations create --prefix --topic "<topic.prefix>"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations write --prefix --topic "<topic.prefix>"

ACLs to describe configurations at the cluster scope. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --cluster-scope --operations describe

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --cluster-scope --operations describe_configs

The Debezium MySQL CDC Source V2 (Debezium), Debezium Microsoft SQL Source V2 (Debezium), and the MariaDB CDC Source (Debezium) connectors require the following additional ACL entries:

ACLs to read, create, and write to schema history topics prefixed with dbhistory.<topic.prefix>.lcc-. For example, the prefix value is cdc in the configuration property "topic.prefix": "cdc". Use the following commands to set these ACLs:

Note

These steps are applicable only when no custom value is specified for the configuration Database schema history topic name in Confluent Cloud Console or schema.history.internal.kafka.topic in the Confluent CLI. If a value is specified, replace the --topic parameter with the specified value.

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations read --prefix --topic "dbhistory.<topic.prefix>.lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations create --prefix --topic "dbhistory.<topic.prefix>.lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations write --prefix --topic "dbhistory.<topic.prefix>.lcc-"

ACLs to read the schema history consumer group named <topic.prefix>-schemahistory. For example, the prefix value is cdc in the configuration property "topic.prefix": "cdc". Use the following command to set this ACL:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations "read" --consumer-group "<topic.prefix>-schemahistory"

ACLs to read, create, and write to heartbeat topics prefixed with __debezium-heartbeat-lcc-. Use the following commands to set these ACLs if heartbeats are enabled for the connector using the heartbeat.interval.ms configuration property:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations read --prefix --topic "__debezium-heartbeat-lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations create --prefix --topic "__debezium-heartbeat-lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations write --prefix --topic "__debezium-heartbeat-lcc-"
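To make the naming in this section concrete, the sketch below derives the schema history topic prefix and the consumer group name from a topic.prefix value and prints the matching commands without running them. It assumes the default schema history topic name is in use, that is, no custom schema.history.internal.kafka.topic value. The function name and the sample service account ID are hypothetical.

```shell
# Dry run: prints commands only. The function name and sample ID are hypothetical.
debezium_v2_history_commands() {
  # $1 = service account ID, $2 = value of topic.prefix
  history_prefix="dbhistory.$2.lcc-"   # default schema history topic prefix
  group="$2-schemahistory"             # schema history consumer group
  for op in read create write; do
    printf '%s\n' "confluent kafka acl create --allow --service-account \"$1\" --operations $op --prefix --topic \"$history_prefix\""
  done
  printf '%s\n' "confluent kafka acl create --allow --service-account \"$1\" --operations read --consumer-group \"$group\""
}

debezium_v2_history_commands "sa-123456" "cdc"
```

With topic.prefix set to cdc, the printed commands target the topic prefix dbhistory.cdc.lcc- and the consumer group cdc-schemahistory, matching the example values used in the text.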

JDBC-based Source Connectors and the MongoDB Atlas Source Connector

The Source connector service account section provides basic ACL entries for source connector service accounts. Several source connectors allow a topic prefix. When a prefix is used and the following connectors are created using the CLI or API, you need to add ACL entries.

Microsoft SQL Server Source (JDBC) Connector for Confluent Cloud

Get Started with the MongoDB Atlas Source Connector for Confluent Cloud

Add the following ACL entries for these source connectors:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations create --prefix --topic "<topic.prefix>"

confluent kafka acl create --allow --service-account "<service-account-id>" --operations write --prefix --topic "<topic.prefix>"

Oracle CDC Source connector

To access redo log topics, you must grant the connector a corresponding operation–that is, CREATE, READ, or WRITE in an ACL. The default redo log topic for the Oracle CDC Source connector is ${connectorName}-${databaseName}-redo-log. When this topic is created by the connector, it appends the lcc- prefix.

Add the following ACL entries for Redo Log topic access:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations create --prefix --topic "lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" --operations read --prefix --topic "lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" --operations write --prefix --topic "lcc-"

Add the following ACL entry for Consumer Group READ access:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations read --prefix --consumer-group "lcc-"

If you set the following configuration properties, you need to set ACLs for the resulting output topics:

table.topic.name.template for table-specific topics.

lob.topic.name.template for LOB objects.

redo.log-corruption.topic for corrupted redo log records.

For these output topics, you must grant the connector either CREATE or WRITE. When granted READ, WRITE, or DELETE, the connector implicitly derives the DESCRIBE operation.

Oracle XStream CDC Source connector

The Source connector service account section provides basic ACL entries for source connector service accounts. The Oracle XStream CDC Source connector requires additional ACL entries. Add the following ACL entries for the Oracle XStream CDC Source connector:

ACLs to create and write to change event topics prefixed with <topic.prefix>. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations create --prefix --topic "<topic.prefix>"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations write --prefix --topic "<topic.prefix>"

ACLs to describe configurations at the cluster scope level. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --cluster-scope --operations describe

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --cluster-scope --operations describe_configs

ACLs to read, create, and write to schema history topics prefixed with __orcl-schema-changes.<topic.prefix>.lcc-. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations read --prefix --topic "__orcl-schema-changes.<topic.prefix>.lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations create --prefix --topic "__orcl-schema-changes.<topic.prefix>.lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations write --prefix --topic "__orcl-schema-changes.<topic.prefix>.lcc-"

ACLs to read the schema history consumer group named <topic.prefix>-schemahistory. Use the following command to set this ACL:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations "read" --consumer-group "<topic.prefix>-schemahistory"

The following additional ACL entries are required if heartbeats are enabled for the connector using the heartbeat.interval.ms configuration property.

ACLs to read, create, and write to heartbeat topics prefixed with __orcl-heartbeat.lcc-. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations read --prefix --topic "__orcl-heartbeat.lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations create --prefix --topic "__orcl-heartbeat.lcc-"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations write --prefix --topic "__orcl-heartbeat.lcc-"

The following additional ACL entries are required if signaling using Kafka topic is enabled and configured for the connector using the signal.enabled.channels and signal.kafka.topic configuration properties.

ACLs to read from the signaling topic. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations read --topic "<signal.kafka.topic>"

ACLs to read the Kafka signaling consumer group named kafka-signal. Use the following command to set this ACL:

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations "read" --consumer-group "kafka-signal"
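Because the heartbeat and signaling ACLs are only needed when those features are enabled, it can help to generate them conditionally. The following dry-run sketch prints the optional Oracle XStream CDC Source commands based on two inputs. The function name, the "yes" flag convention, the sample service account ID, and the sample signaling topic name xstream-signals are all hypothetical.

```shell
# Dry run: prints only the optional ACL commands that apply.
# The function name, flag convention, and sample values are hypothetical.
xstream_optional_commands() {
  # $1 = service account ID
  # $2 = "yes" if heartbeats are enabled through heartbeat.interval.ms
  # $3 = value of signal.kafka.topic, or empty if Kafka signaling is not enabled
  acl="confluent kafka acl create --allow --service-account \"$1\""
  if [ "$2" = "yes" ]; then
    for op in read create write; do
      printf '%s\n' "$acl --operations $op --prefix --topic \"__orcl-heartbeat.lcc-\""
    done
  fi
  if [ -n "$3" ]; then
    printf '%s\n' \
      "$acl --operations read --topic \"$3\"" \
      "$acl --operations read --consumer-group \"kafka-signal\""
  fi
}

xstream_optional_commands "sa-123456" "yes" "xstream-signals"
```

Calling the function with "no" and an empty third argument prints nothing, which reflects that neither optional ACL set is required in that configuration.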

Azure Cosmos DB Source V2 Connector

Note

It is recommended to download the API key and secret for this connector instead of using a service account, as the source connector creates an internal topic, and using a service account will cause an error.

The Source connector service account section provides basic ACL entries for source connector service accounts. Azure Cosmos DB Source V2 connectors require additional ACL entries. Add the following ACL entries for Azure Cosmos DB Source V2 connectors:

ACLs to read, create, and write to table-related topics prefixed with <topic.prefix>. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations read --prefix --topic "<topic.prefix>"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations create --prefix --topic "<topic.prefix>"

confluent kafka acl create --allow --service-account "<service-account-id>" \
  --operations write --prefix --topic "<topic.prefix>"

ACLs to read, create, and write to metadata storage prefixed with cosmos.metadata.topic/<metadata-storage-name-prefix>. Use the following commands to set these ACLs:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations create --prefix --topic "cosmos.metadata.topic/<metadata-storage-name-prefix>"

confluent kafka acl create --allow --service-account "<service-account-id>" --operations read --prefix --topic "cosmos.metadata.topic/<metadata-storage-name-prefix>"

confluent kafka acl create --allow --service-account "<service-account-id>" --operations write --prefix --topic "cosmos.metadata.topic/<metadata-storage-name-prefix>"

Sink connector SUCCESS and ERROR topics

The Sink connector service account section provides basic ACL entries for sink connector service accounts. Several sink connectors create additional success-lcc and error-lcc topics when the connector is launched. The following sink connectors create these topics and require additional ACL entries:

Google Cloud Functions Sink [Deprecated] Connector for Confluent Cloud

Salesforce Platform Event Sink Connector for Confluent Cloud

Add the following ACL entries for these sink connectors:

confluent kafka acl create --allow --service-account "<service-account-id>" --operations create --prefix --topic "success-lcc"

confluent kafka acl create --allow --service-account "<service-account-id>" --operations write --prefix --topic "success-lcc"

confluent kafka acl create --allow --service-account "<service-account-id>" --operations create --prefix --topic "error-lcc"

confluent kafka acl create --allow --service-account "<service-account-id>" --operations write --prefix --topic "error-lcc"
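The four commands above follow a regular pattern: two topic prefixes, each needing CREATE and WRITE. As a minimal dry-run sketch, a nested loop can print them for a given service account. The function name and the sample service account ID are hypothetical.

```shell
# Dry run: prints the success-lcc and error-lcc ACL commands without running them.
# The function name and sample ID are hypothetical.
success_error_commands() {
  # $1 = service account ID
  for prefix in success-lcc error-lcc; do
    for op in create write; do
      printf '%s\n' "confluent kafka acl create --allow --service-account \"$1\" --operations $op --prefix --topic \"$prefix\""
    done
  done
}

success_error_commands "sa-123456"
```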

Sink connector offset management

The Sink connector service account section provides basic ACL entries for sink connector service accounts. Sink connectors require additional permissions to manage offsets. You must assign READ, DESCRIBE, and DELETE permissions on the consumer group for the sink connector.

Add the following role binding to configure sink connectors:

confluent iam rbac role-binding create --principal User:sa-lq5v76 --role ResourceOwner --resource Group:connect-lcc-xyz --kafka-cluster lkc-defg123 --environment env-dvr9z --cloud-cluster lkc-defg123

Exactly once semantics for source connectors

Note

Exactly once semantics (EOS) is an Early Access Program feature in Confluent Cloud.

An Early Access feature is a component of Confluent Cloud introduced to gain feedback. This feature should be used only for evaluation and non-production testing purposes or to provide feedback to Confluent, particularly as it becomes more widely available in follow-on preview editions.

Early Access Program features are intended for evaluation use in development and testing environments only, and not for production use. Early Access Program features are provided: (a) without support; (b) “AS IS”; and (c) without indemnification, warranty, or condition of any kind. No service level commitment will apply to Early Access Program features. Early Access Program features are considered to be a Proof of Concept as defined in the Confluent Cloud Terms of Service. Confluent may discontinue providing preview releases of the Early Access Program features at any time in Confluent’s sole discretion.

The Source connector service account section provides basic ACL entries for source connector service accounts to use exactly once semantics (EOS).

Source connectors enabling EOS automatically create resources with specific naming conventions:

Offset Topic: Used for storing connector offsets.

Default Name: connect-offsets-lcc_id

Custom Name: Can be user-provided.

Transaction Resources: Used for transactional guarantees.

Default Name: transaction-lcc_id-task_id

Custom Name: Based on the user-provided offset topic name, followed by -lcc_id-task_id.

Add the following ACL entries with supported source connectors to provide necessary permissions for EOS:

ACLs to DESCRIBE, CREATE, READ, WRITE offset topic prefixed with <topic.prefix>:

confluent kafka acl create --allow \
  --operations describe,create,read,write \
  --service-account <sa-id> \
  --topic <offset-topic> \
  --cluster <lkc-id>

ACLs to DESCRIBE, WRITE transaction resources:

confluent kafka acl create --allow \
  --operations describe,write \
  --service-account <sa-id> \
  --transactional-id <transaction-prefix> --prefix \
  --cluster <lkc-id>
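As an illustration of how the default names feed into these commands, the dry-run sketch below fills in the placeholders for a connector that uses the default offset topic. It rests on two assumptions that are not confirmed by the text: that lcc_id stands for the connector ID, and that transaction-<lcc_id>- is a suitable value for <transaction-prefix> because it covers the transactional IDs of every task. The function name and all sample IDs are hypothetical.

```shell
# Dry run: prints the two EOS ACL commands using the default naming convention.
# Assumes lcc_id is the connector ID; function name and sample IDs are hypothetical.
eos_acl_commands() {
  # $1 = service account ID, $2 = Kafka cluster ID, $3 = connector ID
  offset_topic="connect-offsets-$3"   # default offset topic name
  txn_prefix="transaction-$3-"        # assumed prefix for transaction-<lcc_id>-<task_id>
  printf '%s\n' \
    "confluent kafka acl create --allow --operations describe,create,read,write --service-account $1 --topic $offset_topic --cluster $2" \
    "confluent kafka acl create --allow --operations describe,write --service-account $1 --transactional-id $txn_prefix --prefix --cluster $2"
}

eos_acl_commands "sa-123456" "lkc-abcd123" "lcc-abc123"
```

If a custom offset topic name is provided, the same structure applies, but both values would be based on that custom name as described above.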