Azure Cosmos DB Sink Connector for Confluent Cloud

The fully-managed Azure Cosmos DB Sink connector for Confluent Cloud writes data to a Microsoft Azure Cosmos database. The connector polls data from Apache Kafka® and writes to database containers.

Confluent Cloud is available through Azure Marketplace or directly from Confluent.

Note

If you require private networking for fully-managed connectors, make sure to set up the proper networking beforehand. For more information, see Manage Networking for Confluent Cloud Connectors.

Features

The Azure Cosmos DB Sink connector supports the following features:

Topic mapping: Maps the Kafka Topic to the Azure Cosmos DB container.

Multiple key strategies:

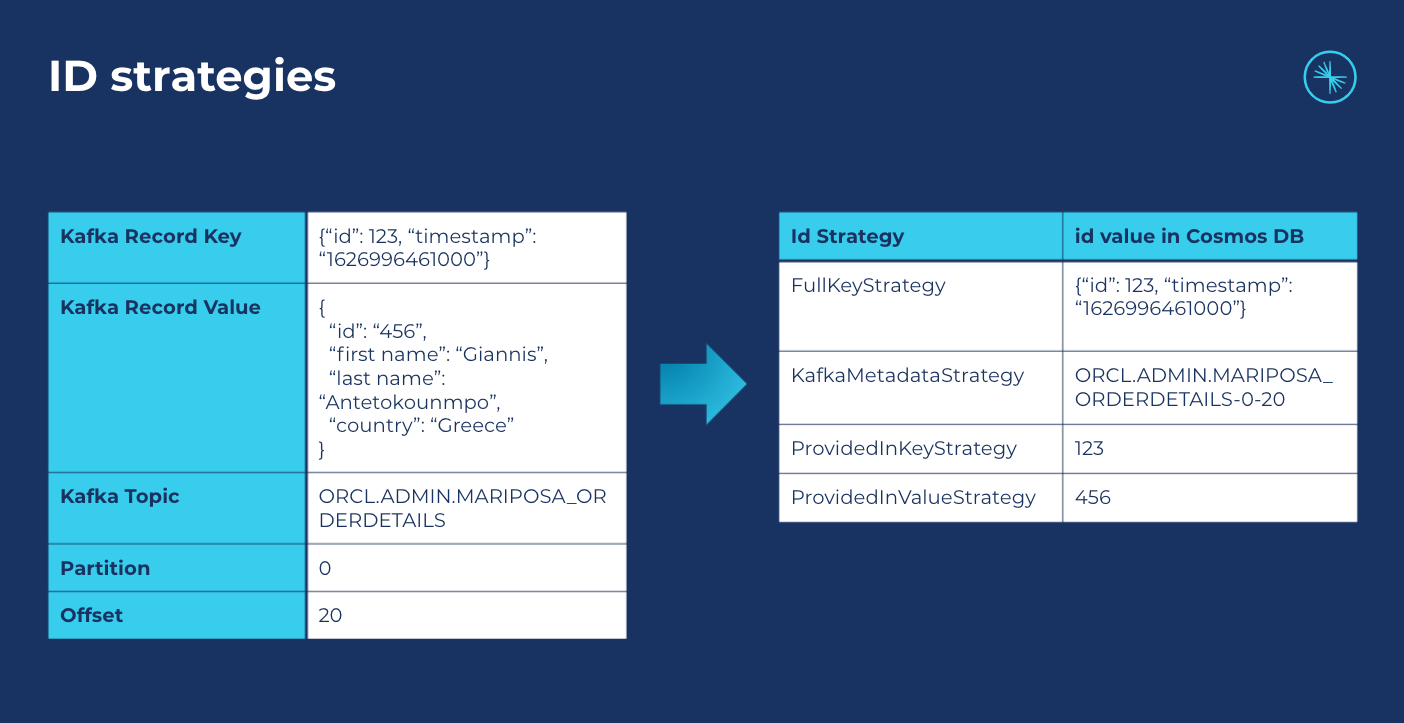

FullKeyStrategy: The ID generated is the Kafka record key. This is the default option.KafkaMetadataStrategy: The ID generated is a concatenation of the Kafka topic, partition, and offset. For example:${topic}-${partition}-${offset}.ProvidedInKeyStrategy: The ID generated is theidfield found in the key object.ProvidedInValueStrategy: The ID generated is theidfield found in the value object. Every record must have (lower case)idfield. This is an Azure Cosmos DB requirement. See the lower case id prerequisite.

The following shows an example of each strategy and the resulting id in Azure Cosmos.

ID strategies

For more information and examples to use with the Confluent Cloud API for Connect, see the Confluent Cloud API for Connect Usage Examples section.

Limitations

Be sure to review the following information.

For connector limitations, see Azure Cosmos DB Sink Connector limitations.

If you plan to use one or more Single Message Transforms (SMTs), see SMT Limitations.

If you plan to use Confluent Cloud Schema Registry, see Schema Registry Enabled Environments.

Quick Start

Use this quick start to get up and running with the Confluent Cloud Azure Cosmos DB Sink connector. The quick start provides the basics of selecting the connector and configuring it to stream Kafka events to an Azure Cosmos DB container.

- Prerequisites

Authorized access to a Confluent Cloud cluster on Azure.

The Confluent CLI installed and configured for the cluster. See Install the Confluent CLI.

Schema Registry must be enabled to use a Schema Registry-based format (for example, Avro, JSON_SR (JSON Schema), or Protobuf). See Schema Registry Enabled Environments for additional information.

At least one source Kafka topic must exist in your Confluent Cloud cluster before creating the sink connector.

The Azure Cosmos DB and the Kafka cluster must be in the same region.

The Azure Cosmos DB requires an

idfield in every record. See ID strategies for an example of how each of these works. The following strategies are provided to generate the ID:FullKeyStrategy: The ID generated is the Kafka record key. This is the default option.KafkaMetadataStrategy: The ID generated is a concatenation of the Kafka topic, partition, and offset. For example:${topic}-${partition}-${offset}.ProvidedInKeyStrategy: The ID generated is theidfield found in the key object.ProvidedInValueStrategy: The ID generated is theidfield found in the value object. If you select this ID strategy, you must create a new field namedid. You can also use the following ksqlDB statement. The example below uses a topic namedorders.CREATE STREAM ORDERS_STREAM WITH ( KAFKA_TOPIC = 'orders', VALUE_FORMAT = 'AVRO' ); CREATE STREAM ORDER_AUGMENTED AS SELECT ORDERID AS `id`, ORDERTIME, ITEMID, ORDERUNITS, ADDRESS FROM ORDERS_STREAM;

Note

The connector supports

Upsertbased onid.The connector does not support

Deletefor tombstone records.

Using the Confluent Cloud Console

Step 1: Launch your Confluent Cloud cluster

To create and launch a Kafka cluster in Confluent Cloud, see Create a kafka cluster in Confluent Cloud.

Step 2: Add a connector

In the left navigation menu, click Connectors. If you already have connectors in your cluster, click + Add connector.

Step 3: Select your connector

Click the Azure Cosmos DB sink connector card.

Step 4: Enter the connector details

Note

Ensure you have all your prerequisites completed.

An asterisk ( * ) designates a required entry.

At the Add Azure Cosmos DB Sink Connector screen, complete the following:

If you’ve already populated your Kafka topics, select the topics you want to connect from the Topics list.

To create a new topic, click +Add new topic.

Select the way you want to provide Kafka Cluster credentials. You can choose one of the following options:

My account: This setting allows your connector to globally access everything that you have access to. With a user account, the connector uses an API key and secret to access the Kafka cluster. This option is not recommended for production.

Service account: This setting limits the access for your connector by using a service account. This option is recommended for production.

Use an existing API key: This setting allows you to specify an API key and a secret pair. You can use an existing pair or create a new one. This method is not recommended for production environments.

Note

Freight clusters support only service accounts for Kafka authentication.

Click Continue.

Configure the authentication properties:

Cosmos Endpoint: Cosmos endpoint URL. For example,

https://connect-cosmosdb.documents.azure.com:443/.Cosmos Connection Key: The Cosmos connection master (primary) key.

Cosmos Database name: Cosmos target database’s name to write records into.

Click Continue.

Note

Configuration properties that are not shown in the Cloud Console use the default values. See Configuration Properties for all property values and definitions.

Input Kafka record value format: Select an input Kafka record value format (data coming from the Kafka topic). Valid entries are AVRO, PROTOBUF, JSON_SR (JSON Schema), or JSON (schemaless). A valid schema must be available in Schema Registry to use a schema-based message format (for example, Avro, JSON_SR (JSON Schema), or Protobuf). See Schema Registry Enabled Environments for additional information.

Topic-Container map: In the Topic-Container Map field, input a comma-delimited list of Kafka topics mapped to Cosmos DB containers–the mapping between Kafka topics and Azure Cosmos DB containers. For example,

topic#container1,topic2#container2.

Show advanced configurations

Schema context: Select a schema context to use for this connector, if using a schema-based data format. This property defaults to the Default context, which configures the connector to use the default schema set up for Schema Registry in your Confluent Cloud environment. A schema context allows you to use separate schemas (like schema sub-registries) tied to topics in different Kafka clusters that share the same Schema Registry environment. For example, if you select a non-default context, a Source connector uses only that schema context to register a schema and a Sink connector uses only that schema context to read from. For more information about setting up a schema context, see What are schema contexts and when should you use them?.

Id strategy: The IdStrategy class name to use for generating a unique document ID:

FullKeyStrategy: The ID generated is the Kafka record key.KafkaMetadataStrategy: The ID generated is a concatenation of the Kafka topic, partition, and offset. For example:${topic}-${partition}-${offset}.ProvidedInKeyStrategy: The ID generated is theidfield found in the key object.ProvidedInValueStrategy: The ID generated is theidfield found in the value object. Every record must have (lower case)idfield. This is an Azure Cosmos DB requirement. See Lower case id prerequisite.

Auto-restart policy

Enable Connector Auto-restart: Control the auto-restart behavior of the connector and its task in the event of user-actionable errors. Defaults to

true, enabling the connector to automatically restart in case of user-actionable errors. Set this property tofalseto disable auto-restart for failed connectors. In such cases, you would need to manually restart the connector.

Additional Configs

Value Converter Decimal Format: Specify the JSON/JSON_SR serialization format for Connect DECIMAL logical type values with two allowed literals: BASE64 to serialize DECIMAL logical types as base64 encoded binary data and NUMERIC to serialize Connect DECIMAL logical type values in JSON/JSON_SR as a number representing the decimal value.

Schema GUID For Key Converter: The schema GUID to use for deserialization when using

ConfigSchemaIdDeserializer. This is used to specify a fixed schema GUID to be used for deserializing message keys. Only applicable whenkey.converter.key.schema.id.deserializeris set toConfigSchemaIdDeserializer.Value Converter Schema ID Deserializer: The class name of the schema ID deserializer for values. This is used to deserialize schema IDs from the message headers.

Schema GUID For Value Converter: The schema GUID to use for deserialization when using

ConfigSchemaIdDeserializer. This is used to specify a fixed schema GUID to be used for deserializing message values. Only applicable whenvalue.converter.value.schema.id.deserializeris set toConfigSchemaIdDeserializer.Value Converter Reference Subject Name Strategy: Set the subject reference name strategy for value. Valid entries are DefaultReferenceSubjectNameStrategy or QualifiedReferenceSubjectNameStrategy. Note that the subject reference name strategy can be selected only for PROTOBUF format with the default strategy being DefaultReferenceSubjectNameStrategy.

Schema ID For Value Converter: The schema ID to use for deserialization when using

ConfigSchemaIdDeserializer. This is used to specify a fixed schema ID to be used for deserializing message values. Only applicable whenvalue.converter.value.schema.id.deserializeris set toConfigSchemaIdDeserializer.Value Converter Connect Meta Data: Allow the Connect converter to add its metadata to the output schema. Applicable for Avro Converters.

Value Converter Value Subject Name Strategy: Determines how to construct the subject name under which the value schema is registered with Schema Registry.

Key Converter Key Subject Name Strategy: How to construct the subject name for key schema registration.

Key Converter Schema ID Deserializer: The class name of the schema ID deserializer for keys. This is used to deserialize schema IDs from the message headers.

Schema ID For Key Converter: The schema ID to use for deserialization when using

ConfigSchemaIdDeserializer. This is used to specify a fixed schema ID to be used for deserializing message keys. Only applicable whenkey.converter.key.schema.id.deserializeris set toConfigSchemaIdDeserializer.

Consumer configuration

Max poll interval(ms): Set the maximum delay between subsequent consume requests to Kafka. Use this property to improve connector performance in cases when the connector cannot send records to the sink system. The default is 300,000 milliseconds (5 minutes).

Max poll records: Set the maximum number of records to consume from Kafka in a single request. Use this property to improve connector performance in cases when the connector cannot send records to the sink system. The default is 500 records.

Transforms

Single Message Transforms: To add a new SMT, see Add transforms. For more information about unsupported SMTs, see Unsupported transformations.

Processing position

Set offsets: Click Set offsets to define a specific offset for this connector to begin procession data from. For more information on managing offsets, see Manage offsets.

See Configuration Properties for all property values and definitions.

Click Continue.

Based on the number of topic partitions you select, you will be provided with a recommended number of tasks.

To change the number of recommended tasks, enter the number of tasks for the connector to use in the Tasks field. More tasks may improve performance.

Click Continue.

Verify the connection details.

Click Launch.

The status for the connector should go from Provisioning to Running.

Step 5: Check for records

Verify that records are being produced in your Azure Cosmos database.

For more information and examples to use with the Confluent Cloud API for Connect, see the Confluent Cloud API for Connect Usage Examples section.

Tip

When you launch a connector, a Dead Letter Queue topic is automatically created. See View Connector Dead Letter Queue Errors in Confluent Cloud for details.

Using the Confluent CLI

Complete the following steps to set up and run the connector using the Confluent CLI.

Note

Make sure you have all your prerequisites completed.

Step 1: List the available connectors

Enter the following command to list available connectors:

confluent connect plugin list

Step 2: List the connector configuration properties

Enter the following command to show the connector configuration properties:

confluent connect plugin describe <connector-plugin-name>

The command output shows the required and optional configuration properties.

Step 3: Create the connector configuration file

Create a JSON file that contains the connector configuration properties. The following example shows the required connector properties.

{

"name": "CosmosDbSinkConnector_0",

"config": {

"connector.class": "CosmosDbSink",

"name": "CosmosDbSinkConnector_0",

"input.data.format": "AVRO",

"kafka.auth.mode": "KAFKA_API_KEY",

"kafka.api.key": "****************",

"kafka.api.secret": "**********************************************",

"topics": "pageviews",

"connect.cosmos.connection.endpoint": "https://myaccount.documents.azure.com:443/",

"connect.cosmos.master.key": "****************************************",

"connect.cosmos.databasename": "myDBname",

"connect.cosmos.containers.topicmap": "pageviews#Container2",

"cosmos.id.strategy": "FullKeyStrategy",

"tasks.max": "1"

}

}

Note the following property definitions:

"connector.class": Identifies the connector plugin name."input.data.format": Sets the input Kafka record value format (data coming from the Kafka topic). Valid entries are AVRO, JSON_SR, PROTOBUF, or JSON. You must have Confluent Cloud Schema Registry configured if using a schema-based message format (for example, Avro, JSON_SR (JSON Schema), or Protobuf)."name": Sets a name for your new connector.

"kafka.auth.mode": Identifies the connector authentication mode you want to use. There are two options:SERVICE_ACCOUNTorKAFKA_API_KEY(the default). To use an API key and secret, specify the configuration propertieskafka.api.keyandkafka.api.secret, as shown in the example configuration (above). To use a service account, specify the Resource ID in the propertykafka.service.account.id=<service-account-resource-ID>. To list the available service account resource IDs, use the following command:confluent iam service-account list

For example:

confluent iam service-account list Id | Resource ID | Name | Description +---------+-------------+-------------------+------------------- 123456 | sa-l1r23m | sa-1 | Service account 1 789101 | sa-l4d56p | sa-2 | Service account 2

"connect.cosmos.connection.endpoint": A URI with the formhttps://ccloud-cosmos-db-1.documents.azure.com:443/."connect.cosmos.master.key": The Azure Cosmos master key."connect.cosmos.databasename": The name of your Cosmos DB."connect.cosmos.containers.topicmap": A comma-delimited list of Kafka topics mapped to Cosmos DB containers. Note that this property only supports 1:1 mapping between topic and container name. For example:topic#container1,topic2#container2.(Optional)

"cosmos.id.strategy": Defaults toFullKeyStrategy. Enter one of the following strategies:FullKeyStrategy: The ID generated is the Kafka record key.KafkaMetadataStrategy: The ID generated is a concatenation of the Kafka topic, partition, and offset. For example:${topic}-${partition}-${offset}.ProvidedInKeyStrategy: The ID generated is theidfield found in the key object. Every record must have (lower case)idfield. This is an Azure Cosmos DB requirement. See Lower case id prerequisite.ProvidedInValueStrategy: The ID generated is theidfield found in the value object. Every record must have (lower case)idfield. This is an Azure Cosmos DB requirement. See Lower case id prerequisite.

See ID strategies for an example of how each of these works.

"tasks": The number of tasks to use with the connector. More tasks may improve performance.

Single Message Transforms: See the Single Message Transforms (SMT) documentation for details about adding SMTs using the CLI.

See Configuration Properties for all property values and descriptions.

Step 4: Load the properties file and create the connector

Enter the following command to load the configuration and start the connector:

confluent connect cluster create --config-file <file-name>.json

For example:

confluent connect cluster create --config-file azure-cosmos-sink-config.json

Example output:

Created connector CosmosDbSinkConnector_0 lcc-do6vzd

Step 4: Check the connector status.

Enter the following command to check the connector status:

confluent connect cluster list

Example output:

ID | Name | Status | Type | Trace

+------------+-------------------------------+---------+------+-------+

lcc-do6vzd | CosmosDbSinkConnector_0 | RUNNING | sink | |

Step 5: Check for records

..Verify that records are populating the endpoint.

For more information and examples to use with the Confluent Cloud API for Connect, see the Confluent Cloud API for Connect Usage Examples section.

Tip

When you launch a connector, a Dead Letter Queue topic is automatically created. See View Connector Dead Letter Queue Errors in Confluent Cloud for details.

Configuration Properties

Use the following configuration properties with the fully-managed connector. For self-managed connector property definitions and other details, see the connector docs in Self-managed connectors for Confluent Platform.

How should we connect to your data?

nameSets a name for your connector.

Type: string

Valid Values: A string at most 64 characters long

Importance: high

Schema Config

schema.context.nameAdd a schema context name. A schema context represents an independent scope in Schema Registry. It is a separate sub-schema tied to topics in different Kafka clusters that share the same Schema Registry instance. If not used, the connector uses the default schema configured for Schema Registry in your Confluent Cloud environment.

Type: string

Default: default

Importance: medium

Input messages

input.data.formatSets the input Kafka record value format. Valid entries are AVRO, JSON_SR, PROTOBUF, or JSON. Note that you need to have Confluent Cloud Schema Registry configured if using a schema-based message format like AVRO, JSON_SR, and PROTOBUF.

Type: string

Importance: high

Kafka Cluster credentials

kafka.auth.modeKafka Authentication mode. It can be one of KAFKA_API_KEY or SERVICE_ACCOUNT. It defaults to KAFKA_API_KEY mode, whenever possible.

Type: string

Valid Values: SERVICE_ACCOUNT, KAFKA_API_KEY

Importance: high

kafka.api.keyKafka API Key. Required when kafka.auth.mode==KAFKA_API_KEY.

Type: password

Importance: high

kafka.service.account.idThe Service Account that will be used to generate the API keys to communicate with Kafka Cluster.

Type: string

Importance: high

kafka.api.secretSecret associated with Kafka API key. Required when kafka.auth.mode==KAFKA_API_KEY.

Type: password

Importance: high

Which topics do you want to get data from?

topicsIdentifies the topic name or a comma-separated list of topic names.

Type: list

Importance: high

How should we connect to your Azure Cosmos DB?

connect.cosmos.connection.endpointCosmos endpoint URL. For example: https://connect-cosmosdb.documents.azure.com:443/.

Type: string

Importance: high

connect.cosmos.master.keyCosmos connection master (primary) key.

Type: password

Importance: high

connect.cosmos.databasenameCosmos target database to write records into.

Type: string

Importance: high

connect.cosmos.containers.topicmapA comma delimited list of Kafka topics mapped to Cosmos containers. For example: topic1#con1,topic2#con2.

Type: string

Importance: high

Database details

cosmos.id.strategyThe IdStrategy class name to use for generating a unique document id (id).

FullKeyStrategyuses the full record key as ID.KafkaMetadataStrategyuses a concatenation of the kafka topic, partition, and offset as ID, with dashes as separator. i.e.${topic}-${partition}-${offset}.ProvidedInKeyStrategyandProvidedInValueStrategyuse theidfield found in the key and value objects respectively as ID.Type: string

Default: FullKeyStrategy

Valid Values: FullKeyStrategy, KafkaMetadataStrategy, ProvidedInKeyStrategy, ProvidedInValueStrategy

Importance: low

Consumer configuration

max.poll.interval.msThe maximum delay between subsequent consume requests to Kafka. This configuration property may be used to improve the performance of the connector, if the connector cannot send records to the sink system. Defaults to 300000 milliseconds (5 minutes).

Type: long

Default: 300000 (5 minutes)

Valid Values: [60000,…,1800000] for non-dedicated clusters and [60000,…] for dedicated clusters

Importance: low

max.poll.recordsThe maximum number of records to consume from Kafka in a single request. This configuration property may be used to improve the performance of the connector, if the connector cannot send records to the sink system. Defaults to 500 records.

Type: long

Default: 500

Valid Values: [1,…,500] for non-dedicated clusters and [1,…] for dedicated clusters

Importance: low

Number of tasks for this connector

tasks.maxMaximum number of tasks for the connector.

Type: int

Valid Values: [1,…]

Importance: high

Auto-restart policy

auto.restart.on.user.errorEnable connector to automatically restart on user-actionable errors.

Type: boolean

Default: true

Importance: medium

Additional Configs

key.converter.use.schema.guidThe schema GUID to use for deserialization when using ConfigSchemaIdDeserializer. This allows you to specify a fixed schema GUID to be used for deserializing message keys. Only applicable when key.converter.key.schema.id.deserializer is set to ConfigSchemaIdDeserializer.

Type: string

Importance: low

key.converter.use.schema.idThe schema ID to use for deserialization when using ConfigSchemaIdDeserializer. This allows you to specify a fixed schema ID to be used for deserializing message keys. Only applicable when key.converter.key.schema.id.deserializer is set to ConfigSchemaIdDeserializer.

Type: int

Importance: low

value.converter.connect.meta.dataAllow the Connect converter to add its metadata to the output schema. Applicable for Avro Converters.

Type: boolean

Importance: low

value.converter.use.schema.guidThe schema GUID to use for deserialization when using ConfigSchemaIdDeserializer. This allows you to specify a fixed schema GUID to be used for deserializing message values. Only applicable when value.converter.value.schema.id.deserializer is set to ConfigSchemaIdDeserializer.

Type: string

Importance: low

value.converter.use.schema.idThe schema ID to use for deserialization when using ConfigSchemaIdDeserializer. This allows you to specify a fixed schema ID to be used for deserializing message values. Only applicable when value.converter.value.schema.id.deserializer is set to ConfigSchemaIdDeserializer.

Type: int

Importance: low

key.converter.key.schema.id.deserializerThe class name of the schema ID deserializer for keys. This is used to deserialize schema IDs from the message headers.

Type: string

Default: io.confluent.kafka.serializers.schema.id.DualSchemaIdDeserializer

Importance: low

key.converter.key.subject.name.strategyHow to construct the subject name for key schema registration.

Type: string

Default: TopicNameStrategy

Importance: low

value.converter.decimal.formatSpecify the JSON/JSON_SR serialization format for Connect DECIMAL logical type values with two allowed literals:

BASE64 to serialize DECIMAL logical types as base64 encoded binary data and

NUMERIC to serialize Connect DECIMAL logical type values in JSON/JSON_SR as a number representing the decimal value.

Type: string

Default: BASE64

Importance: low

value.converter.reference.subject.name.strategySet the subject reference name strategy for value. Valid entries are DefaultReferenceSubjectNameStrategy or QualifiedReferenceSubjectNameStrategy. Note that the subject reference name strategy can be selected only for PROTOBUF format with the default strategy being DefaultReferenceSubjectNameStrategy.

Type: string

Default: DefaultReferenceSubjectNameStrategy

Importance: low

value.converter.value.schema.id.deserializerThe class name of the schema ID deserializer for values. This is used to deserialize schema IDs from the message headers.

Type: string

Default: io.confluent.kafka.serializers.schema.id.DualSchemaIdDeserializer

Importance: low

value.converter.value.subject.name.strategyDetermines how to construct the subject name under which the value schema is registered with Schema Registry.

Type: string

Default: TopicNameStrategy

Importance: low

Next Steps

For an example that shows fully-managed Confluent Cloud connectors in action with Confluent Cloud for Apache Flink, see the Cloud ETL Demo. This example also shows how to use Confluent CLI to manage your resources in Confluent Cloud.